Francisco J. Rodríguez-Lera

VAULT: A Mobile Mapping System for ROS 2-based Autonomous Robots

Jun 11, 2025

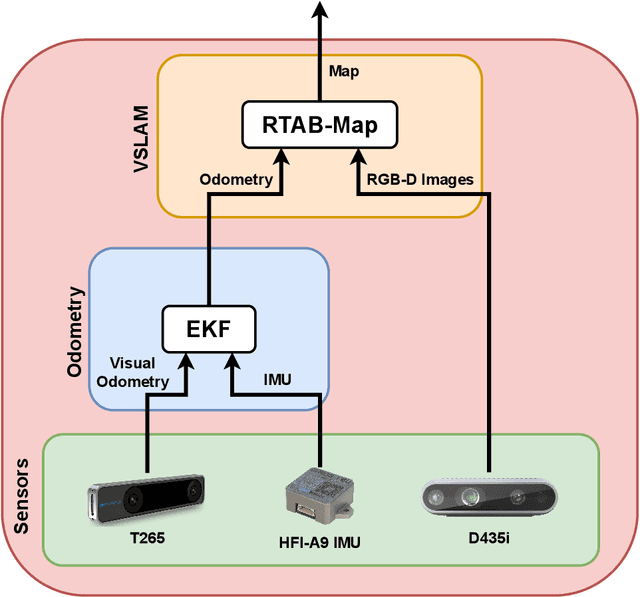

Abstract: Localization plays a crucial role in the navigation capabilities of autonomous robots. While indoor environments can rely on wheel odometry and 2D LiDAR-based mapping, outdoor settings such as agriculture and forestry present unique challenges that necessitate real-time localization and consistent mapping. Addressing this need, this paper introduces the VAULT prototype, a ROS 2-based mobile mapping system (MMS) that combines various sensors to enable robust outdoor and indoor localization. The proposed solution harnesses the power of Global Navigation Satellite System (GNSS) data, visual-inertial odometry (VIO), inertial measurement unit (IMU) data, and the Extended Kalman Filter (EKF) to generate reliable 3D odometry. To further enhance the localization accuracy, Visual SLAM (VSLAM) is employed, resulting in the creation of a comprehensive 3D point cloud map. By leveraging these sensor technologies and advanced algorithms, the prototype offers a comprehensive solution for outdoor localization in autonomous mobile robots, enabling them to navigate and map their surroundings with confidence and precision.
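The fusion idea in this abstract (relative odometry corrected by absolute GNSS fixes through a Kalman filter) can be illustrated with a deliberately simplified sketch. This is not the VAULT implementation: it is a one-dimensional linear Kalman filter rather than a 3D EKF, and the names `Kalman1D` and `fuse`, as well as the noise variances `q` and `r`, are hypothetical values chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Hypothetical 1D filter: state is a position along a line, in metres."""
    x: float = 0.0  # position estimate
    p: float = 1.0  # variance of the estimate

    def predict(self, delta: float, q: float) -> None:
        # Dead-reckoning step: apply an odometry increment.
        # Uncertainty grows by the odometry noise variance q.
        self.x += delta
        self.p += q

    def update(self, z: float, r: float) -> None:
        # Correction step: blend in an absolute fix z with noise variance r.
        k = self.p / (self.p + r)  # Kalman gain, between 0 and 1
        self.x += k * (z - self.x)
        self.p *= (1.0 - k)


def fuse(odometry, gnss_fixes, q=0.05, r=4.0):
    """Run the filter over odometry increments; gnss_fixes maps step index -> fix."""
    kf = Kalman1D()
    track = []
    for step, delta in enumerate(odometry):
        kf.predict(delta, q)
        if step in gnss_fixes:
            kf.update(gnss_fixes[step], r)
        track.append((kf.x, kf.p))
    return track


if __name__ == "__main__":
    # Three 1 m odometry steps, with one GNSS fix arriving at the last step.
    for x, p in fuse([1.0, 1.0, 1.0], {2: 3.5}):
        print(f"x={x:.3f} m, variance={p:.3f}")
```

In this sketch the variance grows while only odometry is available and shrinks when a GNSS fix arrives, which is the behaviour that motivates combining relative and absolute sensors.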

Integrating Quantized LLMs into Robotics Systems as Edge AI to Leverage their Natural Language Processing Capabilities

Jun 11, 2025

Abstract: Large Language Models (LLMs) have experienced great advancements in the last year resulting in an increase of these models in several fields to face natural language tasks. The integration of these models in robotics can also help to improve several aspects such as human-robot interaction, navigation, planning and decision-making. Therefore, this paper introduces llama\_ros, a tool designed to integrate quantized Large Language Models (LLMs) into robotic systems using ROS 2. Leveraging llama.cpp, a highly optimized runtime engine, llama\_ros enables the efficient execution of quantized LLMs as edge artificial intelligence (AI) in robotics systems with resource-constrained environments, addressing the challenges of computational efficiency and memory limitations. By deploying quantized LLMs, llama\_ros empowers robots to leverage the natural language understanding and generation for enhanced decision-making and interaction which can be paired with prompt engineering, knowledge graphs, ontologies or other tools to improve the capabilities of autonomous robots. Additionally, this paper provides insights into some use cases of using llama\_ros for planning and explainability in robotics.

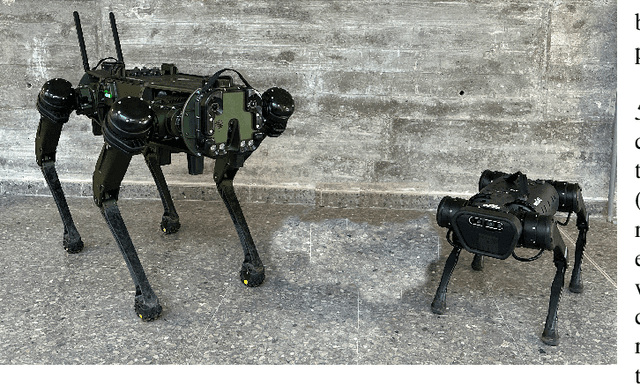

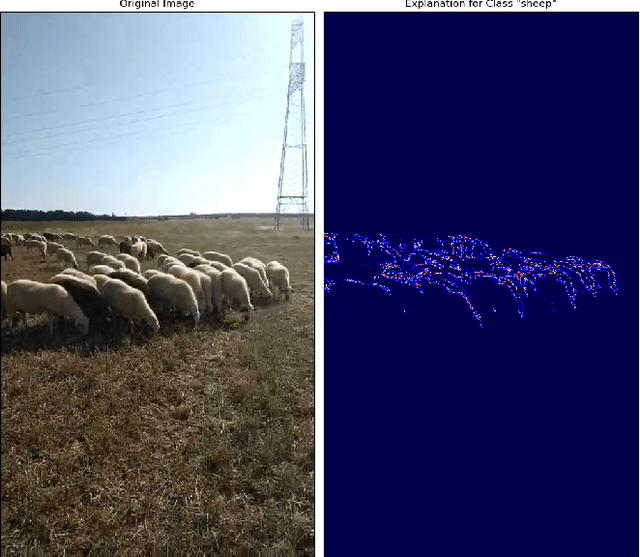

Lessons Learned in Quadruped Deployment in Livestock Farming

Apr 24, 2024

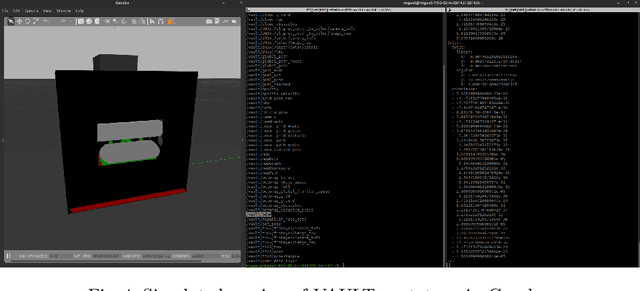

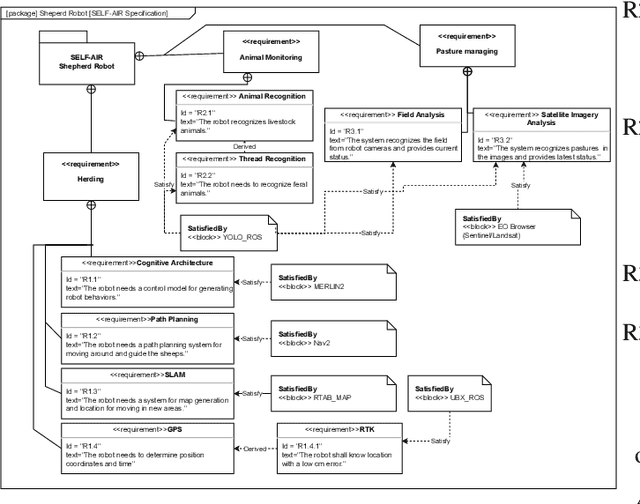

Abstract: The livestock industry faces several challenges, including labor-intensive management, the threat of predators and environmental sustainability concerns. Therefore, this paper explores the integration of quadruped robots in extensive livestock farming as a novel application of field robotics. The SELF-AIR project, an acronym for Supporting Extensive Livestock Farming with the use of Autonomous Intelligent Robots, exemplifies this innovative approach. Through advanced sensors, artificial intelligence, and autonomous navigation systems, these robots exhibit remarkable capabilities in navigating diverse terrains, monitoring large herds, and aiding in various farming tasks. This work provides insight into the SELF-AIR project, presenting the lessons learned.

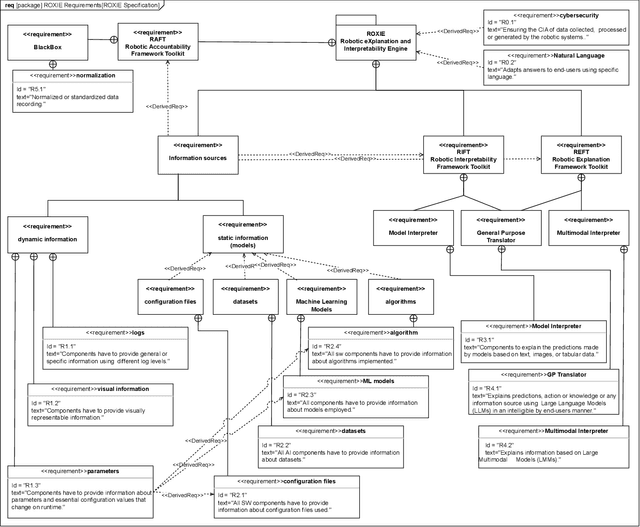

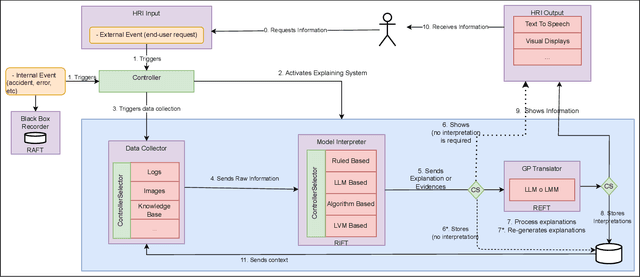

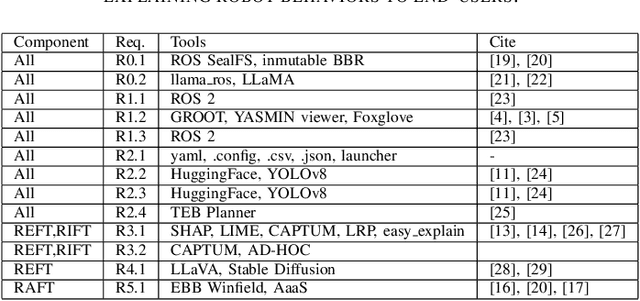

ROXIE: Defining a Robotic eXplanation and Interpretability Engine

Mar 25, 2024

Abstract: In an era where autonomous robots increasingly inhabit public spaces, the imperative for transparency and interpretability in their decision-making processes becomes paramount. This paper presents the overview of a Robotic eXplanation and Interpretability Engine (ROXIE), which addresses this critical need, aiming to demystify the opaque nature of complex robotic behaviors. This paper elucidates the key features and requirements needed for providing information and explanations about robot decision-making processes. It also overviews the suite of software components and libraries available for deployment with ROS 2, empowering users to provide comprehensive explanations and interpretations of robot processes and behaviors, thereby fostering trust and collaboration in human-robot interactions.

Explaining Autonomy: Enhancing Human-Robot Interaction through Explanation Generation with Large Language Models

Feb 06, 2024

Abstract: This paper introduces a system designed to generate explanations for the actions performed by an autonomous robot in Human-Robot Interaction (HRI). Explainability in robotics, encapsulated within the concept of an eXplainable Autonomous Robot (XAR), is a growing research area. The work described in this paper aims to take advantage of the capabilities of Large Language Models (LLMs) in performing natural language processing tasks. This study focuses on the possibility of generating explanations using such models in combination with a Retrieval Augmented Generation (RAG) method to interpret data gathered from the logs of autonomous systems. In addition, this work also presents a formalization of the proposed explanation system. It has been evaluated through a navigation test from the European Robotics League (ERL), a Europe-wide social robotics competition. Regarding the obtained results, a validation questionnaire has been conducted to measure the quality of the explanations from the perspective of technical users. The results obtained during the experiment highlight the potential utility of LLMs in achieving explanatory capabilities in robots.

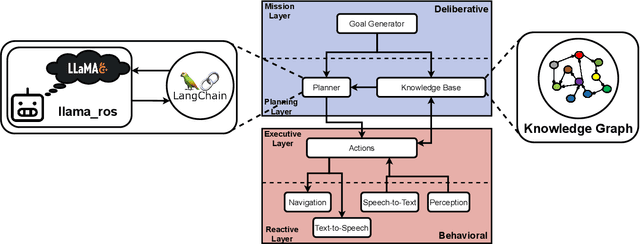

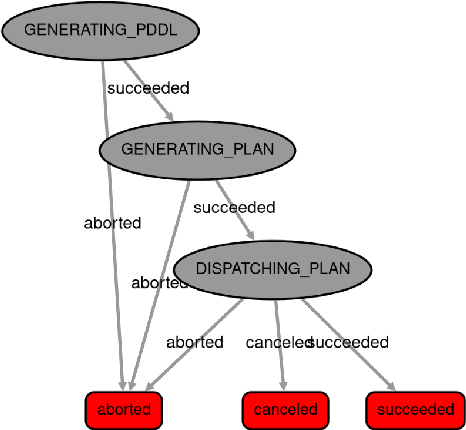

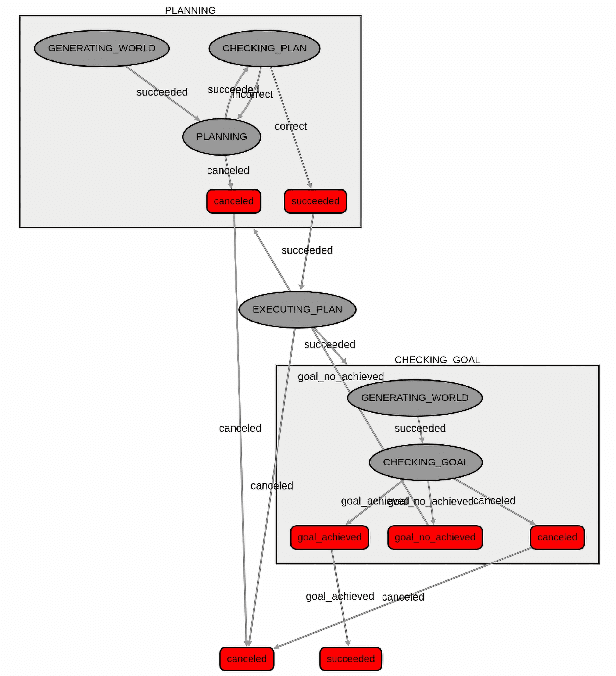

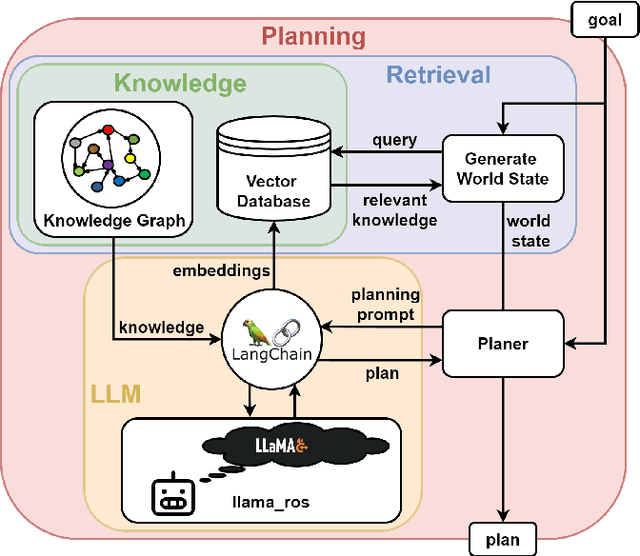

Integration of Large Language Models within Cognitive Architectures for Autonomous Robots

Sep 26, 2023

Abstract: The usage of Large Language Models (LLMs) has increased recently, not only due to the significant improvements in their accuracy but also because of the use of quantization, which allows running these models without intense hardware requirements. As a result, LLMs have proliferated, leading to a great variety of models with different capabilities. This paper therefore proposes the integration of LLMs in cognitive architectures for autonomous robots. Specifically, we present the design, development and deployment of the llama\_ros tool that allows the easy use and integration of LLMs in ROS 2-based environments, afterward integrated with the state-of-the-art cognitive architecture MERLIN2 for updating a PDDL-based planner system. This proposal is evaluated quantitatively and qualitatively, measuring the impact of incorporating the LLMs in the cognitive architecture.

SAILOR: Perceptual Anchoring For Robotic Cognitive Architectures

Mar 14, 2023

Abstract: Symbolic anchoring is a crucial problem in the field of robotics, as it enables robots to obtain symbolic knowledge from the perceptual information acquired through their sensors. In cognitive-based robots, this process of processing sub-symbolic data from real-world sensors to obtain symbolic knowledge is still an open problem. To address this issue, this paper presents SAILOR, a framework for providing symbolic anchoring in the ROS 2 ecosystem. SAILOR aims to maintain the link between symbolic data and perceptual data in real robots over time. It provides a semantic world modeling approach using two deep learning-based sub-symbolic robotic skills: object recognition and a matching function. The object recognition skill allows the robot to recognize and identify objects in its environment, while the matching function enables the robot to decide if new perceptual data corresponds to existing symbolic data. This paper provides a description of the framework, the pipeline and development as well as its integration in MERLIN2, a hybrid cognitive architecture fully functional in robots running ROS 2.
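The role of a matching function in anchoring can be sketched as follows. This is not SAILOR's method: the abstract describes a deep learning-based matching skill, whereas this stand-in uses plain cosine similarity over hand-written feature vectors. The function `anchor_percept`, the `object_N` naming scheme, and the `threshold` value are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def anchor_percept(anchors, percept, threshold=0.9):
    """Link a percept to an existing symbol, or create a new symbol.

    anchors maps a symbol name to the latest feature vector seen for it.
    Returns the symbol the percept was attached to.
    """
    best_symbol, best_score = None, threshold
    for symbol, features in anchors.items():
        score = cosine_similarity(features, percept)
        if score >= best_score:
            best_symbol, best_score = symbol, score
    if best_symbol is None:
        # No existing anchor is similar enough: introduce a new symbol.
        best_symbol = f"object_{len(anchors)}"
    # Store the newest perceptual data so the anchor follows the object over time.
    anchors[best_symbol] = list(percept)
    return best_symbol


if __name__ == "__main__":
    anchors = {}
    print(anchor_percept(anchors, [1.0, 0.0]))    # first sighting creates a symbol
    print(anchor_percept(anchors, [0.99, 0.05]))  # similar percept reuses that symbol
    print(anchor_percept(anchors, [0.0, 1.0]))    # dissimilar percept creates another
```

Updating the stored features on every match is one simple way to keep the symbol-percept link current; a real system would also need to handle occlusion, ambiguity between several close matches, and anchor removal.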

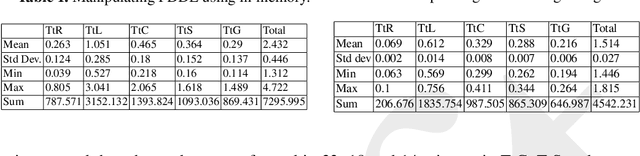

KANT: A tool for Grounding and Knowledge Management

Apr 18, 2022

Abstract: The intelligent robotics community usually organizes knowledge into symbolic and sub-symbolic levels. These two levels establish the set of symbols and rules for manipulating knowledge based on their (symbol system - dictionary). Thus, the correspondences -- Grounding or knowledge representation -- require specific software techniques for anchoring continuous and discrete state variables between these two levels. This paper presents the design and evaluation of an Open Source tool called KANT (Knowledge mAnagemeNT) to let different components of the system architecture controlling the robot query, save, edit, and delete the data from the Knowledge Base without having to worry about the type and the implementation of the source data. Using KANT, components managing sub-symbolic information can smoothly interact with symbolic components. Besides, implementation mechanisms used in KANT, such as the use of in-memory and non-SQL databases, improve the performance of the knowledge management systems in ROS middleware, as shown by the evaluations presented in this work.

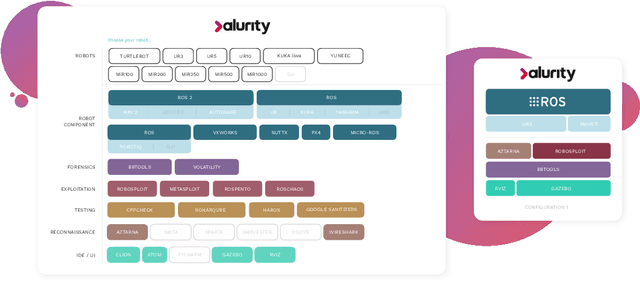

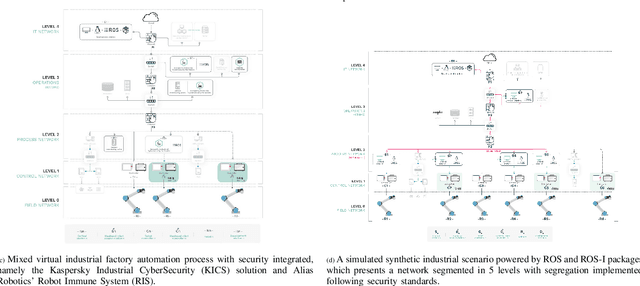

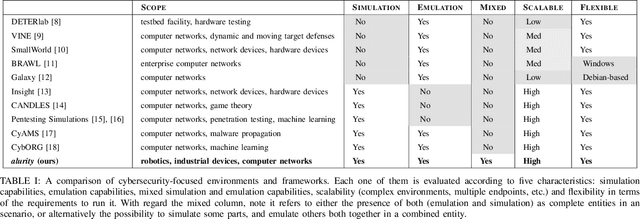

alurity, a toolbox for robot cybersecurity

Oct 16, 2020

Abstract: The reuse of technologies and inherent complexity of most robotic systems is increasingly leading to robots with wide attack surfaces and a variety of potential vulnerabilities. Given their growing presence in public environments, security research is increasingly becoming more important than in any other area, especially due to the safety implications that robot vulnerabilities could have for humans. We argue that security triage in robotics is still immature and that new tools must be developed to accelerate the testing-triage-exploitation cycle, necessary for prioritizing and accelerating the mitigation of flaws. The present work tackles the current lack of offensive cybersecurity research in robotics by presenting a toolbox and the results obtained with it through several use cases conducted over a one-year period. We propose a modular and composable toolbox for robot cybersecurity: alurity. By ensuring that both roboticists and security researchers working on a project have a common, consistent and easily reproducible development environment, alurity aims to facilitate cybersecurity research and collaboration across teams.
