Laura Fernández-Becerra

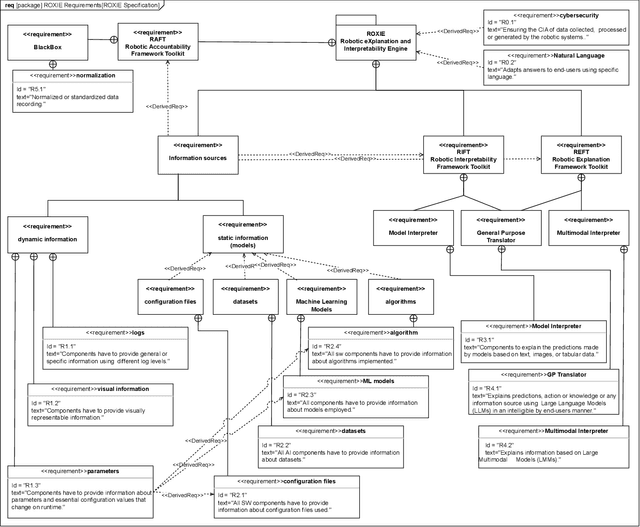

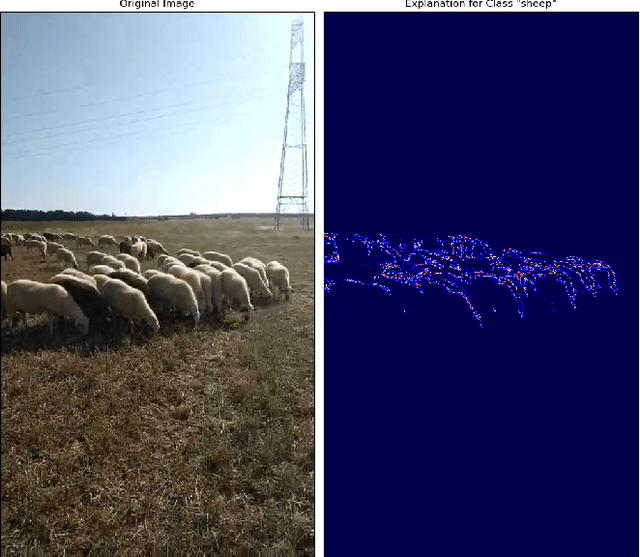

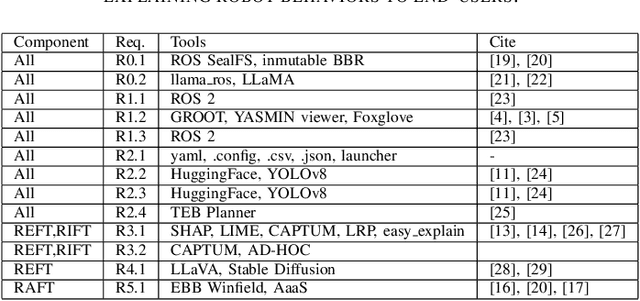

ROXIE: Defining a Robotic eXplanation and Interpretability Engine

Mar 25, 2024

Abstract: In an era where autonomous robots increasingly inhabit public spaces, the need for transparency and interpretability in their decision-making processes becomes paramount. This paper presents an overview of the Robotic eXplanation and Interpretability Engine (ROXIE), which addresses this need by aiming to demystify the opaque nature of complex robotic behaviors. The paper describes the key features and requirements for providing information and explanations about robot decision-making processes. It also outlines the suite of software components and libraries available for deployment with ROS 2, which enable users to provide comprehensive explanations and interpretations of robot processes and behaviors, thereby fostering trust and collaboration in human-robot interactions.

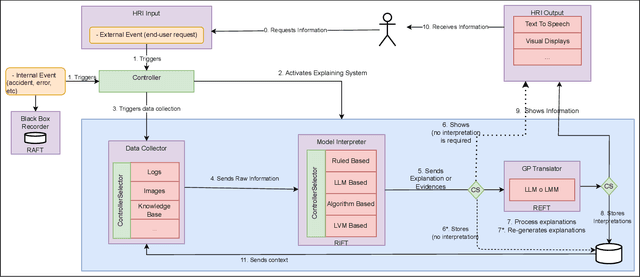

Enhancing Trust in Autonomous Agents: An Architecture for Accountability and Explainability through Blockchain and Large Language Models

Mar 14, 2024

Abstract: The deployment of autonomous agents in environments involving human interaction has increasingly raised security concerns. Consequently, understanding the circumstances behind an event becomes critical, which requires agents to be able to justify their behavior to non-expert users. Such explanations are essential for enhancing trustworthiness and safety, acting as a preventive measure against failures, errors, and misunderstandings. They also improve communication, bridging the gap between the agent and the user and thereby making their interactions more effective. This work presents an accountability and explainability architecture implemented for ROS-based mobile robots. The proposed solution consists of two main components. First, a black-box-like component that provides accountability, with anti-tampering properties achieved through blockchain technology. Second, a component that generates natural language explanations by applying Large Language Models (LLMs) to the data stored in that black box. The study evaluates the performance of our solution in three different scenarios, each involving autonomous agent navigation functionalities. This evaluation includes a thorough examination of accountability and explainability metrics, demonstrating the effectiveness of our approach in using accountable data from robot actions to obtain coherent, accurate and understandable explanations, even when facing challenges inherent in the use of autonomous agents in real-world scenarios.
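The abstract does not describe how the blockchain-backed black box is built internally. As a rough illustration of where its anti-tampering property comes from, here is a minimal Python sketch of a hash-chained, append-only log, assuming each logged robot event is a JSON-serializable dict. The class `BlackBoxLog`, the `GENESIS_HASH` constant and the event fields are hypothetical names chosen for this example; this is not the authors' implementation.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class BlackBoxLog:
    """Hypothetical append-only, hash-chained event log.

    Simplified stand-in for a blockchain ledger: each entry stores the hash
    of the previous entry, so editing any past record breaks the chain.
    """

    entries: list = field(default_factory=list)

    @staticmethod
    def _digest(prev_hash: str, record: dict) -> str:
        # Canonical JSON (sorted keys) so equal records always hash the same.
        payload = prev_hash + json.dumps(record, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> str:
        # Link the new entry to the hash of the last one (or the genesis hash).
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        entry_hash = self._digest(prev_hash, record)
        self.entries.append({"record": record, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        # Recompute every hash from the start; any mismatch means tampering.
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if self._digest(prev_hash, entry["record"]) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = BlackBoxLog()
    # Hypothetical navigation events, for illustration only.
    log.append({"t": 0.0, "event": "goal_received", "goal": [1.0, 2.0]})
    log.append({"t": 1.5, "event": "obstacle_detected"})
    print(log.verify())  # True: chain intact
    log.entries[0]["record"]["event"] = "goal_cancelled"
    print(log.verify())  # False: first entry no longer matches its hash
```

Editing any stored record breaks the chain at that entry, so `verify()` returns `False`. A single local hash chain only makes tampering detectable; it does not stop someone who can rewrite every entry and recompute all the hashes, which is the gap that replicating the ledger across blockchain nodes is meant to close.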

Using Large Language Models for Interpreting Autonomous Robots Behaviors

Apr 28, 2023

Abstract: The deployment of autonomous robots in various domains has raised significant concerns about their trustworthiness and accountability. This study explores the potential of Large Language Models (LLMs) for analyzing ROS 2 logs generated by autonomous robots and proposes a log-analysis framework that categorizes log files into different aspects. The study evaluates the performance of three different language models in answering questions related to StartUp, Warning, and PDDL logs. The results suggest that GPT-4, a transformer-based model, outperforms the other models; however, the models' verbosity is not sufficient to answer why or how questions for all kinds of actors involved in the interaction.