Bernhard Dieber

Lost in Translation? Converting RegExes for Log Parsing into Dynatrace Pattern Language

Jun 24, 2025

Abstract:Log files provide valuable information for detecting and diagnosing problems in enterprise software applications and data centers. Several log analytics tools and platforms were developed to help filter and extract information from logs, typically using regular expressions (RegExes). Recent commercial log analytics platforms provide domain-specific languages specifically designed for log parsing, such as Grok or the Dynatrace Pattern Language (DPL). However, users who want to migrate to these platforms must manually convert their RegExes into the new pattern language, which is costly and error-prone. In this work, we present Reptile, which combines a rule-based approach for converting RegExes into DPL patterns with a best-effort approach for cases where a full conversion is impossible. Furthermore, it integrates GPT-4 to optimize the obtained DPL patterns. The evaluation with 946 RegExes collected from a large company shows that Reptile safely converted 73.7% of them. The evaluation of Reptile's pattern optimization with 23 real-world RegExes showed an F1-score and MCC above 0.91. These results are promising and have ample practical implications for companies that migrate to a modern log analytics platform, such as Dynatrace.

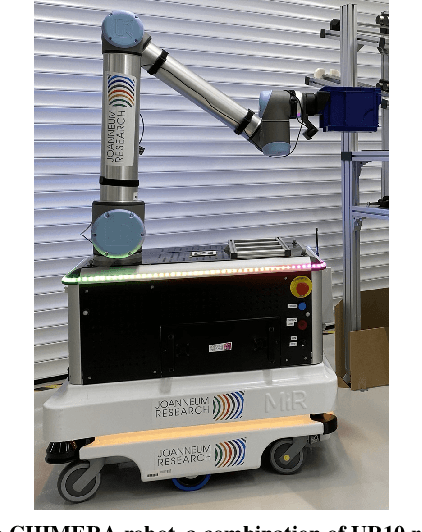

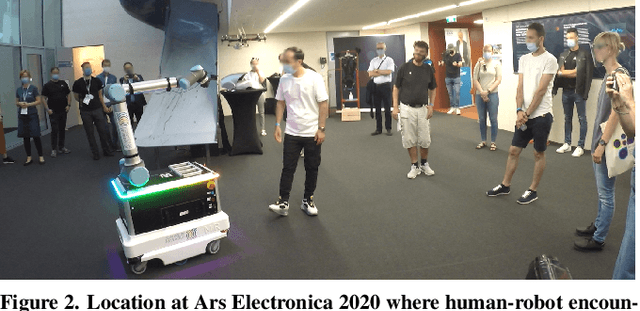

A proxemics game between festival visitors and an industrial robot

May 28, 2021

Abstract:With increased applications of collaborative robots (cobots) in industrial workplaces, behavioural effects of human-cobot interactions need to be further investigated. This is of particular importance as nonverbal behaviours of collaboration partners in human-robot teams significantly influence the experience of the human interaction partners and the success of the collaborative task. During the Ars Electronica 2020 Festival for Art, Technology and Society (Linz, Austria), we invited visitors to exploratively interact with an industrial robot, exhibiting restricted interaction capabilities: extending and retracting its arm, depending on the movements of the volunteer. The movements of the arm were pre-programmed and telecontrolled for safety reasons (which was not obvious to the participants). We recorded video data of these interactions and investigated general nonverbal behaviours of the humans interacting with the robot, as well as nonverbal behaviours of people in the audience. Our results showed that people were more interested in exploring the robot's action and perception capabilities than just reproducing the interaction game as introduced by the instructors. We also found that the majority of participants interacting with the robot approached it up to a distance which would be perceived as threatening or intimidating, if it were a human interaction partner. Regarding bystanders, we found examples where people made movements as if trying out variants of the current participant's behaviour.

Cybersecurity in Robotics: Challenges, Quantitative Modeling, and Practice

Mar 16, 2021

Abstract:Robotics is becoming more and more ubiquitous, but the pressure to bring systems to market occasionally comes at the cost of neglecting security mechanisms during development, deployment, or production. As a result, contemporary robotic systems are vulnerable to diverse attack patterns, and an a posteriori hardening is at least challenging, if not impossible. This book aims to stipulate the inclusion of security in robotics from the earliest design phases onward and with a special focus on the cost-benefit tradeoff that can otherwise be an inhibitor for the fast development of affordable systems. We advocate quantitative methods of security management and design, covering vulnerability scoring systems tailored to robotic systems, and accounting for the highly distributed nature of robots as an interplay of potentially very many components. A powerful quantitative approach to model-based security is offered by game theory, providing a rich spectrum of techniques to optimize security against various kinds of attacks. Such a multi-perspective view on security is necessary to address the heterogeneity and complexity of robotic systems. This book is intended as an accessible starter for the theoretician and practitioner working in the field.

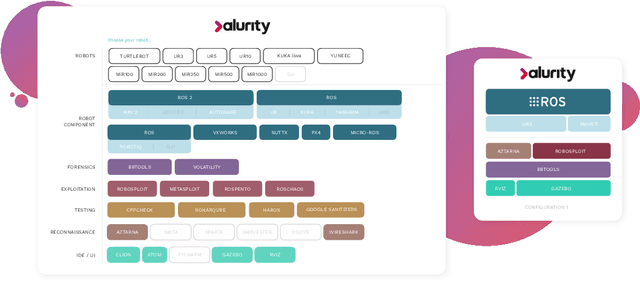

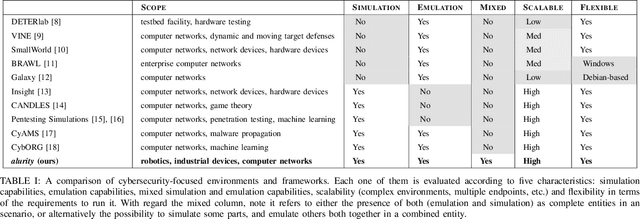

alurity, a toolbox for robot cybersecurity

Oct 16, 2020

Abstract:The reuse of technologies and the inherent complexity of most robotic systems are increasingly leading to robots with wide attack surfaces and a variety of potential vulnerabilities. Given their growing presence in public environments, security research is increasingly becoming more important than in any other area, especially due to the safety implications that robot vulnerabilities could have for humans. We argue that security triage in robotics is still immature and that new tools must be developed to accelerate the testing-triage-exploitation cycle, necessary for prioritizing and accelerating the mitigation of flaws. The present work tackles the current lack of offensive cybersecurity research in robotics by presenting a toolbox and the results obtained with it through several use cases conducted over a one-year period. We propose a modular and composable toolbox for robot cybersecurity: alurity. By ensuring that both roboticists and security researchers working on a project have a common, consistent and easily reproducible development environment, alurity aims to facilitate cybersecurity research and collaboration across teams.

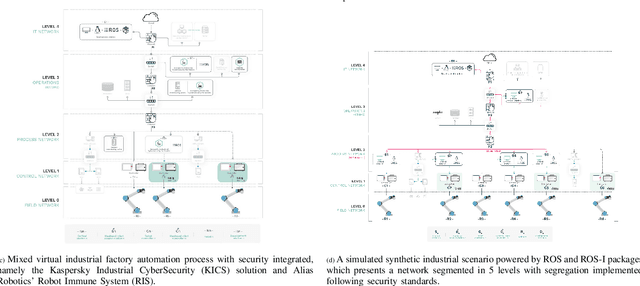

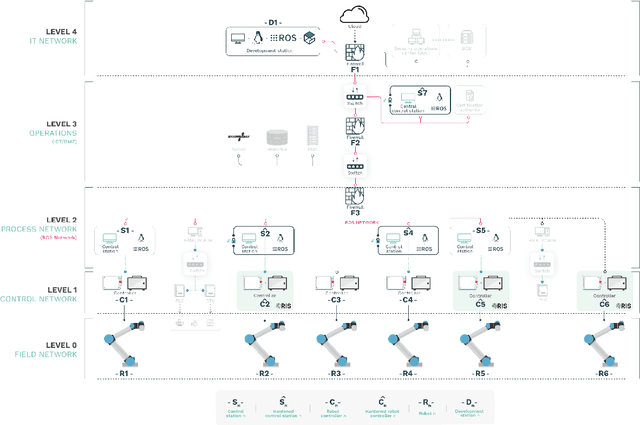

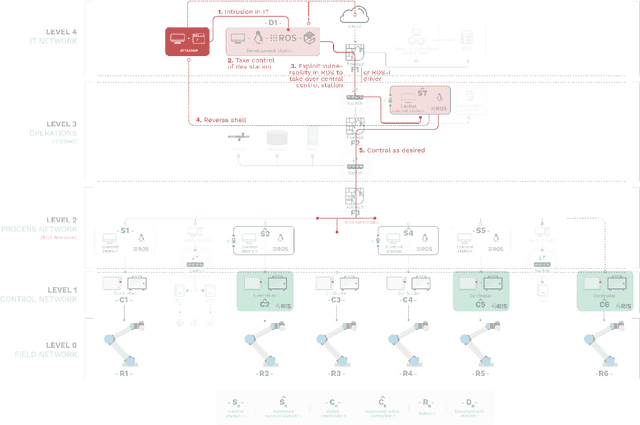

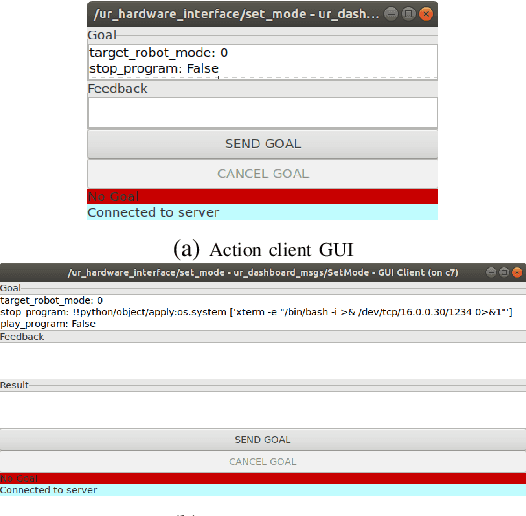

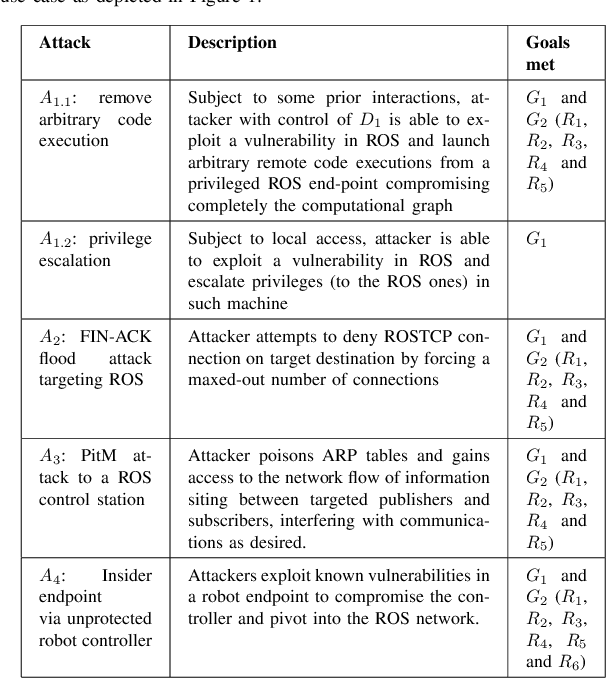

Can ROS be used securely in industry? Red teaming ROS-Industrial

Sep 17, 2020

Abstract:With its growing use in industry, ROS is rapidly becoming a standard in robotics. While developments in ROS 2 show promise, the slow adoption cycles in industry will push widespread industrial adoption of ROS 2 years into the future. ROS will prevail in the meantime, which raises the question: can ROS be used securely for industrial use cases even though security was not considered in its original design? The present study analyzes this question experimentally by performing a targeted offensive security exercise in a synthetic industrial use case involving ROS-Industrial and ROS packages. Our exercise results in four groups of attacks which manage to compromise the ROS computational graph, and all except one take control of most robotic endpoints at will. To the best of our knowledge and given our setup, results do not favour the secure use of ROS in industry today; however, we managed to confirm that the security of certain robotic endpoints holds and remain optimistic about securing ROS industrial deployments.

Introducing the Robot Vulnerability Database (RVD)

Dec 24, 2019

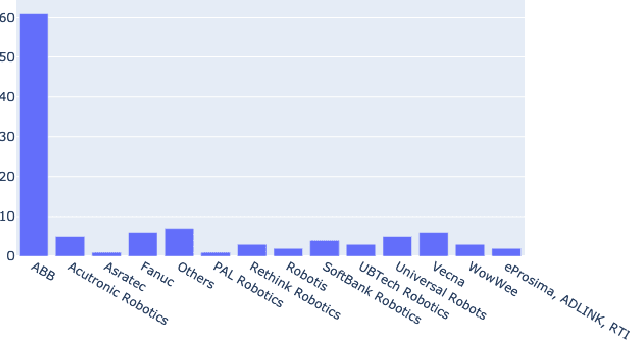

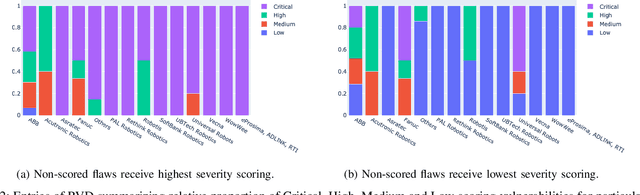

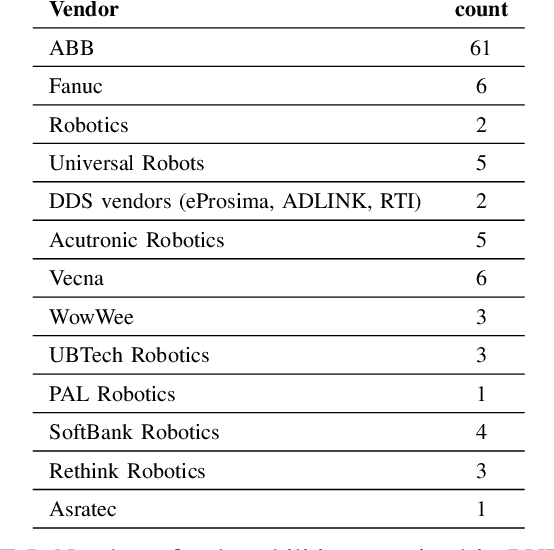

Abstract:Cybersecurity in robotics is an emerging topic that has gained significant traction. Researchers have recently demonstrated some of the potential and effects of cyber attacks on robots. These attacks imply safety-related adverse consequences that can cause human harm or death, or lead to significant integrity loss, clearly exceeding the privacy concerns of the classical IT world. In cybersecurity research, the use of vulnerability databases is a very reliable tool to responsibly disclose vulnerabilities in software products and raise the willingness of vendors to address these issues. In this paper we argue that existing vulnerability databases are of insufficient information density and show some biased content with respect to vulnerabilities in robots. This paper presents the Robot Vulnerability Database (RVD), a directory for responsible disclosure of bugs, weaknesses and vulnerabilities in robots. This article aims to describe the design and process as well as the associated disclosure policy behind RVD. Furthermore, the authors present preliminary selected vulnerabilities already contained in RVD and call on the robotics and security communities to contribute to the endeavour of eliminating zero-day vulnerabilities in robotics.

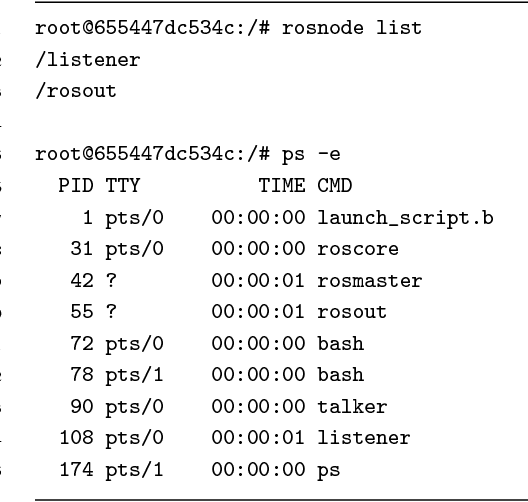

Volatile memory forensics for the Robot Operating System

Dec 22, 2018

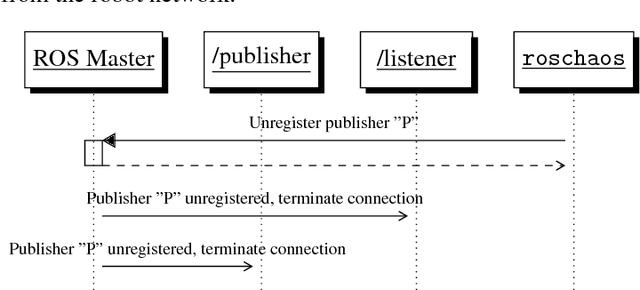

Abstract:The increasing impact of robotics on industry and on society will unavoidably lead to the involvement of robots in incidents and mishaps. In such cases, forensic analyses are key techniques to provide useful evidence on what happened, and try to prevent future incidents. This article discusses volatile memory forensics for the Robot Operating System (ROS). The authors start by providing a general overview of forensic techniques in robotics and then present a robotics-specific Volatility plugin named linux_rosnode, packaged within the ros_volatility project and aimed at extracting evidence from a robot's volatile memory. They demonstrate how this plugin can be used to detect a specific attack pattern on ROS, where a publisher node is unregistered externally, leading to denial of service and disruption of robotic behaviors. Step by step, common practices are introduced for performing forensic analysis and several techniques to capture memory are described. The authors conclude with some remarks on future work while providing references to reproduce their work.
