Stephanie Gross

Transforming Science with Large Language Models: A Survey on AI-assisted Scientific Discovery, Experimentation, Content Generation, and Evaluation

Feb 07, 2025

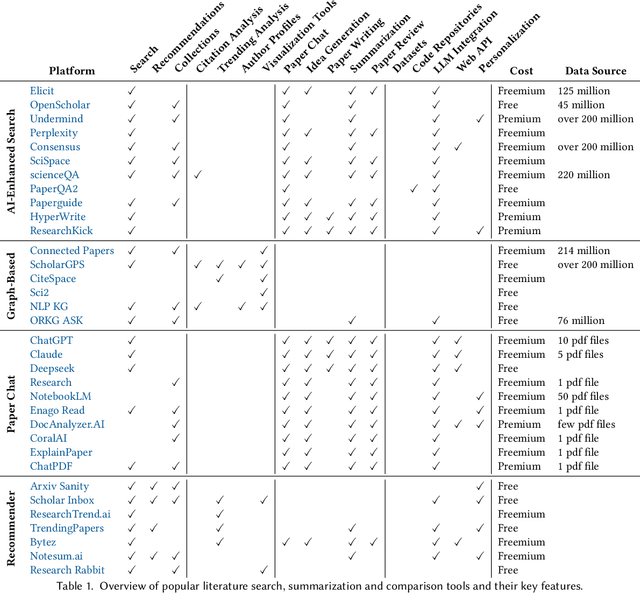

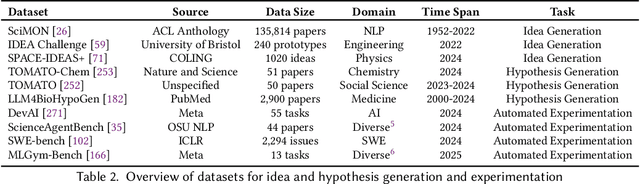

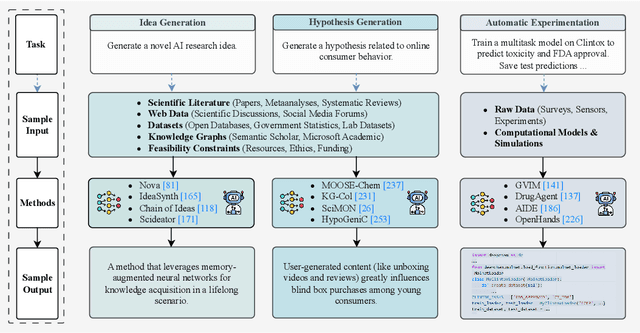

Abstract: With the advent of large multimodal language models, science is now at the threshold of an AI-based technological transformation. Recently, a plethora of new AI models and tools has been proposed, promising to empower researchers and academics worldwide to conduct their research more effectively and efficiently. This includes all aspects of the research cycle, especially (1) searching for relevant literature; (2) generating research ideas and conducting experimentation; generating (3) text-based and (4) multimodal content (e.g., scientific figures and diagrams); and (5) AI-based automatic peer review. In this survey, we provide an in-depth overview of these exciting recent developments, which promise to fundamentally alter the scientific research process for good. Our survey covers the five aspects outlined above, indicating relevant datasets, methods and results (including evaluation) as well as limitations and scope for future research. Ethical concerns regarding shortcomings of these tools and their potential for misuse (fake science, plagiarism, harms to research integrity) take a particularly prominent place in our discussion. We hope that our survey will not only become a reference guide for newcomers to the field but also a catalyst for new AI-based initiatives in the area of "AI4Science".

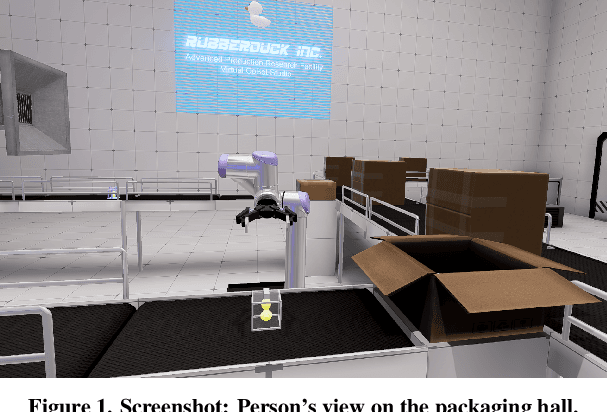

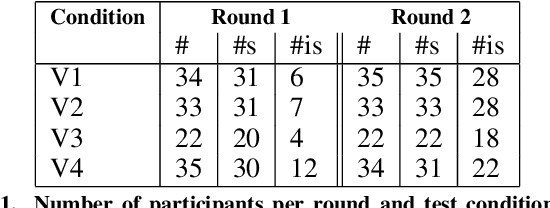

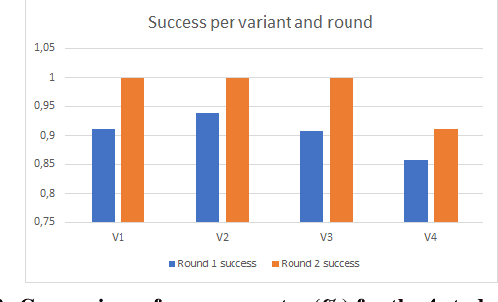

It's your turn! -- A collaborative human-robot pick-and-place scenario in a virtual industrial setting

May 28, 2021

Abstract: In human-robot collaborative interaction scenarios, nonverbal communication plays an important role. Signals sent by the human collaborator need to be identified and interpreted by the robotic system, and, conversely, signals sent by the robot need to be identified and interpreted by the human. In this paper, we focus on the latter. In a VR environment, we implemented nonverbal behavior on an industrial robot that signals to the user that it is now their turn to proceed with a pick-and-place task. The signals were presented in four test conditions: no signal, robot arm gesture, light signal, and a combination of robot arm gesture and light signal. Test conditions were presented to the participants in two rounds. The qualitative analysis focused on (i) potential signals in human behavior indicating why some participants immediately took over from the robot whereas others needed more time to explore, (ii) human reactions after the nonverbal signal of the robot, and (iii) whether participants showed different behaviors in the different test conditions. We could not identify signals explaining why some participants were immediately successful and others were not. There was a range of behaviors after the robot stopped working, e.g., participants rearranged the objects, looked at the robot or the object, or gestured to the robot to proceed. We found evidence that robot deictic gestures helped the human to correctly interpret what to do next. Moreover, there was a strong tendency for humans to interpret the light signal projected on the robot's gripper as a request to hand the object in focus to the robot, whereas a robot's pointing gesture at the object was a strong trigger for the humans to look at the object.

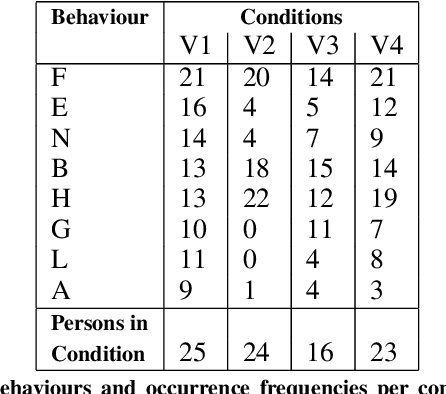

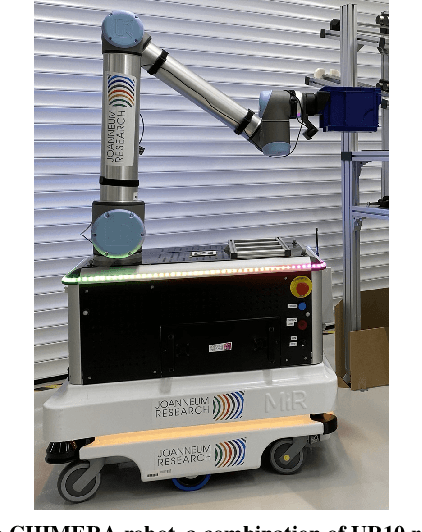

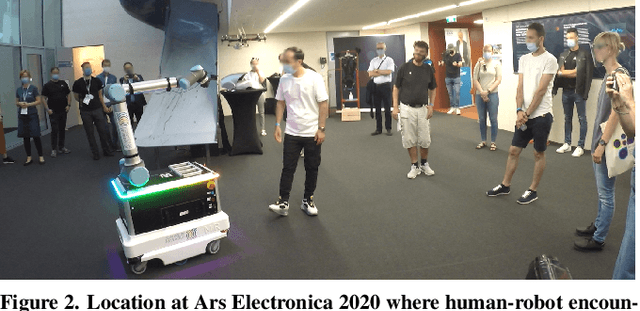

A proxemics game between festival visitors and an industrial robot

May 28, 2021

Abstract: With the increasing application of collaborative robots (cobots) in industrial workplaces, the behavioral effects of human-cobot interactions need to be investigated further. This is particularly important because the nonverbal behaviors of collaboration partners in human-robot teams significantly influence the experience of the human interaction partners and the success of the collaborative task. During the Ars Electronica 2020 Festival for Art, Technology and Society (Linz, Austria), we invited visitors to interact exploratively with an industrial robot exhibiting restricted interaction capabilities: extending and retracting its arm, depending on the movements of the volunteer. For safety reasons, the movements of the arm were pre-programmed and telecontrolled (which was not obvious to the participants). We recorded video data of these interactions and investigated the general nonverbal behaviors of the humans interacting with the robot, as well as the nonverbal behaviors of people in the audience. Our results showed that people were more interested in exploring the robot's action and perception capabilities than in simply reproducing the interaction game as introduced by the instructors. We also found that the majority of participants interacting with the robot approached it up to a distance that would be perceived as threatening or intimidating if it were a human interaction partner. Regarding bystanders, we found examples of people making movements as if trying out variants of the current participant's behavior.
