Horst Pichler

A proxemics game between festival visitors and an industrial robot

May 28, 2021

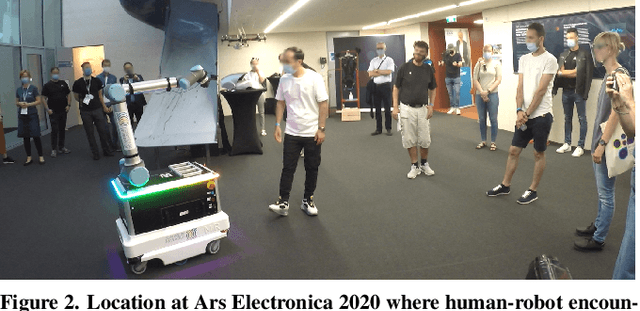

Abstract: With the increasing application of collaborative robots (cobots) in industrial workplaces, the behavioural effects of human-cobot interactions need further investigation. This is particularly important because the nonverbal behaviours of collaboration partners in human-robot teams significantly influence both the experience of the human interaction partners and the success of the collaborative task. During the Ars Electronica 2020 Festival for Art, Technology and Society (Linz, Austria), we invited visitors to interact exploratively with an industrial robot that exhibited restricted interaction capabilities: extending and retracting its arm, depending on the movements of the volunteer. The movements of the arm were pre-programmed and telecontrolled for safety reasons (which was not obvious to the participants). We recorded video data of these interactions and investigated the general nonverbal behaviours of the humans interacting with the robot, as well as the nonverbal behaviours of people in the audience. Our results showed that people were more interested in exploring the robot's action and perception capabilities than in simply reproducing the interaction game as introduced by the instructors. We also found that the majority of participants interacting with the robot approached it to within a distance that would be perceived as threatening or intimidating if it were a human interaction partner. Regarding bystanders, we found examples where people made movements as if trying out variants of the current participant's behaviour.

robo-gym -- An Open Source Toolkit for Distributed Deep Reinforcement Learning on Real and Simulated Robots

Jul 06, 2020

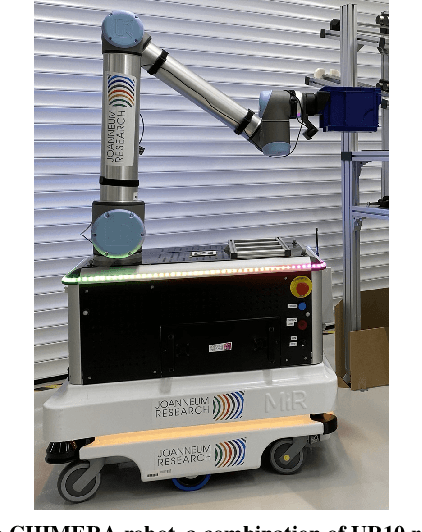

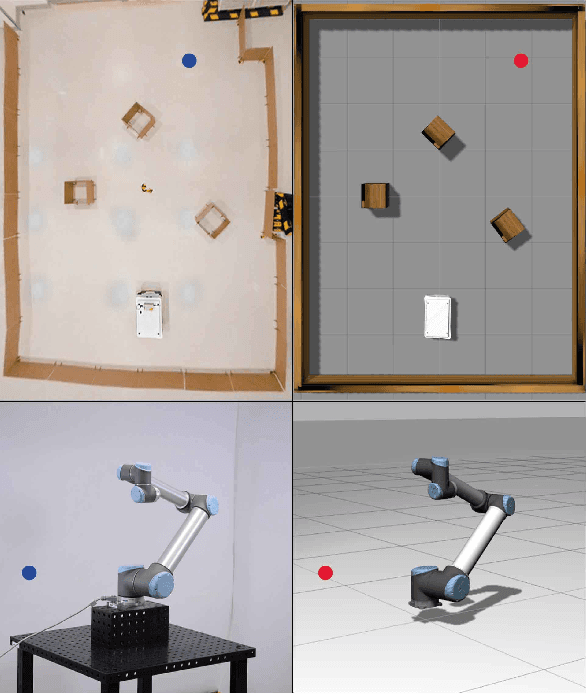

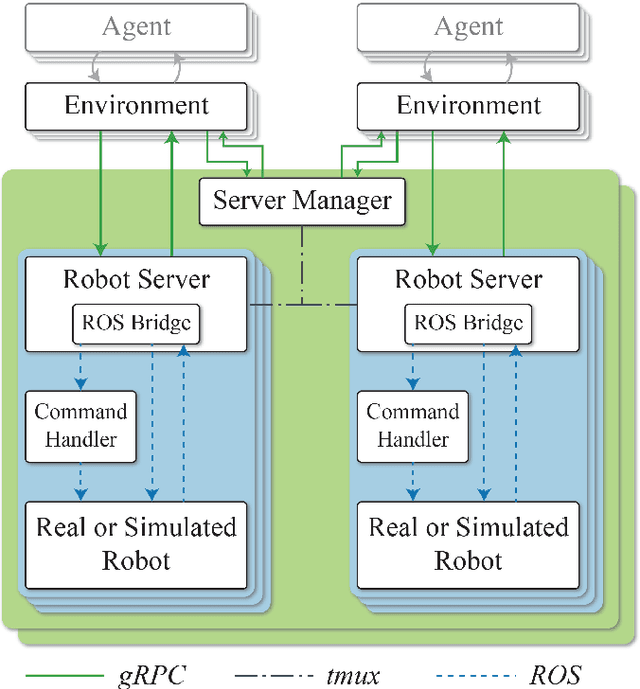

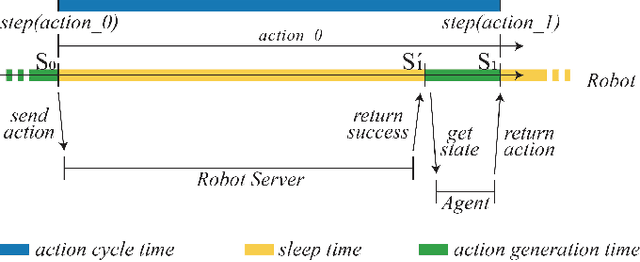

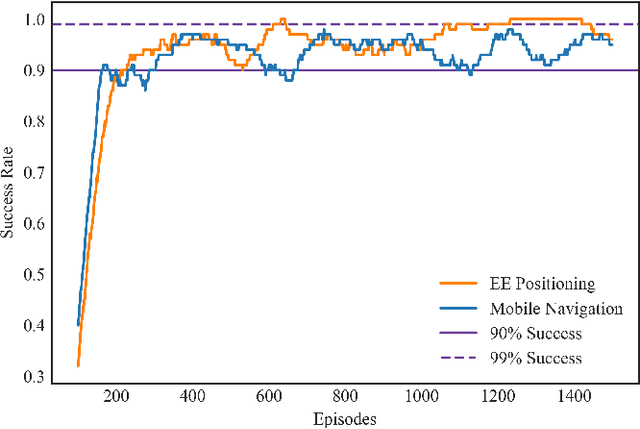

Abstract: Applying Deep Reinforcement Learning (DRL) to complex tasks in the field of robotics has proven to be very successful in recent years. However, most publications focus either on applying it to a task in simulation or to a task in a real-world setup. Although there are great examples of combining the two worlds with the help of transfer learning, doing so often requires a lot of additional work and fine-tuning to make the setup work effectively. In order to increase the use of DRL with real robots and reduce the gap between simulation and real-world robotics, we propose an open source toolkit: robo-gym. We demonstrate a unified setup for simulation and real environments which enables a seamless transfer from training in simulation to application on the robot. We showcase the capabilities and the effectiveness of the framework with two real-world applications featuring industrial robots: a mobile robot and a robot arm. The distributed capabilities of the framework offer several advantages, such as using distributed algorithms, separating the workload of simulation and training across different physical machines, and enabling the future opportunity to train in simulation and the real world at the same time. Finally, we offer an overview and comparison of robo-gym with other frequently used state-of-the-art DRL frameworks.
