Srivatsan Ravi

FedGraph: A Research Library and Benchmark for Federated Graph Learning

Oct 08, 2024

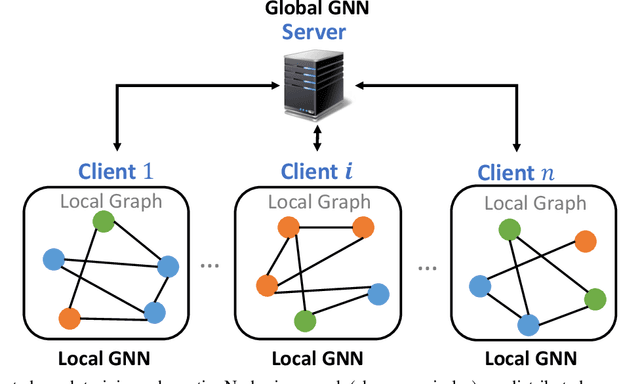

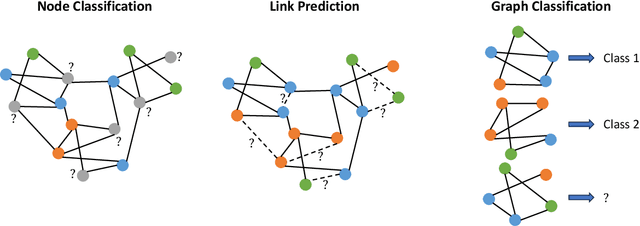

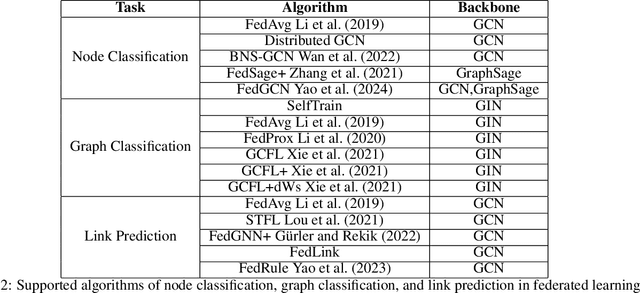

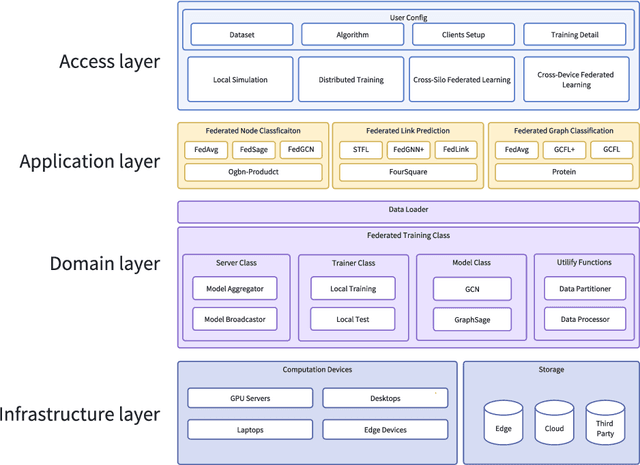

Abstract: Federated graph learning is an emerging field with significant practical challenges. While many algorithms have been proposed to enhance model accuracy, their system performance, crucial for real-world deployment, is often overlooked. To address this gap, we present FedGraph, a research library designed for practical distributed deployment and benchmarking in federated graph learning. FedGraph supports a range of state-of-the-art methods and includes profiling tools for system performance evaluation, focusing on communication and computation costs during training. FedGraph can then facilitate the development of practical applications and guide the design of future algorithms.
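As a rough illustration of what profiling communication and computation costs can mean for one training round, the sketch below times a local update and measures the size of the serialized result. It does not use FedGraph's API: `profile_round`, `toy_train`, and the returned dictionary keys are hypothetical names chosen for this example, and pickled size is only a stand-in for real network traffic.

```python
import pickle
import time


def profile_round(train_fn, params):
    """Run one local training call and report its compute time and upload size.

    Hypothetical helper for illustration; not part of FedGraph.
    """
    start = time.perf_counter()
    updated = train_fn(params)
    compute_seconds = time.perf_counter() - start
    # Serialized size approximates the bytes a client would send to the server.
    upload_bytes = len(pickle.dumps(updated))
    return updated, {"compute_seconds": compute_seconds, "upload_bytes": upload_bytes}


def toy_train(params):
    # Stand-in for a real local training step: shrink every weight slightly.
    return [0.9 * w for w in params]


updated, stats = profile_round(toy_train, [1.0, 2.0, 3.0])
print(stats)
```

Summing these per-round figures over clients and rounds gives a coarse view of where training time and bandwidth go.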

Privacy-Preserving Language Model Inference with Instance Obfuscation

Feb 13, 2024

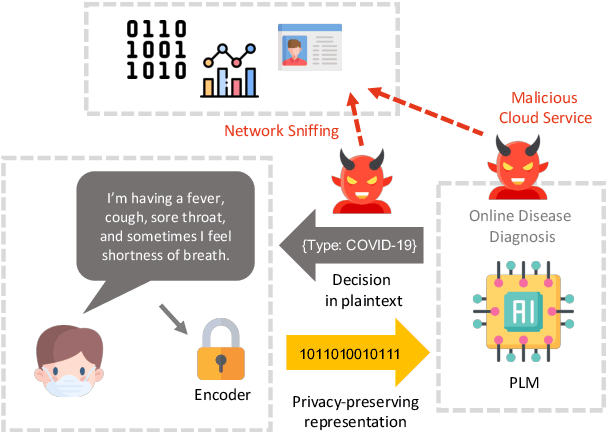

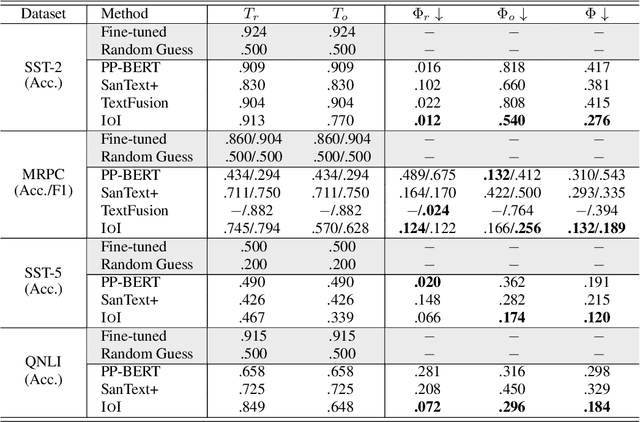

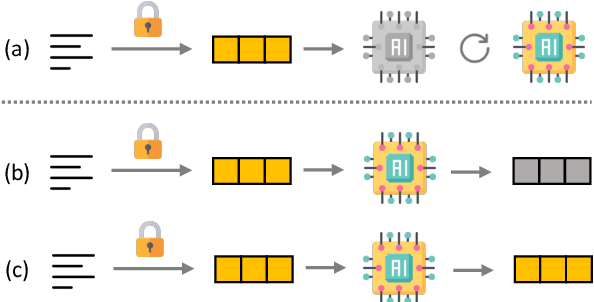

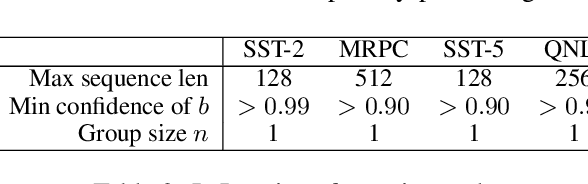

Abstract: Language Models as a Service (LMaaS) offers convenient access for developers and researchers to perform inference using pre-trained language models. Nonetheless, the input data and the inference results containing private information are exposed as plaintext during the service call, leading to privacy issues. Recent studies have started tackling the privacy issue by transforming input data into a privacy-preserving representation on the user end with techniques such as noise addition and content perturbation, while the protection of inference results, namely decision privacy, remains unexplored. In order to maintain the black-box manner of LMaaS, conducting data privacy protection, especially for the decision, is a challenging task because the process has to be seamless to the models and accompanied by limited communication and computation overhead. We thus propose the Instance-Obfuscated Inference (IOI) method, which focuses on addressing the decision privacy issue of natural language understanding tasks in their complete life-cycle. We also conduct comprehensive experiments to evaluate the performance as well as the privacy-protection strength of the proposed method on various benchmarking tasks.

Labeling without Seeing? Blind Annotation for Privacy-Preserving Entity Resolution

Aug 07, 2023

Abstract: The entity resolution problem requires finding pairs across datasets that belong to different owners but refer to the same entity in the real world. To train and evaluate solutions (either rule-based or machine-learning-based) to the entity resolution problem, generating a ground truth dataset with entity pairs or clusters is needed. However, such a data annotation process involves humans as domain oracles to review the plaintext data for all candidate record pairs from different parties, which inevitably infringes the privacy of data owners, especially in privacy-sensitive cases like medical records. To the best of our knowledge, there is no prior work on privacy-preserving ground truth dataset generation, especially in the domain of entity resolution. We propose a novel blind annotation protocol based on homomorphic encryption that allows domain oracles to collaboratively label ground truths without sharing data in plaintext with other parties. In addition, we design a domain-specific easy-to-use language that hides the sophisticated underlying homomorphic encryption layer. Rigorous proof of the privacy guarantee is provided and our empirical experiments via an annotation simulator indicate the feasibility of our privacy-preserving protocol (f-measure on average achieves more than 90% compared with the real ground truths).
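For readers unfamiliar with the f-measure quoted above, the following minimal sketch computes precision, recall, and F1 for a set of annotated matching pairs against a ground-truth set. The record identifiers and the function name `f_measure` are made up for illustration; the abstract does not describe how the authors computed their score beyond naming the metric.

```python
def f_measure(predicted_pairs, true_pairs):
    """Return (precision, recall, F1) for two sets of matched record pairs."""
    predicted_pairs = set(predicted_pairs)
    true_pairs = set(true_pairs)
    true_positives = len(predicted_pairs & true_pairs)
    precision = true_positives / len(predicted_pairs) if predicted_pairs else 0.0
    recall = true_positives / len(true_pairs) if true_pairs else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical pairs: (record id from owner A, record id from owner B).
annotated = {("a1", "b1"), ("a2", "b2"), ("a3", "b9")}
ground_truth = {("a1", "b1"), ("a2", "b2"), ("a4", "b4")}
precision, recall, f1 = f_measure(annotated, ground_truth)
print(precision, recall, f1)
```

Here two of the three annotated pairs are correct and two of the three true pairs are found, so precision, recall, and F1 are all 2/3.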

FedML-HE: An Efficient Homomorphic-Encryption-Based Privacy-Preserving Federated Learning System

Mar 20, 2023

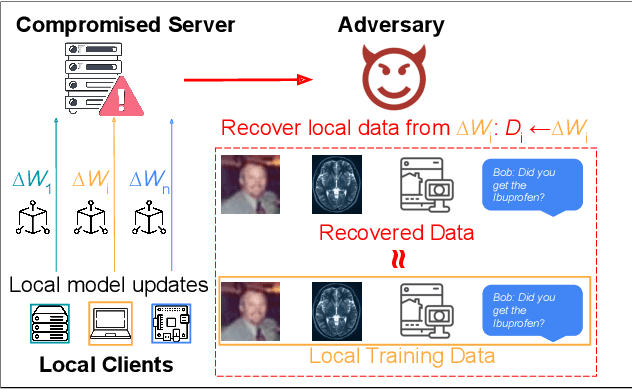

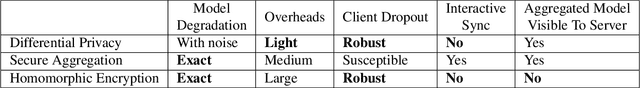

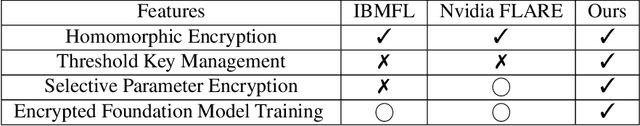

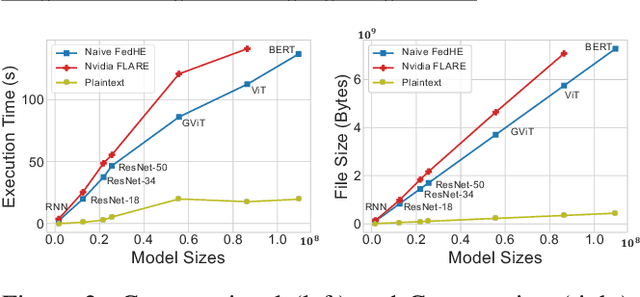

Abstract: Federated Learning (FL) enables machine learning model training on distributed edge devices by aggregating local model updates rather than local data. However, privacy concerns arise as the FL server's access to local model updates can potentially reveal sensitive personal information by performing attacks like gradient inversion recovery. To address these concerns, privacy-preserving methods, such as Homomorphic Encryption (HE)-based approaches, have been proposed. Despite HE's post-quantum security advantages, its applications suffer from impractical overheads. In this paper, we present FedML-HE, the first practical system for efficient HE-based secure federated aggregation that provides a user/device-friendly deployment platform. FedML-HE utilizes a novel universal overhead optimization scheme, significantly reducing both computation and communication overheads during deployment while providing customizable privacy guarantees. Our optimized system demonstrates considerable overhead reduction, particularly for large models (e.g., ~10x reduction for HE-federated training of ResNet-50 and ~40x reduction for BERT), demonstrating the potential for scalable HE-based FL deployment.
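The idea of aggregating model updates under encryption can be shown with a toy additively homomorphic scheme. The sketch below is not FedML-HE's implementation and does not use the scheme from the paper: it swaps in a textbook Paillier cryptosystem with small fixed primes (insecure, for demonstration only), a fixed-point `SCALE` chosen arbitrarily, and the assumption that clients share the private key while the server sees only ciphertexts. All function names are hypothetical.

```python
import math
import random

SCALE = 1000  # fixed-point scale for encoding floats (assumed for this demo)


def keygen(p=2**31 - 1, q=2**61 - 1):
    """Toy Paillier key generation with two known Mersenne primes (insecure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # valid because the generator is g = n + 1
    return n, (lam, mu)


def encrypt(n, value):
    """Encrypt a float; negative values are encoded modulo n."""
    m = round(value * SCALE) % n
    while True:
        r = random.randrange(1, n)
        if math.gcd(r, n) == 1:
            break
    n2 = n * n
    # (n + 1)^m mod n^2 equals 1 + m*n, so no large exponentiation is needed.
    return (1 + m * n) % n2 * pow(r, n, n2) % n2


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % (n * n)


def decrypt(n, private_key, c):
    lam, mu = private_key
    m = (pow(c, lam, n * n) - 1) // n * mu % n
    if m > n // 2:  # map large residues back to negative numbers
        m -= n
    return m / SCALE


n, private_key = keygen()
client_updates = [[0.5, -1.25], [1.5, 0.25], [-0.5, 1.0]]

# Each client encrypts its update; the server only combines ciphertexts.
encrypted = [[encrypt(n, w) for w in update] for update in client_updates]
aggregate = encrypted[0]
for update in encrypted[1:]:
    aggregate = [add_encrypted(n, a, b) for a, b in zip(aggregate, update)]

# A key holder decrypts the sum and divides by the number of clients.
averaged = [decrypt(n, private_key, c) / len(client_updates) for c in aggregate]
print(averaged)
```

Every ciphertext here is far larger than the float it encodes, which hints at why overhead reduction matters for large models.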

Secure Federated Learning for Neuroimaging

May 11, 2022

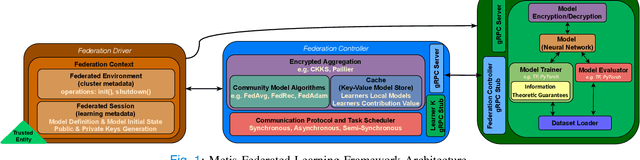

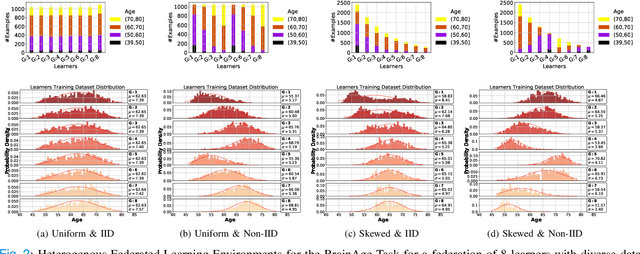

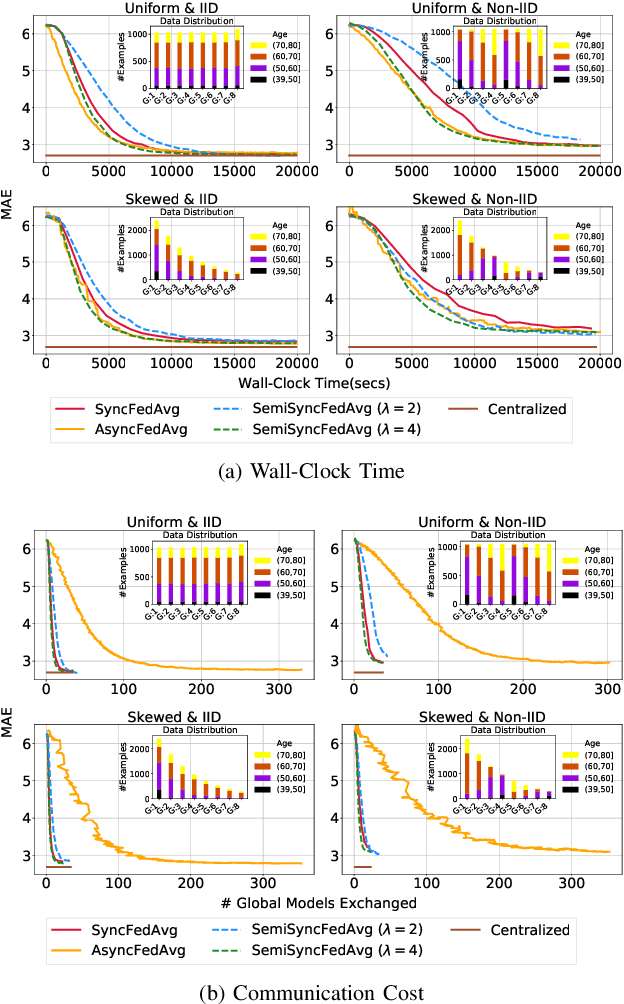

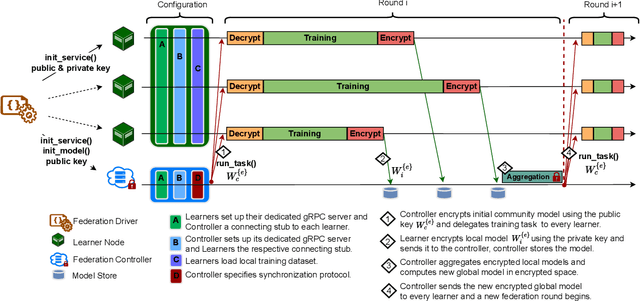

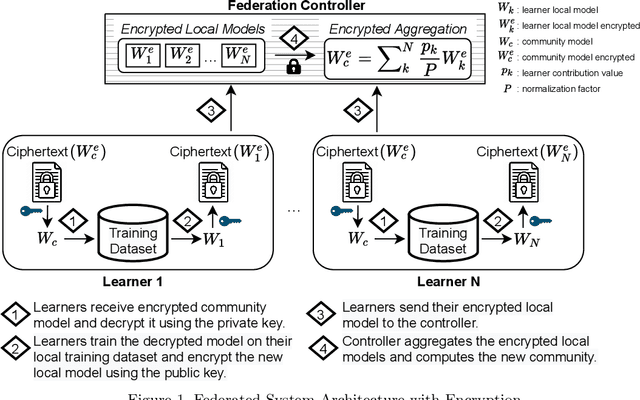

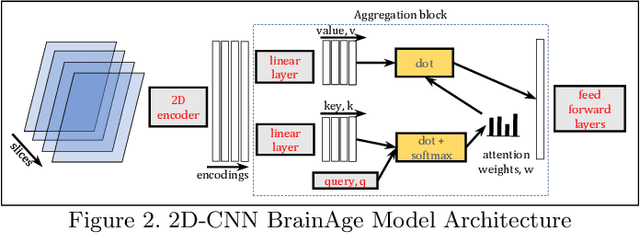

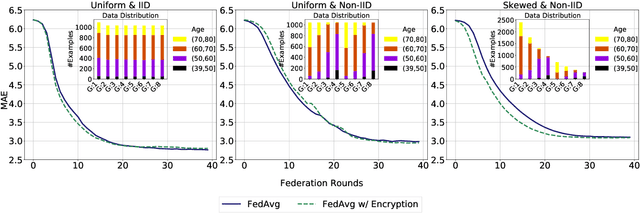

Abstract: The amount of biomedical data continues to grow rapidly. However, the ability to collect data from multiple sites for joint analysis remains challenging due to security, privacy, and regulatory concerns. We present a Secure Federated Learning architecture, MetisFL, which enables distributed training of neural networks over multiple data sources without sharing data. Each site trains the neural network over its private data for some time, then shares the neural network parameters (i.e., weights, gradients) with a Federation Controller, which in turn aggregates the local models, sends the resulting community model back to each site, and the process repeats. Our architecture provides strong security and privacy. First, sample data never leaves a site. Second, neural parameters are encrypted before transmission and the community model is computed under fully-homomorphic encryption. Finally, we use information-theoretic methods to limit information leakage from the neural model to prevent a curious site from performing membership attacks. We demonstrate this architecture in neuroimaging. Specifically, we investigate training neural models to classify Alzheimer's disease, and estimate Brain Age, from magnetic resonance imaging datasets distributed across multiple sites, including heterogeneous environments where sites have different amounts of data, statistical distributions, and computational capabilities.
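The train, share, aggregate, and redistribute cycle described above can be sketched in a few lines. This is not MetisFL code: the one-parameter model, the `local_train` and `federated_average` helpers, and the choice to weight each site by its number of samples (the common FedAvg convention) are all assumptions made for illustration, and encryption is omitted.

```python
def local_train(params, data, lr=0.5, steps=5):
    """Toy local training: fit one scalar to the site's data by gradient descent."""
    w = params[0]
    for _ in range(steps):
        grad = sum(2 * (w - x) for x in data) / len(data)
        w -= lr * grad
    return [w]


def federated_average(site_params, site_sizes):
    """Controller step: sample-weighted average of the sites' parameters."""
    total = sum(site_sizes)
    dim = len(site_params[0])
    return [
        sum(p[i] * size for p, size in zip(site_params, site_sizes)) / total
        for i in range(dim)
    ]


# Private data stays at each site; only parameters are exchanged.
site_data = [[1.0, 2.0, 3.0], [10.0]]
community_model = [0.0]

for _ in range(3):  # federation rounds
    local_models = [local_train(community_model, data) for data in site_data]
    community_model = federated_average(local_models, [len(d) for d in site_data])

print(community_model)
```

In this toy setting the community model settles at the mean of all samples across sites, even though no site ever reveals its raw data.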

Secure Neuroimaging Analysis using Federated Learning with Homomorphic Encryption

Aug 07, 2021

Abstract: Federated learning (FL) enables distributed computation of machine learning models over various disparate, remote data sources, without requiring the transfer of any individual data to a centralized location. This results in an improved generalizability of models and efficient scaling of computation as more sources and larger datasets are added to the federation. Nevertheless, recent membership attacks show that private or sensitive personal data can sometimes be leaked or inferred when model parameters or summary statistics are shared with a central site, requiring improved security solutions. In this work, we propose a framework for secure FL using fully-homomorphic encryption (FHE). Specifically, we use the CKKS construction, an approximate, floating-point-compatible scheme that benefits from ciphertext packing and rescaling. In our evaluation on large-scale brain MRI datasets, we use our proposed secure FL framework to train a deep learning model to predict a person's age from distributed MRI scans, a common benchmarking task, and demonstrate that there is no degradation in the learning performance between the encrypted and non-encrypted federated models.
