Sharada P. Mohanty

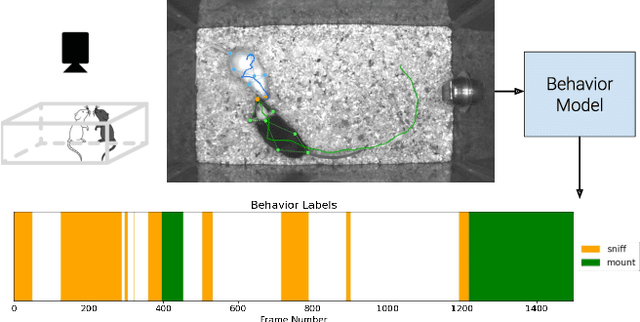

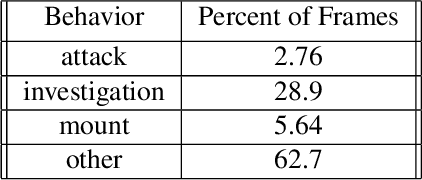

The Multi-Agent Behavior Dataset: Mouse Dyadic Social Interactions

Apr 07, 2021

Abstract: Multi-agent behavior modeling aims to understand the interactions that occur between agents. We present a multi-agent dataset from behavioral neuroscience, the Caltech Mouse Social Interactions (CalMS21) Dataset. Our dataset consists of trajectory data of social interactions, recorded from videos of freely behaving mice in a standard resident-intruder assay. The CalMS21 dataset is part of the Multi-Agent Behavior Challenge 2021, and as a next step we aim to incorporate datasets from other domains studying multi-agent behavior. To help accelerate behavioral studies, the CalMS21 dataset provides a benchmark to evaluate the performance of automated behavior classification methods in three settings: (1) training on large behavioral datasets all annotated by a single annotator, (2) style transfer to learn inter-annotator differences in behavior definitions, and (3) learning new behaviors of interest given limited training data. The dataset consists of 6 million frames of unlabelled tracked poses of interacting mice, as well as over 1 million frames with tracked poses and corresponding frame-level behavior annotations. The challenge posed by our dataset is to classify behaviors accurately using both labelled and unlabelled tracking data, and to generalize to new annotators and behaviors.

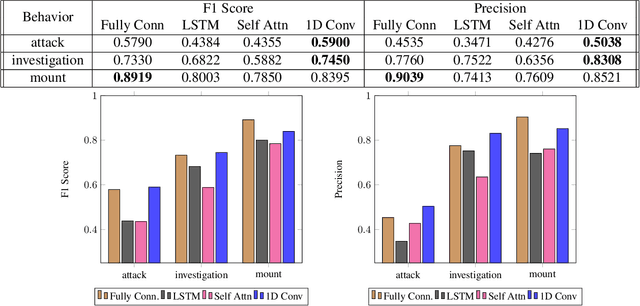

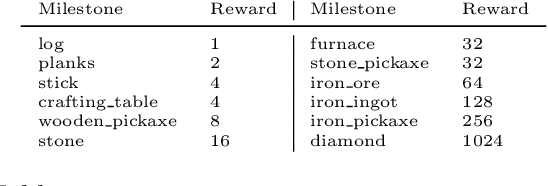

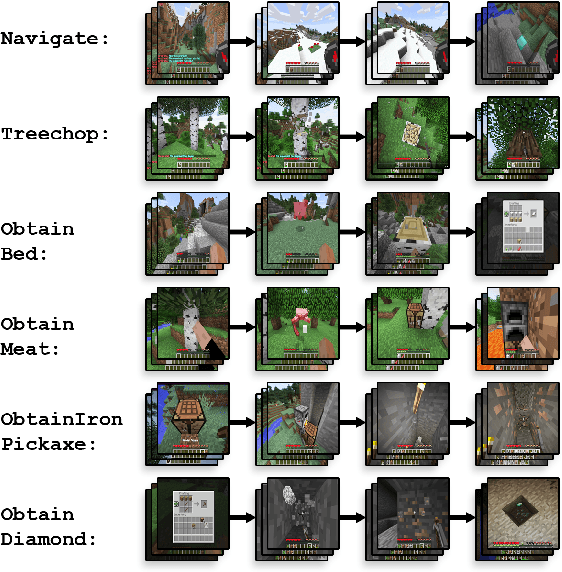

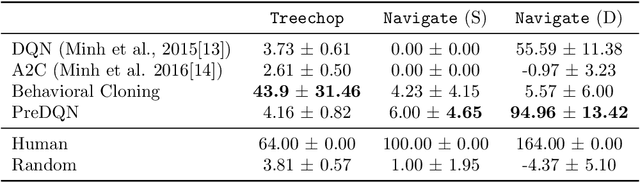

Retrospective Analysis of the 2019 MineRL Competition on Sample Efficient Reinforcement Learning

Mar 27, 2020

Abstract: To facilitate research in the direction of sample-efficient reinforcement learning, we held the MineRL Competition on Sample-Efficient Reinforcement Learning Using Human Priors at the Thirty-third Conference on Neural Information Processing Systems (NeurIPS 2019). The primary goal of this competition was to promote the development of algorithms that use human demonstrations alongside reinforcement learning to reduce the number of samples needed to solve complex, hierarchical, and sparse environments. We describe the competition and provide an overview of the top solutions, each of which uses deep reinforcement learning and/or imitation learning. We also discuss the impact of our organizational decisions on the competition as well as future directions for improvement.

Adversarial Vision Challenge

Aug 06, 2018

Abstract: The NIPS 2018 Adversarial Vision Challenge is a competition to facilitate measurable progress towards robust machine vision models and more generally applicable adversarial attacks. This document is an updated version of our competition proposal that was accepted in the competition track of the 32nd Conference on Neural Information Processing Systems (NIPS 2018).

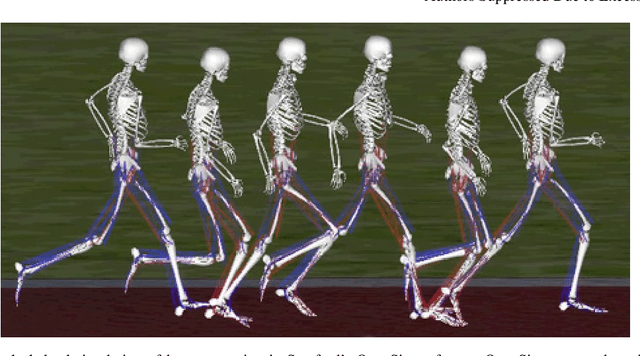

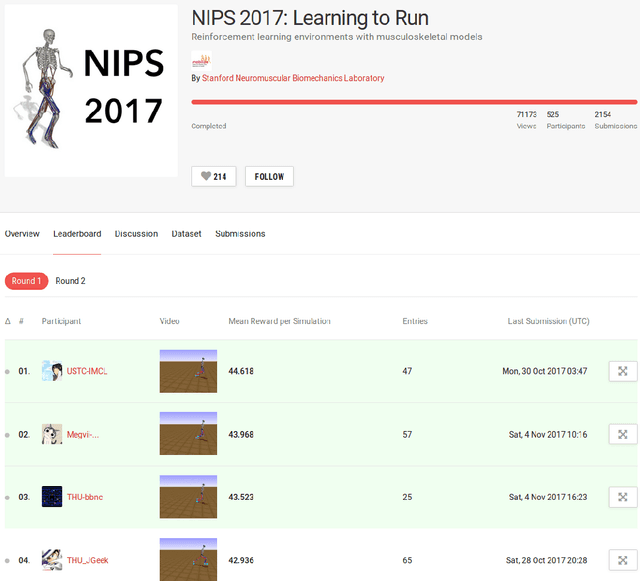

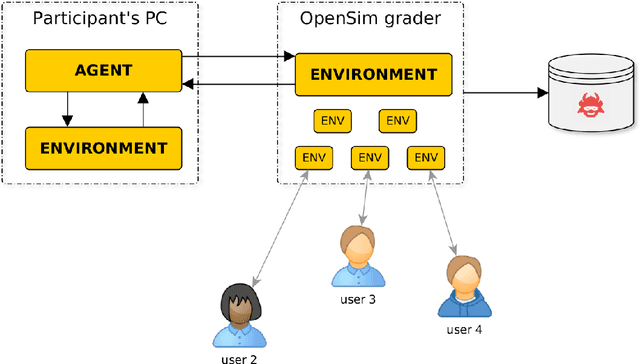

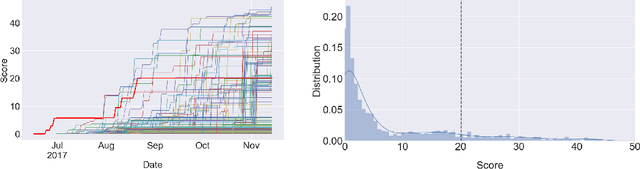

Learning to Run challenge: Synthesizing physiologically accurate motion using deep reinforcement learning

Mar 31, 2018

Abstract: Synthesizing physiologically accurate human movement in a variety of conditions can help practitioners plan surgeries, design experiments, or prototype assistive devices in simulated environments, reducing time and costs and improving treatment outcomes. Because of the large and complex solution spaces of biomechanical models, current methods are constrained to specific movements and models, requiring careful design of a controller and hindering many possible applications. We sought to discover whether modern optimization methods can efficiently explore these complex spaces. To do this, we posed the problem as a competition in which participants were tasked with developing a controller to enable a physiologically based human model to navigate a complex obstacle course as quickly as possible, without using any experimental data. They were provided with a human musculoskeletal model and a physics-based simulation environment. In this paper, we discuss the design of the competition, technical difficulties, results, and analysis of the top controllers. The challenge proved that deep reinforcement learning techniques, despite their high computational cost, can be successfully employed as an optimization method for synthesizing physiologically feasible motion in high-dimensional biomechanical systems.

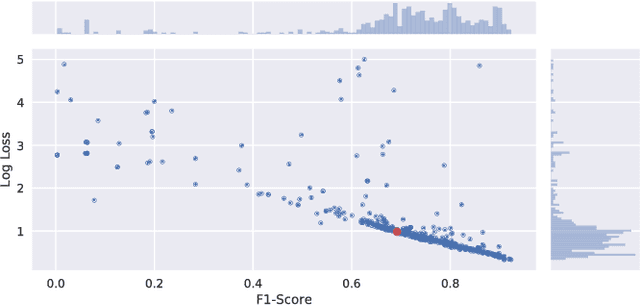

Learning to Recognize Musical Genre from Audio

Mar 13, 2018

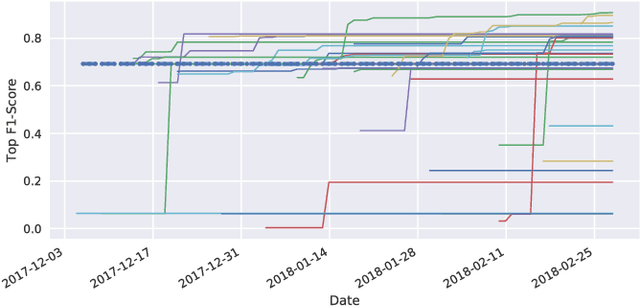

Abstract: We summarize our experience running a challenge with open data for musical genre recognition. These notes motivate the task and the challenge design, present statistics about the submissions, and report the results.
