Jennifer L. Hicks

Learning to Run challenge: Synthesizing physiologically accurate motion using deep reinforcement learning

Mar 31, 2018

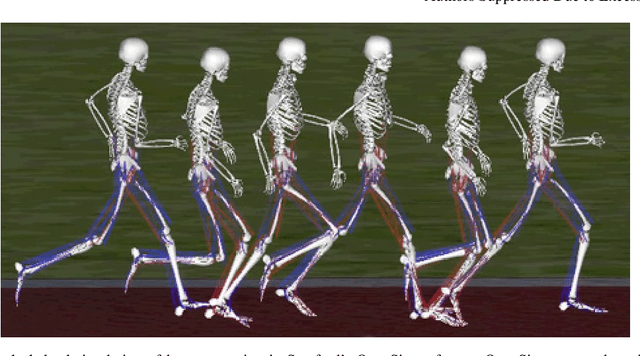

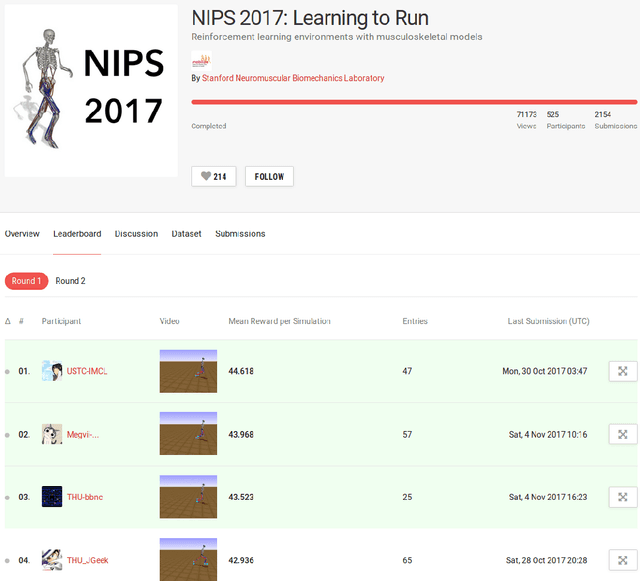

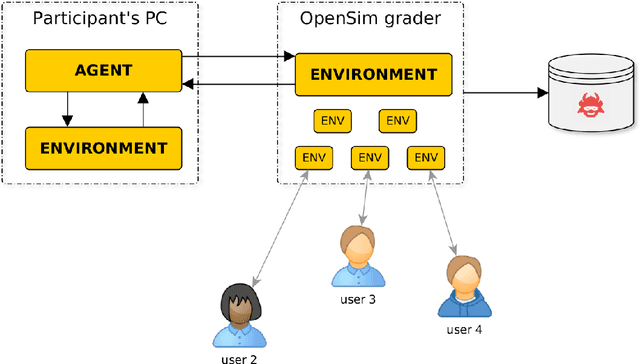

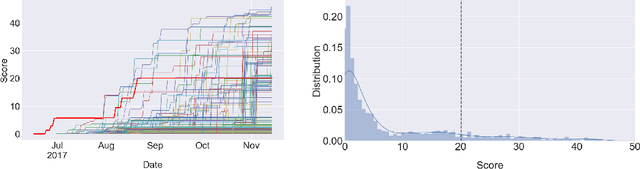

Abstract:Synthesizing physiologically-accurate human movement in a variety of conditions can help practitioners plan surgeries, design experiments, or prototype assistive devices in simulated environments, reducing time and costs and improving treatment outcomes. Because of the large and complex solution spaces of biomechanical models, current methods are constrained to specific movements and models, requiring careful design of a controller and hindering many possible applications. We sought to discover if modern optimization methods efficiently explore these complex spaces. To do this, we posed the problem as a competition in which participants were tasked with developing a controller to enable a physiologically-based human model to navigate a complex obstacle course as quickly as possible, without using any experimental data. They were provided with a human musculoskeletal model and a physics-based simulation environment. In this paper, we discuss the design of the competition, technical difficulties, results, and analysis of the top controllers. The challenge proved that deep reinforcement learning techniques, despite their high computational cost, can be successfully employed as an optimization method for synthesizing physiologically feasible motion in high-dimensional biomechanical systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge