Sergio Barbarossa

Learning Consistent Causal Abstraction Networks

Feb 02, 2026

Abstract:Causal artificial intelligence aims to enhance explainability, trustworthiness, and robustness in AI by leveraging structural causal models (SCMs). In this pursuit, recent advances formalize network sheaves and cosheaves of causal knowledge. Pushing in the same direction, we tackle the learning of a consistent causal abstraction network (CAN), a sheaf-theoretic framework where (i) SCMs are Gaussian, (ii) restriction maps are transposes of constructive linear causal abstractions (CAs) adhering to the semantic embedding principle, and (iii) edge stalks correspond, up to permutation, to the node stalks of more detailed SCMs. Our problem formulation separates into edge-specific local Riemannian problems and avoids nonconvex objectives. We propose an efficient search procedure that solves the local problems with SPECTRAL, our iterative method with closed-form updates, which is suitable for both positive definite and positive semidefinite covariance matrices. Experiments on synthetic data show competitive performance in the CA learning task and successful recovery of diverse CAN structures.

VitaGraph: Building a Knowledge Graph for Biologically Relevant Learning Tasks

May 16, 2025

Abstract:The intrinsic complexity of human biology presents ongoing challenges to scientific understanding. Researchers collaborate across disciplines to expand our knowledge of the biological interactions that define human life. AI methodologies have emerged as powerful tools across scientific domains, particularly in computational biology, where graph data structures effectively model biological systems such as protein-protein interaction (PPI) networks and gene functional networks. These networks are used as datasets for key network medicine tasks, such as gene-disease association prediction, drug repurposing, and polypharmacy side effect studies. Reliable predictions from machine learning models require high-quality foundational data. In this work, we present a comprehensive multi-purpose biological knowledge graph constructed by integrating and refining multiple publicly available datasets. Building upon the Drug Repurposing Knowledge Graph (DRKG), we define a pipeline tasked with a) cleaning inconsistencies and redundancies present in DRKG, b) coalescing information from the main available public data sources, and c) enriching the graph nodes with expressive feature vectors such as molecular fingerprints and gene ontologies. Biologically and chemically relevant features improve the capacity of machine learning models to generate accurate and well-structured embedding spaces. The resulting resource represents a coherent and reliable biological knowledge graph that serves as a state-of-the-art platform to advance research in computational biology and precision medicine. Moreover, it offers the opportunity to benchmark graph-based machine learning and network medicine models on relevant tasks. We demonstrate the effectiveness of the proposed dataset by benchmarking it on the tasks of drug repurposing, PPI prediction, and side-effect prediction, modeled as link prediction problems.

Physics-Informed Topological Signal Processing for Water Distribution Network Monitoring

May 12, 2025

Abstract:Water management is one of the most critical challenges facing our society, together with population increase and climate change. Water scarcity requires a better characterization and monitoring of Water Distribution Networks (WDNs). This paper presents a novel framework for monitoring WDNs by integrating physics-informed modeling of the nonlinear interactions between pressure and flow data with Topological Signal Processing (TSP) techniques. We represent pressure and flow data as signals defined over a second-order cell complex, enabling accurate estimation of water pressures and flows throughout the entire network from sparse sensor measurements. By formalizing hydraulic conservation laws through the TSP framework, we provide a comprehensive representation of nodal pressures and edge flows that incorporates higher-order interactions captured through the formalism of cell complexes. This provides a principled way to decompose the water flows in WDNs into three orthogonal signal components (irrotational, solenoidal, and harmonic). The spectral representations of these components inherently reflect the conservation laws governing the water pressures and flows. Sparse representations in the spectral domain enable topology-based sampling and reconstruction of nodal pressures and water flows from sparse measurements. Our results demonstrate that employing cell complex-based signal representations enhances the accuracy of edge signal reconstruction, due to proper modeling of both conservative and non-conservative flows along the polygonal cells.
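The three-component split mentioned in this abstract can be illustrated with a minimal NumPy sketch of the standard Hodge decomposition on a toy second-order cell complex. The toy complex, the incidence matrices `B1` and `B2`, and the function name `hodge_decompose` are illustrative assumptions; this is not the paper's physics-informed model and uses no hydraulic data.

```python
import numpy as np

def hodge_decompose(B1, B2, f):
    """Split an edge signal f into irrotational, solenoidal and harmonic parts.

    B1: node-to-edge incidence matrix (N x E).
    B2: edge-to-cell incidence matrix (E x P), with B1 @ B2 = 0.
    """
    # Orthogonal projector onto im(B1^T): flows induced by node potentials.
    f_irr = np.linalg.pinv(B1) @ (B1 @ f)
    # Orthogonal projector onto im(B2): flows circulating around filled cells.
    f_sol = B2 @ (np.linalg.pinv(B2) @ f)
    # The remainder lies in the kernel of the Hodge Laplacian.
    f_har = f - f_irr - f_sol
    return f_irr, f_sol, f_har

# Hypothetical toy complex: a filled square 0-1-2-3 plus an unfilled triangle 2-4-3.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (2, 4), (4, 3)]
n_nodes = 5
B1 = np.zeros((n_nodes, len(edges)))
for e, (tail, head) in enumerate(edges):
    B1[tail, e] = -1.0
    B1[head, e] = 1.0

# One polygonal cell (the square), oriented along edges 0, 1, 2, 3.
B2 = np.zeros((len(edges), 1))
B2[[0, 1, 2, 3], 0] = 1.0

rng = np.random.default_rng(0)
f = rng.normal(size=len(edges))  # synthetic edge flow
f_irr, f_sol, f_har = hodge_decompose(B1, B2, f)
```

In this sketch the solenoidal and harmonic parts have zero divergence (`B1 @ f_sol` and `B1 @ f_har` vanish), while the irrotational and harmonic parts have zero circulation around the filled cell (`B2.T @ f_irr` and `B2.T @ f_har` vanish).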

SQ-GAN: Semantic Image Communications Using Masked Vector Quantization

Feb 13, 2025

Abstract:This work introduces Semantically Masked VQ-GAN (SQ-GAN), a novel approach integrating generative models to optimize image compression for semantic/task-oriented communications. SQ-GAN employs off-the-shelf semantic segmentation and a newly developed semantic-conditioned adaptive mask module (SAMM) to selectively encode semantically significant features of the images. SQ-GAN outperforms state-of-the-art image compression schemes such as JPEG2000 and BPG across multiple metrics, including perceptual quality and semantic segmentation accuracy on the post-decoding reconstructed image, at extremely low compression rates expressed in bits per pixel.

Learning Sheaf Laplacian Optimizing Restriction Maps

Jan 31, 2025

Abstract:The aim of this paper is to propose a novel framework to infer the sheaf Laplacian, including the topology of a graph and the restriction maps, from a set of data observed over the nodes of a graph. The proposed method is based on sheaf theory, which represents an important generalization of graph signal processing. The learning problem aims to find the sheaf Laplacian that minimizes the total variation of the observed data, where the variation over each edge is also locally minimized by optimizing the associated restriction maps. Compared to alternative methods based on semidefinite programming, our solution is significantly more numerically efficient, as all its fundamental steps are resolved in closed form. The method is numerically tested on data consisting of vectors defined over subspaces of varying dimensions at each node. We demonstrate how the resulting graph is influenced by two key factors: the cross-correlation and the dimensionality difference of the data residing on the graph's nodes.
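As a hedged illustration of the objects named in this abstract, the sketch below assembles a sheaf Laplacian from given restriction maps and checks that its quadratic form equals the edge-wise total variation. It does not implement the paper's learning procedure: the restriction maps here are random placeholders, and the function name `sheaf_laplacian`, the node dimensions, and the edge list are assumptions made for the example.

```python
import numpy as np

def sheaf_laplacian(node_dims, edges, maps):
    """Build L = delta^T delta from restriction maps.

    node_dims: dimension of the stalk at each node.
    edges:     list of (u, v) node pairs.
    maps:      list of (F_u, F_v); F_u has shape (d_e, node_dims[u]).
    """
    offsets = np.concatenate(([0], np.cumsum(node_dims)))
    blocks = []
    for (u, v), (F_u, F_v) in zip(edges, maps):
        # One block row of the coboundary operator per edge.
        row = np.zeros((F_u.shape[0], offsets[-1]))
        row[:, offsets[u]:offsets[u + 1]] = F_u
        row[:, offsets[v]:offsets[v + 1]] = -F_v
        blocks.append(row)
    delta = np.vstack(blocks)
    return delta.T @ delta, offsets

# Hypothetical setup: node stalks of different dimensions, 2-dimensional edge stalks.
rng = np.random.default_rng(1)
node_dims = [2, 3, 2]
edges = [(0, 1), (1, 2)]
maps = [(rng.normal(size=(2, node_dims[u])), rng.normal(size=(2, node_dims[v])))
        for u, v in edges]

L, offsets = sheaf_laplacian(node_dims, edges, maps)
x = rng.normal(size=offsets[-1])  # stacked node signals

# Total variation as a quadratic form and as a sum of per-edge disagreements.
tv_quadratic = x @ L @ x
tv_edges = sum(
    np.sum((F_u @ x[offsets[u]:offsets[u + 1]] - F_v @ x[offsets[v]:offsets[v + 1]]) ** 2)
    for (u, v), (F_u, F_v) in zip(edges, maps)
)
```

The per-edge form makes explicit why the variation over each edge can be treated locally: each term depends only on the two restriction maps attached to that edge.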

Topological Signal Processing and Learning: Recent Advances and Future Challenges

Dec 02, 2024

Abstract:Developing methods to process irregularly structured data is crucial in applications like gene-regulatory, brain, power, and socioeconomic networks. Graphs have been the go-to algebraic tool for modeling the structure via nodes and edges capturing their interactions, leading to the establishment of the fields of graph signal processing (GSP) and graph machine learning (GML). Key graph-aware methods include Fourier transform, filtering, sampling, as well as topology identification and spatiotemporal processing. Although versatile, graphs can model only pairwise dependencies in the data. To this end, topological structures such as simplicial and cell complexes have emerged as algebraic representations for more intricate structure modeling in data-driven systems, fueling the rapid development of novel topological-based processing and learning methods. This paper first presents the core principles of topological signal processing through the Hodge theory, a framework instrumental in propelling the field forward thanks to principled connections with GSP-GML. It then outlines advances in topological signal representation, filtering, and sampling, as well as inferring topological structures from data, processing spatiotemporal topological signals, and connections with topological machine learning. The impact of topological signal processing and learning is finally highlighted in applications dealing with flow data over networks, geometric processing, statistical ranking, biology, and semantic communication.

Language-Oriented Semantic Latent Representation for Image Transmission

May 16, 2024

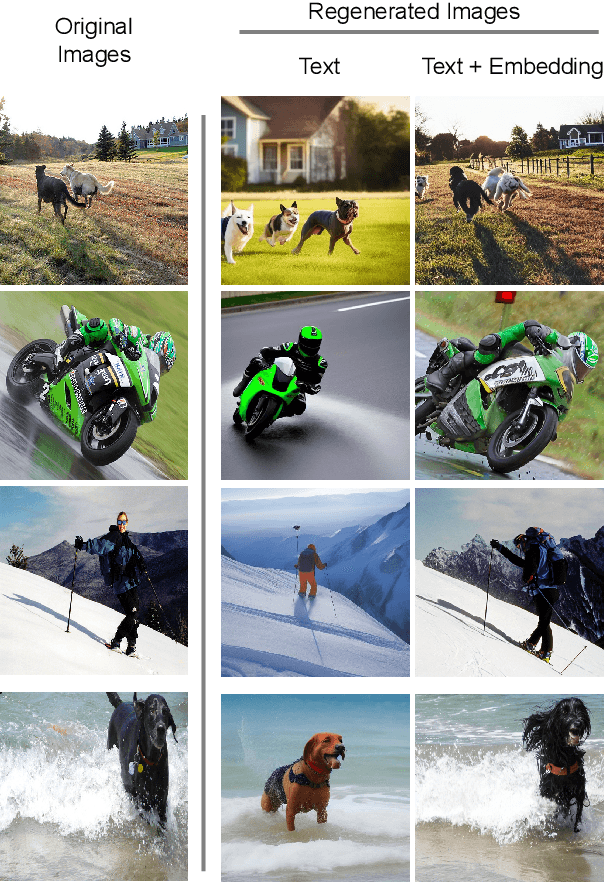

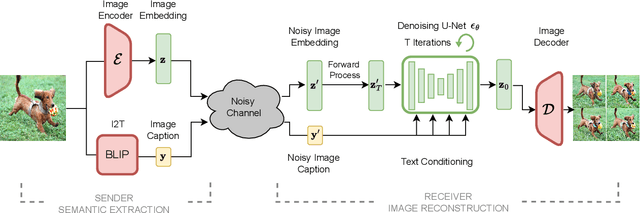

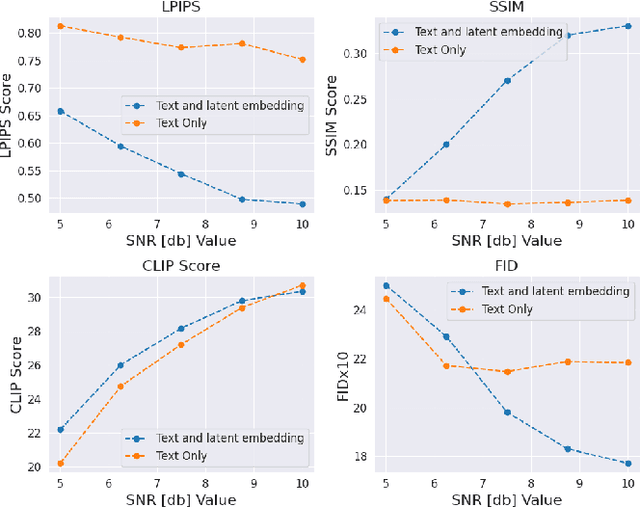

Abstract:In the new paradigm of semantic communication (SC), the focus is on delivering meanings behind bits by extracting semantic information from raw data. Recent advances in data-to-text models facilitate language-oriented SC, particularly for text-transformed image communication via image-to-text (I2T) encoding and text-to-image (T2I) decoding. However, although semantically aligned, the text is too coarse to precisely capture sophisticated visual features such as spatial locations, color, and texture, incurring a significant perceptual difference between intended and reconstructed images. To address this limitation, in this paper, we propose a novel language-oriented SC framework that communicates both text and a compressed image embedding and combines them using a latent diffusion model to reconstruct the intended image. Experimental results validate the potential of our approach, which transmits only 2.09% of the original image size while achieving higher perceptual similarities in noisy communication channels compared to a baseline SC method that communicates only through text. The code is available at https://github.com/ispamm/Img2Img-SC/ .

Opportunistic Information-Bottleneck for Goal-oriented Feature Extraction and Communication

Apr 14, 2024

Abstract:The Information Bottleneck (IB) method is an information-theoretic framework to design a parsimonious and tunable feature-extraction mechanism, such that the extracted features are maximally relevant to a specific learning or inference task. Despite its theoretical value, the IB is based on a functional optimization problem that admits a closed-form solution only in specific cases (e.g., Gaussian distributions), making it difficult to apply in most settings, where it is necessary to resort to complex and approximate variational implementations. To overcome this limitation, we propose an approach to adapt the closed-form solution of the Gaussian IB to a general task. Whatever the inference task to be performed by a (possibly deep) neural network, the key idea is to opportunistically design a regression sub-task, embedded in the original problem, where we can safely assume (joint) multivariate normality between the sub-task's inputs and outputs. In this way we can exploit a fixed and pre-trained neural network to process the input data, using a tunable number of features, to trade data size and complexity for accuracy. This approach is particularly useful whenever a device needs to transmit data (or features) to a server that has to fulfil an inference task, as it provides a principled way to extract the most relevant features for the task to be executed, while looking for the best trade-off between the size of the feature vector to be transmitted, inference accuracy, and complexity. Extensive simulation results attest to the effectiveness of the proposed method and encourage further investigation of this research line.

Generative AI Meets Semantic Communication: Evolution and Revolution of Communication Tasks

Jan 10, 2024

Abstract:While deep generative models are showing exciting abilities in computer vision and natural language processing, their adoption in communication frameworks is still far underestimated. These methods have been shown to evolve solutions to classic communication problems such as denoising, restoration, or compression. Nevertheless, generative models can unveil their real potential in semantic communication frameworks, in which the receiver is not asked to recover the sequence of bits used to encode the transmitted (semantic) message, but only to regenerate content that is semantically consistent with the transmitted message. Disclosing the capabilities of generative models in semantic communication paves the way for a paradigm shift with respect to conventional communication systems, which has great potential to reduce the amount of data traffic and offers a revolutionary versatility to novel tasks and applications that were not even conceivable a few years ago. In this paper, we present a unified perspective of deep generative models in semantic communication and we unveil their revolutionary role in future communication frameworks, enabling emerging applications and tasks. Finally, we analyze the challenges and opportunities in developing generative models specifically tailored for communication systems.

Stability of Graph Convolutional Neural Networks through the lens of small perturbation analysis

Dec 20, 2023

Abstract:In this work, we study the problem of stability of Graph Convolutional Neural Networks (GCNs) under random small perturbations in the underlying graph topology, i.e. under a limited number of insertions or deletions of edges. We derive a novel bound on the expected difference between the outputs of unperturbed and perturbed GCNs. The proposed bound explicitly depends on the magnitude of the perturbation of the eigenpairs of the Laplacian matrix, and the perturbation explicitly depends on which edges are inserted or deleted. Then, we provide a quantitative characterization of the effect of perturbing specific edges on the stability of the network. We leverage tools from small perturbation analysis to express the bounds in closed, albeit approximate, form, in order to enhance interpretability of the results, without the need to compute any perturbed shift operator. Finally, we numerically evaluate the effectiveness of the proposed bound.
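The dependence of eigenvalue perturbations on the specific edge being changed can be illustrated with the textbook first-order formula from small perturbation analysis: adding a weight `eps` on edge (i, j) shifts a simple Laplacian eigenvalue by approximately `eps * (u_k[i] - u_k[j])**2`, where `u_k` is the corresponding eigenvector. The sketch below is only that textbook approximation on an assumed path graph with an assumed small edge weight; it is not the bound derived in the paper, and the function names are hypothetical.

```python
import numpy as np

def path_laplacian(n):
    """Combinatorial Laplacian of a path graph with n nodes."""
    A = np.zeros((n, n))
    for k in range(n - 1):
        A[k, k + 1] = A[k + 1, k] = 1.0
    return np.diag(A.sum(axis=1)) - A

def first_order_shifts(U, i, j, weight=1.0):
    """First-order eigenvalue shifts when edge (i, j) gains `weight`.

    U holds orthonormal eigenvectors as columns; the formula assumes
    simple (non-repeated) eigenvalues. A negative weight models a deletion.
    """
    d = U[i, :] - U[j, :]
    return weight * d ** 2

n, i, j, eps = 5, 0, 4, 1e-3  # assumed toy graph and small perturbation
L = path_laplacian(n)
lam, U = np.linalg.eigh(L)

# Exact perturbed spectrum, computed here only to check the approximation.
b = np.zeros(n)
b[i], b[j] = 1.0, -1.0
lam_pert = np.linalg.eigvalsh(L + eps * np.outer(b, b))

approx = first_order_shifts(U, i, j, eps)
exact = lam_pert - lam
```

The first-order shifts are computed from the unperturbed eigenvectors alone, which mirrors the abstract's point that no perturbed shift operator needs to be computed.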
