Jinho Choi

Stochastic Design of Active RIS-Assisted Satellite Downlinks under Interference, Folded Noise, and EIRP Constraints

Feb 12, 2026

Abstract: Active reconfigurable intelligent surfaces (RISs) can mitigate the double-fading loss of passive reflection in satellite downlinks. However, their gains are limited by random co-channel interference, gain-dependent amplifier noise, and regulatory emission constraints. This paper develops a stochastic reliability framework for active RIS-assisted satellite downlinks by modeling the desired and interfering channels, receiver noise, and RIS amplifier noise as random variables. The resulting instantaneous signal-to-interference-plus-noise ratio (SINR) model explicitly captures folded cascaded amplifier noise and reveals a finite high-gain SINR ceiling. To guarantee a target outage level, we formulate a chance-constrained max-SINR design that jointly optimizes the binary RIS configuration and a common amplification gain. The chance constraint is handled using a sample-average approximation (SAA) with a violation budget. The resulting feasibility problem is solved as a mixed-integer second-order cone program (MISOCP) within a bisection search over the SINR threshold. Practical implementation is enforced by restricting the gain to an admissible range determined by small-signal stability and effective isotropic radiated power (EIRP) limits. We also derive realization-wise SINR envelopes based on eigenvalue and $\ell_1$-norm bounds, which provide interpretable performance limits and fast diagnostics. Monte Carlo results show that these envelopes tightly bound the simulated SINR, reproduce the predicted saturation behavior, and quantify performance degradation as interference increases. Overall, the paper provides a solver-ready, reliability-targeting design methodology whose achieved reliability is validated through out-of-sample Monte Carlo testing under realistic randomness and hardware constraints.
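As a rough illustration of how an SAA chance constraint with a violation budget can sit inside a bisection over the SINR threshold, the sketch below fixes a single candidate design and takes its sampled SINR values as given. This is a simplification: in the abstract the feasibility check is an MISOCP over the binary RIS configuration and the gain, whereas here it is replaced by a plain count. The names `saa_feasible`, `bisect_threshold`, and `max_violations` are hypothetical.

```python
def saa_feasible(sinr_samples, threshold, max_violations):
    """SAA check: at most `max_violations` samples may fall below `threshold`."""
    violations = sum(1 for s in sinr_samples if s < threshold)
    return violations <= max_violations


def bisect_threshold(sinr_samples, max_violations, tol=1e-6):
    """Approximate the largest threshold that passes the SAA check.

    Assumes non-negative SINR samples, so a threshold of 0 is always feasible.
    Feasibility is monotone: raising the threshold can only add violations.
    """
    if not sinr_samples:
        raise ValueError("need at least one SINR sample")
    lo, hi = 0.0, max(sinr_samples)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if saa_feasible(sinr_samples, mid, max_violations):
            lo = mid  # mid is achievable within the budget; search higher
        else:
            hi = mid  # too many samples fall below mid; search lower
    return lo


# Illustrative samples only (not data from the paper).
samples = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
best = bisect_threshold(samples, max_violations=1)
```

With a fixed design the answer is just an empirical quantile of the samples; bisection becomes necessary once each feasibility check is itself an optimization over the design variables.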

Towards Optimal Semantic Communications: Reconsidering the Role of Semantic Feature Channels

Feb 09, 2026

Abstract: This paper investigates the optimization of transmitting the encoder outputs, termed semantic features (SFs), in semantic communication (SC). We begin by modeling the entire communication process from the encoder output to the decoder input, encompassing the physical channel and all transceiver operations, as the SF channel, thereby establishing an encoder-SF channel-decoder pipeline. In contrast to prior studies that assume a fixed SF channel, we note that the SF channel is configurable, as its characteristics are shaped by various transmission and reception strategies, such as power allocation. Based on this observation, we formulate the SF channel optimization problem under a mutual information constraint between the SFs and their reconstructions, and analytically derive the optimal SF channel under a linear encoder-decoder structure and Gaussian source assumption. Building upon this theoretical foundation, we propose a joint optimization framework for the encoder-decoder and SF channel, applicable to both analog and digital SCs. To realize the optimized SF channel, we also propose a physical-layer calibration strategy that enables real-time power control and adaptation to varying channel conditions. Simulation results demonstrate that the proposed SF channel optimization achieves superior task performance under various communication environments.

Duality-Guided Graph Learning for Real-Time Joint Connectivity and Routing in LEO Mega-Constellations

Jan 29, 2026

Abstract: Laser inter-satellite links (LISLs) of low Earth orbit (LEO) mega-constellations enable high-capacity backbone connectivity in non-terrestrial networks, but their management is challenged by limited laser communication terminals, mechanical pointing constraints, and rapidly time-varying network topologies. This paper studies the joint problem of LISL connection establishment, traffic routing, and flow-rate allocation under heterogeneous global traffic demand and gateway availability. We formulate the problem as a mixed-integer optimization over large-scale, time-varying constellation graphs and develop a Lagrangian dual decomposition that interprets per-link dual variables as congestion prices coordinating connectivity and routing decisions. To overcome the prohibitive latency of iterative dual updates, we propose DeepLaDu, a Lagrangian duality-guided deep learning framework that trains a graph neural network (GNN) to directly infer per-link (edge-level) congestion prices from the constellation state in a single forward pass. We enable scalable and stable training using a subgradient-based edge-level loss in DeepLaDu. We analyze the convergence and computational complexity of the proposed approach and evaluate it using realistic Starlink-like constellations with optical and traffic constraints. Simulation results show that DeepLaDu achieves up to 20% higher network throughput than non-joint or heuristic baselines, while matching the performance of iterative dual optimization with orders-of-magnitude lower computation time, making it suitable for real-time operation in dynamic LEO networks.

Joint Laser Inter-Satellite Link Matching and Traffic Flow Routing in LEO Mega-Constellations via Lagrangian Duality

Jan 29, 2026

Abstract: Low Earth orbit (LEO) mega-constellations greatly extend the coverage and resilience of future wireless systems. Within the mega-constellations, laser inter-satellite links (LISLs) enable high-capacity, long-range connectivity. Existing LISL schemes often overlook mechanical limitations of laser communication terminals (LCTs) and non-uniform global traffic profiles caused by uneven user and gateway distributions, leading to suboptimal throughput and underused LCTs/LISLs, especially when each satellite carries only a few LCTs. This paper investigates the joint optimization of LCT connections and traffic routing to maximize the constellation throughput, considering the realistic LCT mechanics and the global traffic profile. The problem is formulated as an NP-hard mixed-integer program coupling LCT connections with flow-rate variables under link capacity constraints. Due to its intractability, we resort to relaxing the coupling constraints via Lagrangian duality, decomposing the problem into a weighted graph-matching for LCT connections, weighted shortest-path routing tasks, and a linear program for rate allocation. Here, Lagrange multipliers reflect congestion weights between satellites, jointly guiding the matching, routing, and rate allocation. Subgradient descent optimizes the multipliers, with provable convergence. Simulations using real-world constellation and terrestrial data show that our methods substantially improve network throughput, by 35% to 145%, over existing non-joint approaches.

Context-Aware Iterative Token Detection and Masked Transmission for Wireless Token Communication

Jan 25, 2026

Abstract: The success of large-scale language models has established tokens as compact and meaningful units for natural-language representation, which motivates token communication over wireless channels, where tokens are considered fundamental units for wireless transmission. We propose a context-aware token communication framework that uses a pretrained masked language model (MLM) as a shared contextual probability model between the transmitter (Tx) and receiver (Rx). At Rx, we develop an iterative token detection method that jointly exploits MLM-guided contextual priors and channel observations based on a Bayesian perspective. At Tx, we additionally introduce a context-aware masking strategy which skips highly predictable token transmission to reduce transmission rate. Simulation results demonstrate that the proposed framework substantially improves reconstructed sentence quality and supports effective rate adaptation under various channel conditions.
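The Bayesian combination of a contextual prior with a channel observation can be sketched for a single token position as follows. This is only a minimal illustration, not the paper's detector: it assumes a real-valued AWGN channel, a hypothetical `codebook` mapping each token to a symbol vector, and a `prior` dictionary standing in for the MLM output. The iterative refinement across positions described in the abstract is not shown.

```python
import math


def token_posterior(prior, received, codebook, noise_var):
    """Posterior over candidate tokens: prior times Gaussian likelihood, normalized.

    prior     : dict token -> contextual probability (stand-in for an MLM output)
    received  : list of floats, the noisy channel observation
    codebook  : dict token -> list of floats (hypothetical symbol mapping)
    noise_var : per-dimension noise variance of the assumed AWGN channel
    """
    log_post = {}
    for tok, p in prior.items():
        if p <= 0.0:
            continue  # tokens the prior rules out stay at zero posterior
        dist2 = sum((r - c) ** 2 for r, c in zip(received, codebook[tok]))
        log_post[tok] = math.log(p) - dist2 / (2.0 * noise_var)
    # Subtract the maximum before exponentiating for numerical stability.
    peak = max(log_post.values())
    weights = {t: math.exp(v - peak) for t, v in log_post.items()}
    total = sum(weights.values())
    return {t: w / total for t, w in weights.items()}


# Illustrative values only.
prior = {"cat": 0.9, "dog": 0.1}
codebook = {"cat": [1.0, 1.0], "dog": [-1.0, -1.0]}
posterior = token_posterior(prior, [-1.0, -1.0], codebook, noise_var=0.1)
detected = max(posterior, key=posterior.get)
```

When the observation is equidistant from all candidate symbols the likelihood is uninformative and the posterior falls back to the prior; a clean observation near one symbol can override a strong prior.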

Reinforcement Learning for Opportunistic Routing in Software-Defined LEO-Terrestrial Systems

Jan 20, 2026

Abstract: The proliferation of large-scale low Earth orbit (LEO) satellite constellations is driving the need for intelligent routing strategies that can effectively deliver data to terrestrial networks under rapidly time-varying topologies and intermittent gateway visibility. Leveraging the global control capabilities of a geostationary (GEO)-resident software-defined networking (SDN) controller, we introduce opportunistic routing, which aims to minimize delivery delay by forwarding packets to any currently available ground gateway rather than to fixed destinations. This makes it a promising approach for achieving low-latency and robust data delivery in highly dynamic LEO networks. Specifically, we formulate a constrained stochastic optimization problem and employ a residual reinforcement learning framework to optimize opportunistic routing for reducing transmission delay. Simulation results over multiple days of orbital data demonstrate that our method achieves significant improvements in queue length reduction compared to classical backpressure and other well-known queueing algorithms.

Learning Time-Varying Graph Signals via Koopman

Nov 09, 2025

Abstract: A wide variety of real-world data, such as sea measurements (e.g., temperatures collected by distributed sensors) and the trajectories of multiple unmanned aerial vehicles (UAVs), can be naturally represented as graphs, often exhibiting non-Euclidean structures. These graph representations may evolve over time, forming time-varying graphs. Effectively modeling and analyzing such dynamic graph data is critical for tasks like predicting graph evolution and reconstructing missing graph data. In this paper, we propose a framework based on the Koopman autoencoder (KAE) to handle time-varying graph data. Specifically, we assume the existence of a hidden non-linear dynamical system, where the state vector corresponds to the graph embedding of the time-varying graph signals. To capture the evolving graph structures, the graph data is first converted into a vector time series through graph embedding, representing the structural information in a finite-dimensional latent space. In this latent space, the KAE is applied to learn the underlying non-linear dynamics governing the temporal evolution of graph features, enabling both prediction and reconstruction tasks.
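For orientation, a commonly used Koopman autoencoder formulation is sketched below; it is an assumption about the general technique, not necessarily the exact model or loss used in this paper. Here $x_t$ denotes the graph embedding at time $t$, $\phi$ and $\psi$ are a hypothetical encoder and decoder, $K$ is a learned linear operator, and $\lambda$ is an illustrative weighting.

```latex
z_t = \phi(x_t), \qquad z_{t+1} \approx K z_t, \qquad \hat{x}_{t+k} = \psi\!\left(K^{k}\,\phi(x_t)\right),
\qquad
\mathcal{L} = \sum_{t} \left\| x_t - \psi(\phi(x_t)) \right\|^2
            + \lambda \sum_{t} \left\| x_{t+1} - \psi\!\left(K\,\phi(x_t)\right) \right\|^2 .
```

The point of the construction is that the dynamics, non-linear in $x_t$, are advanced by a linear map in the latent coordinates $z_t$, so multi-step prediction reduces to repeated multiplication by $K$.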

ConceptScope: Characterizing Dataset Bias via Disentangled Visual Concepts

Oct 30, 2025

Abstract: Dataset bias, where data points are skewed to certain concepts, is ubiquitous in machine learning datasets. Yet, systematically identifying these biases is challenging without costly, fine-grained attribute annotations. We present ConceptScope, a scalable and automated framework for analyzing visual datasets by discovering and quantifying human-interpretable concepts using Sparse Autoencoders trained on representations from vision foundation models. ConceptScope categorizes concepts into target, context, and bias types based on their semantic relevance and statistical correlation to class labels, enabling class-level dataset characterization, bias identification, and robustness evaluation through concept-based subgrouping. We validate that ConceptScope captures a wide range of visual concepts, including objects, textures, backgrounds, facial attributes, emotions, and actions, through comparisons with annotated datasets. Furthermore, we show that concept activations produce spatial attributions that align with semantically meaningful image regions. ConceptScope reliably detects known biases (e.g., background bias in Waterbirds) and uncovers previously unannotated ones (e.g., co-occurring objects in ImageNet), offering a practical tool for dataset auditing and model diagnostics.

Hybrid Semantic-Complementary Transmission for High-Fidelity Image Reconstruction

Jul 23, 2025

Abstract: Recent advances in semantic communication (SC) have introduced neural network (NN)-based transceivers that convey semantic representation (SR) of signals such as images. However, these NNs are trained over diverse image distributions and thus often fail to reconstruct fine-grained image-specific details. To overcome this limited reconstruction fidelity, we propose an extended SC framework, hybrid semantic communication (HSC), which supplements SR with complementary representation (CR) capturing residual image-specific information. The CR is constructed at the transmitter, and is combined with the actual SC outcome at the receiver to yield a high-fidelity recomposed image. While the transmission load of SR is fixed due to its NN-based structure, the load of CR can be flexibly adjusted to achieve a desirable fidelity. This controllability directly influences the final reconstruction error, for which we derive a closed-form expression and the corresponding optimal CR. Simulation results demonstrate that HSC substantially reduces MSE compared to the baseline SC without CR transmission across various channels and NN architectures.

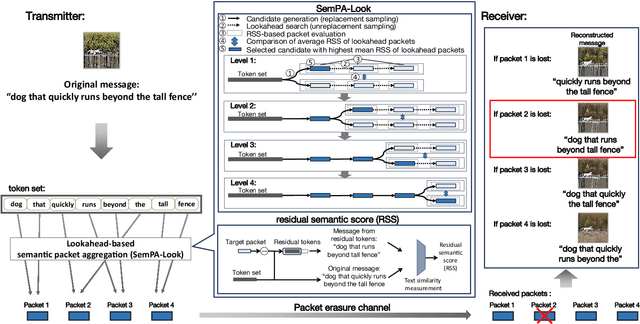

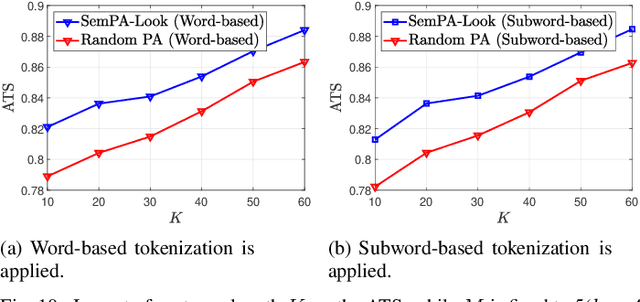

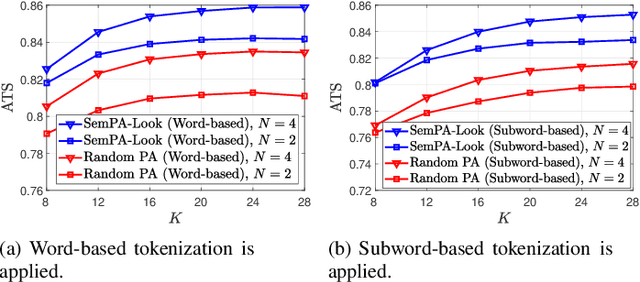

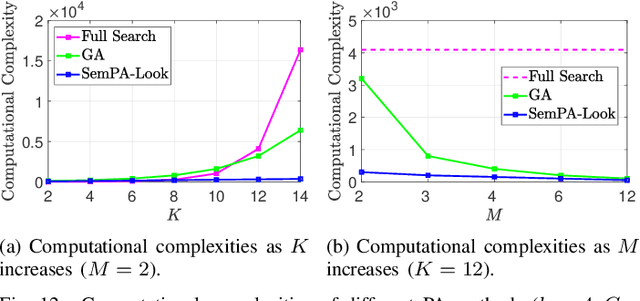

Low-Complexity Semantic Packet Aggregation for Token Communication via Lookahead Search

Jun 24, 2025

Abstract: Tokens are fundamental processing units of generative AI (GenAI) and large language models (LLMs), and token communication (TC) is essential for enabling remote AI-generated content (AIGC) and wireless LLM applications. Unlike traditional bits, each of which is independently treated, the semantics of each token depends on its surrounding context tokens. This inter-token dependency makes TC vulnerable to outage channels, where the loss of a single token can significantly distort the original message semantics. Motivated by this, this paper focuses on optimizing token packetization to maximize the average token similarity (ATS) between the original and received token messages under outage channels. Due to inter-token dependency, this token grouping problem is combinatorial, with complexity growing exponentially with message length. To address this, we propose a novel framework of semantic packet aggregation with lookahead search (SemPA-Look), built on two core ideas. First, it introduces the residual semantic score (RSS) as a token-level surrogate for the message-level ATS, allowing robust semantic preservation even when a certain token packet is lost. Second, instead of full search, SemPA-Look applies a lookahead search-inspired algorithm that samples intra-packet token candidates without replacement (fixed depth), conditioned on inter-packet token candidates sampled with replacement (fixed width), thereby achieving linear complexity. Experiments on a remote AIGC task with the MS-COCO dataset (text captioned images) demonstrate that SemPA-Look achieves high ATS and LPIPS scores comparable to exhaustive search, while reducing computational complexity by up to 40×. Compared to other linear-complexity algorithms such as the genetic algorithm (GA), SemPA-Look achieves 10× lower complexity, demonstrating its practicality for remote AIGC and other TC applications.
