Zhouyou Gu

LocDreamer: World Model-Based Learning for Joint Indoor Tracking and Anchor Scheduling

Feb 09, 2026

Abstract:Accurate, resource-efficient localization and tracking enable numerous location-aware services in next-generation wireless networks. However, existing machine learning-based methods often require large labeled datasets while overlooking spectrum and energy efficiency. To fill this gap, we propose LocDreamer, a world model (WM)-based framework for joint target tracking and scheduling of localization anchors. LocDreamer learns a WM that captures the latent representation of the target motion and localization environment, thereby generating synthetic measurements to imagine arbitrary anchor deployments. These measurements enable imagination-driven training of both the tracking model and the reinforcement learning (RL)-based anchor scheduler that activates only the most informative anchors, which significantly reduces energy and signaling costs while preserving high tracking accuracy. Experiments on a real-world indoor dataset demonstrate that LocDreamer substantially improves data efficiency and generalization, outperforming a conventional Bayesian filter with random scheduling by 37% in tracking accuracy, and achieving 86% of the accuracy of the same model trained directly on real data.

Duality-Guided Graph Learning for Real-Time Joint Connectivity and Routing in LEO Mega-Constellations

Jan 29, 2026

Abstract:Laser inter-satellite links (LISLs) of low Earth orbit (LEO) mega-constellations enable high-capacity backbone connectivity in non-terrestrial networks, but their management is challenged by limited laser communication terminals, mechanical pointing constraints, and rapidly time-varying network topologies. This paper studies the joint problem of LISL connection establishment, traffic routing, and flow-rate allocation under heterogeneous global traffic demand and gateway availability. We formulate the problem as a mixed-integer optimization over large-scale, time-varying constellation graphs and develop a Lagrangian dual decomposition that interprets per-link dual variables as congestion prices coordinating connectivity and routing decisions. To overcome the prohibitive latency of iterative dual updates, we propose DeepLaDu, a Lagrangian duality-guided deep learning framework that trains a graph neural network (GNN) to directly infer per-link (edge-level) congestion prices from the constellation state in a single forward pass. We enable scalable and stable training using a subgradient-based edge-level loss in DeepLaDu. We analyze the convergence and computational complexity of the proposed approach and evaluate it using realistic Starlink-like constellations with optical and traffic constraints. Simulation results show that DeepLaDu achieves up to 20% higher network throughput than non-joint or heuristic baselines, while matching the performance of iterative dual optimization with orders-of-magnitude lower computation time, suitable for real-time operation in dynamic LEO networks.

Joint Laser Inter-Satellite Link Matching and Traffic Flow Routing in LEO Mega-Constellations via Lagrangian Duality

Jan 29, 2026

Abstract:Low Earth orbit (LEO) mega-constellations greatly extend the coverage and resilience of future wireless systems. Within the mega-constellations, laser inter-satellite links (LISLs) enable high-capacity, long-range connectivity. Existing LISL schemes often overlook mechanical limitations of laser communication terminals (LCTs) and non-uniform global traffic profiles caused by uneven user and gateway distributions, leading to suboptimal throughput and underused LCTs/LISLs, especially when each satellite carries only a few LCTs. This paper investigates the joint optimization of LCT connections and traffic routing to maximize the constellation throughput, considering the realistic LCT mechanics and the global traffic profile. The problem is formulated as an NP-hard mixed-integer program coupling LCT connections with flow-rate variables under link capacity constraints. Due to its intractability, we resort to relaxing the coupling constraints via Lagrangian duality, decomposing the problem into a weighted graph-matching for LCT connections, weighted shortest-path routing tasks, and a linear program for rate allocation. Here, Lagrange multipliers reflect congestion weights between satellites, jointly guiding the matching, routing, and rate allocation. Subgradient descent optimizes the multipliers, with provable convergence. Simulations using real-world constellation and terrestrial data show that our methods substantially improve network throughput by up to 35% to 145% over existing non-joint approaches.
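
The multiplier update mentioned in this abstract can be illustrated with a minimal sketch of one projected subgradient step. It assumes the relaxed constraint on each link has the form "flow minus capacity is at most zero"; the function name `update_prices`, the link keys, and the fixed step size are hypothetical and are not taken from the paper.

```python
def update_prices(prices, flows, capacities, step):
    """One projected subgradient step on per-link multipliers.

    Assumption: the relaxed constraint on each link is flow - capacity <= 0,
    so (flow - capacity) is used as the subgradient for that link.
    """
    return {
        # Raise the price on overloaded links, lower it on underused ones,
        # and project back onto the non-negative orthant.
        link: max(0.0, prices[link] + step * (flows[link] - capacities[link]))
        for link in prices
    }


# Toy example with two hypothetical inter-satellite links.
prices = {("s1", "s2"): 0.5, ("s2", "s3"): 0.0}
flows = {("s1", "s2"): 12.0, ("s2", "s3"): 4.0}
capacities = {("s1", "s2"): 10.0, ("s2", "s3"): 10.0}

new_prices = update_prices(prices, flows, capacities, step=0.1)
print(new_prices)
```

In this toy case the overloaded link becomes more expensive while the underused link stays at a price of zero, which is the sense in which the multipliers act as congestion weights.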

Vision-Language-Model-Guided Differentiable Ray Tracing for Fast and Accurate Multi-Material RF Parameter Estimation

Jan 26, 2026

Abstract:Accurate radio-frequency (RF) material parameters are essential for electromagnetic digital twins in 6G systems, yet gradient-based inverse ray tracing (RT) remains sensitive to initialization and costly under limited measurements. This paper proposes a vision-language-model (VLM) guided framework that accelerates and stabilizes multi-material parameter estimation in a differentiable RT (DRT) engine. A VLM parses scene images to infer material categories and maps them to quantitative priors via an ITU-R material table, yielding informed conductivity initializations. The VLM further selects informative transmitter/receiver placements that promote diverse, material-discriminative paths. Starting from these priors, the DRT performs gradient-based refinement using measured received signal strengths. Experiments in NVIDIA Sionna on indoor scenes show 2-4× faster convergence and 10-100× lower final parameter error compared with uniform or random initialization and random placement baselines, achieving sub-0.1% mean relative error with only a few receivers. Complexity analyses indicate per-iteration time scales near-linearly with the number of materials and measurement setups, while VLM-guided placement reduces the measurements required for accurate recovery. Ablations over RT depth and ray counts confirm further accuracy gains without significant per-iteration overhead. Results demonstrate that semantic priors from VLMs effectively guide physics-based optimization for fast and reliable RF material estimation.

Reinforcement Learning for Opportunistic Routing in Software-Defined LEO-Terrestrial Systems

Jan 20, 2026

Abstract:The proliferation of large-scale low Earth orbit (LEO) satellite constellations is driving the need for intelligent routing strategies that can effectively deliver data to terrestrial networks under rapidly time-varying topologies and intermittent gateway visibility. Leveraging the global control capabilities of a geostationary (GEO)-resident software-defined networking (SDN) controller, we introduce opportunistic routing, which aims to minimize delivery delay by forwarding packets to any currently available ground gateways rather than fixed destinations. This makes it a promising approach for achieving low-latency and robust data delivery in highly dynamic LEO networks. Specifically, we formulate a constrained stochastic optimization problem and employ a residual reinforcement learning framework to optimize opportunistic routing for reducing transmission delay. Simulation results over multiple days of orbital data demonstrate that our method achieves significant improvements in queue length reduction compared to classical backpressure and other well-known queueing algorithms.

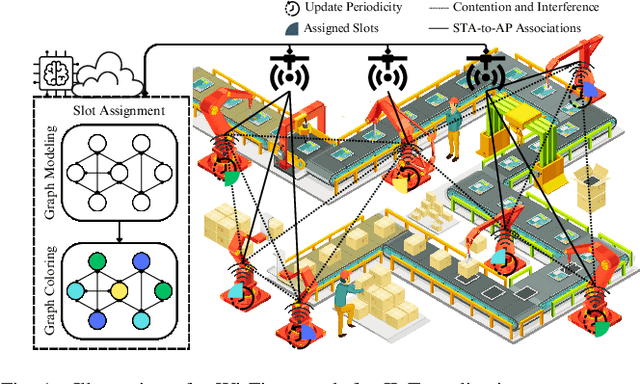

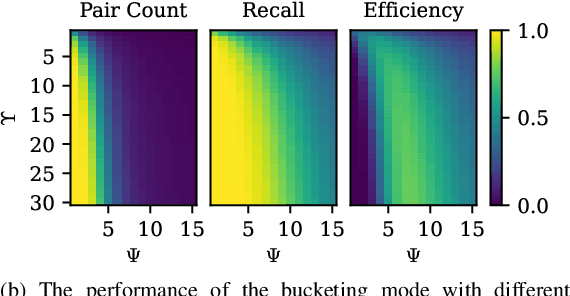

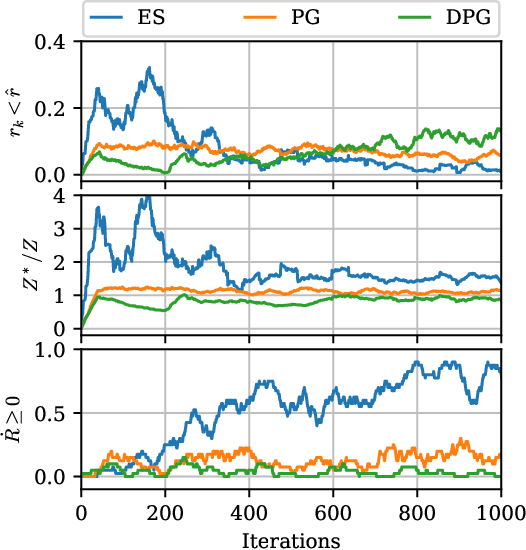

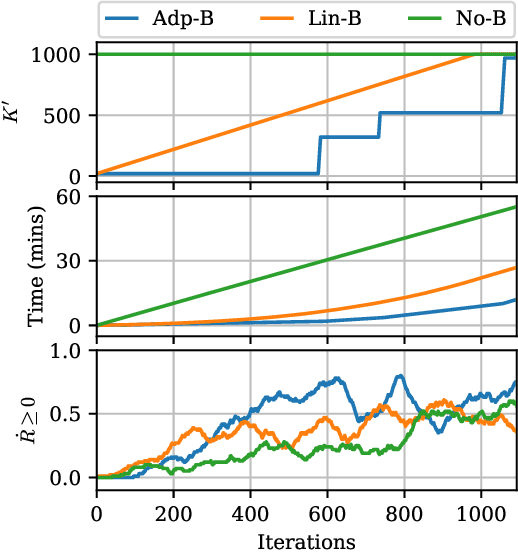

ScNeuGM: Scalable Neural Graph Modeling for Coloring-Based Contention and Interference Management in Wi-Fi 7

Feb 05, 2025

Abstract:Carrier-sense multiple access with collision avoidance in Wi-Fi often leads to contention and interference, thereby increasing packet losses. These challenges have traditionally been modeled as a graph, with stations (STAs) represented as vertices and contention or interference as edges. Graph coloring assigns orthogonal transmission slots to STAs, managing contention and interference, e.g., using the restricted target wake time (RTWT) mechanism introduced in Wi-Fi 7 standards. However, legacy graph models lack flexibility in optimizing these assignments, often failing to minimize slot usage while maintaining reliable transmissions. To address this issue, we propose ScNeuGM, a neural graph modeling (NGM) framework that flexibly trains a neural network (NN) to construct optimal graph models whose coloring corresponds to optimal slot assignments. ScNeuGM is highly scalable to large Wi-Fi networks with massive STA pairs: 1) it utilizes an evolution strategy (ES) to directly optimize the NN parameters based on one network-wise reward signal, avoiding exhaustive edge-wise feedback estimations in all STA pairs; 2) ScNeuGM also leverages a deep hashing function (DHF) to group contending or interfering STA pairs and restricts NGM NN training and inference to pairs within these groups, significantly reducing complexity. Simulations show that the ES-trained NN in ScNeuGM returns near-optimal graphs 4-10 times more often than algorithms requiring edge-wise feedback and uses 25% fewer slots than legacy graph constructions. Furthermore, the DHF in ScNeuGM reduces the training and the inference time of NGM by 4 and 8 times, respectively, and the online slot assignment time by 3 times in large networks, and yields up to 30% fewer packet losses in dynamic scenarios due to the timely assignments.
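
As a rough illustration of how a coloring of a contention/interference graph maps to slot assignments, the sketch below uses a plain greedy coloring heuristic. This heuristic is a stand-in chosen for brevity, not the method of the paper, and the station names and the `greedy_slot_assignment` function are hypothetical.

```python
def greedy_slot_assignment(conflicts):
    """Assign each station the lowest slot not used by its neighbors.

    `conflicts` maps a station to the set of stations it contends or
    interferes with (assumed symmetric). Greedy coloring is a simple
    heuristic and does not guarantee the minimum number of slots.
    """
    slots = {}
    # Visit high-degree stations first, a common greedy ordering.
    order = sorted(conflicts, key=lambda s: len(conflicts[s]), reverse=True)
    for sta in order:
        used = {slots[n] for n in conflicts[sta] if n in slots}
        slot = 0
        while slot in used:
            slot += 1
        slots[sta] = slot
    return slots


# Toy graph: A, B, C mutually conflict; D conflicts only with C.
conflicts = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B", "D"},
    "D": {"C"},
}
slots = greedy_slot_assignment(conflicts)
print(slots)
```

Stations joined by an edge never share a slot, so fewer edges in the graph model generally allow fewer slots, which is why the choice of graph matters for slot usage.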

SIG-SDP: Sparse Interference Graph-Aided Semidefinite Programming for Large-Scale Wireless Time-Sensitive Networking

Jan 20, 2025

Abstract:Wireless time-sensitive networking (WTSN) is essential for the Industrial Internet of Things. We address the problem of minimizing the time slots needed for WTSN transmissions while ensuring reliability subject to interference constraints, an NP-hard task. Existing semidefinite programming (SDP) methods can relax and solve the problem but suffer from high polynomial complexity. We propose a sparse interference graph-aided SDP (SIG-SDP) framework that exploits the interference's sparsity arising from attenuated signals between distant user pairs. First, the framework utilizes the sparsity to establish the upper and lower bounds of the minimum number of slots and uses binary search to locate the minimum within the bounds. Here, for each searched slot number, the framework optimizes a positive semidefinite (PSD) matrix indicating how likely user pairs share the same slot, and the constraint feasibility with the optimized PSD matrix further refines the slot search range. Second, the framework designs a matrix multiplicative weights (MMW) algorithm that accelerates the optimization, achieved by only sparsely adjusting interfering user pairs' elements in the PSD matrix while skipping the non-interfering pairs. We also design an online architecture to deploy the framework to adjust slot assignments based on real-time interference measurements. Simulations show that the SIG-SDP framework converges in near-linear complexity and is highly scalable to large networks. The framework minimizes the number of slots with up to 10 times faster computation and up to 100 times lower packet loss rates than the compared methods. The online architecture demonstrates how the algorithm complexity impacts dynamic networks' performance.
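
The binary search over the slot count described in this abstract can be sketched as follows. The SDP feasibility check is replaced by a hypothetical callback `is_feasible(k)`, and the sketch assumes feasibility is monotone in `k` and that the upper bound itself is feasible; none of these names come from the paper.

```python
def min_feasible_slots(lower, upper, is_feasible):
    """Binary search for the smallest feasible slot count in [lower, upper].

    Assumptions: `is_feasible(k)` is monotone (if k slots suffice, so do
    k + 1), and `upper` is known to be feasible.
    """
    while lower < upper:
        mid = (lower + upper) // 2
        if is_feasible(mid):
            upper = mid       # mid works, so the minimum is at most mid
        else:
            lower = mid + 1   # mid fails, so the minimum is above mid
    return lower


# Toy oracle standing in for the per-slot-number optimization:
# pretend that at least 7 slots are needed.
calls = []

def toy_oracle(k):
    calls.append(k)
    return k >= 7

result = min_feasible_slots(1, 20, toy_oracle)
print(result, len(calls))
```

Each oracle call corresponds to one feasibility check at a candidate slot number, so tighter initial bounds directly reduce the number of checks.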

Opportunistic Scheduling Using Statistical Information of Wireless Channels

Feb 13, 2024

Abstract:This paper considers opportunistic scheduler (OS) design using statistical channel state information (CSI). We apply max-weight schedulers (MWSs) to maximize a utility function of users' average data rates. MWSs schedule the user with the highest weighted instantaneous data rate every time slot. Existing methods require hundreds of time slots to adjust the MWS's weights according to the instantaneous CSI before finding the optimal weights that maximize the utility function. In contrast, our MWS design requires few slots for estimating the statistical CSI. Specifically, we formulate a weight optimization problem using the mean and variance of users' signal-to-noise ratios (SNRs) to construct constraints bounding users' feasible average rates. Here, the utility function is the formulated objective, and the MWS's weights are optimization variables. We develop an iterative solver for the problem and prove that it finds the optimal weights. We also design an online architecture where the solver adaptively generates optimal weights for networks with varying mean and variance of the SNRs. Simulations show that our methods effectively require 4 to 10 times fewer slots to find the optimal weights and achieve 5% to 15% better average rates than the existing methods.
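
The max-weight scheduling rule stated in this abstract, which picks the user with the highest weighted instantaneous data rate in each slot, can be sketched in a few lines. The weights and rates below are made-up illustrative values, and `max_weight_schedule` is a hypothetical name.

```python
def max_weight_schedule(weights, rates):
    """Return the index of the user with the largest weight * rate product.

    `weights[u]` is the scheduler weight of user u and `rates[u]` is its
    instantaneous data rate in the current time slot. Ties go to the
    lowest user index.
    """
    return max(range(len(rates)), key=lambda u: weights[u] * rates[u])


# One time slot with three users (illustrative numbers only).
weights = [1.0, 2.0, 0.5]
rates = [3.0, 2.0, 5.0]
scheduled = max_weight_schedule(weights, rates)
print(scheduled)  # → 1
```

User 2 has the highest raw rate here, but user 1 is scheduled because its weight lifts its product above the others; choosing the weights is therefore what shapes the long-run average rates.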

Graph Representation Learning for Contention and Interference Management in Wireless Networks

Jan 15, 2024

Abstract:Restricted access window (RAW) in Wi-Fi 802.11ah networks manages contention and interference by grouping users and allocating periodic time slots for each group's transmissions. We aim to find the optimal user grouping decisions in RAW to maximize the network's worst-case user throughput. We review existing user grouping approaches and highlight their performance limitations in the above problem. We propose formulating user grouping as a graph construction problem where vertices represent users and edge weights indicate the contention and interference. This formulation leverages the graph's max cut to group users and optimizes edge weights to construct the optimal graph whose max cut yields the optimal grouping decisions. To achieve this optimal graph construction, we design an actor-critic graph representation learning (AC-GRL) algorithm. Specifically, the actor neural network (NN) is trained to estimate the optimal graph's edge weights using path losses between users and access points. A graph cut procedure uses semidefinite programming to solve the max cut efficiently and return the grouping decisions for the given weights. The critic NN approximates user throughput achieved by the above-returned decisions and is used to improve the actor. Additionally, we present an architecture that uses the online-measured throughput and path losses to fine-tune the decisions in response to changes in user populations and their locations. Simulations show that our methods achieve 30% to 80% higher worst-case user throughput than the existing approaches and that the proposed architecture can further improve the worst-case user throughput by 5% to 30% while ensuring timely updates of grouping decisions.

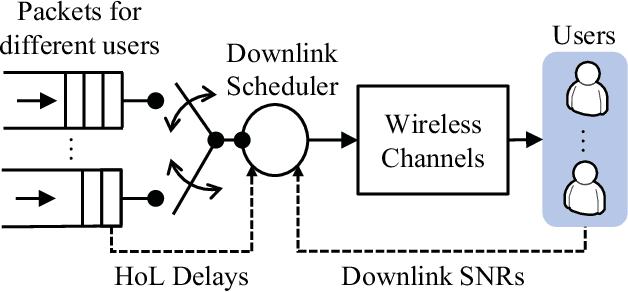

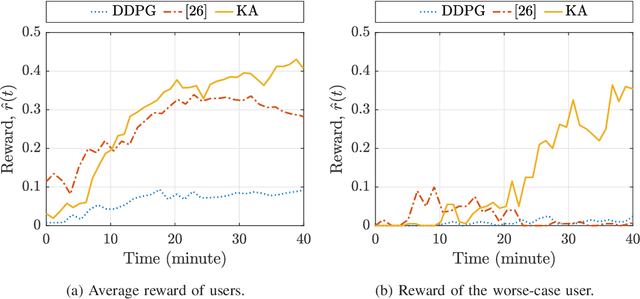

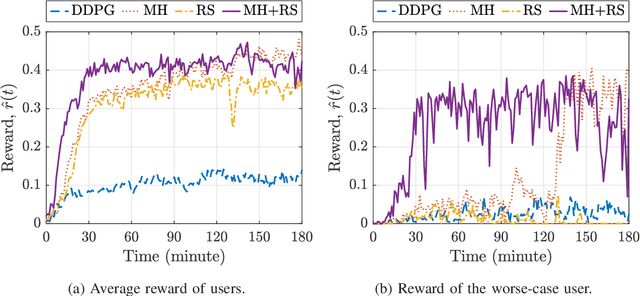

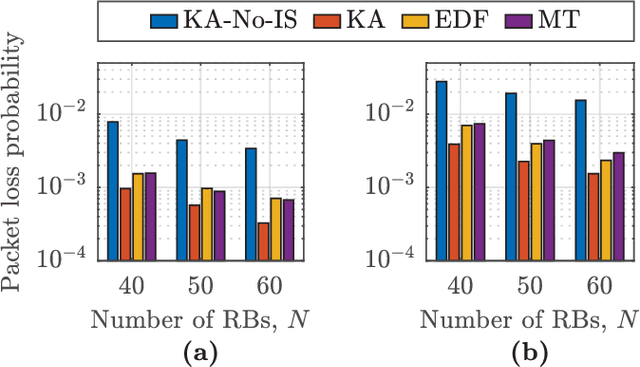

Knowledge-Assisted Deep Reinforcement Learning in 5G Scheduler Design: From Theoretical Framework to Implementation

Sep 17, 2020

Abstract:In this paper, we develop a knowledge-assisted deep reinforcement learning (DRL) algorithm to design wireless schedulers in the fifth-generation (5G) cellular networks with time-sensitive traffic. Since the scheduling policy is a deterministic mapping from channel and queue states to scheduling actions, it can be optimized by using deep deterministic policy gradient (DDPG). We show that a straightforward implementation of DDPG converges slowly, has a poor quality-of-service (QoS) performance, and cannot be implemented in real-world 5G systems, which are non-stationary in general. To address these issues, we propose a theoretical DRL framework, where theoretical models from wireless communications are used to formulate a Markov decision process in DRL. To reduce the convergence time and improve the QoS of each user, we design a knowledge-assisted DDPG (K-DDPG) that exploits expert knowledge of the scheduler design problem, such as the knowledge of the QoS, the target scheduling policy, and the importance of each training sample, determined by the approximation error of the value function and the number of packet losses. Furthermore, we develop an architecture for online training and inference, where K-DDPG initializes the scheduler off-line and then fine-tunes the scheduler online to handle the mismatch between off-line simulations and non-stationary real-world systems. Simulation results show that our approach reduces the convergence time of DDPG significantly and achieves better QoS than existing schedulers (reducing packet losses by 30% to 50%). Experimental results show that with off-line initialization, our approach achieves better initial QoS than random initialization and the online fine-tuning converges in a few minutes.
