Chengjian Sun

A Tutorial of Ultra-Reliable and Low-Latency Communications in 6G: Integrating Theoretical Knowledge into Deep Learning

Sep 13, 2020

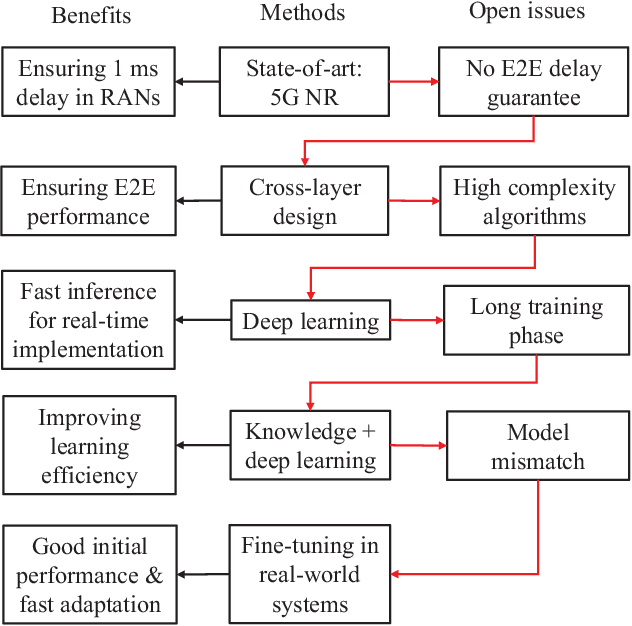

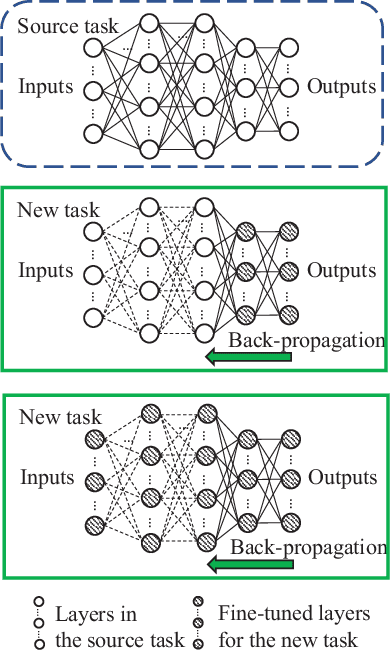

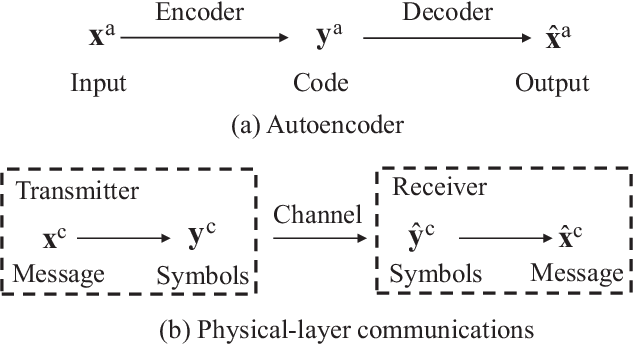

Abstract: As one of the key communication scenarios in the 5th and 6th generation (6G) cellular networks, ultra-reliable and low-latency communications (URLLC) will be central to the development of various emerging mission-critical applications. State-of-the-art mobile communication systems do not fulfill the end-to-end delay and overall reliability requirements of URLLC. A holistic framework that takes into account latency, reliability, availability, scalability, and decision-making under uncertainty is lacking. Driven by recent breakthroughs in deep neural networks, deep learning algorithms have been considered as promising ways of developing enabling technologies for URLLC in future 6G networks. This tutorial illustrates how to integrate theoretical knowledge (models, analysis tools, and optimization frameworks) of wireless communications into different kinds of deep learning algorithms for URLLC. We first introduce the background of URLLC and review promising network architectures and deep learning frameworks in 6G. To better illustrate how to improve learning algorithms with theoretical knowledge, we revisit model-based analysis tools and cross-layer optimization frameworks for URLLC. Following that, we examine the potential of applying supervised/unsupervised deep learning and deep reinforcement learning in URLLC and summarize related open problems. Finally, we provide simulation and experimental results to validate the effectiveness of different learning algorithms and discuss future directions.

Optimizing Ultra-Reliable and Low-Latency Communication Systems with Unsupervised Learning

May 30, 2020

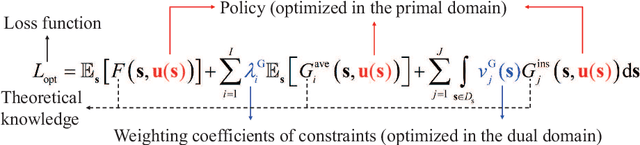

Abstract: Supervised learning has been introduced to wireless communications to solve complex problems due to its wide applicability. However, labels for supervision can be expensive to generate or even unavailable in wireless communications, and constraints cannot be explicitly guaranteed in a supervised manner. In this work, we introduce an unsupervised learning framework, which exploits mathematical models and the knowledge of optimization to search for an unknown policy without supervision. Such a framework is applicable to both variable and functional optimization problems with instantaneous and long-term constraints. We take two resource allocation problems in ultra-reliable and low-latency communications as examples, which involve one and two timescales, respectively. Unsupervised learning is adopted to find approximately optimal solutions of the problems. Simulation results show that the learned solution can achieve the same bandwidth efficiency as the optimal solution in symmetric scenarios. By comparing the learned solution with existing policies, our results illustrate the benefit of exploiting frequency diversity and multi-user diversity in improving the bandwidth efficiency in both symmetric and asymmetric scenarios. We further illustrate that, with pre-training, the unsupervised learning algorithm converges rapidly.

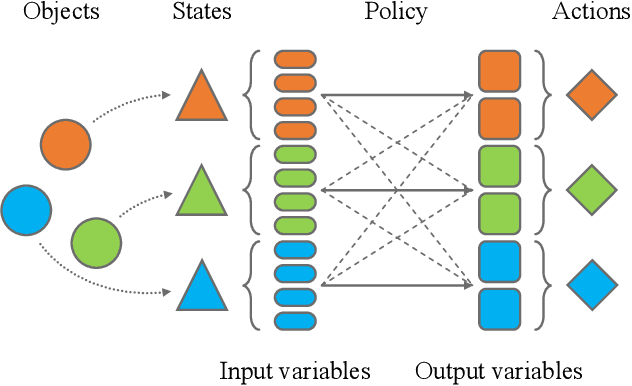

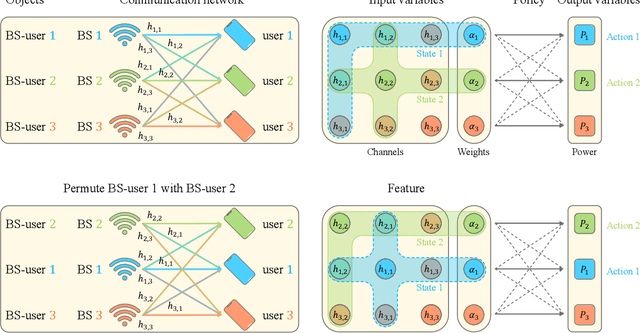

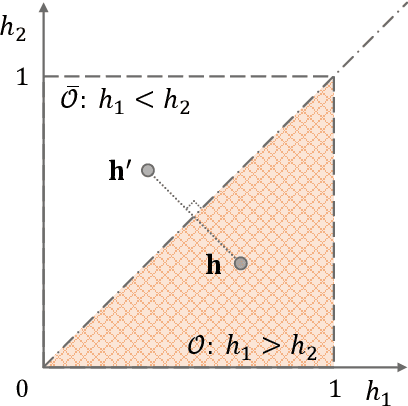

Data Representation for Deep Learning with Prior Knowledge of Symmetric Wireless Tasks

May 27, 2020

Abstract: Deep neural networks (DNNs) have been applied to deal with various wireless problems, which usually need a large number of samples for training. Considering that wireless environments are highly dynamic and gathering data is expensive, reducing sample complexity is critical for DNN-based wireless networks. Incorporating domain knowledge into learning is a promising way of reducing the number of training samples. Yet how to invoke prior knowledge of wireless tasks for efficient data representation remains largely unexplored. In this article, we first briefly summarize several approaches to address training complexity. Then, we show that a symmetric property, permutation equivariance, widely exists in wireless tasks. We introduce a simple method to compress the training set by exploiting such a generic prior, which is to jointly sort the input and output of the DNNs. We use interference coordination and caching policy optimization to illustrate how to apply this method of data representation, i.e., ranking, and how much the sample complexity can be reduced. Simulation results demonstrate that, with ranking, the number of training samples required to achieve the same learning performance as the traditional data representation can be reduced by a factor of 10 to 200.
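The ranking idea in this abstract can be illustrated with a minimal sketch. It is not the authors' code: the task `toy_policy` (sharing a unit budget in proportion to the input) and the helper `rank_sample` are hypothetical names chosen only because the toy task is permutation equivariant. The sketch shows that two samples that differ only by a permutation collapse to the same training pair once input and output are sorted jointly.

```python
import numpy as np

def toy_policy(x):
    """Hypothetical permutation-equivariant task: split a unit budget in proportion to x."""
    return x / x.sum()

def rank_sample(x, y):
    """Jointly sort input and output by descending input value (the 'ranking' step)."""
    order = np.argsort(-x, kind="stable")
    return x[order], y[order]

rng = np.random.default_rng(0)
x = rng.uniform(0.1, 1.0, size=4)   # illustrative input, e.g. per-user channel gains
perm = rng.permutation(4)
x_perm = x[perm]                    # the same sample with users reordered

ranked_a = rank_sample(x, toy_policy(x))
ranked_b = rank_sample(x_perm, toy_policy(x_perm))

# After ranking, both orderings yield the same (input, output) training pair.
same_after_ranking = (np.allclose(ranked_a[0], ranked_b[0])
                      and np.allclose(ranked_a[1], ranked_b[1]))
```

Because every permutation of a sample maps to one ranked representative, a training set built this way needs to cover only the sorted region of the input space.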

Optimizing Wireless Systems Using Unsupervised and Reinforced-Unsupervised Deep Learning

Jan 03, 2020

Abstract: Resource allocation and transceivers in wireless networks are usually designed by solving optimization problems subject to specific constraints, which can be formulated as variable or functional optimization. If the objective and constraint functions of a variable optimization problem can be derived, standard numerical algorithms can be applied to find the optimal solution, which, however, incur high computational cost when the dimension of the variable is high. To reduce the on-line computational complexity, learning the optimal solution as a function of the environment's status by deep neural networks (DNNs) is an effective approach. DNNs can be trained under the supervision of optimal solutions, which, however, is not applicable to scenarios without models or to functional optimization where the optimal solutions are hard to obtain. If the objective and constraint functions are unavailable, reinforcement learning can be applied to find the solution of a functional optimization problem, which, however, is not tailored to optimization problems in wireless networks. In this article, we introduce unsupervised and reinforced-unsupervised learning frameworks for solving both variable and functional optimization problems without the supervision of the optimal solutions. When the mathematical model of the environment is completely known, whether or not the distribution of the environment's status is known, we can invoke an unsupervised learning algorithm. When the mathematical model of the environment is incomplete, we introduce reinforced-unsupervised learning algorithms that learn the model by interacting with the environment. Our simulation results confirm the applicability of these learning frameworks by taking a user association problem as an example.
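As a rough illustration of learning a policy without labels when the model is known, the sketch below trains a policy by descending a Lagrangian while a multiplier ascends on the constraint violation. Everything here is an assumption made for illustration, not the paper's setup: the toy problem (minimize the mean of p(h)^2 subject to the mean of h·p(h) being at least 1), the one-weight policy p(h) = theta·h standing in for a DNN, and the primal-dual update rule itself.

```python
import numpy as np

rng = np.random.default_rng(0)
h = rng.uniform(0.5, 1.5, size=1000)   # hypothetical unlabeled environment samples

theta = 0.0   # single weight of the toy policy p(h) = theta * h (stand-in for a DNN)
lam = 0.0     # Lagrange multiplier for the long-term constraint
lr = 0.05     # step size shared by the primal and dual updates

for _ in range(3000):
    p = theta * h
    # Lagrangian: mean(p**2) + lam * (1 - mean(h * p)); no optimal labels are used.
    grad_theta = np.mean(2.0 * p * h) - lam * np.mean(h * h)
    constraint_gap = 1.0 - np.mean(h * p)        # > 0 means the constraint is violated
    theta -= lr * grad_theta                     # primal descent on the policy weight
    lam = max(0.0, lam + lr * constraint_gap)    # dual ascent, projected onto lam >= 0

# For this toy problem the constrained optimum is available in closed form.
theta_closed_form = 1.0 / np.mean(h * h)
```

In this toy case the learned weight can be checked against `theta_closed_form`; with a DNN policy no such closed form exists, which is where training without supervision becomes useful.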

Proactive Optimization with Unsupervised Learning

Oct 29, 2019

Abstract: Proactive resource allocation, such as proactive caching at the wireless edge, has shown promising gains in boosting network performance and improving user experience by leveraging big data and machine learning. Earlier research efforts focus on optimizing proactive policies under the assumption that the future knowledge required for optimization is perfectly known. Recently, various machine learning techniques have been proposed to predict the required knowledge such as file popularity, which is treated as the true value for the optimization. In this paper, we introduce a proactive optimization framework for optimizing proactive resource allocation, where the future knowledge is implicitly predicted from historical observations by the optimization. To this end, we formulate a proactive optimization problem by taking proactive caching and bandwidth allocation as an example, where the objective function is the conditional expectation of successful offloading probability taken over the unknown popularity given the historically observed popularity. To solve such a problem that depends on the conditional distribution of future information given current and past information, we transform the problem equivalently to a problem depending on the joint distribution of future and historical popularity. Then, we resort to stochastic optimization to learn the joint distribution and resort to unsupervised learning with neural networks to learn the optimal policy. The neural networks can be trained off-line, or in an on-line manner to adapt to the dynamic environment. Simulation results using a real dataset validate that the proposed framework can indeed predict the file popularity implicitly by optimization.

Model-Free Unsupervised Learning for Optimization Problems with Constraints

Jul 30, 2019

Abstract: In many optimization problems in wireless communications, the expressions of the objective function or constraints are hard or even impossible to derive, which makes the solutions difficult to find. In this paper, we propose a model-free learning framework to solve constrained optimization problems without the supervision of the optimal solution. Neural networks are used respectively for parameterizing the function to be optimized, parameterizing the Lagrange multiplier associated with instantaneous constraints, and approximating the unknown objective function or constraints. We provide learning algorithms to train all the neural networks simultaneously, and reveal the connections of the proposed framework with reinforcement learning. Numerical and simulation results validate the proposed framework and demonstrate the efficiency of model-free learning by taking a power control problem as an example.

Unsupervised Deep Learning for Ultra-reliable and Low-latency Communications

Jun 05, 2019

Abstract: In this paper, we study how to use unsupervised deep learning to solve resource allocation problems in ultra-reliable and low-latency communications, which often yield functional optimization problems with quality-of-service (QoS) constraints. We take a joint power and bandwidth allocation problem as an example, which minimizes the total bandwidth required to guarantee the QoS of each user in terms of the delay bound and overall packet loss probability. The global optimal solution is found in a symmetric scenario. A neural network is introduced to find an approximately optimal solution in general scenarios, where the QoS is ensured by using the property that the optimal solution should satisfy as the "supervision signal". Simulation results show that the learning-based solution performs the same as the optimal solution in the symmetric scenario, and can save around 40% bandwidth with respect to the state-of-the-art policy.

Learning to Optimize with Unsupervised Learning: Training Deep Neural Networks for URLLC

May 27, 2019

Abstract: Learning the optimized solution as a function of environmental parameters is effective in solving numerical optimization in real time for time-sensitive applications. Existing works on learning to optimize train deep neural networks (DNNs) with labels, and the learnt solutions are inaccurate and thus cannot be employed to ensure stringent quality of service. In this paper, we propose a framework to learn the latent function with unsupervised deep learning, where the property that the optimal solution should satisfy is used as the "supervision signal" implicitly. The framework is applicable to both functional and variable optimization problems with constraints. We take a variable optimization problem in ultra-reliable and low-latency communications as an example, which demonstrates that the ultra-high reliability can be supported by the DNN without supervision labels.
