Data Representation for Deep Learning with Prior Knowledge of Symmetric Wireless Tasks


May 27, 2020

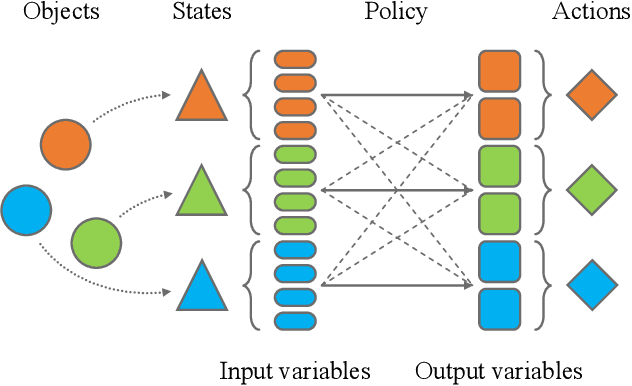

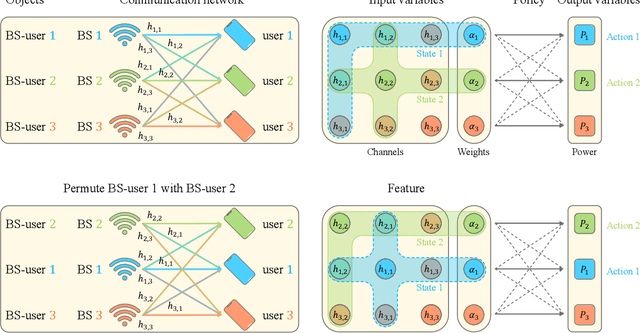

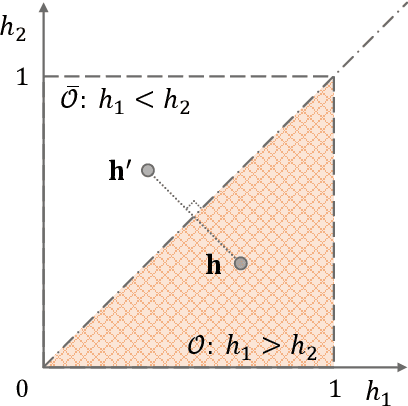

Deep neural networks (DNNs) have been applied to various wireless problems, but they usually require a large number of training samples. Because wireless environments are highly dynamic and gathering data is expensive, reducing sample complexity is critical for DNN-based wireless networks. Incorporating domain knowledge into learning is a promising way to reduce the number of training samples, yet how to invoke prior knowledge of wireless tasks for efficient data representation remains largely unexplored. In this article, we first briefly summarize several approaches to reducing training complexity. We then show that a symmetric property, permutation equivariance, widely exists in wireless tasks. We introduce a simple method that exploits this generic prior to compress the training set: jointly sorting the input and output of the DNNs. We use interference coordination and caching policy optimization to illustrate how to apply this data representation, i.e., ranking, and how much it reduces sample complexity. Simulation results demonstrate that, to achieve the same learning performance as the traditional data representation, ranking reduces the number of required training samples by a factor of $10$ to $200$.
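A task $\mathbf{y} = f(\mathbf{x})$ is permutation equivariant if $f(\boldsymbol{\Pi}\mathbf{x}) = \boldsymbol{\Pi} f(\mathbf{x})$ for every permutation matrix $\boldsymbol{\Pi}$, so all permuted copies of a sample carry the same information. The sketch below illustrates the ranking idea under stated assumptions; it is not the authors' code. The task `top_k_caching` (cache the `k` files with the largest popularity) is a hypothetical one-dimensional stand-in for the caching problem, sorting in descending order is an assumed convention, and `ranked_model` is a hypothetical placeholder for a DNN trained only on ranked samples.

```python
from itertools import permutations
from typing import Callable, List, Sequence, Tuple


def ranking_perm(x: Sequence[float]) -> List[int]:
    """Indices of x in descending order of value (stable: ties keep index order)."""
    return sorted(range(len(x)), key=lambda i: x[i], reverse=True)


def permute(v: Sequence[float], perm: Sequence[int]) -> List[float]:
    """Reorder v so that position r holds v[perm[r]]."""
    return [v[i] for i in perm]


def unpermute(v_sorted: Sequence[float], perm: Sequence[int]) -> List[float]:
    """Undo permute(): send the r-th ranked entry back to index perm[r]."""
    out = [0.0] * len(perm)
    for rank, idx in enumerate(perm):
        out[idx] = v_sorted[rank]
    return out


def rank_sample(x: Sequence[float], y: Sequence[float]) -> Tuple[tuple, tuple]:
    """Jointly sort one (input, output) pair with the permutation that sorts the input."""
    perm = ranking_perm(x)
    return tuple(permute(x, perm)), tuple(permute(y, perm))


def top_k_caching(popularity: Sequence[float], k: int) -> List[float]:
    """Hypothetical permutation-equivariant task: cache the k most popular files."""
    chosen = set(ranking_perm(popularity)[:k])
    return [1.0 if i in chosen else 0.0 for i in range(len(popularity))]


def predict_with_ranking(
    ranked_model: Callable[[List[float]], List[float]], x: Sequence[float]
) -> List[float]:
    """Inference: rank the input, apply the model learned on ranked data, undo the ranking."""
    perm = ranking_perm(x)
    return unpermute(ranked_model(permute(x, perm)), perm)


K = 2
BASE = [0.1, 0.4, 0.5]

# Hypothetical stand-in for a DNN trained on ranked samples: for a sorted
# input, the top-K policy always caches the first K positions.
ranked_model = lambda xs: [1.0] * K + [0.0] * (len(xs) - K)

if __name__ == "__main__":
    # Traditional representation: every permutation of the input is a distinct sample.
    raw = [(list(p), top_k_caching(list(p), K)) for p in permutations(BASE)]
    # Ranked representation: all permuted copies collapse to one sample.
    ranked = {rank_sample(x, y) for x, y in raw}
    print(len(raw), len(ranked))  # 6 1
    # The ranked model recovers the correct policy for every raw input.
    print(all(predict_with_ranking(ranked_model, x) == y for x, y in raw))  # True
```

In this toy case, the six raw samples obtained by permuting `BASE` reduce to a single ranked sample, which is the training-set compression that ranking provides. At inference, the permutation that sorts the input is inverted on the model output, so a model that only ever sees ranked inputs still returns a policy in the original file order.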
