Branka Vucetic

Short Wins Long: Short Codes with Language Model Semantic Correction Outperform Long Codes

May 13, 2025

Abstract:This paper presents a novel semantic-enhanced decoding scheme for transmitting natural language sentences with multiple short block codes over noisy wireless channels. After ASCII source coding, the natural language sentence message is divided into segments, where each is encoded with short block channel codes independently before transmission. At the receiver, each short block of codewords is decoded in parallel, followed by a semantic error correction (SEC) model to reconstruct corrupted segments semantically. We design and train the SEC model based on Bidirectional and Auto-Regressive Transformers (BART). Simulations demonstrate that the proposed scheme can significantly outperform encoding the sentence with one conventional long LDPC code, in terms of block error rate (BLER), semantic metrics, and decoding latency. Finally, we propose a semantic hybrid automatic repeat request (HARQ) scheme to further enhance the error performance, which selectively requests retransmission depending on semantic uncertainty.
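The segmentation step described in this abstract can be illustrated with a minimal sketch. It assumes 7-bit ASCII, a 56-bit segment length, and zero padding of the last segment; none of these values come from the abstract, and the function names are hypothetical. Channel coding and the SEC model are left out.

```python
def sentence_to_segments(sentence: str, bits_per_segment: int = 56) -> list[str]:
    """ASCII-encode a sentence and split the bit string into equal-length segments.

    Assumptions for illustration only: 7 bits per character, 56-bit segments,
    and zero padding of the final segment.
    """
    if any(ord(ch) > 127 for ch in sentence):
        raise ValueError("sentence must contain ASCII characters only")
    bits = "".join(format(ord(ch), "07b") for ch in sentence)
    # Pad with zeros so the length is a multiple of the segment size.
    bits += "0" * ((-len(bits)) % bits_per_segment)
    return [bits[i:i + bits_per_segment] for i in range(0, len(bits), bits_per_segment)]


def segments_to_sentence(segments: list[str], num_chars: int) -> str:
    """Invert sentence_to_segments, given the original character count."""
    bits = "".join(segments)
    return "".join(chr(int(bits[i:i + 7], 2)) for i in range(0, 7 * num_chars, 7))


sentence = "short wins long"
segments = sentence_to_segments(sentence)
recovered = segments_to_sentence(segments, len(sentence))
```

In the scheme the abstract describes, each segment would then be protected by its own short block code, so an error in one block leaves the other segments intact for the semantic correction stage.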

SIG-SDP: Sparse Interference Graph-Aided Semidefinite Programming for Large-Scale Wireless Time-Sensitive Networking

Jan 20, 2025

Abstract:Wireless time-sensitive networking (WTSN) is essential for Industrial Internet of Things. We address the problem of minimizing time slots needed for WTSN transmissions while ensuring reliability subject to interference constraints -- an NP-hard task. Existing semidefinite programming (SDP) methods can relax and solve the problem but suffer from high polynomial complexity. We propose a sparse interference graph-aided SDP (SIG-SDP) framework that exploits the interference's sparsity arising from attenuated signals between distant user pairs. First, the framework utilizes the sparsity to establish the upper and lower bounds of the minimum number of slots and uses binary search to locate the minimum within the bounds. Here, for each searched slot number, the framework optimizes a positive semidefinite (PSD) matrix indicating how likely user pairs share the same slot, and the constraint feasibility with the optimized PSD matrix further refines the slot search range. Second, the framework designs a matrix multiplicative weights (MMW) algorithm that accelerates the optimization, achieved by only sparsely adjusting interfering user pairs' elements in the PSD matrix while skipping the non-interfering pairs. We also design an online architecture to deploy the framework to adjust slot assignments based on real-time interference measurements. Simulations show that the SIG-SDP framework converges in near-linear complexity and is highly scalable to large networks. The framework minimizes the number of slots with up to 10 times faster computation and up to 100 times lower packet loss rates than compared methods. The online architecture demonstrates how the algorithm complexity impacts dynamic networks' performance.
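The bounded binary search over the slot count can be sketched as follows. This is an illustration under stated assumptions, not the SIG-SDP method: the SDP feasibility step is swapped for an exact backtracking check that only works on tiny conflict graphs, the upper bound is the standard maximum-degree-plus-one colouring bound, and all names (`min_slots`, `can_schedule`, `conflicts`) are hypothetical.

```python
from typing import Callable


def min_slots(lower: int, upper: int, feasible: Callable[[int], bool]) -> int:
    """Smallest k in [lower, upper] with feasible(k) true.

    Assumes feasibility is monotone in k and that feasible(upper) holds.
    """
    while lower < upper:
        mid = (lower + upper) // 2
        if feasible(mid):
            upper = mid       # mid slots suffice, try fewer
        else:
            lower = mid + 1   # mid slots are not enough
    return lower


def can_schedule(conflicts: dict[int, set[int]], k: int) -> bool:
    """Exact check (backtracking) that interfering pairs can avoid sharing a slot.

    Stand-in for the SDP-based feasibility step; exponential in the worst case.
    """
    nodes = sorted(conflicts, key=lambda u: -len(conflicts[u]))
    slot: dict[int, int] = {}

    def assign(i: int) -> bool:
        if i == len(nodes):
            return True
        u = nodes[i]
        for s in range(k):
            if all(slot.get(v) != s for v in conflicts[u]):
                slot[u] = s
                if assign(i + 1):
                    return True
                del slot[u]
        return False

    return assign(0)


# Sparse conflict graph: a 5-cycle (0-1-2-3-4-0) plus a pair 5 that interferes only with 0.
conflicts = {0: {1, 4, 5}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3, 0}, 5: {0}}
lower = 2                                               # any interfering pair needs two slots
upper = max(len(nbrs) for nbrs in conflicts.values()) + 1  # max degree + 1 always suffices
slots_needed = min_slots(lower, upper, lambda k: can_schedule(conflicts, k))
```

The sparser the interference graph, the tighter the degree-based upper bound, which is one way sparsity shortens the search range.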

GNN-based Auto-Encoder for Short Linear Block Codes: A DRL Approach

Dec 03, 2024

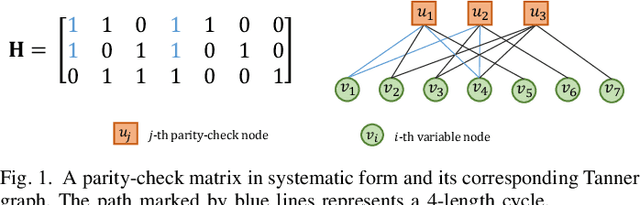

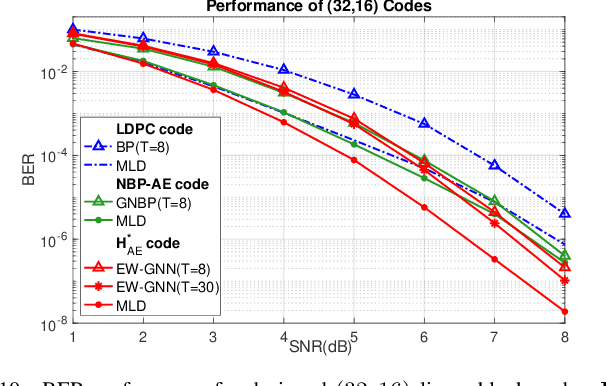

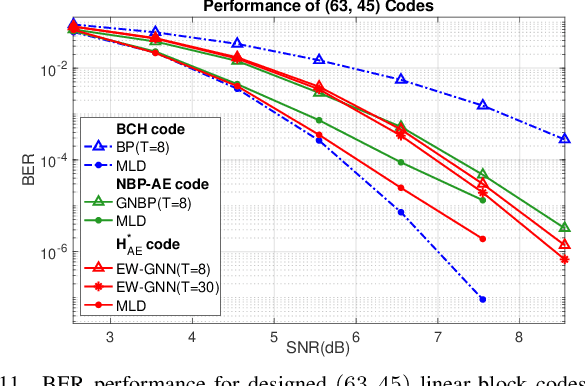

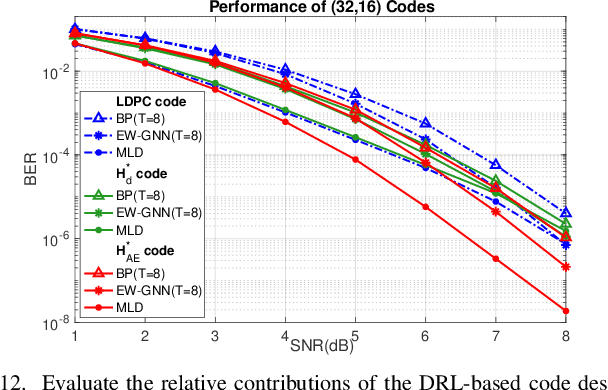

Abstract:This paper presents a novel auto-encoder based end-to-end channel encoding and decoding scheme. It integrates deep reinforcement learning (DRL) and graph neural networks (GNN) in code design by modeling the generation of code parity-check matrices as a Markov Decision Process (MDP), to optimize key coding performance metrics such as error-rates and code algebraic properties. An edge-weighted GNN (EW-GNN) decoder is proposed, which operates on the Tanner graph with an iterative message-passing structure. Once trained on a single linear block code, the EW-GNN decoder can be directly used to decode other linear block codes of different code lengths and code rates. An iterative joint training of the DRL-based code designer and the EW-GNN decoder is performed to optimize the end-to-end encoding and decoding process. Simulation results show the proposed auto-encoder significantly surpasses several traditional coding schemes at short block lengths, including low-density parity-check (LDPC) codes with the belief propagation (BP) decoding and the maximum-likelihood decoding (MLD), and BCH codes with BP decoding, offering superior error-correction capabilities while maintaining low decoding complexity.

Approaching Maximum Likelihood Performance via End-to-End Learning in MU-MIMO Systems

Nov 25, 2024

Abstract:Multi-user multiple-input multiple-output (MU-MIMO) systems allow multiple users to share the same wireless spectrum. Each user transmits one symbol drawn from an M-ary quadrature amplitude modulation (M-QAM) constellation set, and the resulting multi-user interference (MUI) is cancelled at the receiver. End-to-End (E2E) learning has recently been proposed to jointly design the constellation set for a modulator and symbol detector for a single user under an additive white Gaussian noise (AWGN) channel by using deep neural networks (DNN), with a symbol-error-rate (SER) performance exceeding that of maximum likelihood (ML) M-QAM detectors. In this paper, we extend the E2E concept to the MU-MIMO systems, where a DNN-based modulator that generates M learned constellation points and a graph expectation propagation network (GEPNet) detector that cancels MUI are jointly optimised with respect to SER performance loss. Simulation results demonstrate that the proposed E2E with learned constellation outperforms GEPNet with 16-QAM by around 5 dB in terms of SER in a high MUI environment and even surpasses ML with 16-QAM in a low MUI condition, both with no additional computational complexity.

Wireless Human-Machine Collaboration in Industry 5.0

Oct 21, 2024

Abstract:Wireless Human-Machine Collaboration (WHMC) represents a critical advancement for Industry 5.0, enabling seamless interaction between humans and machines across geographically distributed systems. As the WHMC systems become increasingly important for achieving complex collaborative control tasks, ensuring their stability is essential for practical deployment and long-term operation. Stability analysis certifies how the closed-loop system will behave under model randomness, which is essential for systems operating with wireless communications. However, the fundamental stability analysis of the WHMC systems remains an unexplored challenge due to the intricate interplay between the stochastic nature of wireless communications, dynamic human operations, and the inherent complexities of control system dynamics. This paper establishes a fundamental WHMC model incorporating dual wireless loops for machine and human control. Our framework accounts for practical factors such as short-packet transmissions, fading channels, and advanced HARQ schemes. We model human control lag as a Markov process, which is crucial for capturing the stochastic nature of human interactions. Building on this model, we propose a stochastic cycle-cost-based approach to derive a stability condition for the WHMC system, expressed in terms of wireless channel statistics, human dynamics, and control parameters. Our findings are validated through extensive numerical simulations and a proof-of-concept experiment, where we developed and tested a novel wireless collaborative cart-pole control system. The results confirm the effectiveness of our approach and provide a robust framework for future research on WHMC systems in more complex environments.

Communication-Control Codesign for Large-Scale Wireless Networked Control Systems

Oct 15, 2024

Abstract:Wireless Networked Control Systems (WNCSs) are essential to Industry 4.0, enabling flexible control in applications, such as drone swarms and autonomous robots. The interdependence between communication and control requires integrated design, but traditional methods treat them separately, leading to inefficiencies. Current codesign approaches often rely on simplified models, focusing on single-loop or independent multi-loop systems. However, large-scale WNCSs face unique challenges, including coupled control loops, time-correlated wireless channels, trade-offs between sensing and control transmissions, and significant computational complexity. To address these challenges, we propose a practical WNCS model that captures correlated dynamics among multiple control loops with spatially distributed sensors and actuators sharing limited wireless resources over multi-state Markov block-fading channels. We formulate the codesign problem as a sequential decision-making task that jointly optimizes scheduling and control inputs across estimation, control, and communication domains. To solve this problem, we develop a Deep Reinforcement Learning (DRL) algorithm that efficiently handles the hybrid action space, captures communication-control correlations, and ensures robust training despite sparse cross-domain variables and floating control inputs. Extensive simulations show that the proposed DRL approach outperforms benchmarks and solves the large-scale WNCS codesign problem, providing a scalable solution for industrial automation.

Floor-Plan-aided Indoor Localization: Zero-Shot Learning Framework, Data Sets, and Prototype

May 22, 2024

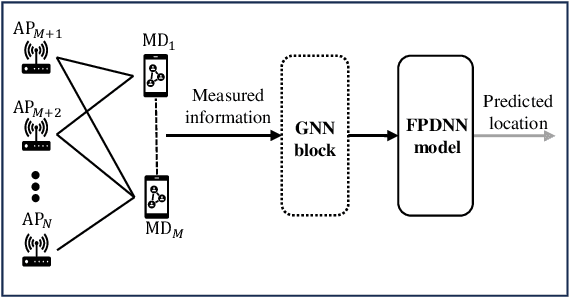

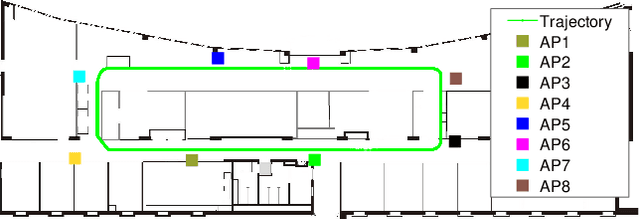

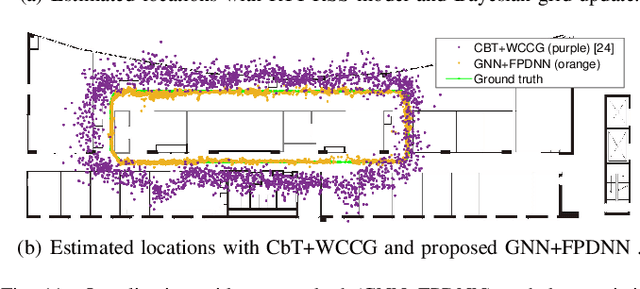

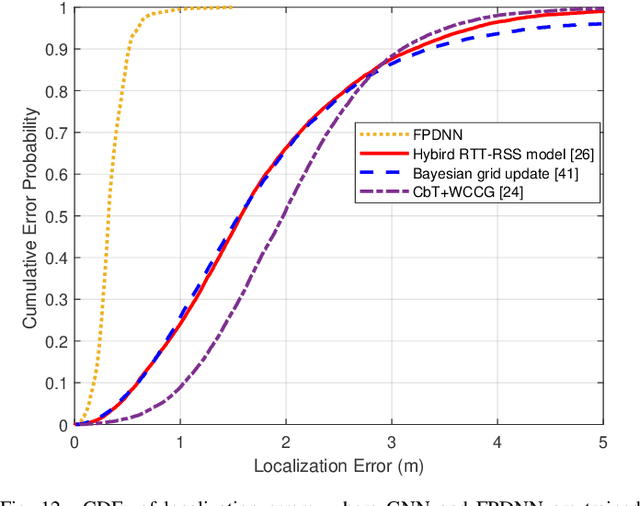

Abstract:Machine learning has been considered a promising approach for indoor localization. Nevertheless, the sample efficiency, scalability, and generalization ability remain open issues of implementing learning-based algorithms in practical systems. In this paper, we establish a zero-shot learning framework that does not need real-world measurements in a new communication environment. Specifically, a graph neural network that is scalable to the number of access points (APs) and mobile devices (MDs) is used for obtaining coarse locations of MDs. Based on the coarse locations, the floor-plan image between an MD and an AP is exploited to improve localization accuracy in a floor-plan-aided deep neural network. To further improve the generalization ability, we develop a synthetic data generator that provides synthetic data samples in different scenarios, where real-world samples are not available. We implement the framework in a prototype that estimates the locations of MDs. Experimental results show that our zero-shot learning method can reduce localization errors by around 30% to 55% compared with three baselines from the existing literature.

Graph-based Untrained Neural Network Detector for OTFS Systems

Apr 08, 2024

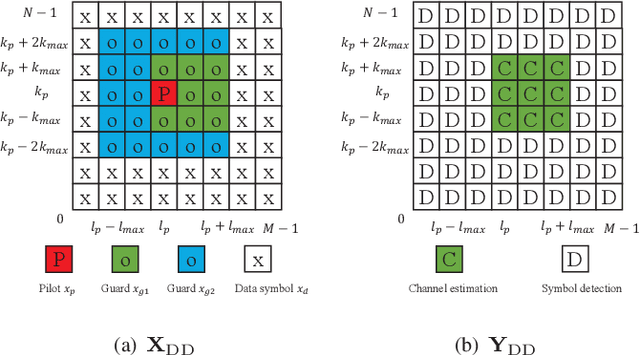

Abstract:Inter-carrier interference (ICI) caused by mobile reflectors significantly degrades the conventional orthogonal frequency division multiplexing (OFDM) performance in high-mobility environments. The orthogonal time frequency space (OTFS) modulation system effectively represents ICI in the delay-Doppler domain, thus significantly outperforming OFDM. Existing iterative and neural network (NN) based OTFS detectors suffer from highly complex matrix operations and performance degradation in untrained environments, where the real wireless channel does not match the one used in the training, which often happens in real wireless networks. In this paper, we propose to embed the prior knowledge of interference extracted from the estimated channel state information (CSI) as a directed graph into a decoder untrained neural network (DUNN), namely graph-based DUNN (GDUNN). We then combine it with Bayesian parallel interference cancellation (BPIC) for OTFS symbol detection, resulting in GDUNN-BPIC. Simulation results show that the proposed GDUNN-BPIC outperforms state-of-the-art OTFS detectors under imperfect CSI.

Opportunistic Scheduling Using Statistical Information of Wireless Channels

Feb 13, 2024

Abstract:This paper considers opportunistic scheduler (OS) design using statistical channel state information (CSI). We apply max-weight schedulers (MWSs) to maximize a utility function of users' average data rates. MWSs schedule the user with the highest weighted instantaneous data rate every time slot. Existing methods require hundreds of time slots to adjust the MWS's weights according to the instantaneous CSI before finding the optimal weights that maximize the utility function. In contrast, our MWS design requires few slots for estimating the statistical CSI. Specifically, we formulate a weight optimization problem using the mean and variance of users' signal-to-noise ratios (SNRs) to construct constraints bounding users' feasible average rates. Here, the utility function is the formulated objective, and the MWS's weights are optimization variables. We develop an iterative solver for the problem and prove that it finds the optimal weights. We also design an online architecture where the solver adaptively generates optimal weights for networks with varying mean and variance of the SNRs. Simulations show that our methods effectively require 4 to 10 times fewer slots to find the optimal weights and achieve 5% to 15% better average rates than the existing methods.
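The max-weight scheduling rule stated in this abstract (serve the user with the highest weighted instantaneous rate in each slot) can be sketched in a few lines. The weights and the rate trace below are made-up illustrative numbers, the function names are hypothetical, and the weight optimization itself is not shown.

```python
def max_weight_user(weights: list[float], rates: list[float]) -> int:
    """Index of the user with the largest weight * instantaneous rate."""
    return max(range(len(rates)), key=lambda i: weights[i] * rates[i])


def average_rates(weights: list[float], rate_trace: list[list[float]]) -> list[float]:
    """Per-user average rate when one user is scheduled per slot by max weight."""
    totals = [0.0] * len(weights)
    for rates in rate_trace:
        user = max_weight_user(weights, rates)
        totals[user] += rates[user]   # only the scheduled user transmits in this slot
    return [total / len(rate_trace) for total in totals]


# Illustrative values only: three users, three time slots.
weights = [1.0, 2.0, 0.5]
rate_trace = [
    [3.0, 1.0, 5.0],   # weighted: 3.0, 2.0, 2.5 -> user 0
    [1.0, 2.0, 1.0],   # weighted: 1.0, 4.0, 0.5 -> user 1
    [2.0, 2.0, 6.0],   # weighted: 2.0, 4.0, 3.0 -> user 1
]
avg = average_rates(weights, rate_trace)
```

With these weights user 2 is never served despite having the best raw rates in two slots, which shows why the choice of weights determines the utility of the resulting average rates.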

Graph Representation Learning for Contention and Interference Management in Wireless Networks

Jan 15, 2024

Abstract:Restricted access window (RAW) in Wi-Fi 802.11ah networks manages contention and interference by grouping users and allocating periodic time slots for each group's transmissions. We aim to find the optimal user grouping decisions in RAW to maximize the network's worst-case user throughput. We review existing user grouping approaches and highlight their performance limitations in the above problem. We propose formulating user grouping as a graph construction problem where vertices represent users and edge weights indicate the contention and interference. This formulation leverages the graph's max cut to group users and optimizes edge weights to construct the optimal graph whose max cut yields the optimal grouping decisions. To achieve this optimal graph construction, we design an actor-critic graph representation learning (AC-GRL) algorithm. Specifically, the actor neural network (NN) is trained to estimate the optimal graph's edge weights using path losses between users and access points. A graph cut procedure uses semidefinite programming to solve the max cut efficiently and return the grouping decisions for the given weights. The critic NN approximates user throughput achieved by the above-returned decisions and is used to improve the actor. Additionally, we present an architecture that uses the online-measured throughput and path losses to fine-tune the decisions in response to changes in user populations and their locations. Simulations show that our methods achieve 30% to 80% higher worst-case user throughput than the existing approaches and that the proposed architecture can further improve the worst-case user throughput by 5% to 30% while ensuring timely updates of grouping decisions.
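How a max cut turns edge weights into a grouping can be shown on a toy graph. This sketch replaces the semidefinite programming step with exhaustive search, which is only practical for a handful of users, and restricts the example to two groups; the edge weights and the name `max_cut_groups` are hypothetical.

```python
from itertools import product


def max_cut_groups(n: int, weights: dict[tuple[int, int], float]) -> tuple[float, tuple[list[int], list[int]]]:
    """Exhaustive max cut into two groups (stand-in for the SDP-based cut procedure).

    weights maps an undirected user pair (u, v) to its contention/interference weight.
    User 0 is fixed in the first group to avoid counting mirrored solutions twice.
    """
    best_value, best_side = -1.0, None
    for rest in product((0, 1), repeat=n - 1):
        side = (0,) + rest
        # A pair contributes to the cut when its users land in different groups.
        value = sum(w for (u, v), w in weights.items() if side[u] != side[v])
        if value > best_value:
            best_value, best_side = value, side
    group_a = [u for u in range(n) if best_side[u] == 0]
    group_b = [u for u in range(n) if best_side[u] == 1]
    return best_value, (group_a, group_b)


# Illustrative weights: users 0/1 and 2/3 interfere strongly, 0/2 and 1/3 weakly.
edge_weights = {(0, 1): 5.0, (2, 3): 4.0, (0, 2): 1.0, (1, 3): 1.0}
cut_value, groups = max_cut_groups(4, edge_weights)
```

Maximizing the cut places heavily interfering users in different groups, so they transmit in different RAW slots; in the abstract's approach the weights themselves are learned rather than fixed by hand.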
