Wanchun Liu

Goal-oriented Transmission Scheduling: Structure-guided DRL with a Unified Dual On-policy and Off-policy Approach

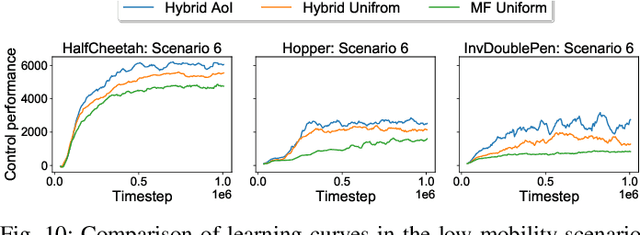

Jan 21, 2025Abstract:Goal-oriented communications prioritize application-driven objectives over data accuracy, enabling intelligent next-generation wireless systems. Efficient scheduling in multi-device, multi-channel systems poses significant challenges due to high-dimensional state and action spaces. We address these challenges by deriving key structural properties of the optimal solution to the goal-oriented scheduling problem, incorporating Age of Information (AoI) and channel states. Specifically, we establish the monotonicity of the optimal state value function (a measure of long-term system performance) w.r.t. channel states and prove its asymptotic convexity w.r.t. AoI states. Additionally, we derive the monotonicity of the optimal policy w.r.t. channel states, advancing the theoretical framework for optimal scheduling. Leveraging these insights, we propose the structure-guided unified dual on-off policy DRL (SUDO-DRL), a hybrid algorithm that combines the stability of on-policy training with the sample efficiency of off-policy methods. Through a novel structural property evaluation framework, SUDO-DRL enables effective and scalable training, addressing the complexities of large-scale systems. Numerical results show SUDO-DRL improves system performance by up to 45% and reduces convergence time by 40% compared to state-of-the-art methods. It also effectively handles scheduling in much larger systems, where off-policy DRL fails and on-policy benchmarks exhibit significant performance loss, demonstrating its scalability and efficacy in goal-oriented communications.

Wireless Resource Allocation with Collaborative Distributed and Centralized DRL under Control Channel Attacks

Nov 16, 2024

Abstract:In this paper, we consider a wireless resource allocation problem in a cyber-physical system (CPS) where the control channel, carrying resource allocation commands, is subjected to denial-of-service (DoS) attacks. We propose a novel concept of collaborative distributed and centralized (CDC) resource allocation to effectively mitigate the impact of these attacks. To optimize the CDC resource allocation policy, we develop a new CDC-deep reinforcement learning (DRL) algorithm, whereas existing DRL frameworks only formulate either centralized or distributed decision-making problems. Simulation results demonstrate that the CDC-DRL algorithm significantly outperforms state-of-the-art DRL benchmarks, showcasing its ability to address resource allocation problems in large-scale CPSs under control channel attacks.

Wireless Human-Machine Collaboration in Industry 5.0

Oct 21, 2024Abstract:Wireless Human-Machine Collaboration (WHMC) represents a critical advancement for Industry 5.0, enabling seamless interaction between humans and machines across geographically distributed systems. As the WHMC systems become increasingly important for achieving complex collaborative control tasks, ensuring their stability is essential for practical deployment and long-term operation. Stability analysis certifies how the closed-loop system will behave under model randomness, which is essential for systems operating with wireless communications. However, the fundamental stability analysis of the WHMC systems remains an unexplored challenge due to the intricate interplay between the stochastic nature of wireless communications, dynamic human operations, and the inherent complexities of control system dynamics. This paper establishes a fundamental WHMC model incorporating dual wireless loops for machine and human control. Our framework accounts for practical factors such as short-packet transmissions, fading channels, and advanced HARQ schemes. We model human control lag as a Markov process, which is crucial for capturing the stochastic nature of human interactions. Building on this model, we propose a stochastic cycle-cost-based approach to derive a stability condition for the WHMC system, expressed in terms of wireless channel statistics, human dynamics, and control parameters. Our findings are validated through extensive numerical simulations and a proof-of-concept experiment, where we developed and tested a novel wireless collaborative cart-pole control system. The results confirm the effectiveness of our approach and provide a robust framework for future research on WHMC systems in more complex environments.

Communication-Control Codesign for Large-Scale Wireless Networked Control Systems

Oct 15, 2024

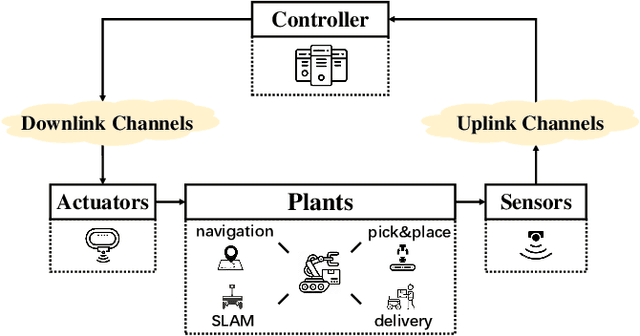

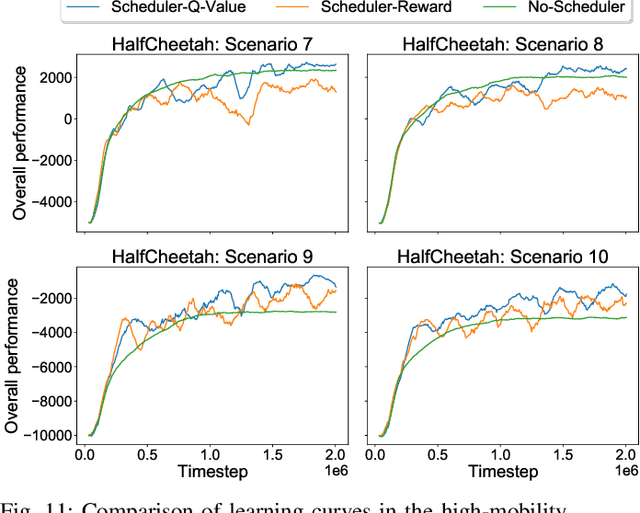

Abstract:Wireless Networked Control Systems (WNCSs) are essential to Industry 4.0, enabling flexible control in applications, such as drone swarms and autonomous robots. The interdependence between communication and control requires integrated design, but traditional methods treat them separately, leading to inefficiencies. Current codesign approaches often rely on simplified models, focusing on single-loop or independent multi-loop systems. However, large-scale WNCSs face unique challenges, including coupled control loops, time-correlated wireless channels, trade-offs between sensing and control transmissions, and significant computational complexity. To address these challenges, we propose a practical WNCS model that captures correlated dynamics among multiple control loops with spatially distributed sensors and actuators sharing limited wireless resources over multi-state Markov block-fading channels. We formulate the codesign problem as a sequential decision-making task that jointly optimizes scheduling and control inputs across estimation, control, and communication domains. To solve this problem, we develop a Deep Reinforcement Learning (DRL) algorithm that efficiently handles the hybrid action space, captures communication-control correlations, and ensures robust training despite sparse cross-domain variables and floating control inputs. Extensive simulations show that the proposed DRL approach outperforms benchmarks and solves the large-scale WNCS codesign problem, providing a scalable solution for industrial automation.

Semantic-aware Transmission Scheduling: a Monotonicity-driven Deep Reinforcement Learning Approach

May 23, 2023Abstract:For cyber-physical systems in the 6G era, semantic communications connecting distributed devices for dynamic control and remote state estimation are required to guarantee application-level performance, not merely focus on communication-centric performance. Semantics here is a measure of the usefulness of information transmissions. Semantic-aware transmission scheduling of a large system often involves a large decision-making space, and the optimal policy cannot be obtained by existing algorithms effectively. In this paper, we first investigate the fundamental properties of the optimal semantic-aware scheduling policy and then develop advanced deep reinforcement learning (DRL) algorithms by leveraging the theoretical guidelines. Our numerical results show that the proposed algorithms can substantially reduce training time and enhance training performance compared to benchmark algorithms.

Splitting Receiver with Multiple Antennas

Mar 08, 2023

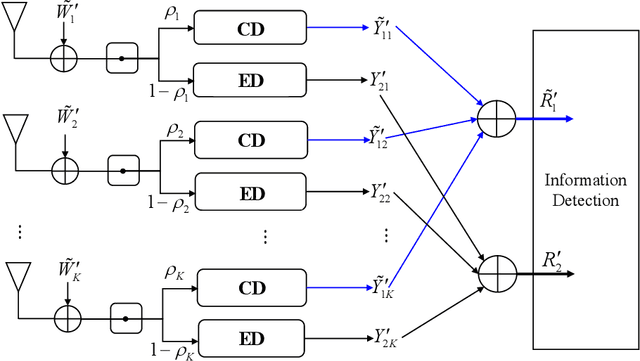

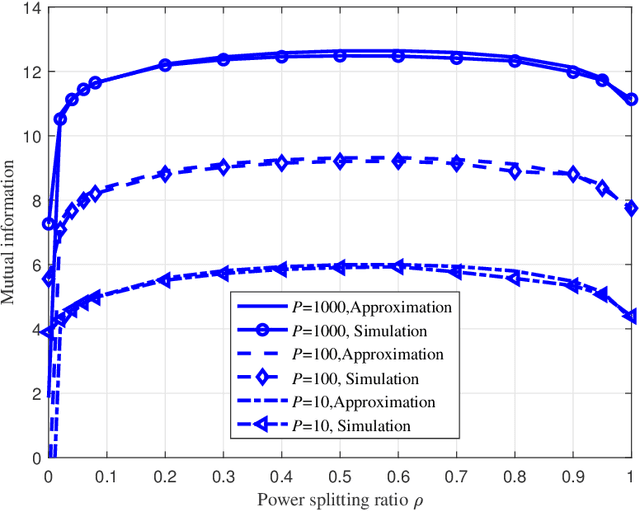

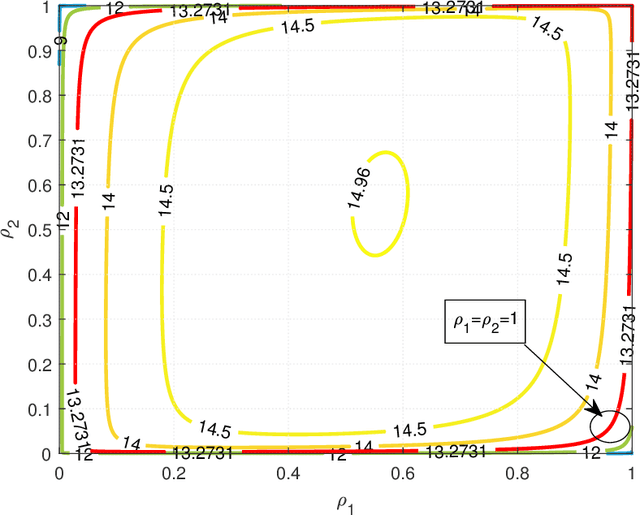

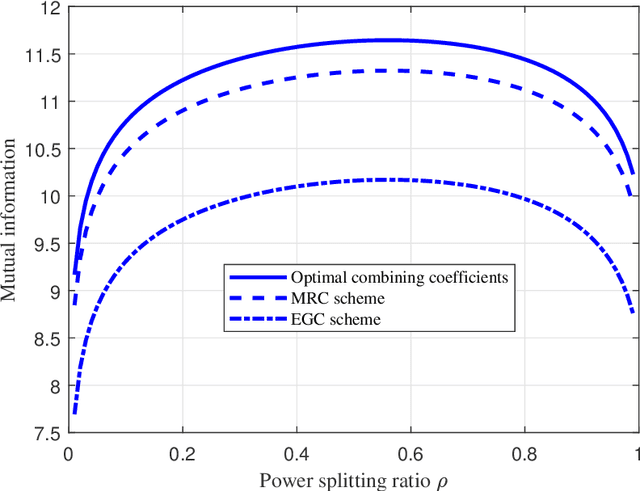

Abstract:Recently proposed splitting receivers, utilizing both coherently and non-coherently processed signals for detection, have demonstrated remarkable performance gain compared to conventional receivers in the single-antenna scenario. In this paper, we propose a multi-antenna splitting receiver, where the received signal at each antenna is split into an envelope detection (ED) branch and a coherent detection (CD) branch, and the processed signals from both branches of all antennas are then jointly utilized for recovering the transmitted information. We derive a closed-form approximation of the achievable mutual information (MI) in terms of the key receiver design parameters, including the power splitting ratio at each antenna and the signal combining coefficients from all the ED and CD branches. We further optimize these receiver design parameters and demonstrate important design insights for the proposed multi-antenna ED-CD splitting receiver: 1) the optimal splitting ratio is identical at each antenna, and 2) the optimal combining coefficients for the ED and CD branches are the same, and each coefficient is proportional to the corresponding antenna's channel power gain. Our numerical results also demonstrate the MI performance improvement of the proposed receiver over conventional non-splitting receivers.

Structure-Enhanced DRL for Optimal Transmission Scheduling

Dec 24, 2022

Abstract:Remote state estimation of large-scale distributed dynamic processes plays an important role in Industry 4.0 applications. In this paper, we focus on the transmission scheduling problem of a remote estimation system. First, we derive some structural properties of the optimal sensor scheduling policy over fading channels. Then, building on these theoretical guidelines, we develop a structure-enhanced deep reinforcement learning (DRL) framework for optimal scheduling of the system to achieve the minimum overall estimation mean-square error (MSE). In particular, we propose a structure-enhanced action selection method, which tends to select actions that obey the policy structure. This explores the action space more effectively and enhances the learning efficiency of DRL agents. Furthermore, we introduce a structure-enhanced loss function to add penalties to actions that do not follow the policy structure. The new loss function guides the DRL to converge to the optimal policy structure quickly. Our numerical experiments illustrate that the proposed structure-enhanced DRL algorithms can save the training time by 50% and reduce the remote estimation MSE by 10% to 25% when compared to benchmark DRL algorithms. In addition, we show that the derived structural properties exist in a wide range of dynamic scheduling problems that go beyond remote state estimation.

Structure-Enhanced Deep Reinforcement Learning for Optimal Transmission Scheduling

Nov 20, 2022Abstract:Remote state estimation of large-scale distributed dynamic processes plays an important role in Industry 4.0 applications. In this paper, by leveraging the theoretical results of structural properties of optimal scheduling policies, we develop a structure-enhanced deep reinforcement learning (DRL) framework for optimal scheduling of a multi-sensor remote estimation system to achieve the minimum overall estimation mean-square error (MSE). In particular, we propose a structure-enhanced action selection method, which tends to select actions that obey the policy structure. This explores the action space more effectively and enhances the learning efficiency of DRL agents. Furthermore, we introduce a structure-enhanced loss function to add penalty to actions that do not follow the policy structure. The new loss function guides the DRL to converge to the optimal policy structure quickly. Our numerical results show that the proposed structure-enhanced DRL algorithms can save the training time by 50% and reduce the remote estimation MSE by 10% to 25%, when compared to benchmark DRL algorithms.

Deep Learning for Wireless Networked Systems: a joint Estimation-Control-Scheduling Approach

Oct 03, 2022

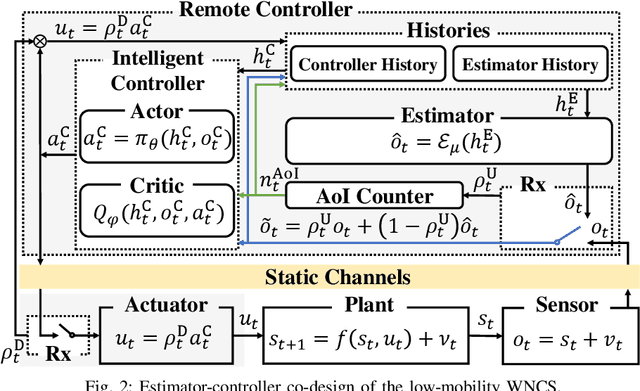

Abstract:Wireless networked control system (WNCS) connecting sensors, controllers, and actuators via wireless communications is a key enabling technology for highly scalable and low-cost deployment of control systems in the Industry 4.0 era. Despite the tight interaction of control and communications in WNCSs, most existing works adopt separative design approaches. This is mainly because the co-design of control-communication policies requires large and hybrid state and action spaces, making the optimal problem mathematically intractable and difficult to be solved effectively by classic algorithms. In this paper, we systematically investigate deep learning (DL)-based estimator-control-scheduler co-design for a model-unknown nonlinear WNCS over wireless fading channels. In particular, we propose a co-design framework with the awareness of the sensor's age-of-information (AoI) states and dynamic channel states. We propose a novel deep reinforcement learning (DRL)-based algorithm for controller and scheduler optimization utilizing both model-free and model-based data. An AoI-based importance sampling algorithm that takes into account the data accuracy is proposed for enhancing learning efficiency. We also develop novel schemes for enhancing the stability of joint training. Extensive experiments demonstrate that the proposed joint training algorithm can effectively solve the estimation-control-scheduling co-design problem in various scenarios and provide significant performance gain compared to separative design and some benchmark policies.

DRL-based Resource Allocation in Remote State Estimation

May 24, 2022

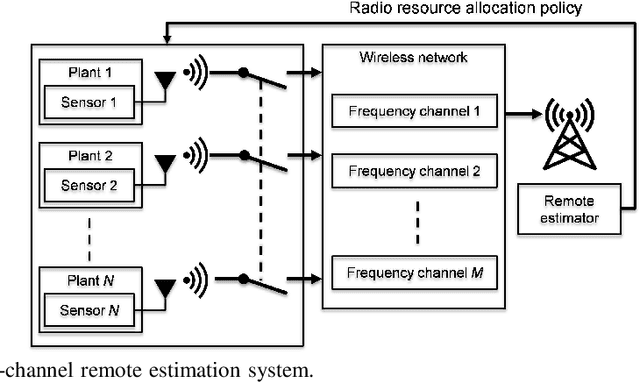

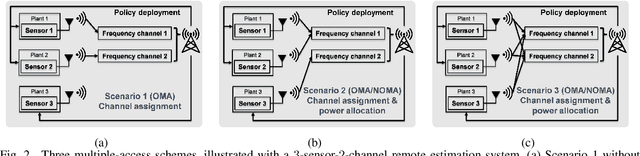

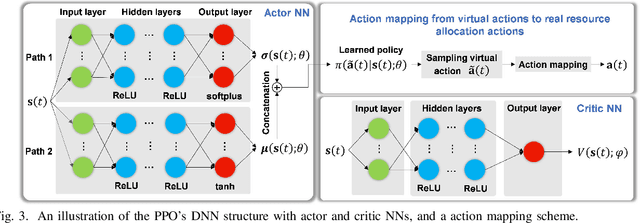

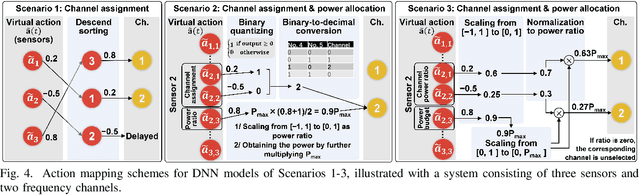

Abstract:Remote state estimation, where sensors send their measurements of distributed dynamic plants to a remote estimator over shared wireless resources, is essential for mission-critical applications of Industry 4.0. Existing algorithms on dynamic radio resource allocation for remote estimation systems assumed oversimplified wireless communications models and can only work for small-scale settings. In this work, we consider remote estimation systems with practical wireless models over the orthogonal multiple-access and non-orthogonal multiple-access schemes. We derive necessary and sufficient conditions under which remote estimation systems can be stabilized. The conditions are described in terms of the transmission power budget, channel statistics, and plants' parameters. For each multiple-access scheme, we formulate a novel dynamic resource allocation problem as a decision-making problem for achieving the minimum overall long-term average estimation mean-square error. Both the estimation quality and the channel quality states are taken into account for decision making. We systematically investigated the problems under different multiple-access schemes with large discrete, hybrid discrete-and-continuous, and continuous action spaces, respectively. We propose novel action-space compression methods and develop advanced deep reinforcement learning algorithms to solve the problems. Numerical results show that our algorithms solve the resource allocation problems effectively and provide much better scalability than the literature.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge