Danilo Comminiello

Closing the Modality Gap Aligns Group-Wise Semantics

Jan 26, 2026

Abstract: In multimodal learning, CLIP has been recognized as the de facto method for learning a shared latent space across multiple modalities, placing similar representations close to each other and moving them away from dissimilar ones. Although CLIP-based losses effectively align modalities at the semantic level, the resulting latent spaces often remain only partially shared, revealing a structural mismatch known as the modality gap. While the necessity of addressing this phenomenon remains debated, particularly given its limited impact on instance-wise tasks (e.g., retrieval), we prove that its influence is instead strongly pronounced in group-level tasks (e.g., clustering). To support this claim, we introduce a novel method designed to consistently reduce this discrepancy in two-modal settings, with a straightforward extension to the general $n$-modal case. Through our extensive evaluation, we demonstrate our novel insight: while reducing the gap provides only marginal or inconsistent improvements in traditional instance-wise tasks, it significantly enhances group-wise tasks. These findings may reshape our understanding of the modality gap, highlighting its key role in improving performance on tasks requiring semantic grouping.
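To make the notion of a modality gap concrete, the sketch below measures it as the Euclidean distance between the centroids of the L2-normalized embeddings of two modalities. This is one common way to quantify the gap and is an assumption here; the abstract does not say which measure the paper uses, and the function names and toy embeddings are hypothetical.

```python
import math


def l2_normalize(vector):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


def modality_gap(emb_a, emb_b):
    """Distance between the centroids of two sets of normalized embeddings.

    Assumption: centroid distance is used as the gap measure; other
    definitions exist and the paper may use a different one.
    """
    center_a = centroid([l2_normalize(v) for v in emb_a])
    center_b = centroid([l2_normalize(v) for v in emb_b])
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(center_a, center_b)))


# Hypothetical toy embeddings: each modality occupies its own region.
image_emb = [[1.0, 0.1], [0.9, 0.2]]
text_emb = [[0.1, 1.0], [0.2, 0.9]]
gap = modality_gap(image_emb, text_emb)
print(f"modality gap: {gap:.3f}")
```

A gap of zero means the two modalities share the same centroid; it says nothing by itself about whether matching pairs are close, which is why instance-wise and group-wise tasks can react differently to it.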

Quaternion Wavelet-Conditioned Diffusion Models for Image Super-Resolution

May 05, 2025

Abstract: Image Super-Resolution (SR) is a fundamental problem in computer vision with broad applications ranging from medical imaging to satellite analysis. The ability to reconstruct high-resolution images from low-resolution inputs is crucial for enhancing downstream tasks such as object detection and segmentation. While deep learning has significantly advanced SR, achieving high-quality reconstructions with fine-grained details and realistic textures remains challenging, particularly at high upscaling factors. Recent approaches leveraging diffusion models have demonstrated promising results, yet they often struggle to balance perceptual quality with structural fidelity. In this work, we introduce ResQu, a novel SR framework that integrates a quaternion wavelet preprocessing framework with latent diffusion models, incorporating a new quaternion wavelet- and time-aware encoder. Unlike prior methods that simply apply wavelet transforms within diffusion models, our approach enhances the conditioning process by exploiting quaternion wavelet embeddings, which are dynamically integrated at different stages of denoising. Furthermore, we leverage the generative priors of foundation models such as Stable Diffusion. Extensive experiments on domain-specific datasets demonstrate that our method achieves outstanding SR results, in many cases outperforming existing approaches in perceptual quality and standard evaluation metrics. The code will be available after the revision process.

Beyond Answers: How LLMs Can Pursue Strategic Thinking in Education

Apr 07, 2025

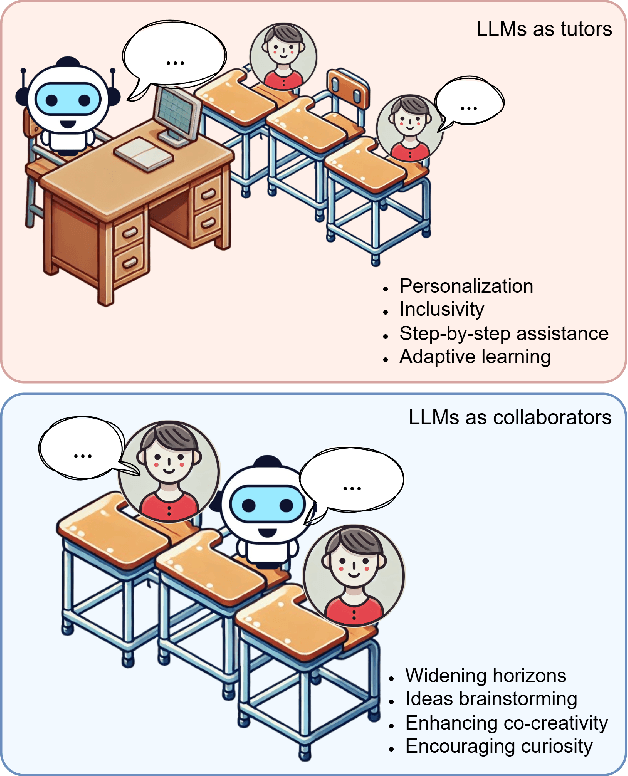

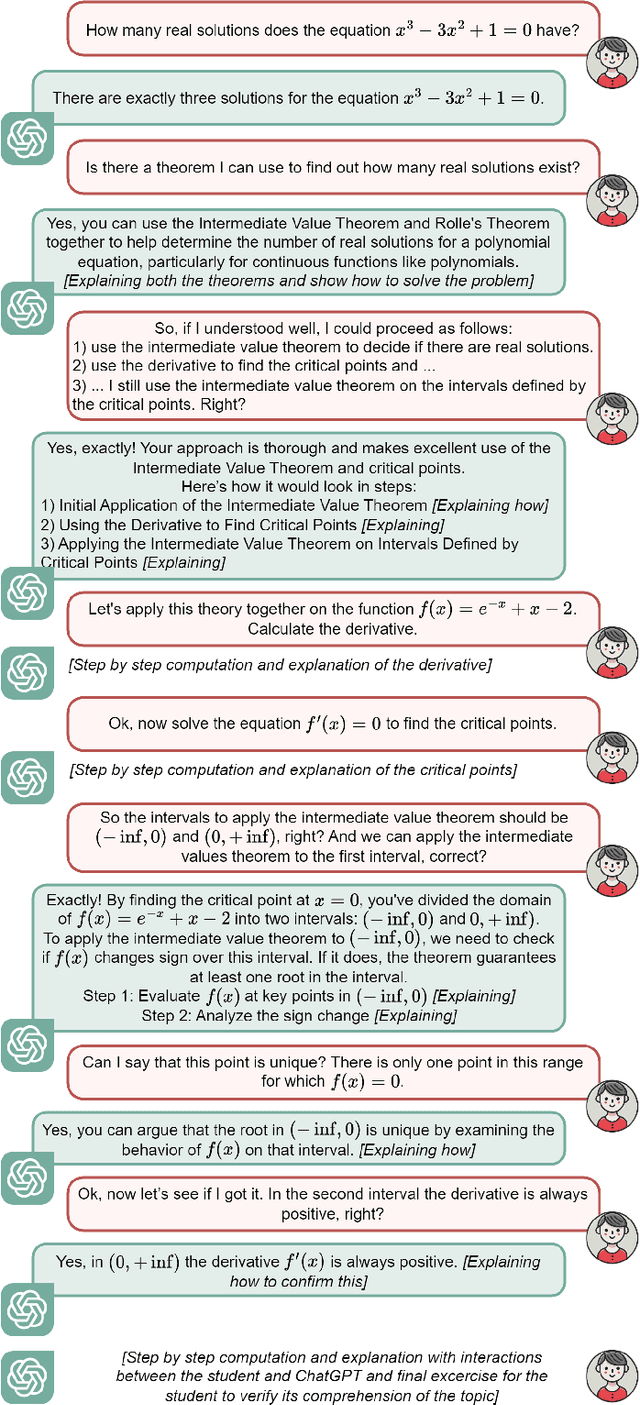

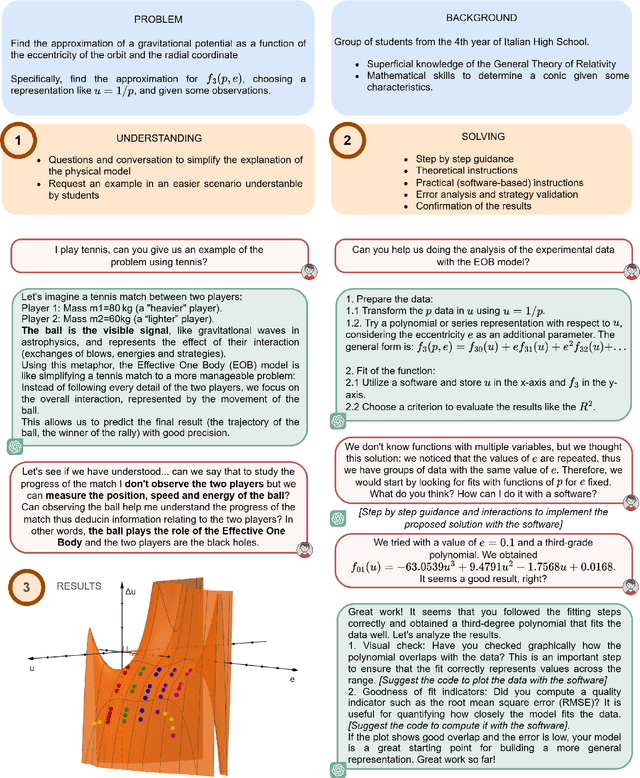

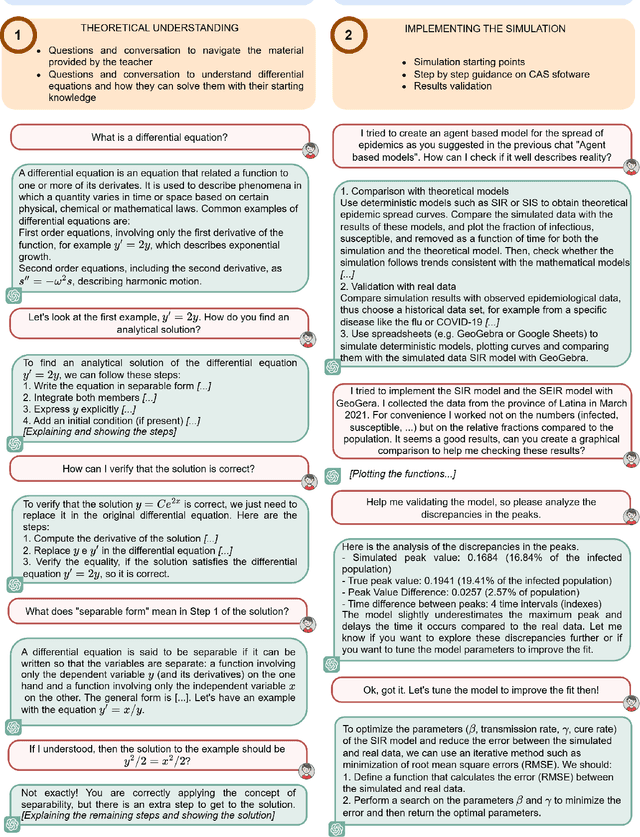

Abstract: Artificial Intelligence (AI) holds transformative potential in education, enabling personalized learning, enhancing inclusivity, and encouraging creativity and curiosity. In this paper, we explore how Large Language Models (LLMs) can act as both patient tutors and collaborative partners to enhance education delivery. As tutors, LLMs personalize learning by offering step-by-step explanations and addressing individual needs, making education more inclusive for students with diverse backgrounds or abilities. As collaborators, they expand students' horizons, supporting them in tackling complex, real-world problems and co-creating innovative projects. However, to fully realize these benefits, LLMs must be leveraged not as tools for providing direct solutions but rather to guide students in developing problem-solving strategies and finding learning paths together. Therefore, a strong emphasis should be placed on educating students and teachers on the successful use of LLMs to ensure their effective integration into classrooms. Through practical examples and real-world case studies, this paper illustrates how LLMs can make education more inclusive and engaging while empowering students to reach their full potential.

Stable-V2A: Synthesis of Synchronized Sound Effects with Temporal and Semantic Controls

Dec 19, 2024

Abstract: Sound designers and Foley artists usually sonorize a scene, such as from a movie or video game, by manually annotating and sonorizing each action of interest in the video. In our case, the intent is to leave full creative control to sound designers with a tool that allows them to bypass the more repetitive parts of their work, thus being able to focus on the creative aspects of sound production. We achieve this by presenting Stable-V2A, a two-stage model consisting of: an RMS-Mapper that estimates an envelope representative of the audio characteristics associated with the input video; and Stable-Foley, a diffusion model based on Stable Audio Open that generates audio semantically and temporally aligned with the target video. Temporal alignment is guaranteed by the use of the envelope as a ControlNet input, while semantic alignment is achieved through the use of sound representations chosen by the designer as cross-attention conditioning of the diffusion process. We train and test our model on Greatest Hits, a dataset commonly used to evaluate V2A models. In addition, to test our model on a case study of interest, we introduce Walking The Maps, a dataset of videos extracted from video games depicting animated characters walking in different locations. Samples and code are available on our demo page at https://ispamm.github.io/Stable-V2A.
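As an illustration of the kind of envelope mentioned above, the following sketch computes a frame-wise root-mean-square (RMS) envelope from a waveform. It only shows what an RMS envelope is; it is not the RMS-Mapper, which per the abstract estimates the envelope from video. The function name, frame size, and hop size are hypothetical choices.

```python
import math


def rms_envelope(samples, frame_size=4, hop_size=2):
    """Frame-wise RMS of a mono waveform given as a list of floats.

    frame_size and hop_size are illustrative values, not taken from
    the paper. Trailing samples that do not fill a frame are ignored.
    """
    envelope = []
    for start in range(0, len(samples) - frame_size + 1, hop_size):
        frame = samples[start:start + frame_size]
        mean_square = sum(s * s for s in frame) / frame_size
        envelope.append(math.sqrt(mean_square))
    return envelope


# Toy waveform: a short burst of sound followed by silence.
waveform = [1.0, -1.0, 1.0, -1.0, 0.0, 0.0, 0.0, 0.0]
print(rms_envelope(waveform))
```

The envelope is high where the burst occurs and falls to zero in the silent part, which is the kind of temporal cue a conditioning signal needs in order to place sound events in time.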

Gramian Multimodal Representation Learning and Alignment

Dec 16, 2024

Abstract: Human perception integrates multiple modalities, such as vision, hearing, and language, into a unified understanding of the surrounding reality. While recent multimodal models have achieved significant progress by aligning pairs of modalities via contrastive learning, their solutions are unsuitable when scaling to multiple modalities. These models typically align each modality to a designated anchor without ensuring the alignment of all modalities with each other, leading to suboptimal performance in tasks requiring a joint understanding of multiple modalities. In this paper, we structurally rethink the conventional pairwise approach to multimodal learning and present the novel Gramian Representation Alignment Measure (GRAM), which overcomes the above-mentioned limitations. GRAM learns and then aligns $n$ modalities directly in the higher-dimensional space in which modality embeddings lie by minimizing the Gramian volume of the $k$-dimensional parallelotope spanned by the modality vectors, ensuring the geometric alignment of all modalities simultaneously. GRAM can replace cosine similarity in any downstream method, holding for 2 to $n$ modalities and providing more meaningful alignment with respect to previous similarity measures. The novel GRAM-based contrastive loss function enhances the alignment of multimodal models in the higher-dimensional embedding space, leading to new state-of-the-art performance in downstream tasks such as video-audio-text retrieval and audio-video classification. The project page, the code, and the pretrained models are available at https://ispamm.github.io/GRAM/.
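The Gramian volume mentioned above has a standard closed form: for vectors $v_1, \dots, v_k$ with Gram matrix $G_{ij} = \langle v_i, v_j \rangle$, the volume of the parallelotope they span is $\sqrt{\det G}$. The sketch below computes it for unit-normalized vectors in plain Python. It illustrates the geometric quantity only; the normalization step, function names, and toy vectors are assumptions, and the loss built on top of this volume is not reproduced here.

```python
import math


def normalize(vector):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def gram_matrix(vectors):
    """Matrix of pairwise inner products G[i][j] = <v_i, v_j>."""
    return [[sum(a * b for a, b in zip(u, v)) for v in vectors] for u in vectors]


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    size = len(m)
    det = 1.0
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0  # singular: the vectors are linearly dependent
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det
        det *= m[col][col]
        for row in range(col + 1, size):
            factor = m[row][col] / m[col][col]
            for k in range(col, size):
                m[row][k] -= factor * m[col][k]
    return det


def gramian_volume(vectors):
    """Volume of the parallelotope spanned by the unit-normalized vectors."""
    gram = gram_matrix([normalize(v) for v in vectors])
    return math.sqrt(max(determinant(gram), 0.0))


# Hypothetical embeddings of one sample in three modalities.
aligned = [[1.0, 2.0, 3.0], [1.0, 2.0, 3.0], [1.0, 2.0, 3.0]]
orthogonal = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(gramian_volume(aligned), gramian_volume(orthogonal))
```

For two unit vectors the volume equals the sine of the angle between them, so a small volume corresponds to a high cosine similarity; with more vectors, the volume shrinks to zero only when all of them become linearly dependent, which is what makes it usable beyond pairs.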

A Wavelet Diffusion GAN for Image Super-Resolution

Oct 23, 2024

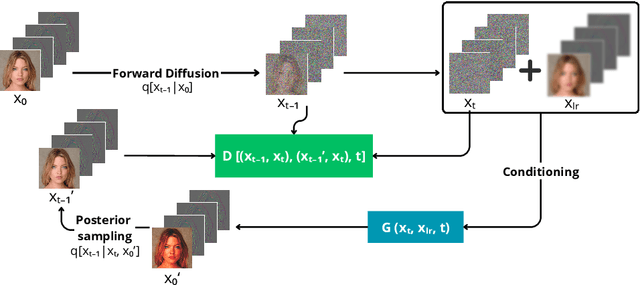

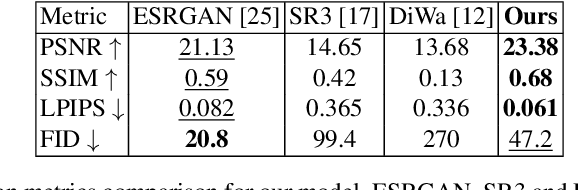

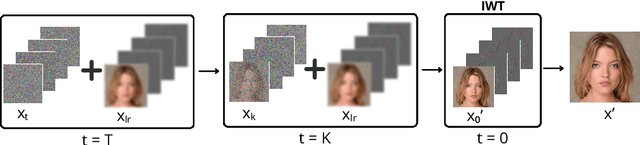

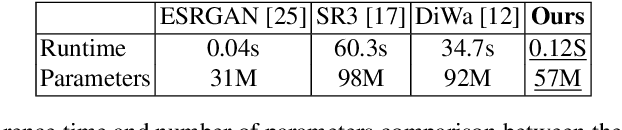

Abstract: In recent years, diffusion models have emerged as a superior alternative to generative adversarial networks (GANs) for high-fidelity image generation, with wide applications in text-to-image generation, image-to-image translation, and super-resolution. However, their real-time feasibility is hindered by slow training and inference speeds. This study addresses this challenge by proposing a wavelet-based conditional Diffusion GAN scheme for Single-Image Super-Resolution (SISR). Our approach utilizes the diffusion GAN paradigm to reduce the timesteps required by the reverse diffusion process and the Discrete Wavelet Transform (DWT) to achieve dimensionality reduction, decreasing training and inference times significantly. The results of an experimental validation on the CelebA-HQ dataset confirm the effectiveness of our proposed scheme. Our approach outperforms other state-of-the-art methodologies, successfully ensuring high-fidelity output while overcoming inherent drawbacks associated with diffusion models in time-sensitive applications.
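To show how a DWT reduces spatial dimensionality, the sketch below applies a single-level 2D Haar transform, which turns an H x W image into four subbands of size H/2 x W/2. The Haar wavelet is an assumption made for simplicity; the abstract does not name the wavelet family, and the function and subband names are hypothetical.

```python
def haar_dwt2(image):
    """Single-level orthonormal 2D Haar DWT.

    `image` is a list of rows with even height and width. Returns four
    half-resolution subbands: the approximation and three detail bands
    (differences across columns, across rows, and along the diagonal).
    """
    approx, d_cols, d_rows, d_diag = [], [], [], []
    for i in range(0, len(image), 2):
        row_a, row_c, row_r, row_d = [], [], [], []
        for j in range(0, len(image[0]), 2):
            a, b = image[i][j], image[i][j + 1]          # top-left, top-right
            c, d = image[i + 1][j], image[i + 1][j + 1]  # bottom-left, bottom-right
            row_a.append((a + b + c + d) / 2)
            row_c.append((a - b + c - d) / 2)
            row_r.append((a + b - c - d) / 2)
            row_d.append((a - b - c + d) / 2)
        approx.append(row_a)
        d_cols.append(row_c)
        d_rows.append(row_r)
        d_diag.append(row_d)
    return approx, d_cols, d_rows, d_diag


# Toy 4x4 image; each subband comes out as 2x2.
image = [
    [1.0, 2.0, 3.0, 4.0],
    [5.0, 6.0, 7.0, 8.0],
    [9.0, 10.0, 11.0, 12.0],
    [13.0, 14.0, 15.0, 16.0],
]
subbands = haar_dwt2(image)
print([len(band) for band in subbands], [len(band[0]) for band in subbands])
```

Each subband has a quarter of the original pixels, so a model operating on the subbands works at half the spatial resolution while the transform itself remains invertible.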

Lightweight Diffusion Models for Resource-Constrained Semantic Communication

Oct 03, 2024

Abstract: Recently, generative semantic communication models have proliferated as they are revolutionizing semantic communication frameworks, improving their performance, and opening the way to novel applications. Despite their impressive ability to regenerate content from the compressed semantic information received, generative models pose crucial challenges for communication systems in terms of high memory footprints and heavy computational load. In this paper, we present a novel Quantized GEnerative Semantic COmmunication framework, Q-GESCO. The core method of Q-GESCO is a quantized semantic diffusion model capable of regenerating transmitted images from the received semantic maps while simultaneously reducing computational load and memory footprint thanks to the proposed post-training quantization technique. Q-GESCO is robust to different channel noises and obtains performance comparable to its full-precision counterpart in different scenarios, saving up to 75% of memory and 79% of floating-point operations. This allows resource-constrained devices to exploit the generative capabilities of Q-GESCO, widening the range of applications and systems for generative semantic communication frameworks. The code is available at https://github.com/ispamm/Q-GESCO.
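As a rough illustration of post-training quantization, the sketch below applies generic uniform (affine) quantization to a list of weights and computes the resulting memory saving. It is not the Q-GESCO technique itself. The 32-bit to 8-bit setting is an assumption: it happens to yield a 75% saving, in line with the figure quoted above, but the abstract does not state the bit widths used. All names are hypothetical.

```python
def quantize(weights, num_bits=8):
    """Uniformly map float weights to integer codes in [0, 2**num_bits - 1]."""
    q_max = 2 ** num_bits - 1
    w_min, w_max = min(weights), max(weights)
    scale = (w_max - w_min) / q_max or 1.0  # avoid a zero scale for constant weights
    codes = [round((w - w_min) / scale) for w in weights]
    return codes, scale, w_min


def dequantize(codes, scale, w_min):
    """Map integer codes back to approximate float weights."""
    return [code * scale + w_min for code in codes]


def memory_saving(full_bits=32, quant_bits=8):
    """Fraction of weight memory saved by storing fewer bits per weight."""
    return 1 - quant_bits / full_bits


# Hypothetical weights of an already trained layer.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, w_min = quantize(weights)
restored = dequantize(codes, scale, w_min)
print(codes, f"saving: {memory_saving():.0%}")
```

Because quantization is applied after training, no gradient updates are needed; the reconstruction error per weight is bounded by half the quantization step.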

Hierarchical Hypercomplex Network for Multimodal Emotion Recognition

Sep 13, 2024

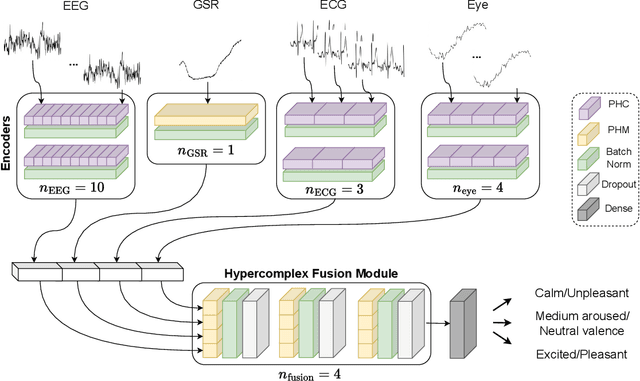

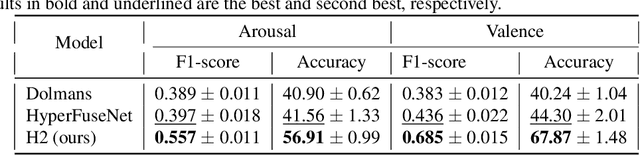

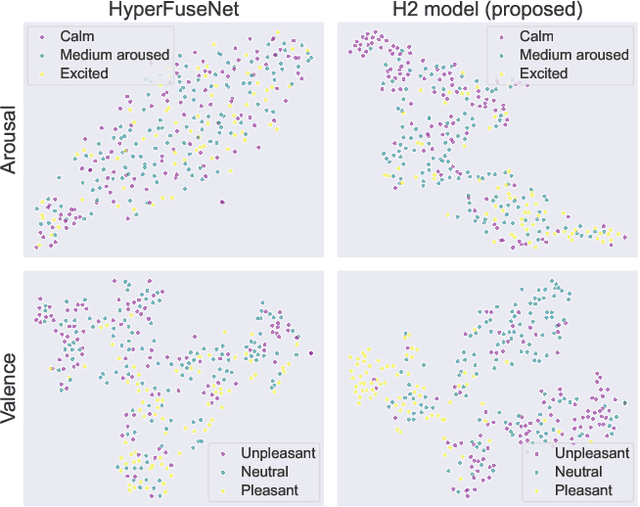

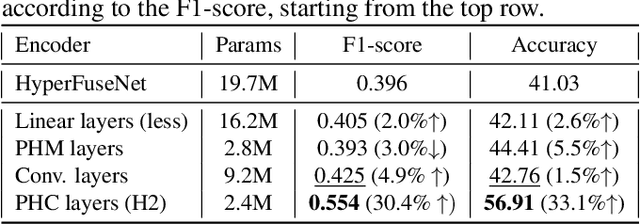

Abstract: Emotion recognition is relevant in various domains, ranging from healthcare to human-computer interaction. Physiological signals, being beyond voluntary control, offer reliable information for this purpose, unlike speech and facial expressions, which can be controlled at will. They reflect genuine emotional responses, devoid of conscious manipulation, thereby enhancing the credibility of emotion recognition systems. Nonetheless, multimodal emotion recognition with deep learning models remains a relatively unexplored field. In this paper, we introduce a fully hypercomplex network with a hierarchical learning structure to fully capture correlations. Specifically, at the encoder level, the model learns intra-modal relations among the different channels of each input signal. Then, a hypercomplex fusion module learns inter-modal relations among the embeddings of the different modalities. The main novelty is in exploiting intra-modal relations by endowing the encoders with parameterized hypercomplex convolutions (PHCs) that, thanks to hypercomplex algebra, can capture inter-channel interactions within single modalities. The fusion module, in turn, comprises parameterized hypercomplex multiplications (PHMs) that can model inter-modal correlations. The proposed architecture surpasses state-of-the-art models on the MAHNOB-HCI dataset for emotion recognition, specifically in classifying valence and arousal from electroencephalograms (EEGs) and peripheral physiological signals. The code of this study is available at https://github.com/ispamm/MHyEEG.

Language-Oriented Semantic Latent Representation for Image Transmission

May 16, 2024

Abstract: In the new paradigm of semantic communication (SC), the focus is on delivering meanings behind bits by extracting semantic information from raw data. Recent advances in data-to-text models facilitate language-oriented SC, particularly for text-transformed image communication via image-to-text (I2T) encoding and text-to-image (T2I) decoding. However, although semantically aligned, the text is too coarse to precisely capture sophisticated visual features such as spatial locations, color, and texture, incurring a significant perceptual difference between intended and reconstructed images. To address this limitation, in this paper, we propose a novel language-oriented SC framework that communicates both text and a compressed image embedding and combines them using a latent diffusion model to reconstruct the intended image. Experimental results validate the potential of our approach, which transmits only 2.09% of the original image size while achieving higher perceptual similarities in noisy communication channels compared to a baseline SC method that communicates only through text. The code is available at https://github.com/ispamm/Img2Img-SC/.

Rethinking Multi-User Semantic Communications with Deep Generative Models

May 16, 2024

Abstract: In recent years, novel communication strategies have emerged to face the challenges that the increased number of connected devices and the higher quality of transmitted information are posing. Among them, semantic communication has obtained promising results, especially when combined with state-of-the-art deep generative models, such as large language or diffusion models, able to regenerate content from extremely compressed semantic information. However, most of these approaches focus on single-user scenarios, processing the received content at the receiver on top of conventional communication systems. In this paper, we propose to go beyond these methods by developing a novel generative semantic communication framework tailored for multi-user scenarios. This system assigns the channel to users knowing that the lost information can be filled in with a diffusion model at the receivers. Under this innovative perspective, OFDMA systems should not aim to transmit the largest part of information, but solely the bits necessary for the generative model to semantically regenerate the missing ones. The thorough experimental evaluation shows the capabilities of the novel diffusion model and the effectiveness of the proposed framework, leading towards a GenAI-based next generation of communications.
