Paolo Banelli

Radio-Coverage-Aware Path Planning for Cooperative Autonomous Vehicles

Nov 10, 2025

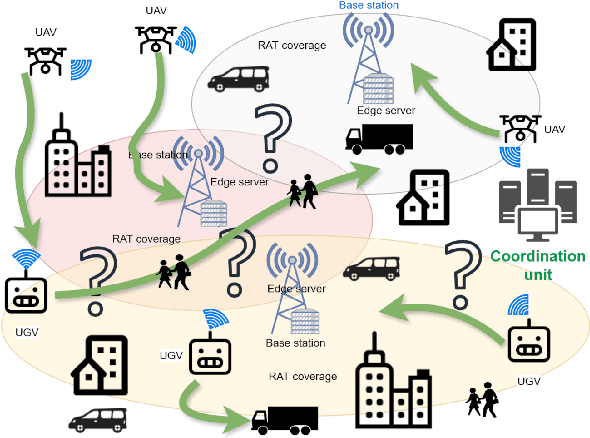

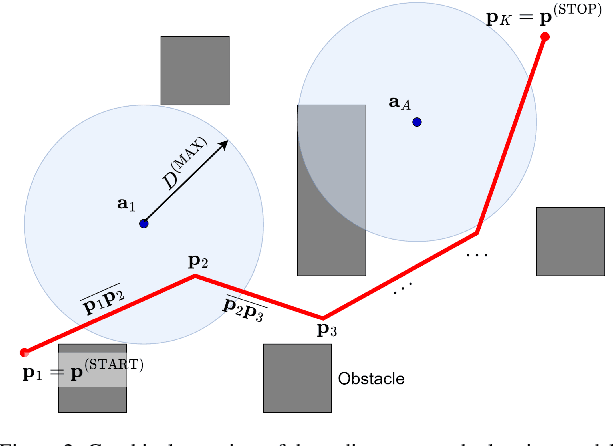

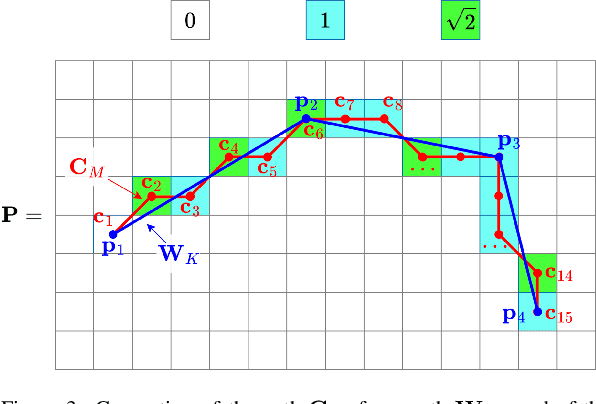

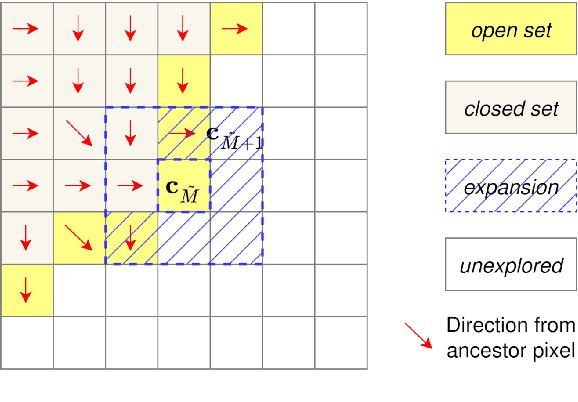

Abstract: Fleets of autonomous vehicles (AVs) are often at the core of intelligent transportation scenarios for smart cities, and may require a wireless Internet connection to offload computer vision tasks to data centers located either in the edge or the cloud section of the network. Cooperation among AVs is beneficial when the environment is unknown, or changes dynamically, so as to improve coverage and trip time, and minimize the traveled distance. The AVs, while mapping the environment with range-based sensors, move across the wireless coverage areas, with consequences on the achieved access bit rate, latency, and handover rate. In this paper, we propose to modify the cost of path planning algorithms such as Dijkstra and A*, so that the best path solution accounts not only for the traveled distance, but also for the radio coverage experience. To this aim, several radio-related cost-weighting functions are introduced and tested, and the performance of the proposed techniques is assessed with extensive simulations. The proposed mapping algorithm can achieve a mapping error probability below 2%, while the proposed path-planning algorithms extend the experienced radio coverage of the AVs, with limited distance increase with respect to existing shortest-path methods, such as conventional Dijkstra and A* algorithms.
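The idea of folding radio coverage into the path cost can be illustrated with a minimal sketch. It is not the paper's algorithm: the grid representation, the `coverage` map with values in [0, 1], the `weight` parameter, and the specific weighting function `1 + weight * (1 - coverage)` are all assumptions made for illustration, since the abstract does not specify the cost-weighting functions.

```python
import heapq


def radio_aware_dijkstra(grid_free, coverage, start, goal, weight=0.0):
    """Dijkstra on a 4-connected grid with a coverage-weighted step cost.

    grid_free[r][c] is True for traversable cells; coverage[r][c] is a
    hypothetical coverage quality in [0, 1] (1 = best). With weight=0 the
    search reduces to plain shortest-path Dijkstra.
    """
    rows, cols = len(grid_free), len(grid_free[0])
    dist = {start: 0.0}
    prev = {}
    heap = [(0.0, start)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        r, c = node
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if not grid_free[nr][nc]:
                continue
            # Assumed weighting: unit distance inflated by poor coverage.
            step = 1.0 * (1.0 + weight * (1.0 - coverage[nr][nc]))
            nd = d + step
            if nd < dist.get((nr, nc), float("inf")):
                dist[(nr, nc)] = nd
                prev[(nr, nc)] = node
                heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None  # goal unreachable
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# Example: a 3x3 free grid with a coverage hole in the center cell.
free = [[True] * 3 for _ in range(3)]
cov = [[1.0, 1.0, 1.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
shortest = radio_aware_dijkstra(free, cov, (1, 0), (1, 2), weight=0.0)
covered = radio_aware_dijkstra(free, cov, (1, 0), (1, 2), weight=5.0)
```

With `weight=0.0` the path crosses the coverage hole; with `weight=5.0` it detours around it, trading a longer distance for better coverage, which mirrors the trade-off the abstract describes.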

Opportunistic Information-Bottleneck for Goal-oriented Feature Extraction and Communication

Apr 14, 2024

Abstract: The Information Bottleneck (IB) method is an information-theoretic framework to design a parsimonious and tunable feature-extraction mechanism, such that the extracted features are maximally relevant to a specific learning or inference task. Despite its theoretical value, the IB is based on a functional optimization problem that admits a closed-form solution only in specific cases (e.g., Gaussian distributions), making it difficult to apply in most applications, where it is necessary to resort to complex and approximated variational implementations. To overcome this limitation, we propose an approach to adapt the closed-form solution of the Gaussian IB to a general task. Whatever the inference task to be performed by a (possibly deep) neural network, the key idea is to opportunistically design a regression sub-task, embedded in the original problem, where we can safely assume a (joint) multivariate normality between the sub-task's inputs and outputs. In this way we can exploit a fixed and pre-trained neural network to process the input data, using a tunable number of features, to trade data size and complexity for accuracy. This approach is particularly useful every time a device needs to transmit data (or features) to a server that has to fulfil an inference task, as it provides a principled way to extract the most relevant features for the task to be executed, while looking for the best trade-off between the size of the feature vector to be transmitted, inference accuracy, and complexity. Extensive simulation results confirm the effectiveness of the proposed method and encourage further investigation of this research line.

Enabling Edge Artificial Intelligence via Goal-oriented Deep Neural Network Splitting

Dec 06, 2023

Abstract: Deep Neural Network (DNN) splitting is one of the key enablers of edge Artificial Intelligence (AI), as it allows end users to pre-process data and offload part of the computational burden to nearby Edge Cloud Servers (ECSs). This opens new opportunities and degrees of freedom in balancing energy consumption, delay, accuracy, privacy, and other trustworthiness metrics. In this work, we explore the opportunity of DNN splitting at the edge of 6G wireless networks to enable low-energy cooperative inference with target delay and accuracy, from a goal-oriented perspective. Going beyond the current literature, we explore new trade-offs that take into account the accuracy degradation as a function of the Splitting Point (SP) selection and wireless channel conditions. Then, we propose an algorithm that dynamically controls SP selection, local computing resources, uplink transmit power and bandwidth allocation, in a goal-oriented fashion, to meet a target goal-effectiveness. To the best of our knowledge, this is the first work proposing adaptive SP selection on the basis of all learning performance metrics (i.e., energy, delay, accuracy), with the aim of guaranteeing the accomplishment of a goal (e.g., minimize the energy consumption under latency and accuracy constraints). Numerical results show the advantages of the proposed SP selection and resource allocation, to enable energy-frugal and effective edge AI.
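The example goal mentioned in the abstract (minimize energy under latency and accuracy constraints) can be sketched, in its simplest static form, as a search over candidate splitting points. This is only an illustration, not the paper's dynamic algorithm: the `SplitProfile` type, the `pick_split_point` helper, and all numeric values are hypothetical, and the joint control of computing resources, transmit power, and bandwidth is left out.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class SplitProfile:
    """Hypothetical per-split-point figures for one time slot."""
    split_point: int
    energy_j: float   # device energy for local layers plus uplink
    delay_s: float    # end-to-end inference delay
    accuracy: float   # expected accuracy at this split and channel state


def pick_split_point(
    profiles: Sequence[SplitProfile],
    max_delay_s: float,
    min_accuracy: float,
) -> Optional[SplitProfile]:
    """Return the lowest-energy split meeting both constraints, if any."""
    feasible = [
        p for p in profiles
        if p.delay_s <= max_delay_s and p.accuracy >= min_accuracy
    ]
    return min(feasible, key=lambda p: p.energy_j, default=None)


# Illustrative values only; they are not taken from the paper.
profiles = [
    SplitProfile(split_point=0, energy_j=0.2, delay_s=0.9, accuracy=0.95),
    SplitProfile(split_point=1, energy_j=0.5, delay_s=0.4, accuracy=0.93),
    SplitProfile(split_point=2, energy_j=0.9, delay_s=0.3, accuracy=0.90),
]
choice = pick_split_point(profiles, max_delay_s=0.5, min_accuracy=0.92)
```

In an adaptive setting, the profile table would presumably be refreshed each slot from the current channel conditions before the selection is repeated.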

Goal-oriented Communications for the IoT: System Design and Adaptive Resource Optimization

Oct 21, 2023

Abstract: Internet of Things (IoT) applications combine sensing, wireless communication, intelligence, and actuation, enabling the interaction among heterogeneous devices that collect and process considerable amounts of data. However, the effectiveness of IoT applications is constrained by the limited availability of resources, including spectrum, energy, computing, learning and inference capabilities. This paper challenges the prevailing approach to IoT communication, which prioritizes the usage of resources in order to guarantee perfect recovery, at the bit level, of the data transmitted by the sensors to the central unit. We propose a novel approach, called goal-oriented (GO) IoT system design, that transcends traditional bit-related metrics and focuses directly on the fulfillment of the goal motivating the exchange of data. The improvement is then achieved through a comprehensive system optimization, integrating sensing, communication, computation, learning, and control. We provide numerical results demonstrating the practical applications of our methodology in compelling use cases such as edge inference, cooperative sensing, and federated learning. These examples highlight the effectiveness and real-world implications of our proposed approach, with the potential to revolutionize IoT systems.

Multi-user Goal-oriented Communications with Energy-efficient Edge Resource Management

May 03, 2023

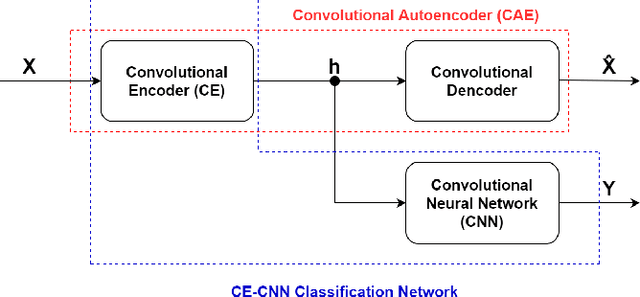

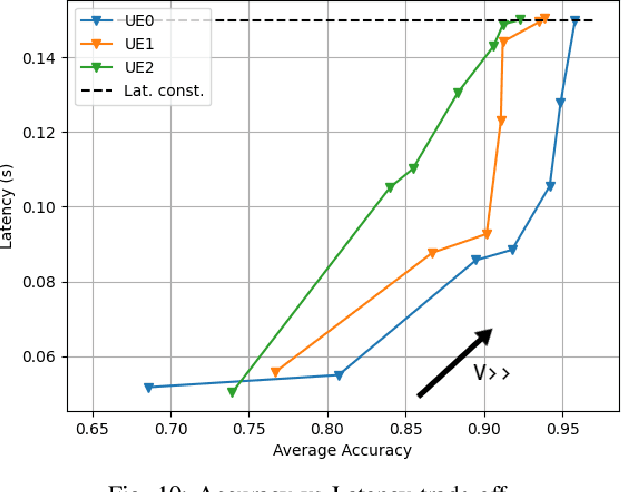

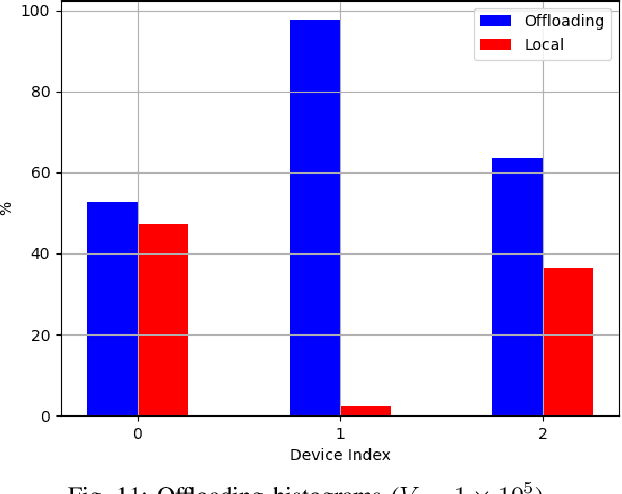

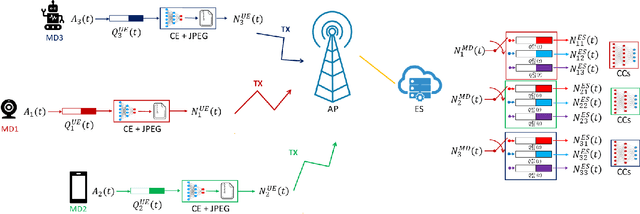

Abstract: Edge Learning (EL) pushes the computational resources toward the edge of 5G/6G networks to assist mobile users requesting delay-sensitive and energy-aware intelligent services. A common challenge in running inference tasks remotely is to extract and transmit only the features that are most significant for the inference task. From this perspective, EL can be effectively coupled with goal-oriented communications, whose aim is to transmit only the information *relevant* to perform the inference task, under prescribed accuracy, delay, and energy constraints. In this work, we consider a multi-user/single-server wireless network, where the users can opportunistically decide whether to perform the inference task by themselves or, alternatively, to offload the data to the edge server for remote processing. The data to be transmitted undergoes a goal-oriented compression stage performed using a convolutional encoder, jointly trained with a convolutional decoder running at the edge-server side. Employing Lyapunov optimization, we propose a method to jointly and dynamically optimize the selection of the most suitable encoding/decoding scheme, together with the allocation of computational and transmission resources, across all the users and the edge server. Extensive simulations confirm the effectiveness of the proposed approaches and highlight the trade-offs between energy, latency, and learning accuracy.
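Lyapunov optimization is commonly applied through a drift-plus-penalty rule, in which a virtual queue tracks the violation of a long-term constraint. The sketch below shows that generic rule for a single user choosing between two hypothetical operating modes; it is not the paper's multi-user formulation, and the mode values, the `delay_target`, and the trade-off parameter `v` are assumptions chosen for illustration.

```python
def drift_plus_penalty(options, delay_target, v, num_slots):
    """Generic drift-plus-penalty loop with one virtual queue.

    options: list of (energy, delay) pairs, one per selectable mode.
    Each slot picks the mode minimizing v * energy + z * delay, where z is
    a virtual queue that grows whenever the delay exceeds delay_target.
    Returns (average energy, average delay, final queue value).
    """
    z = 0.0
    total_energy = 0.0
    total_delay = 0.0
    for _ in range(num_slots):
        energy, delay = min(options, key=lambda o: v * o[0] + z * o[1])
        total_energy += energy
        total_delay += delay
        # Virtual queue update: accumulates constraint violation.
        z = max(0.0, z + delay - delay_target)
    return total_energy / num_slots, total_delay / num_slots, z


# Hypothetical modes: (energy, delay). The first is frugal but slow,
# the second is fast but costly.
modes = [(1.0, 1.0), (3.0, 0.2)]
avg_energy, avg_delay, backlog = drift_plus_penalty(
    modes, delay_target=0.6, v=2.0, num_slots=2000
)
```

A larger `v` puts more emphasis on energy at the price of a larger virtual-queue backlog, so the average delay takes longer to settle near its target.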

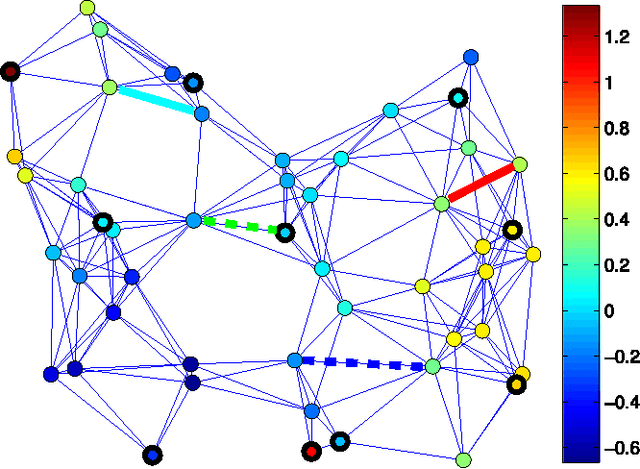

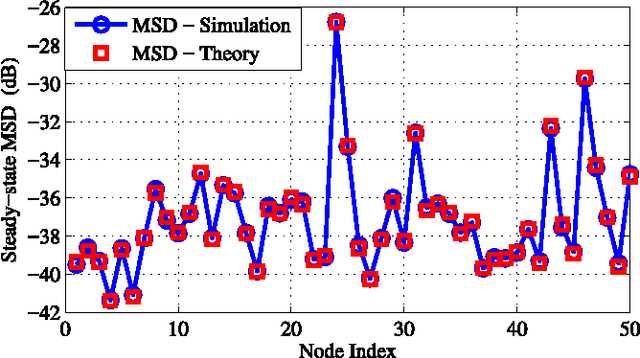

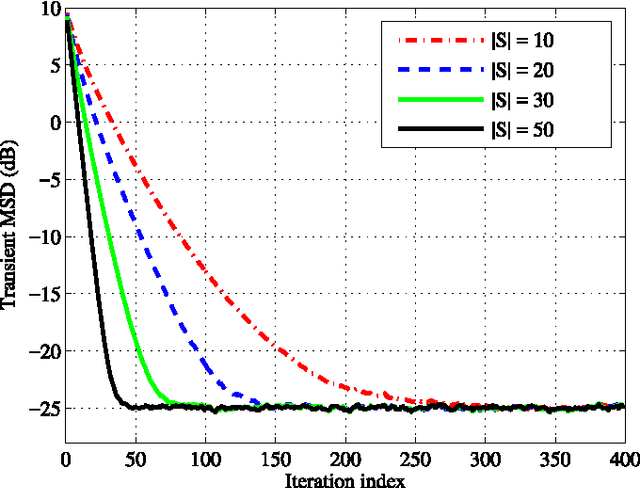

Adaptive Graph Signal Processing: Algorithms and Optimal Sampling Strategies

Sep 12, 2017

Abstract: The goal of this paper is to propose novel strategies for adaptive learning of signals defined over graphs, which are observed over a (randomly time-varying) subset of vertices. We recast two classical adaptive algorithms in the graph signal processing framework, namely, the least mean squares (LMS) and the recursive least squares (RLS) adaptive estimation strategies. For both methods, a detailed mean-square analysis illustrates the effect of random sampling on the adaptive reconstruction capability and the steady-state performance. Then, several probabilistic sampling strategies are proposed to design the sampling probability at each node in the graph, with the aim of optimizing the tradeoff between steady-state performance, graph sampling rate, and convergence rate of the adaptive algorithms. Finally, a distributed RLS strategy is derived and is shown to be convergent to its centralized counterpart. Numerical simulations carried out over both synthetic and real data illustrate the good performance of the proposed sampling and reconstruction strategies for (possibly distributed) adaptive learning of signals defined over graphs.

Adaptive Least Mean Squares Estimation of Graph Signals

Jul 11, 2016

Abstract: The aim of this paper is to propose a least mean squares (LMS) strategy for adaptive estimation of signals defined over graphs. Assuming the graph signal to be band-limited, over a known bandwidth, the method enables reconstruction, with guaranteed performance in terms of mean-square error, and tracking from a limited number of observations over a subset of vertices. A detailed mean square analysis provides the performance of the proposed method, and leads to several insights for designing useful sampling strategies for graph signals. Numerical results validate our theoretical findings, and illustrate the performance of the proposed method. Furthermore, to cope with the case where the bandwidth is not known beforehand, we propose a method that performs a sparse online estimation of the signal support in the (graph) frequency domain, which enables online adaptation of the graph sampling strategy. Finally, we apply the proposed method to build the power spatial density cartography of a given operational region in a cognitive network environment.
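An LMS-style reconstruction of a band-limited graph signal from a subset of vertices can be sketched as follows. The update used here, `x <- x + mu * B * D * (y - x)`, with `B` the projector onto the known band and `D` the vertex-sampling mask, is one common way to write such a recursion and is stated as an assumption, since the abstract does not give the formula. The ring graph, the three-frequency band, the step size, and the noiseless observations are also illustrative choices.

```python
import math


def ring_bandlimit_projector(n):
    """Projector B = U U^T onto the three lowest graph frequencies of an
    n-node ring, whose Laplacian eigenvectors are sampled cosines/sines."""
    basis = [
        [1.0 / math.sqrt(n)] * n,
        [math.sqrt(2.0 / n) * math.cos(2 * math.pi * i / n) for i in range(n)],
        [math.sqrt(2.0 / n) * math.sin(2 * math.pi * i / n) for i in range(n)],
    ]
    return [[sum(u[i] * u[j] for u in basis) for j in range(n)]
            for i in range(n)]


def graph_lms(projector, sampled, observations, mu):
    """Run x <- x + mu * B * D * (y - x) over a stream of observations.

    sampled is the set of observed vertex indices (the mask D); entries of
    each observation y outside this set are ignored.
    """
    n = len(projector)
    x = [0.0] * n
    for y in observations:
        # Residual restricted to the sampled vertices.
        masked = [(y[i] - x[i]) if i in sampled else 0.0 for i in range(n)]
        # Project the masked residual back onto the band-limited subspace.
        correction = [sum(projector[i][j] * masked[j] for j in range(n))
                      for i in range(n)]
        x = [x[i] + mu * correction[i] for i in range(n)]
    return x


n = 12
B = ring_bandlimit_projector(n)
truth = [1.0 + 0.5 * math.cos(2 * math.pi * i / n)
         - 0.3 * math.sin(2 * math.pi * i / n) for i in range(n)]
sampled = set(range(0, n, 2))  # observe every other vertex
estimate = graph_lms(B, sampled, [truth] * 60, mu=1.0)
max_error = max(abs(e - t) for e, t in zip(estimate, truth))
```

In this noiseless setting the estimate also matches the signal on the six unobserved vertices, because the signal lies in the assumed band and the sampled vertices are enough to identify it.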
