Sascha Hornauer

Improving Consistency in Vehicle Trajectory Prediction Through Preference Optimization

Jul 03, 2025

Abstract:Trajectory prediction is an essential step in the pipeline of an autonomous vehicle. Inaccurate or inconsistent predictions regarding the movement of agents in its surroundings lead to poorly planned maneuvers and potentially dangerous situations for the end-user. Current state-of-the-art deep-learning-based trajectory prediction models can achieve excellent accuracy on public datasets. However, when used in more complex, interactive scenarios, they often fail to capture important interdependencies between agents, leading to inconsistent predictions among agents in the traffic scene. Inspired by the efficacy of incorporating human preference into large language models, this work fine-tunes trajectory prediction models in multi-agent settings using preference optimization. By taking as input automatically calculated preference rankings among predicted futures in the fine-tuning process, our experiments--using state-of-the-art models on three separate datasets--show that we are able to significantly improve scene consistency while minimally sacrificing trajectory prediction accuracy and without adding any excess computational requirements at inference time.
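The abstract does not name the preference-optimization objective, so the following is only an illustrative sketch. It assumes a pairwise loss in the style of Direct Preference Optimization (DPO), a well-known objective from the language-model literature. The function name, the `beta` value, and the idea of passing per-scene log-likelihoods are all assumptions, not the authors' method.

```python
import math


def pairwise_preference_loss(logp_preferred, logp_rejected,
                             ref_logp_preferred, ref_logp_rejected,
                             beta=0.1):
    """DPO-style loss for one (preferred, rejected) pair of predicted futures.

    Each argument is a log-likelihood of a predicted future: the first two
    under the model being fine-tuned, the last two under a frozen reference
    copy. `beta` (hypothetical value) scales how strongly the model is pushed
    away from the reference.
    """
    margin = beta * ((logp_preferred - ref_logp_preferred)
                     - (logp_rejected - ref_logp_rejected))
    # -log(sigmoid(margin)) written as a numerically stable softplus(-margin).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Before fine-tuning, model and reference agree, so the margin is zero.
loss_at_start = pairwise_preference_loss(-3.0, -2.0, -3.0, -2.0)
# Once the model favours the preferred future more than the reference does,
# the loss drops.
loss_after = pairwise_preference_loss(-1.0, -4.0, -3.0, -2.0)
```

In a setup like this, the ranking that decides which future counts as "preferred" would come from the automatically calculated scene-consistency measure mentioned in the abstract; the sketch does not attempt to reproduce that measure.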

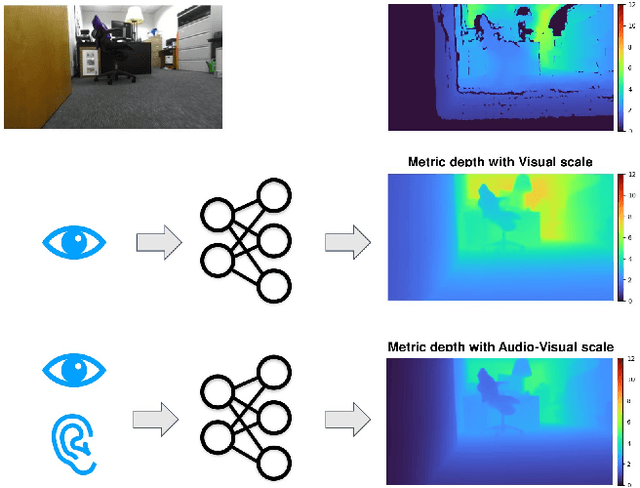

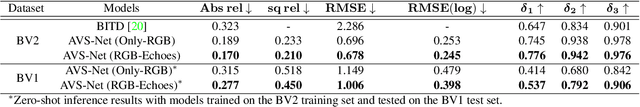

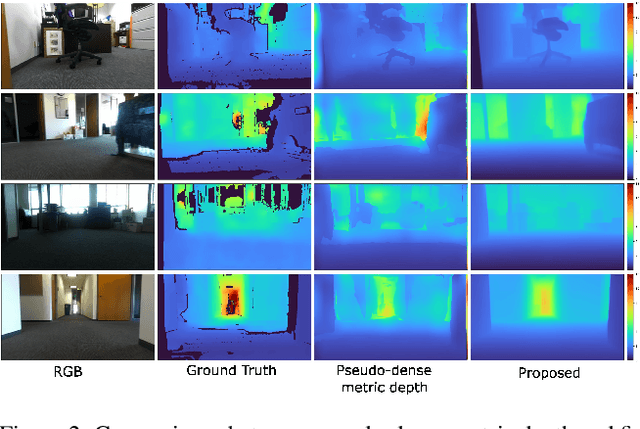

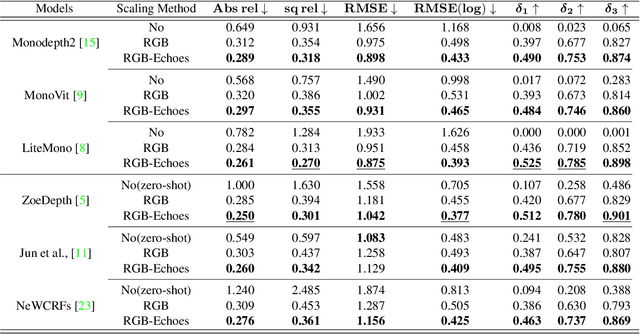

AVS-Net: Audio-Visual Scale Net for Self-supervised Monocular Metric Depth Estimation

Dec 02, 2024

Abstract:Metric depth prediction from monocular videos suffers from poor generalization between datasets and requires supervised depth data for scale-correct training. Self-supervised training using multi-view reconstruction can benefit from large-scale natural videos but does not provide correct scale, which limits its benefits. Recently, reflecting audible echoes off objects has been investigated for improved depth prediction and was shown to be sufficient to reconstruct objects at scale even without a visual signal. Because echoes travel at a fixed speed, they have the potential to resolve ambiguities in object scale and appearance. However, predicting depth end-to-end from sound and vision cannot benefit from unsupervised depth prediction approaches, which can process large-scale data without sound annotation. In this work we show how echoes can benefit depth prediction in two ways: when learning metric depth from supervised data, and as a supervisory signal for scale-correct self-supervised training. We show how we can improve the predictions of several state-of-the-art approaches and how the method can scale-correct a self-supervised depth approach.
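The reason a fixed propagation speed can pin down metric scale is the time-of-flight relation: a sound that returns after a round trip of t seconds has travelled to the reflector and back, so the reflector is at distance c·t/2. A minimal sketch follows, assuming sound in dry air at roughly 20 °C (about 343 m/s). The function name and the constant are illustrative and not taken from the paper.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees Celsius


def echo_distance_m(round_trip_s, speed_m_s=SPEED_OF_SOUND_M_S):
    """Distance to a reflector from the round-trip time of its echo."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The sound covers the distance twice (out and back), hence the halving.
    return speed_m_s * round_trip_s / 2.0


# An echo arriving 10 ms after emission implies a reflector about 1.7 m away.
distance = echo_distance_m(0.010)
```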

NeRAF: 3D Scene Infused Neural Radiance and Acoustic Fields

May 28, 2024

Abstract:Sound plays a major role in human perception, providing essential scene information alongside vision for understanding our environment. Despite progress in neural implicit representations, learning acoustics that match a visual scene is still challenging. We propose NeRAF, a method that jointly learns acoustic and radiance fields. NeRAF is designed as a Nerfstudio module for convenient access to realistic audio-visual generation. It synthesizes both novel views and spatialized audio at new positions, leveraging radiance field capabilities to condition the acoustic field with 3D scene information. At inference, each modality can be rendered independently and at spatially separated positions, providing greater versatility. We demonstrate the advantages of our method on the SoundSpaces dataset. NeRAF achieves substantial performance improvements over previous works while being more data-efficient. Furthermore, NeRAF enhances novel view synthesis of complex scenes trained with sparse data through cross-modal learning.

Mesoscale Traffic Forecasting for Real-Time Bottleneck and Shockwave Prediction

Feb 08, 2024

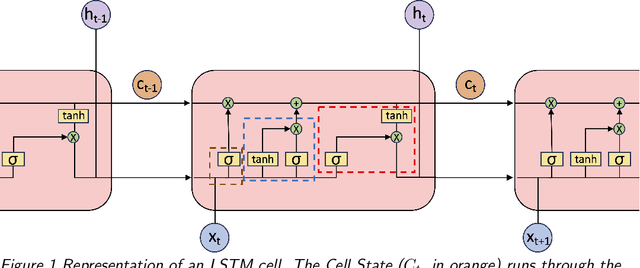

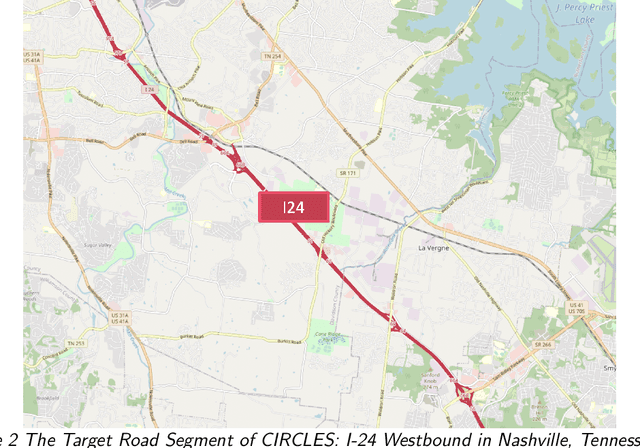

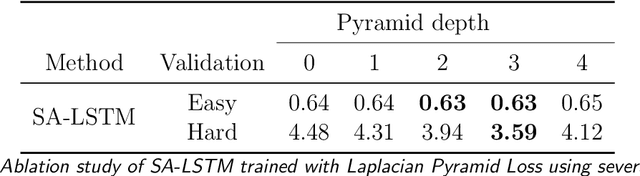

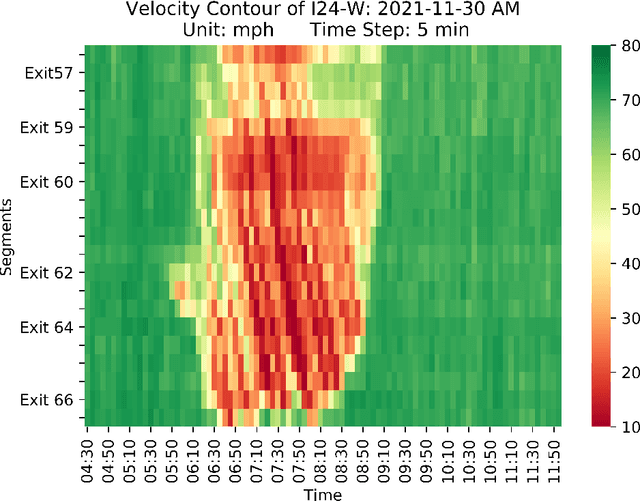

Abstract:Accurate real-time traffic state forecasting plays a pivotal role in traffic control research. In particular, the CIRCLES consortium project necessitates predictive techniques to mitigate the impact of data source delays. After the success of the MegaVanderTest experiment, this paper aims to overcome the current system limitations and develop a better-suited approach to improve real-time traffic state estimation for the next iterations of the experiment. In this paper, we introduce the SA-LSTM, a deep forecasting method integrating Self-Attention (SA) on the spatial dimension with Long Short-Term Memory (LSTM), yielding state-of-the-art results in real-time mesoscale traffic forecasting. We extend this approach to multi-step forecasting with the n-step SA-LSTM, which outperforms traditional multi-step forecasting methods in the trade-off between short-term and long-term predictions, all while operating in real-time.

MBAPPE: MCTS-Built-Around Prediction for Planning Explicitly

Sep 15, 2023

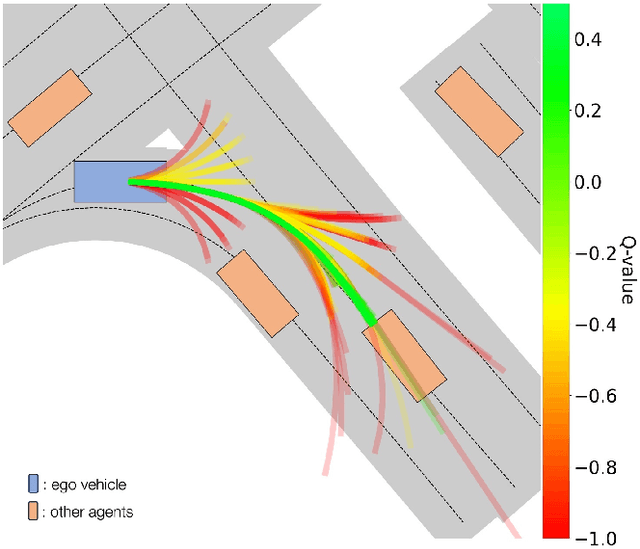

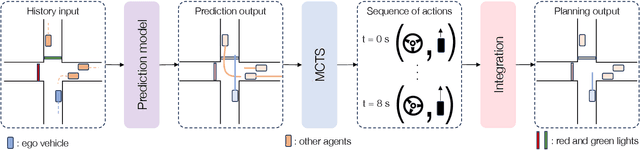

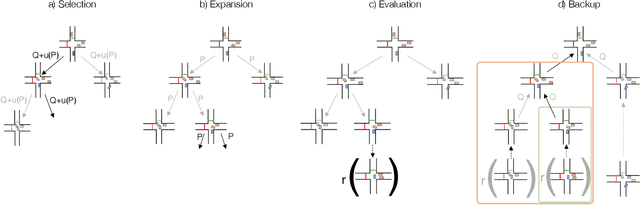

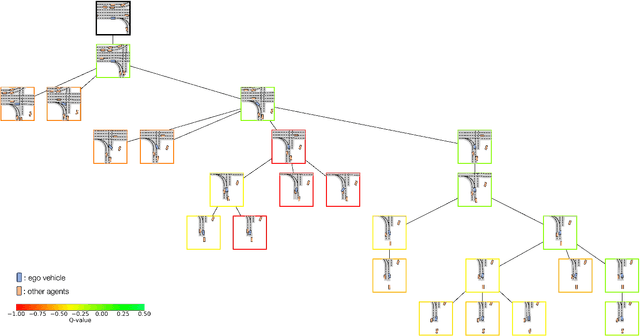

Abstract:We present MBAPPE, a novel approach to motion planning for autonomous driving combining tree search with a partially-learned model of the environment. Leveraging the inherent explainable exploration and optimization capabilities of Monte-Carlo Tree Search (MCTS), our method addresses complex decision-making in a dynamic environment. We propose a framework that combines MCTS with supervised learning, enabling the autonomous vehicle to effectively navigate through diverse scenarios. Experimental results demonstrate the effectiveness and adaptability of our approach, showcasing improved real-time decision-making and collision avoidance. This paper contributes to the field by providing a robust solution for motion planning in autonomous driving systems, enhancing their explainability and reliability.

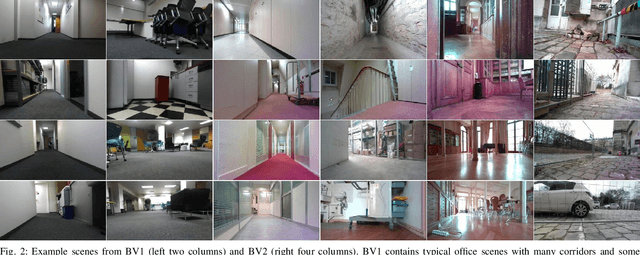

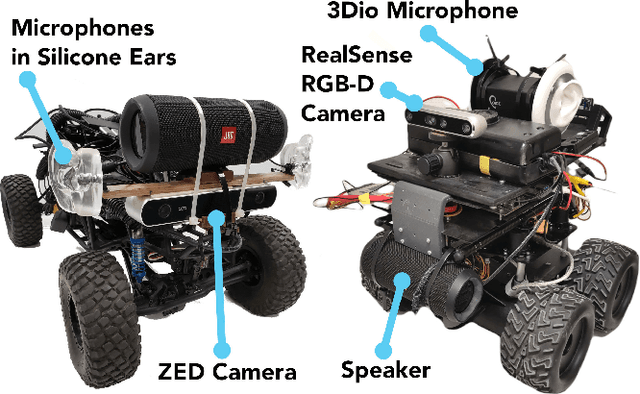

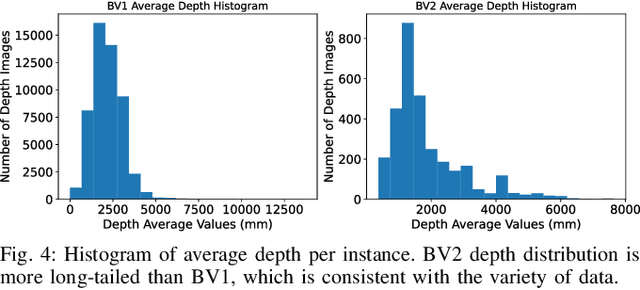

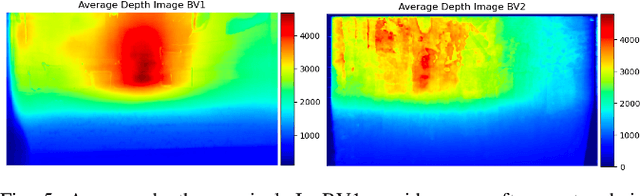

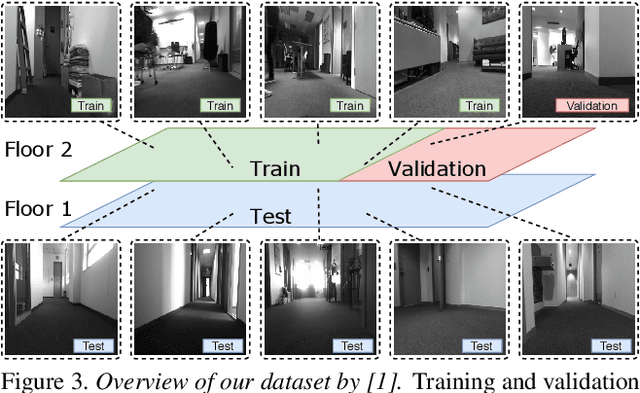

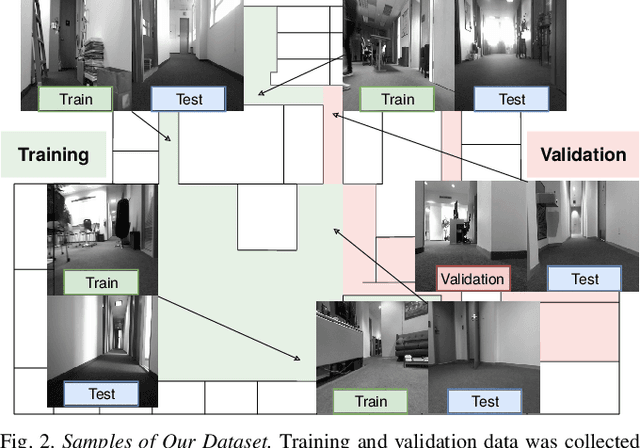

The Audio-Visual BatVision Dataset for Research on Sight and Sound

Mar 14, 2023

Abstract:Vision research has shown remarkable success in understanding our world, propelled by datasets of images and videos. Sensor data from radar, LiDAR and cameras has supported research in robotics and autonomous driving for at least a decade. However, while visual sensors may fail in some conditions, sound has recently shown potential to complement sensor data. Simulated room impulse responses (RIR) in 3D apartment models became a benchmark dataset for the community, fostering a range of audiovisual research. In simulation, depth is predictable from sound by learning bat-like perception with a neural network. Concurrently, the same was achieved in reality by using RGB-D images and echoes of chirping sounds. Biomimicking bat perception is an exciting new direction but needs dedicated datasets to explore the potential. Therefore, we collected the BatVision dataset to provide large-scale echoes in complex real-world scenes to the community. We equipped a robot with a speaker to emit chirps and a binaural microphone to record their echoes. Synchronized RGB-D images from the same perspective provide visual labels of traversed spaces. We sampled locations ranging from modern US office spaces to historic French university grounds, indoor and outdoor, with large architectural variety. This dataset will allow research on robot echolocation, general audio-visual tasks and sound phenomena unavailable in simulated data. We show promising results for audio-only depth prediction and show how state-of-the-art work developed for simulated data can also succeed on our dataset. The data can be downloaded at https://forms.gle/W6xtshMgoXGZDwsE7

GRI: General Reinforced Imitation and its Application to Vision-Based Autonomous Driving

Nov 16, 2021

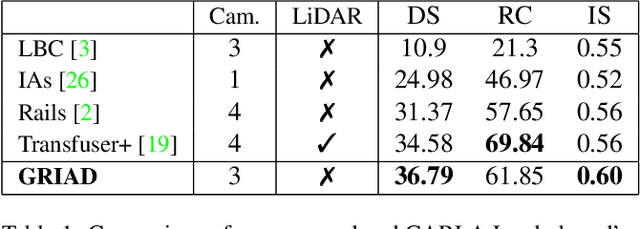

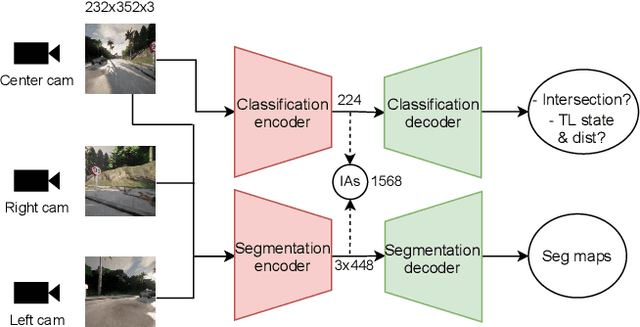

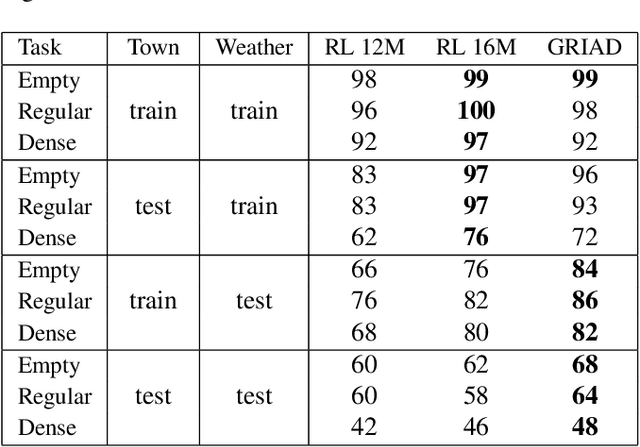

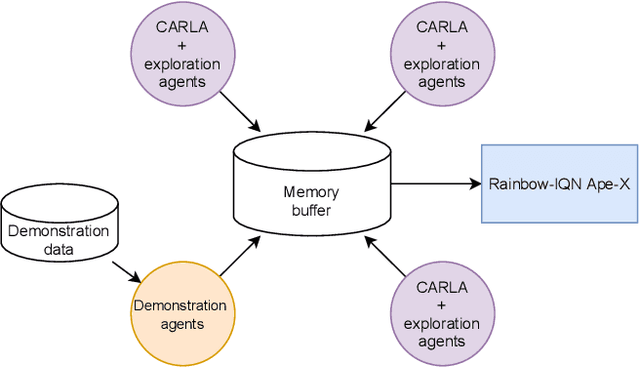

Abstract:Deep reinforcement learning (DRL) has been demonstrated to be effective for several complex decision-making applications such as autonomous driving and robotics. However, DRL is notoriously limited by its high sample complexity and its lack of stability. Prior knowledge, e.g. in the form of expert demonstrations, is often available but challenging to leverage to mitigate these issues. In this paper, we propose General Reinforced Imitation (GRI), a novel method which combines benefits from exploration and expert data and is straightforward to implement over any off-policy RL algorithm. We make one simplifying hypothesis: expert demonstrations can be seen as perfect data whose underlying policy gets a constant high reward. Based on this assumption, GRI introduces the notion of an offline demonstration agent. This agent sends expert data, which are processed both concurrently and indistinguishably with the experiences coming from the online RL exploration agent. We show that our approach enables major improvements on vision-based autonomous driving in urban environments. We further validate the GRI method on MuJoCo continuous control tasks with different off-policy RL algorithms. Our method ranked first on the CARLA Leaderboard and outperforms World on Rails, the previous state-of-the-art, by 17%.
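The core idea described above, expert transitions given a constant high reward and mixed indistinguishably with online experience, can be sketched as a batch sampler for an off-policy learner. This is a minimal illustration under stated assumptions, not the authors' implementation. The names `DEMO_REWARD`, `sample_mixed_batch`, and `demo_fraction`, as well as the tuple layouts, are hypothetical.

```python
import random

DEMO_REWARD = 1.0  # assumed value for the "constant high reward"


def sample_mixed_batch(demo_buffer, online_buffer, batch_size,
                       demo_fraction=0.25, rng=random):
    """Draw a training batch that mixes expert and online transitions.

    demo_buffer:   list of (state, action, next_state, done) expert tuples,
                   which carry no reward of their own.
    online_buffer: list of (state, action, reward, next_state, done) tuples
                   collected by the exploring agent.
    demo_fraction: hypothetical probability of drawing an expert tuple.
    """
    if not demo_buffer and not online_buffer:
        raise ValueError("both buffers are empty")
    batch = []
    for _ in range(batch_size):
        use_demo = demo_buffer and (
            not online_buffer or rng.random() < demo_fraction)
        if use_demo:
            state, action, next_state, done = rng.choice(demo_buffer)
            # Expert data is relabelled with the constant reward, after which
            # the learner cannot tell it apart from online experience.
            batch.append((state, action, DEMO_REWARD, next_state, done))
        else:
            batch.append(rng.choice(online_buffer))
    return batch


demos = [("s0", "a0", "s1", False), ("s1", "a1", "s2", True)]
online = [("s0", "a2", 0.1, "s3", False), ("s3", "a0", -0.5, "s4", True)]
batch = sample_mixed_batch(demos, online, batch_size=8)
```

Because every element of the batch has the same five-field layout, the downstream update of any off-policy algorithm can consume it unchanged, which matches the abstract's claim that the method is straightforward to add to such algorithms.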

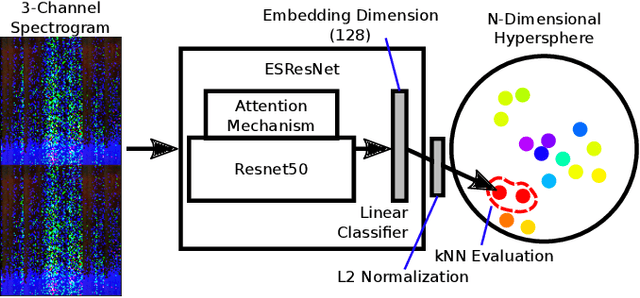

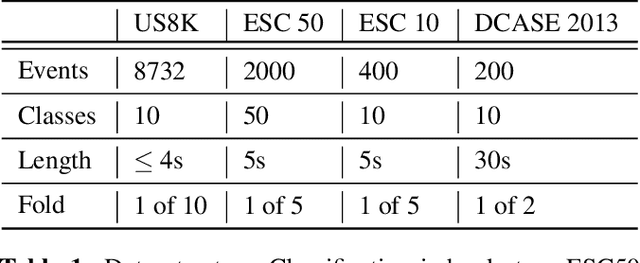

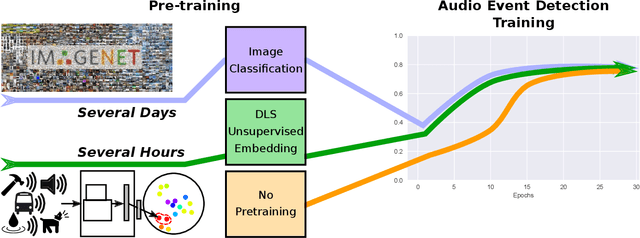

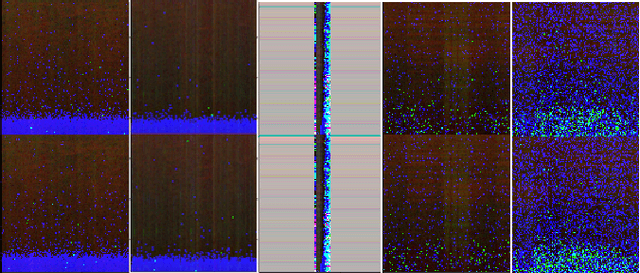

Unsupervised Discriminative Learning of Sounds for Audio Event Classification

May 20, 2021

Abstract:Recent progress in network-based audio event classification has shown the benefit of pre-training models on visual data such as ImageNet. While this process allows knowledge transfer across different domains, training a model on large-scale visual datasets is time-consuming. On several audio event classification benchmarks, we show a fast and effective alternative that pre-trains the model unsupervised, only on audio data, and yet delivers on-par performance with ImageNet pre-training. Furthermore, we show that our discriminative audio learning can be used to transfer knowledge across audio datasets and optionally include ImageNet pre-training.

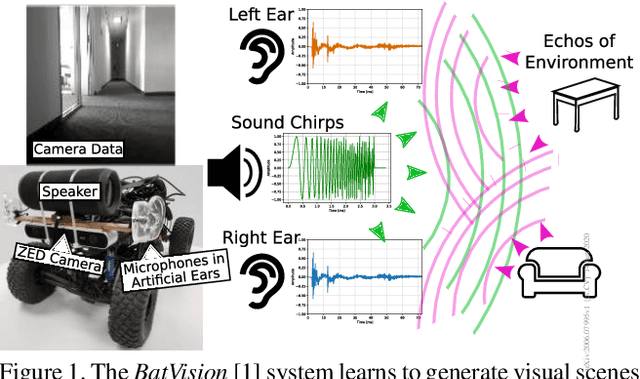

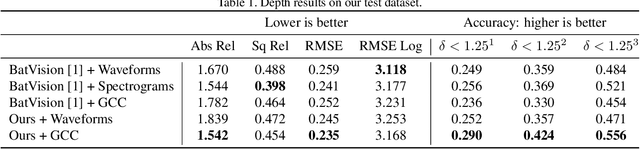

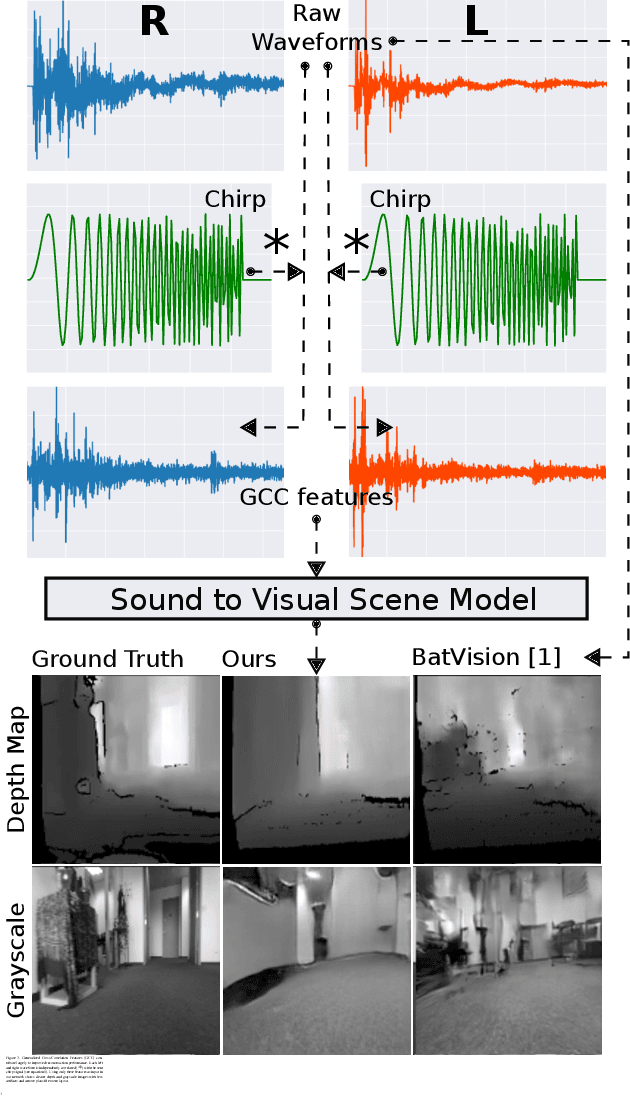

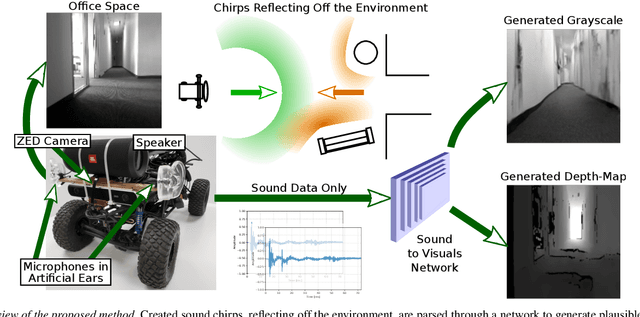

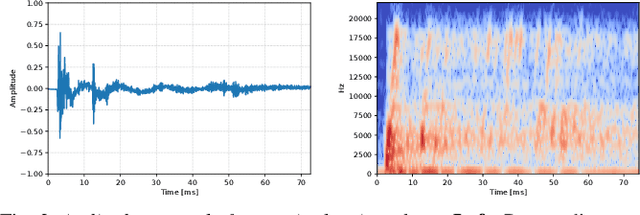

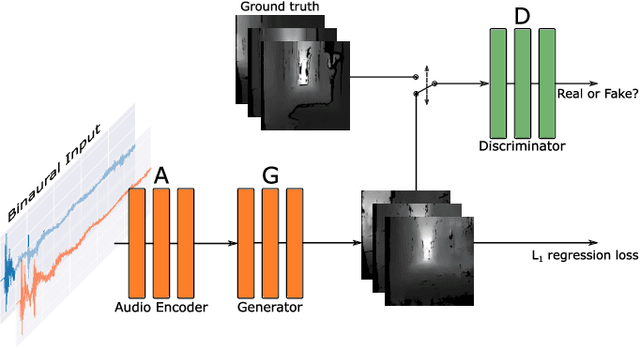

BatVision with GCC-PHAT Features for Better Sound to Vision Predictions

Jun 14, 2020

Abstract:Inspired by sophisticated echolocation abilities found in nature, we train a generative adversarial network to predict plausible depth maps and grayscale layouts from sound. To achieve this, our sound-to-vision model processes binaural echo-returns from chirping sounds. We build upon previous work with BatVision that consists of a sound-to-vision model and a self-collected dataset using our mobile robot and low-cost hardware. We improve on the previous model by introducing several changes, which lead to better depth and grayscale estimation and increased perceptual quality. Rather than using raw binaural waveforms as input, we generate generalized cross-correlation (GCC) features and use these as input instead. In addition, we change the model generator and base it on residual learning and use spectral normalization in the discriminator. We compare and present both quantitative and qualitative improvements over our previous BatVision model.
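For readers unfamiliar with the technique named in the title, the following is a minimal NumPy sketch of generalized cross-correlation with phase transform (GCC-PHAT) between two microphone channels. It shows the standard textbook formulation only. How the authors turn the correlation into network input features is not stated in the abstract, and the function name, parameters, and test signal are assumptions.

```python
import numpy as np


def gcc_phat(sig, ref, fs, max_delay_s=None):
    """Return (delay in seconds of `sig` relative to `ref`, correlation)."""
    n = len(sig) + len(ref)  # zero-pad so the correlation does not wrap
    cross = np.fft.rfft(sig, n=n) * np.conj(np.fft.rfft(ref, n=n))
    # PHAT weighting: discard magnitude and keep only the phase difference.
    cross /= np.abs(cross) + 1e-12
    cc = np.fft.irfft(cross, n=n)
    max_shift = n // 2
    if max_delay_s is not None:
        max_shift = max(1, min(int(fs * max_delay_s), max_shift))
    # Reorder so that index `max_shift` corresponds to zero lag.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    lag = int(np.argmax(np.abs(cc))) - max_shift
    return lag / fs, cc


# Synthetic check: the "right" channel is the "left" one delayed by 5 samples.
fs = 16000
rng = np.random.default_rng(0)
left = rng.standard_normal(1024)
right = np.concatenate((np.zeros(5), left[:-5]))
delay_s, correlation = gcc_phat(right, left, fs)
```

The correlation vector, rather than the single peak, is the kind of quantity that could serve as an input feature, since it encodes the relative arrival times of several echoes at once.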

BatVision: Learning to See 3D Spatial Layout with Two Ears

Dec 15, 2019

Abstract:Virtual camera images showing the correct layout of a space ahead can be generated purely by listening to the reflections of chirping sounds. Many species evolved sophisticated non-visual perception while artificial systems fall behind. Radar and ultrasound are used where cameras fail, but provide very limited information or require large, complex and expensive sensors. Yet sound is used effortlessly by dolphins, bats, whales and humans as a sensor modality with many advantages over vision. However, it is challenging to harness useful and detailed information for machine perception. We train a network to generate representations of the world in 2D and 3D only from sounds, sent by one speaker and captured by two microphones. Inspired by examples from nature, we emit short frequency-modulated sound chirps and record returning echoes through an artificial human pinnae pair. We then learn to generate disparity-like depth maps and grayscale images from the echoes in an end-to-end fashion. With only low-cost equipment, our models show good reconstruction performance while being robust to errors and even overcoming limitations of our vision-based ground truth. Finally, we introduce a large dataset consisting of binaural sound signals synchronised in time with both RGB images and depth maps.
