Rui Miao

Physics-informed Diffusion Generation for Geomagnetic Map Interpolation

Jan 31, 2026

Abstract: Geomagnetic map interpolation aims to infer unobserved geomagnetic data at spatial points, with important applications in navigation and resource exploration. However, existing methods for scattered data interpolation are not specifically designed for geomagnetic maps, which inevitably leads to suboptimal performance because they do not account for detection noise or the laws of physics. Therefore, we propose a Physics-informed Diffusion Generation framework (PDG) to interpolate incomplete geomagnetic maps. First, we design a physics-informed mask strategy to guide the diffusion generation process based on a local receptive field, effectively eliminating noise interference. Second, we impose a physics-informed constraint on the diffusion generation results following the kriging principle of geomagnetic maps, ensuring strict adherence to the laws of physics. Extensive experiments and in-depth analyses on four real-world datasets demonstrate the superiority of PDG and the effectiveness of each of its components.

Where Did This Sentence Come From? Tracing Provenance in LLM Reasoning Distillation

Dec 24, 2025

Abstract: Reasoning distillation has attracted increasing attention. It typically leverages a large teacher model to generate reasoning paths, which are then used to fine-tune a student model so that it mimics the teacher's behavior in training contexts. However, previous approaches have lacked a detailed analysis of the origins of the distilled model's capabilities. It remains unclear whether the student can maintain consistent behaviors with the teacher in novel test-time contexts, or whether it regresses to its original output patterns, raising concerns about the generalization of distilled models. To analyze this question, we introduce a cross-model Reasoning Distillation Provenance Tracing framework. For each action (e.g., a sentence) produced by the distilled model, we obtain the predictive probabilities assigned by the teacher, the original student, and the distilled model under the same context. By comparing these probabilities, we classify each action into different categories. By systematically disentangling the provenance of each action, we experimentally demonstrate that, in test-time contexts, the distilled model can indeed generate teacher-originated actions, which correlate with and plausibly explain the observed performance of the distilled model. Building on this analysis, we further propose a teacher-guided data selection method. Unlike prior approaches that rely on heuristics, our method directly compares teacher-student divergences on the training data, providing a principled selection criterion. We validate the effectiveness of our approach across multiple representative teacher models and diverse student models. The results highlight the utility of our provenance-tracing framework and underscore its promise for reasoning distillation. We hope to share Reasoning Distillation Provenance Tracing and our insights into reasoning distillation with the community.
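The probability comparison described in this abstract can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not name the categories or the decision rule, so the labels (`teacher-originated`, `student-originated`, `shared`, `distilled-only`), the function `classify_action`, and the log-probability `margin` are all hypothetical.

```python
import math

def classify_action(lp_teacher, lp_student, lp_distilled, margin=1.0):
    """Assign a provenance label to one action (e.g. a sentence).

    All three inputs are log-probabilities of the same action under the
    same context. The labels and the margin rule are assumptions made for
    this sketch, not the categories used in the paper.
    """
    # The distilled model is far more confident than either reference model.
    if lp_distilled - max(lp_teacher, lp_student) > margin:
        return "distilled-only"
    # The teacher explains the action much better than the original student.
    if lp_teacher - lp_student > margin:
        return "teacher-originated"
    # The original student explains the action much better than the teacher.
    if lp_student - lp_teacher > margin:
        return "student-originated"
    # Both reference models find the action about equally likely.
    return "shared"

# Hypothetical probabilities for a single action.
label = classify_action(math.log(0.6), math.log(0.05), math.log(0.5))
print(label)
```

A margin in log-probability space keeps the rule independent of sequence length only if the inputs are length-normalized; that normalization is left to the caller here.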

Explainable and Fine-Grained Safeguarding of LLM Multi-Agent Systems via Bi-Level Graph Anomaly Detection

Dec 21, 2025

Abstract: Large language model (LLM)-based multi-agent systems (MAS) have shown strong capabilities in solving complex tasks. As MAS become increasingly autonomous in various safety-critical tasks, detecting malicious agents has become a critical security concern. Although existing graph anomaly detection (GAD)-based defenses can identify anomalous agents, they mainly rely on coarse sentence-level information and overlook fine-grained lexical cues, leading to suboptimal performance. Moreover, the lack of interpretability in these methods limits their reliability and real-world applicability. To address these limitations, we propose XG-Guard, an explainable and fine-grained safeguarding framework for detecting malicious agents in MAS. To incorporate both coarse and fine-grained textual information for anomalous agent identification, we utilize a bi-level agent encoder to jointly model the sentence- and token-level representations of each agent. A theme-based anomaly detector further captures the evolving discussion focus in MAS dialogues, while a bi-level score fusion mechanism quantifies token-level contributions for explanation. Extensive experiments across diverse MAS topologies and attack scenarios demonstrate robust detection performance and strong interpretability of XG-Guard.

BlindGuard: Safeguarding LLM-based Multi-Agent Systems under Unknown Attacks

Aug 11, 2025

Abstract: The security of LLM-based multi-agent systems (MAS) is critically threatened by propagation vulnerability, where malicious agents can distort collective decision-making through inter-agent message interactions. While existing supervised defense methods demonstrate promising performance, they may be impractical in real-world scenarios due to their heavy reliance on labeled malicious agents to train a supervised malicious detection model. To enable practical and generalizable MAS defenses, in this paper, we propose BlindGuard, an unsupervised defense method that learns without requiring any attack-specific labels or prior knowledge of malicious behaviors. To this end, we establish a hierarchical agent encoder to capture individual, neighborhood, and global interaction patterns of each agent, providing a comprehensive understanding for malicious agent detection. Meanwhile, we design a corruption-guided detector that consists of directional noise injection and contrastive learning, allowing effective detection model training solely on normal agent behaviors. Extensive experiments show that BlindGuard effectively detects diverse attack types (i.e., prompt injection, memory poisoning, and tool attack) across MAS with various communication patterns while maintaining superior generalizability compared to supervised baselines. The code is available at: https://github.com/MR9812/BlindGuard.

Understanding the Information Propagation Effects of Communication Topologies in LLM-based Multi-Agent Systems

May 29, 2025

Abstract: The communication topology in large language model-based multi-agent systems fundamentally governs inter-agent collaboration patterns, critically shaping both the efficiency and effectiveness of collective decision-making. While recent studies on automated communication topology design tend to construct sparse structures for efficiency, they often overlook why and when sparse and dense topologies help or hinder collaboration. In this paper, we present a causal framework to analyze how agent outputs, whether correct or erroneous, propagate under topologies with varying sparsity. Our empirical studies reveal that moderately sparse topologies, which effectively suppress error propagation while preserving beneficial information diffusion, typically achieve optimal task performance. Guided by this insight, we propose a novel topology design approach, EIB-Learner, that balances error suppression and beneficial information propagation by fusing connectivity patterns from both dense and sparse graphs. Extensive experiments show the superior effectiveness, communication cost, and robustness of EIB-Learner.

Reinforcement Learning for Individual Optimal Policy from Heterogeneous Data

May 14, 2025

Abstract: Offline reinforcement learning (RL) aims to find optimal policies in dynamic environments in order to maximize the expected total rewards by leveraging pre-collected data. Learning from heterogeneous data is one of the fundamental challenges in offline RL. Traditional methods focus on learning an optimal policy for all individuals with pre-collected data from a single episode or homogeneous batch episodes, and thus, may result in a suboptimal policy for a heterogeneous population. In this paper, we propose an individualized offline policy optimization framework for heterogeneous time-stationary Markov decision processes (MDPs). The proposed heterogeneous model with individual latent variables enables us to efficiently estimate the individual Q-functions, and our Penalized Pessimistic Personalized Policy Learning (P4L) algorithm guarantees a fast rate on the average regret under a weak partial coverage assumption on behavior policies. In addition, our simulation studies and a real data application demonstrate the superior numerical performance of the proposed method compared with existing methods.

SMPR: A structure-enhanced multimodal drug-disease prediction model for drug repositioning and cold start

Mar 17, 2025

Abstract: Repositioning drug-disease relationships has long been an active field of research. However, actual cases of biologically validated drug repositioning remain very limited, and existing models have not yet fully utilized the structural information of the drug. Furthermore, most repositioning models are only used to complete the relationship matrix, and their practicality is poor when dealing with drug cold start problems. This paper proposes a structure-enhanced multimodal relationship prediction model (SMPR). SMPR applies the Mol2vec method to the SMILES structure of each drug to generate drug embeddings, and learns disease embeddings through graph neural networks over a heterogeneous network. From these embeddings, a drug-disease relationship matrix is constructed. In addition, to make the model easier to use, SMPR provides a cold start interface that combines structural similarity with the repositioning results to predict drug-related diseases simply and quickly. The repositioning ability and cold start capability of the model are verified from multiple perspectives. The AUC and AUCPR scores for repositioning reach 99% and 61% respectively, and the AUC for cold start reaches 80%. In particular, the cold start recall exceeds 70%, which means that SMPR is more sensitive to positive samples. Finally, case analysis is used to verify the practical value of the model, and visual analysis directly demonstrates how the structural information improves the model. For quick use, we also provide local deployment of the model and package it into an executable program.

Mamba-Based Graph Convolutional Networks: Tackling Over-smoothing with Selective State Space

Jan 26, 2025

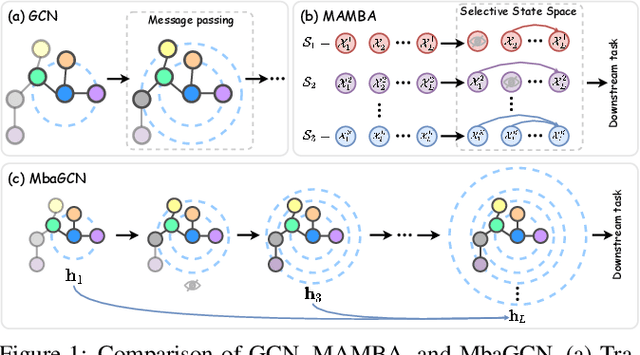

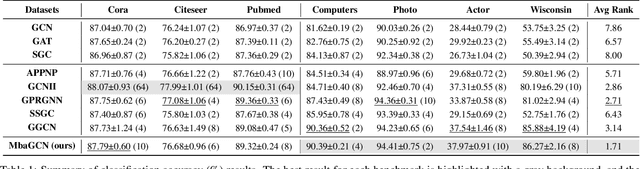

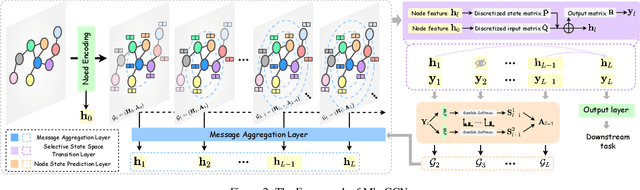

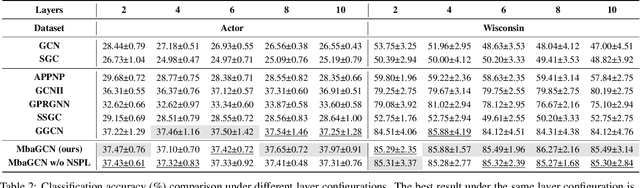

Abstract: Graph Neural Networks (GNNs) have shown great success in various graph-based learning tasks. However, they often face the issue of over-smoothing as the model depth increases, which causes all node representations to converge to a single value and become indistinguishable. This issue stems from the inherent limitations of GNNs, which struggle to distinguish the importance of information from different neighborhoods. In this paper, we introduce MbaGCN, a novel graph convolutional architecture that draws inspiration from the Mamba paradigm, originally designed for sequence modeling. MbaGCN presents a new backbone for GNNs, consisting of three key components: the Message Aggregation Layer, the Selective State Space Transition Layer, and the Node State Prediction Layer. These components work in tandem to adaptively aggregate neighborhood information, providing greater flexibility and scalability for deep GNN models. While MbaGCN may not consistently outperform all existing methods on each dataset, it provides a foundational framework that demonstrates the effective integration of the Mamba paradigm into graph representation learning. Through extensive experiments on benchmark datasets, we demonstrate that MbaGCN paves the way for future advancements in graph neural network research.
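The over-smoothing effect mentioned in this abstract can be reproduced with a toy example. The sketch below is not MbaGCN; it assumes plain mean aggregation with self-loops on a hypothetical four-node path graph, and the helper names `propagate` and `spread` are illustrative. Stacking many such layers drives all node features toward the same value.

```python
def propagate(features, neighbors):
    """One round of mean aggregation over each node and its neighbors (self-loop included)."""
    return [
        (features[i] + sum(features[j] for j in neighbors[i])) / (1 + len(neighbors[i]))
        for i in range(len(features))
    ]

def spread(features):
    """Gap between the largest and smallest node feature."""
    return max(features) - min(features)

# Hypothetical path graph 0 - 1 - 2 - 3 with one scalar feature per node.
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
features = [0.0, 1.0, 2.0, 3.0]

initial_spread = spread(features)
for _ in range(50):  # 50 stacked aggregation layers, no learned weights
    features = propagate(features, neighbors)
final_spread = spread(features)

print(initial_spread, final_spread)
```

After enough rounds the nodes can no longer be told apart from their features alone, which is the failure mode that selective aggregation schemes aim to avoid.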

Graph Defense Diffusion Model

Jan 20, 2025

Abstract: Graph Neural Networks (GNNs) demonstrate significant potential in various applications but remain highly vulnerable to adversarial attacks, which can greatly degrade their performance. Existing graph purification methods attempt to address this issue by filtering attacked graphs; however, they struggle to effectively defend against multiple types of adversarial attacks simultaneously due to their limited flexibility, and they lack comprehensive modeling of graph data due to their heavy reliance on heuristic prior knowledge. To overcome these challenges, we propose a more versatile approach for defending against adversarial attacks on graphs. In this work, we introduce the Graph Defense Diffusion Model (GDDM), a flexible purification method that leverages the denoising and modeling capabilities of diffusion models. The iterative nature of diffusion models aligns well with the stepwise process of adversarial attacks, making them particularly suitable for defense. By iteratively adding and removing noise, GDDM effectively purifies attacked graphs, restoring their original structure and features. Our GDDM consists of two key components: (1) a Graph Structure-Driven Refiner, which preserves the basic fidelity of the graph during the denoising process and ensures that the generated graph remains consistent with the original scope; and (2) a Node Feature-Constrained Regularizer, which removes residual impurities from the denoised graph, further enhancing the purification effect. Additionally, we design tailored denoising strategies to handle different types of adversarial attacks, improving the model's adaptability to various attack scenarios. Extensive experiments conducted on three real-world datasets demonstrate that GDDM outperforms state-of-the-art methods in defending against a wide range of adversarial attacks, showcasing its robustness and effectiveness.

Unifying Unsupervised Graph-Level Anomaly Detection and Out-of-Distribution Detection: A Benchmark

Jun 21, 2024

Abstract: To build safe and reliable graph machine learning systems, unsupervised graph-level anomaly detection (GLAD) and unsupervised graph-level out-of-distribution (OOD) detection (GLOD) have received significant attention in recent years. Although these two lines of research share the same objective, they have been studied independently in the community due to distinct evaluation setups, creating a gap that hinders the application and evaluation of methods from one to the other. To bridge the gap, in this work, we present a Unified Benchmark for unsupervised Graph-level OOD and anomaly Detection (UB-GOLD), a comprehensive evaluation framework that unifies GLAD and GLOD under the concept of generalized graph-level OOD detection. Our benchmark encompasses 35 datasets spanning four practical anomaly and OOD detection scenarios, facilitating the comparison of 16 representative GLAD/GLOD methods. We conduct multi-dimensional analyses to explore the effectiveness, generalizability, robustness, and efficiency of existing methods, shedding light on their strengths and limitations. Furthermore, we provide an open-source codebase (https://github.com/UB-GOLD/UB-GOLD) of UB-GOLD to foster reproducible research and outline potential directions for future investigations based on our insights.
