Robert Pollice

Show, Don't Tell: Evaluating Large Language Models Beyond Textual Understanding with ChildPlay

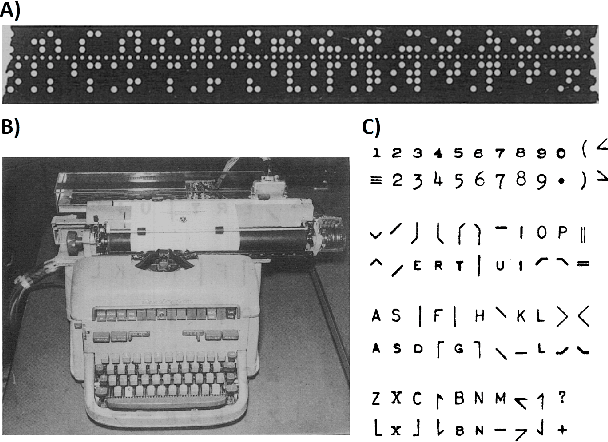

Jul 12, 2024

Abstract: We explore the hypothesis that LLMs, such as GPT-3.5 and GPT-4, possess broader cognitive functions, particularly in non-linguistic domains. Our approach extends beyond standard linguistic benchmarks by incorporating games like Tic-Tac-Toe, Connect Four, and Battleship, encoded via ASCII, to assess strategic thinking and decision-making. To evaluate the models' ability to generalize beyond their training data, we introduce two additional games. The first game, LEGO Connect Language (LCL), tests the models' capacity to understand spatial logic and follow assembly instructions. The second game, the game of shapes, challenges the models to identify shapes represented by 1s within a matrix of zeros, further testing their spatial reasoning skills. This "show, don't tell" strategy uses games instead of simply querying the models. Our results show that, despite their proficiency on standard benchmarks, the ability of GPT-3.5 and GPT-4 to play and reason about fully observable games without pre-training is mediocre. Both models fail to anticipate losing moves in Tic-Tac-Toe and Connect Four, and they are unable to play Battleship correctly. While GPT-4 shows some success in the game of shapes, both models fail at the assembly tasks presented in the LCL game. These results suggest that while GPT models can emulate conversational proficiency and basic rule comprehension, their performance in strategic gameplay and spatial reasoning tasks is very limited. Importantly, this reveals a blind spot in current LLM benchmarks that we highlight with our gameplay benchmark suite ChildPlay (https://github.com/child-play-neurips/child-play). Our findings provide a cautionary tale about claims of emergent intelligence and reasoning capabilities of LLMs that are roughly the size of GPT-3.5 and GPT-4.
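To make the game of shapes concrete, the following is a minimal sketch of how a shape could be encoded as 1s within a matrix of zeros and rendered as text for a prompt. It is an illustration only and is not taken from the ChildPlay repository: the function names (`draw_square`, `draw_right_triangle`, `render`, `classify`), the grid size, and the toy reference classifier are all assumptions.

```python
from typing import List

Grid = List[List[int]]


def _empty(size: int) -> Grid:
    """Return a size x size grid filled with zeros."""
    return [[0] * size for _ in range(size)]


def draw_square(size: int, top: int, left: int, side: int) -> Grid:
    """Hypothetical helper: a filled square of 1s inside a grid of 0s."""
    if top < 0 or left < 0 or top + side > size or left + side > size:
        raise ValueError("square does not fit in the grid")
    grid = _empty(size)
    for r in range(top, top + side):
        for c in range(left, left + side):
            grid[r][c] = 1
    return grid


def draw_right_triangle(size: int, top: int, left: int, height: int) -> Grid:
    """Hypothetical helper: row i of the triangle holds i + 1 ones."""
    if top < 0 or left < 0 or top + height > size or left + height > size:
        raise ValueError("triangle does not fit in the grid")
    grid = _empty(size)
    for i in range(height):
        for c in range(left, left + i + 1):
            grid[top + i][c] = 1
    return grid


def render(grid: Grid) -> str:
    """Serialize the grid as space-separated rows, one row per line."""
    return "\n".join(" ".join(str(v) for v in row) for row in grid)


def classify(grid: Grid) -> str:
    """Toy reference answer: 'square' if the 1s exactly fill a square
    bounding box, 'empty' if there are no 1s, otherwise 'other'."""
    cells = [(r, c) for r, row in enumerate(grid)
             for c, v in enumerate(row) if v == 1]
    if not cells:
        return "empty"
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    if height == width and len(cells) == height * width:
        return "square"
    return "other"


if __name__ == "__main__":
    print(render(draw_square(6, 1, 2, 3)))
```

The rendered text is what a model would be shown; a reference answer such as the one returned by `classify` could then be compared against the model's reply. How the benchmark itself scores answers is not described in the abstract.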

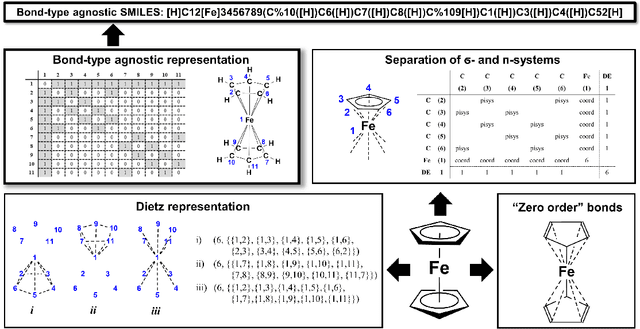

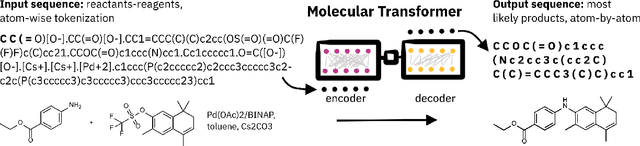

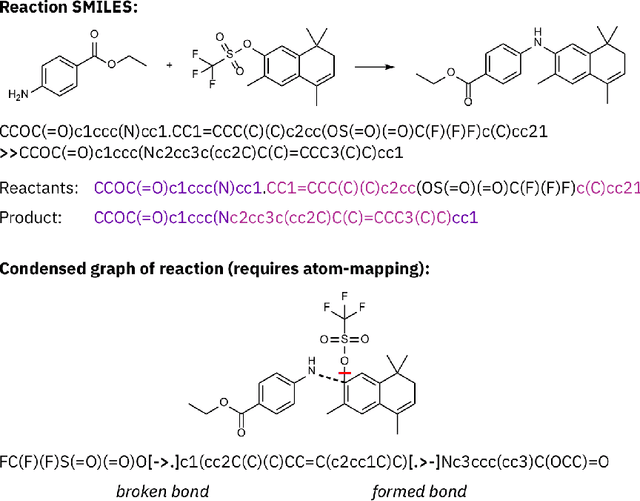

Recent advances in the Self-Referencing Embedding Strings (SELFIES) library

Feb 07, 2023

Abstract: String-based molecular representations play a crucial role in cheminformatics applications, and with the growing success of deep learning in chemistry, have been readily adopted into machine learning pipelines. However, traditional string-based representations such as SMILES are often prone to syntactic and semantic errors when produced by generative models. To address these problems, a novel representation, SELF-referencIng Embedded Strings (SELFIES), was proposed that is inherently 100% robust, alongside an accompanying open-source implementation. Since then, we have generalized SELFIES to support a wider range of molecules and semantic constraints and streamlined its underlying grammar. We have implemented this updated representation in subsequent versions of the selfies library, where we have also made major advances with respect to design, efficiency, and supported features. Hence, we present the current status of the selfies library (version 2.1.1) in this manuscript.

On scientific understanding with artificial intelligence

Apr 04, 2022

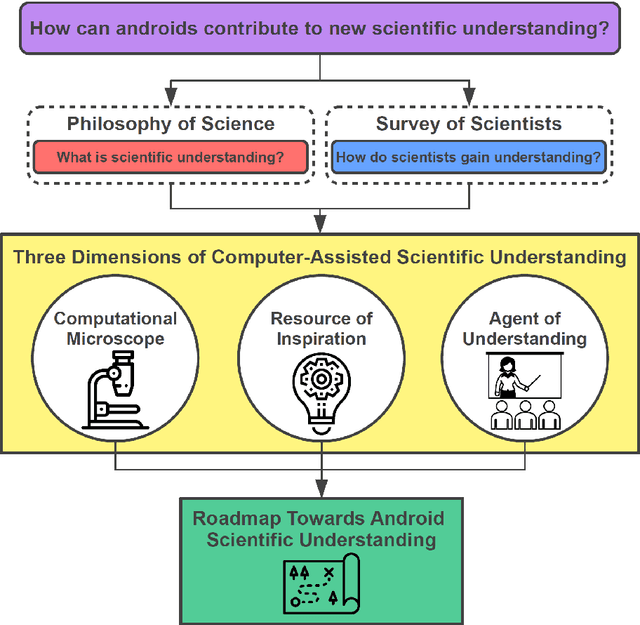

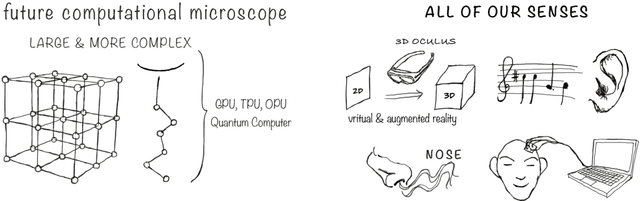

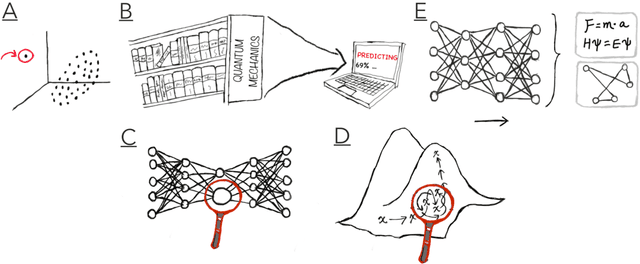

Abstract: Imagine an oracle that correctly predicts the outcome of every particle physics experiment, the products of every chemical reaction, or the function of every protein. Such an oracle would revolutionize science and technology as we know them. However, as scientists, we would not be satisfied with the oracle itself. We want more. We want to comprehend how the oracle conceived these predictions. This feat, denoted as scientific understanding, has frequently been recognized as the essential aim of science. Now, the ever-growing power of computers and artificial intelligence poses one ultimate question: How can advanced artificial systems contribute to scientific understanding or achieve it autonomously? We are convinced that this is not a mere technical question but lies at the core of science. Therefore, here we set out to answer where we are and where we can go from here. We first seek advice from the philosophy of science to understand scientific understanding. Then we review the current state of the art, both from literature and by collecting dozens of anecdotes from scientists about how they acquired new conceptual understanding with the help of computers. Those combined insights help us to define three dimensions of android-assisted scientific understanding: The android as a I) computational microscope, II) resource of inspiration and the ultimate, not yet existent III) agent of understanding. For each dimension, we explain new avenues to push beyond the status quo and unleash the full power of artificial intelligence's contribution to the central aim of science. We hope our perspective inspires and focuses research towards androids that get new scientific understanding and ultimately bring us closer to true artificial scientists.

SELFIES and the future of molecular string representations

Mar 31, 2022

Abstract: Artificial intelligence (AI) and machine learning (ML) are expanding in popularity for broad applications to challenging tasks in chemistry and materials science. Examples include the prediction of properties, the discovery of new reaction pathways, or the design of new molecules. The machine needs to read and write fluently in a chemical language for each of these tasks. Strings are a common tool to represent molecular graphs, and the most popular molecular string representation, SMILES, has powered cheminformatics since the late 1980s. However, in the context of AI and ML in chemistry, SMILES has several shortcomings; most pertinently, most combinations of symbols lead to invalid results with no valid chemical interpretation. To overcome this issue, a new language for molecules was introduced in 2020 that guarantees 100% robustness: SELFIES (SELF-referencIng Embedded Strings). SELFIES has since simplified and enabled numerous new applications in chemistry. In this manuscript, we look to the future and discuss molecular string representations, along with their respective opportunities and challenges. We propose 16 concrete Future Projects for robust molecular representations. These involve the extension toward new chemical domains, exciting questions at the interface of AI and robust languages and interpretability for both humans and machines. We hope that these proposals will inspire several follow-up works exploiting the full potential of molecular string representations for the future of AI in chemistry and materials science.

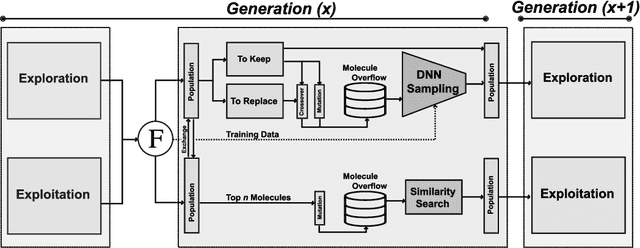

JANUS: Parallel Tempered Genetic Algorithm Guided by Deep Neural Networks for Inverse Molecular Design

Jun 07, 2021

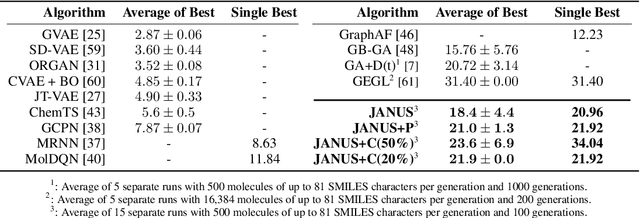

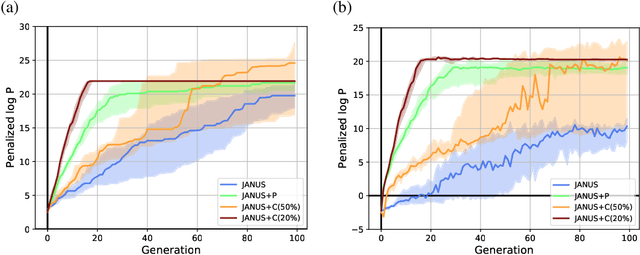

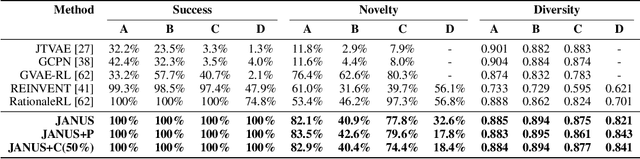

Abstract: Inverse molecular design, i.e., designing molecules with specific target properties, can be posed as an optimization problem. High-dimensional optimization tasks in the natural sciences are commonly tackled via population-based metaheuristic optimization algorithms such as evolutionary algorithms. However, expensive property evaluation, which is often required, can limit the widespread use of such approaches as the associated cost can become prohibitive. Herein, we present JANUS, a genetic algorithm that is inspired by parallel tempering. It propagates two populations, one for exploration and another for exploitation, improving optimization by reducing expensive property evaluations. Additionally, JANUS is augmented by a deep neural network that approximates molecular properties via active learning for enhanced sampling of the chemical space. Our method uses the SELFIES molecular representation and the STONED algorithm for the efficient generation of structures, and outperforms other generative models in common inverse molecular design tasks, achieving state-of-the-art performance.
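The two-population idea can be illustrated with a toy genetic algorithm on bit strings. This is a sketch under strong assumptions and not the JANUS implementation: the objective (counting 1 bits) stands in for an expensive molecular property, the mutation rates and the exchange rule between populations are chosen for illustration, and the deep neural network, SELFIES, and STONED components named in the abstract are omitted entirely.

```python
import random
from typing import List, Tuple

Genome = List[int]


def fitness(genome: Genome) -> int:
    """Toy objective (OneMax): the number of 1 bits."""
    return sum(genome)


def mutate(genome: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover of two equally long genomes."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def step(population: List[Genome], rate: float, rng: random.Random,
         use_crossover: bool) -> List[Genome]:
    """One generation: keep the better half, refill with mutated children."""
    ranked = sorted(population, key=fitness, reverse=True)
    parents = ranked[: len(ranked) // 2]
    children: List[Genome] = []
    while len(parents) + len(children) < len(population):
        child = rng.choice(parents)
        if use_crossover:
            child = crossover(child, rng.choice(parents), rng)
        children.append(mutate(child, rate, rng))
    return parents + children


def run(n_bits: int = 32, pop_size: int = 20, generations: int = 40,
        seed: int = 0) -> Tuple[int, int]:
    """Return (best initial fitness, best final fitness)."""
    rng = random.Random(seed)
    explore = [[rng.randint(0, 1) for _ in range(n_bits)]
               for _ in range(pop_size)]
    exploit = [[rng.randint(0, 1) for _ in range(n_bits)]
               for _ in range(pop_size)]
    initial_best = max(fitness(g) for g in explore + exploit)
    for _ in range(generations):
        # Exploration: high mutation rate plus crossover (assumed settings).
        explore = step(explore, rate=0.10, rng=rng, use_crossover=True)
        # Exploitation: small local changes around good individuals.
        exploit = step(exploit, rate=0.02, rng=rng, use_crossover=False)
        # Assumed exchange rule: each population's best replaces the
        # other population's worst individual.
        best_explore = max(explore, key=fitness)
        best_exploit = max(exploit, key=fitness)
        explore[explore.index(min(explore, key=fitness))] = list(best_exploit)
        exploit[exploit.index(min(exploit, key=fitness))] = list(best_explore)
    final_best = max(fitness(g) for g in explore + exploit)
    return initial_best, final_best


if __name__ == "__main__":
    print(run())
```

Because each generation keeps its better half and the exchange copies the best individuals across, the best fitness never decreases in this sketch. In a realistic setting the call to `fitness` would be the costly step, which is why reducing the number of evaluations matters.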

Assigning Confidence to Molecular Property Prediction

Feb 23, 2021

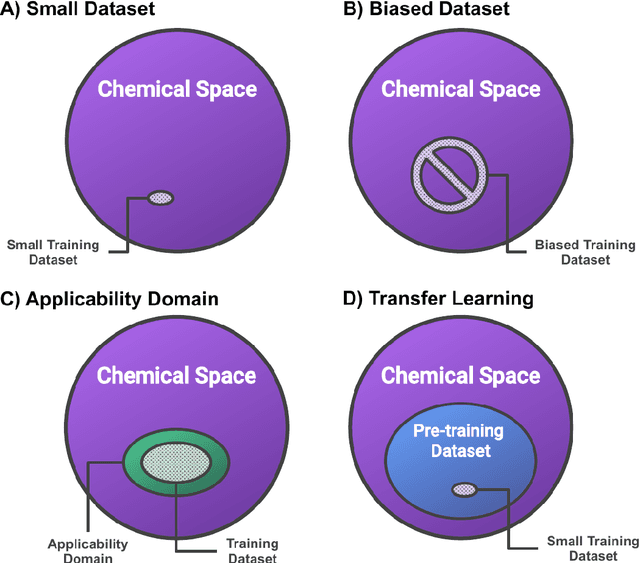

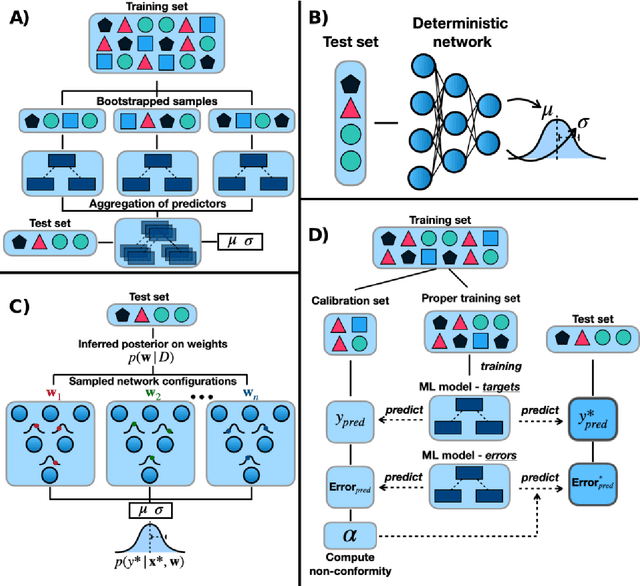

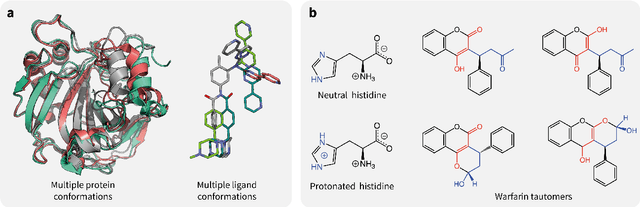

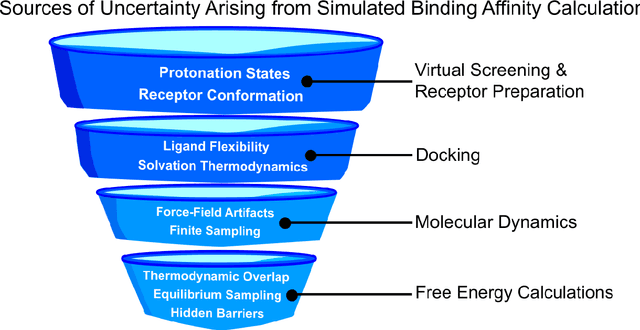

Abstract: Introduction: Computational modeling has rapidly advanced over the last decades, especially to predict molecular properties for chemistry, material science and drug design. Recently, machine learning techniques have emerged as a powerful and cost-effective strategy to learn from existing datasets and perform predictions on unseen molecules. Accordingly, the explosive rise of data-driven techniques raises an important question: What confidence can be assigned to molecular property predictions and what techniques can be used for that purpose? Areas covered: In this work, we discuss popular strategies for predicting molecular properties relevant to drug design, their corresponding uncertainty sources and methods to quantify uncertainty and confidence. First, our considerations for assessing confidence begin with dataset bias and size, data-driven property prediction and feature design. Next, we discuss property simulation via molecular docking, and free-energy simulations of binding affinity in detail. Lastly, we investigate how these uncertainties propagate to generative models, as they are usually coupled with property predictors. Expert opinion: Computational techniques are paramount to reduce the prohibitive cost and timing of brute-force experimentation when exploring the enormous chemical space. We believe that assessing uncertainty in property prediction models is essential whenever closed-loop drug design campaigns relying on high-throughput virtual screening are deployed. Accordingly, considering sources of uncertainty leads to better-informed experimental validations, more reliable predictions and to more realistic expectations of the entire workflow. Overall, this increases confidence in the predictions and designs and, ultimately, accelerates drug design.
