Rashidul Islam

Enhancing Distribution and Label Consistency for Graph Out-of-Distribution Generalization

Jan 07, 2025

Abstract: To deal with distribution shifts in graph data, various graph out-of-distribution (OOD) generalization techniques have been recently proposed. These methods often employ a two-step strategy that first creates augmented environments and subsequently identifies invariant subgraphs to improve generalizability. Nevertheless, this approach could be suboptimal from the perspective of consistency. First, the process of augmenting environments by altering the graphs while preserving labels may lead to graphs that are not realistic or meaningfully related to the original distribution, thus lacking distribution consistency. Second, the extracted subgraphs are obtained by directly modifying graphs, and may not necessarily maintain a consistent predictive relationship with their labels, thereby impacting label consistency. In response to these challenges, we introduce an innovative approach that aims to enhance these two types of consistency for graph OOD generalization. We propose a modifier to obtain both augmented and invariant graphs in a unified manner. With the augmented graphs, we enrich the training data without compromising the integrity of label-graph relationships. The label consistency enhancement in our framework further preserves the supervision information in the invariant graph. We conduct extensive experiments on real-world datasets to demonstrate the superiority of our framework over other state-of-the-art baselines.

Can One Embedding Fit All? A Multi-Interest Learning Paradigm Towards Improving User Interest Diversity Fairness

Feb 21, 2024

Abstract: Recommender systems (RSs) have gained widespread applications across various domains owing to their superior ability to capture users' interests. However, the complexity and nuanced nature of users' interests, which span a wide range of diversity, pose a significant challenge in delivering fair recommendations. In practice, user preferences vary significantly; some users show a clear preference toward certain item categories, while others have a broad interest in diverse ones. Even though it is expected that all users should receive high-quality recommendations, the effectiveness of RSs in catering to this disparate interest diversity remains under-explored. In this work, we investigate whether users with varied levels of interest diversity are treated fairly. Our empirical experiments reveal an inherent disparity: users with broader interests often receive lower-quality recommendations. To mitigate this, we propose a multi-interest framework that uses multiple (virtual) interest embeddings rather than single ones to represent users. Specifically, the framework consists of stacked multi-interest representation layers, which include an interest embedding generator that derives virtual interests from shared parameters, and a center embedding aggregator that facilitates multi-hop aggregation. Experiments demonstrate the effectiveness of the framework in achieving a better trade-off between fairness and utility across various datasets and backbones.

Fair Inference for Discrete Latent Variable Models

Sep 15, 2022

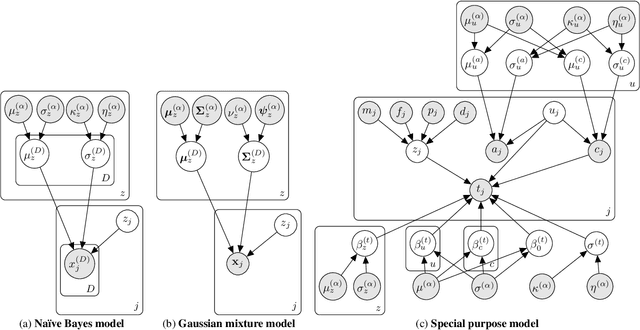

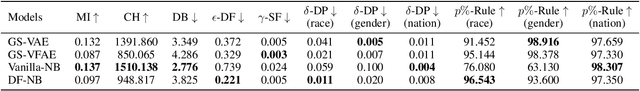

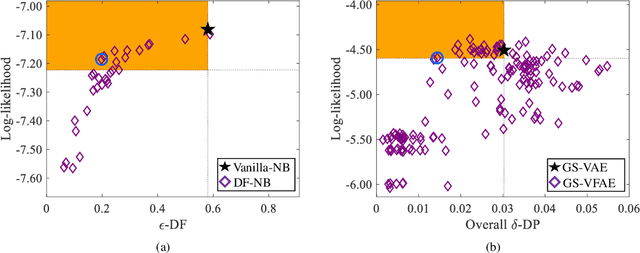

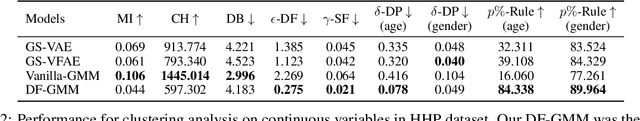

Abstract: It is now well understood that machine learning models, trained on data without due care, often exhibit unfair and discriminatory behavior against certain populations. Traditional algorithmic fairness research has mainly focused on supervised learning tasks, particularly classification. While fairness in unsupervised learning has received some attention, the literature has primarily addressed fair representation learning of continuous embeddings. In this paper, we conversely focus on unsupervised learning using probabilistic graphical models with discrete latent variables. We develop a fair stochastic variational inference technique for the discrete latent variables, which is accomplished by including a fairness penalty on the variational distribution that aims to respect the principles of intersectionality, a critical lens on fairness from the legal, social science, and humanities literature, and then optimizing the variational parameters under this penalty. We first show the utility of our method in improving equity and fairness for clustering using naïve Bayes and Gaussian mixture models on benchmark datasets. To demonstrate the generality of our approach and its potential for real-world impact, we then develop a special-purpose graphical model for criminal justice risk assessments, and use our fairness approach to prevent the inferences from encoding unfair societal biases.

Framework for Behavioral Disorder Detection Using Machine Learning and Application of Virtual Cognitive Behavioral Therapy in COVID-19 Pandemic

Apr 29, 2022

Abstract: In the modern world, people are becoming more self-centered and less social. At the same time, people are stressed and increasingly anxious during the COVID-19 pandemic, and exhibit symptoms of behavioral disorders. To assess these symptoms, psychiatrists usually rely on hours-long sessions and responses to specific questionnaires. This process is time-consuming and sometimes fails to detect the right behavioral disorder. Reserved people are also sometimes hesitant to go through it. We have created a digital framework that can detect behavioral disorders and prescribe virtual Cognitive Behavioral Therapy (vCBT) for recovery. Using this framework, people can enter the required data that are strongly associated with three behavioral disorders, namely depression, anxiety, and internet addiction. We apply machine learning techniques to detect the specific behavioral disorder from samples. The system guides the user with basic understanding and treatment through vCBT from anywhere at any time, which could be a stepping stone for the user to become aware of the condition and pursue the right treatment.

Bias: Friend or Foe? User Acceptance of Gender Stereotypes in Automated Career Recommendations

Jun 13, 2021

Abstract: Currently, there is a surge of interest in fair Artificial Intelligence (AI) and Machine Learning (ML) research which aims to mitigate discriminatory bias in AI algorithms, e.g. along lines of gender, age, and race. While most research in this domain focuses on developing fair AI algorithms, in this work, we show that a fair AI algorithm on its own may be insufficient to achieve its intended results in the real world. Using career recommendation as a case study, we build a fair AI career recommender by employing gender debiasing machine learning techniques. Our offline evaluation showed that the debiased recommender makes fairer career recommendations without sacrificing its accuracy. Nevertheless, an online user study of more than 200 college students revealed that participants on average prefer the original biased system over the debiased system. Specifically, we found that perceived gender disparity is a determining factor for the acceptance of a recommendation. In other words, our results demonstrate we cannot fully address the gender bias issue in AI recommendations without addressing the gender bias in humans.

Fair Representation Learning for Heterogeneous Information Networks

Apr 18, 2021

Abstract: Recently, much attention has been paid to the societal impact of AI, especially concerns regarding its fairness. A growing body of research has identified unfair AI systems and proposed methods to debias them, yet many challenges remain. Representation learning for Heterogeneous Information Networks (HINs), a fundamental building block used in complex network mining, has socially consequential applications such as automated career counseling, but there have been few attempts to ensure that it will not encode or amplify harmful biases, e.g. sexism in the job market. To address this gap, in this paper we propose a comprehensive set of de-biasing methods for fair HINs representation learning, including sampling-based, projection-based, and graph neural networks (GNNs)-based techniques. We systematically study the behavior of these algorithms, especially their capability in balancing the trade-off between fairness and prediction accuracy. We evaluate the performance of the proposed methods in an automated career counseling application where we mitigate gender bias in career recommendation. Based on the evaluation results on two datasets, we identify the most effective fair HINs representation learning techniques under different conditions.

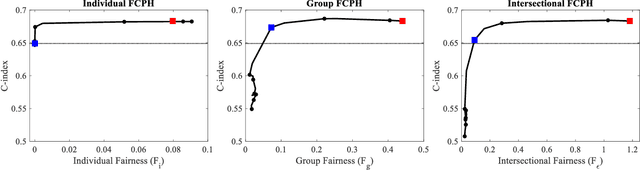

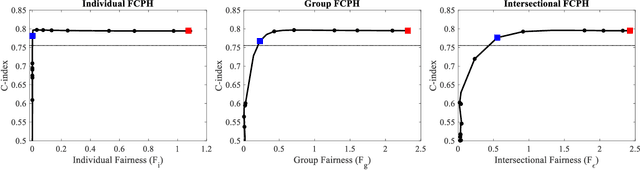

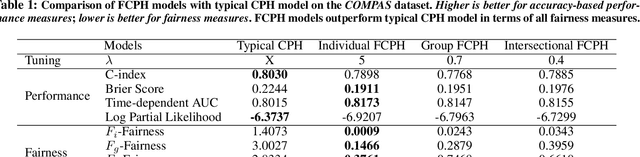

Equitable Allocation of Healthcare Resources with Fair Cox Models

Oct 14, 2020

Abstract: Healthcare programs such as Medicaid provide crucial services to vulnerable populations, but due to limited resources, many of the individuals who need these services the most languish on waiting lists. Survival models, e.g. the Cox proportional hazards model, can potentially improve this situation by predicting individuals' levels of need, which can then be used to prioritize the waiting lists. Providing care to those in need can prevent institutionalization for those individuals, which both improves quality of life and reduces overall costs. While the benefits of such an approach are clear, care must be taken to ensure that the prioritization process is fair or independent of demographic information-based harmful stereotypes. In this work, we develop multiple fairness definitions for survival models and corresponding fair Cox proportional hazards models to ensure equitable allocation of healthcare resources. We demonstrate the utility of our methods in terms of fairness and predictive accuracy on two publicly available survival datasets.
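
For orientation, the standard (unconstrained) Cox proportional hazards model named in this abstract can be written as below. This is the textbook form only; the symbols $h_0$, $\beta$, and $x$ are notation assumed here, and the paper's fairness definitions and fair variants are not reproduced.

$$
h(t \mid x) = h_0(t)\,\exp\!\left(\beta^{\top} x\right)
$$

Here $h_0(t)$ is the baseline hazard shared by all individuals, $x$ is an individual's covariate vector, and $\beta$ holds the learned coefficients. Under this form, ranking individuals by the linear score $\beta^{\top} x$ orders them by estimated hazard, which is one plausible way such a model could feed a waiting-list prioritization.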

Neural Fair Collaborative Filtering

Sep 02, 2020

Abstract: A growing proportion of human interactions are digitized on social media platforms and subjected to algorithmic decision-making, and it has become increasingly important to ensure fair treatment from these algorithms. In this work, we investigate gender bias in collaborative-filtering recommender systems trained on social media data. We develop neural fair collaborative filtering (NFCF), a practical framework for mitigating gender bias in recommending sensitive items (e.g. jobs, academic concentrations, or courses of study) using a pre-training and fine-tuning approach to neural collaborative filtering, augmented with bias correction techniques. We show the utility of our methods for gender de-biased career and college major recommendations on the MovieLens dataset and a Facebook dataset, respectively, and achieve better performance and fairer behavior than several state-of-the-art models.

Bayesian Modeling of Intersectional Fairness: The Variance of Bias

Nov 18, 2018

Abstract: Intersectionality is a framework that analyzes how interlocking systems of power and oppression affect individuals along overlapping dimensions including race, gender, sexual orientation, class, and disability. Intersectionality theory therefore implies it is important that fairness in artificial intelligence systems be protected with regard to multi-dimensional protected attributes. However, the measurement of fairness becomes statistically challenging in the multi-dimensional setting due to data sparsity, which increases rapidly in the number of dimensions, and in the values per dimension. We present a Bayesian probabilistic modeling approach for the reliable, data-efficient estimation of fairness with multi-dimensional protected attributes, which we apply to novel intersectional fairness metrics. Experimental results on census data and the COMPAS criminal justice recidivism dataset demonstrate the utility of our methodology, and show that Bayesian methods are valuable for the modeling and measurement of fairness in an intersectional context.
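
The sparsity problem described in this abstract can be illustrated with a small sketch. It is not the paper's model: it assumes a simple Beta-Binomial prior over each intersectional group's positive-outcome rate, and the function name `smoothed_positive_rates`, the prior parameters, and the toy records are all hypothetical.

```python
from collections import Counter


def smoothed_positive_rates(records, alpha=1.0, beta=1.0):
    """Posterior-mean positive rate per group under a Beta(alpha, beta) prior.

    records: iterable of (group, outcome) pairs, where group is a tuple of
    protected-attribute values and outcome is 0 or 1.
    NOTE: an illustrative assumption, not the method from the paper.
    """
    totals = Counter()
    positives = Counter()
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    # Beta-Binomial posterior mean: (k + alpha) / (n + alpha + beta)
    return {
        group: (positives[group] + alpha) / (totals[group] + alpha + beta)
        for group in totals
    }


# Toy data: one intersection has four observations, the other only one.
records = [
    (("g1", "r1"), 1), (("g1", "r1"), 0), (("g1", "r1"), 1), (("g1", "r1"), 0),
    (("g2", "r2"), 1),
]
rates = smoothed_positive_rates(records)
```

With a single observation, the raw rate for the second group would be 1.0; the prior pulls that estimate toward 0.5, which is the kind of stabilization a Bayesian treatment offers when intersectional groups are small.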
