Pranav Poudel

CAR-MFL: Cross-Modal Augmentation by Retrieval for Multimodal Federated Learning with Missing Modalities

Jul 11, 2024

Abstract:Multimodal AI has demonstrated superior performance over unimodal approaches by leveraging diverse data sources for more comprehensive analysis. However, realizing this advantage in healthcare is challenging due to the limited availability of public datasets. Federated learning presents an exciting solution, allowing the use of extensive databases from hospitals and health centers without centralizing sensitive data, thus maintaining privacy and security. Yet research in multimodal federated learning, particularly in scenarios with missing modalities, a common issue in healthcare datasets, remains scarce, highlighting a critical area for future exploration. Toward this, we propose a novel method for multimodal federated learning with missing modalities. Our contribution lies in a novel cross-modal data augmentation by retrieval, leveraging a small publicly available dataset to fill in the missing modalities at the clients. Our method learns the parameters in a federated manner, ensuring privacy protection and improving performance on multiple challenging multimodal benchmarks in the medical domain, surpassing several competitive baselines. Code available: https://github.com/bhattarailab/CAR-MFL
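As a rough illustration of the retrieval idea in this abstract, the sketch below fills a client's missing text modality by looking up the nearest image in a small public paired set and borrowing its text. It is not the authors' implementation: the feature vectors, the cosine similarity measure, and the names `fill_missing_text`, `public_pairs`, and `client_samples` are all assumptions made for the example.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def fill_missing_text(image_feat, public_pairs):
    """Borrow the text of the public pair whose image feature is most similar.

    Hypothetical helper: assumes retrieval is done in the feature space of
    the modality the client does have (here, the image).
    """
    best = max(public_pairs, key=lambda p: cosine(image_feat, p["image_feat"]))
    return best["text"]

# Toy public dataset with both modalities present (made-up values).
public_pairs = [
    {"image_feat": [1.0, 0.0], "text": "report A"},
    {"image_feat": [0.0, 1.0], "text": "report B"},
]

# Toy client data: the first sample is missing its text modality.
client_samples = [
    {"image_feat": [0.9, 0.1], "text": None},
    {"image_feat": [0.2, 0.8], "text": "own report"},
]

# Keep existing text; retrieve a substitute only where it is missing.
augmented = [
    {**s, "text": s["text"] if s["text"] is not None
     else fill_missing_text(s["image_feat"], public_pairs)}
    for s in client_samples
]
```

In this sketch only the public data is searched, so no client sample leaves its site; how the actual method computes features and performs retrieval is described in the paper and the linked repository.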

Multimodal Federated Learning in Healthcare: a review

Oct 14, 2023

Abstract:Recent advancements in multimodal machine learning have empowered the development of accurate and robust AI systems in the medical domain, especially within centralized database systems. Simultaneously, Federated Learning (FL) has progressed, providing a decentralized mechanism where data need not be consolidated, thereby enhancing the privacy and security of sensitive healthcare data. The integration of these two concepts supports the ongoing progress of multimodal learning in healthcare while ensuring the security and privacy of patient records within local data-holding agencies. This paper offers a concise overview of the significance of FL in healthcare and outlines the current state-of-the-art approaches to Multimodal Federated Learning (MMFL) within the healthcare domain. It comprehensively examines the existing challenges in the field, shedding light on the limitations of present models. Finally, the paper outlines potential directions for future advancements in the field, aiming to bridge the gap between cutting-edge AI technology and the imperative need for patient data privacy in healthcare applications.

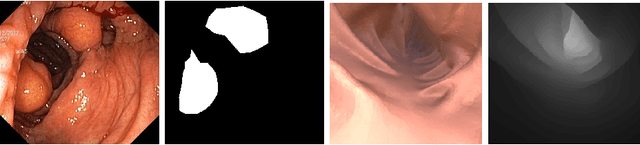

Neural Network Pruning for Real-time Polyp Segmentation

Jun 22, 2023

Abstract:Computer-assisted treatment has emerged as a viable application of medical imaging, owing to the efficacy of deep learning models. Real-time inference speed remains a key requirement for such applications to help medical personnel. Even though there is generally a trade-off between performance and model size, impressive efforts have been made to retain near-original performance while reducing model size. Neural network pruning has emerged as an exciting area that aims to eliminate redundant parameters to make inference faster. In this study, we show an application of neural network pruning to polyp segmentation. We compute an importance score for each convolutional filter and remove the filters with the lowest scores, which, up to a certain pruning ratio, does not degrade performance. To compute the importance score, we use the Taylor First Order (TaylorFO) approximation of the change in network output caused by removing a given filter. Specifically, we employ gradient-normalized backpropagation to compute the importance score. Through experiments on polyp datasets, we validate that our approach can significantly reduce the parameter count and FLOPs while retaining similar performance.
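The filter-scoring step can be sketched as follows, under the assumption that a first-order Taylor importance score is taken as the absolute mean of activation times gradient over a filter's feature map (one common formulation). The gradient normalization mentioned in the abstract is not reproduced, and the function names and toy numbers are hypothetical.

```python
def taylor_fo_scores(activations, gradients):
    """First-order Taylor importance per filter.

    activations[k] and gradients[k] are the flattened feature map of
    filter k and the gradient of the output with respect to it.
    Score = |mean(activation * gradient)|, an assumed formulation.
    """
    scores = []
    for act, grad in zip(activations, gradients):
        total = sum(a * g for a, g in zip(act, grad))
        scores.append(abs(total) / len(act))
    return scores

def filters_to_prune(scores, n_prune):
    """Indices of the n_prune filters with the lowest importance."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(order[:n_prune])

# Toy values for three filters with two activations each (made up).
activations = [[1.0, 2.0], [0.5, 0.5], [3.0, 1.0]]
gradients = [[0.1, -0.1], [0.0, 0.02], [0.5, 0.5]]

scores = taylor_fo_scores(activations, gradients)
pruned = filters_to_prune(scores, 1)  # the second filter scores lowest
```

In practice the activations and gradients would come from a forward and backward pass over training batches, and pruning would be repeated with fine-tuning in between; those steps are outside this sketch.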

CholecTriplet2022: Show me a tool and tell me the triplet -- an endoscopic vision challenge for surgical action triplet detection

Feb 13, 2023

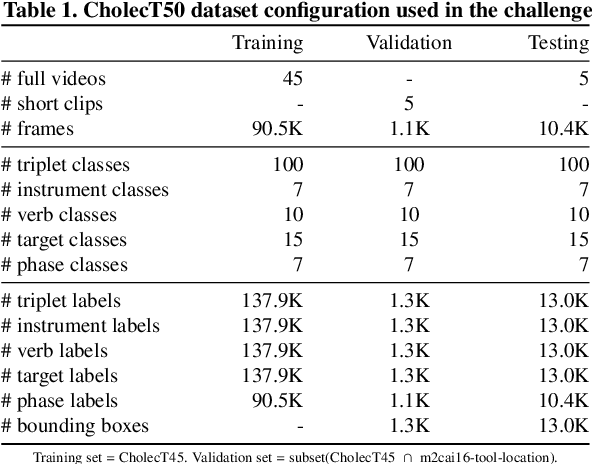

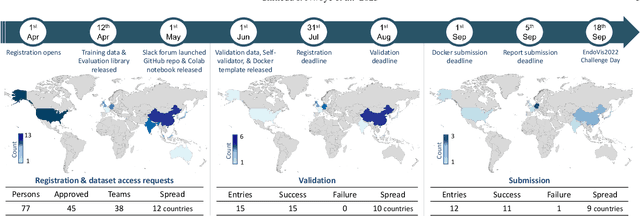

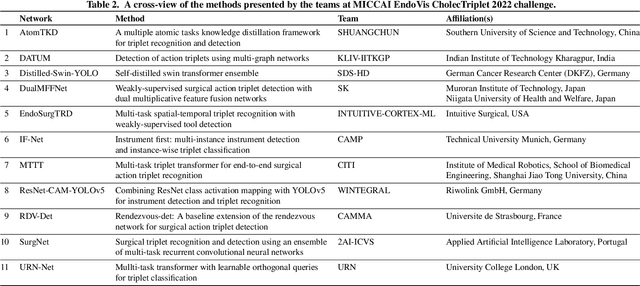

Abstract:Formalizing surgical activities as triplets of the instruments used, actions performed, and target anatomies is becoming a gold standard approach for surgical activity modeling. The benefit is that this formalization helps to obtain a more detailed understanding of tool-tissue interaction, which can be used to develop better Artificial Intelligence assistance for image-guided surgery. Earlier efforts and the CholecTriplet challenge introduced in 2021 have put together techniques aimed at recognizing these triplets from surgical footage. Also estimating the spatial locations of the triplets would offer more precise intraoperative context-aware decision support for computer-assisted intervention. This paper presents the CholecTriplet2022 challenge, which extends surgical action triplet modeling from recognition to detection. It includes weakly-supervised bounding box localization of every visible surgical instrument (or tool), as the key actors, and the modeling of each tool-activity in the form of an <instrument, verb, target> triplet. The paper describes a baseline method and 10 new deep learning algorithms presented at the challenge to solve the task. It also provides thorough methodological comparisons of the methods, an in-depth analysis of the obtained results, their significance, and useful insights for future research directions and applications in surgery.

Task-Aware Active Learning for Endoscopic Image Analysis

Apr 07, 2022

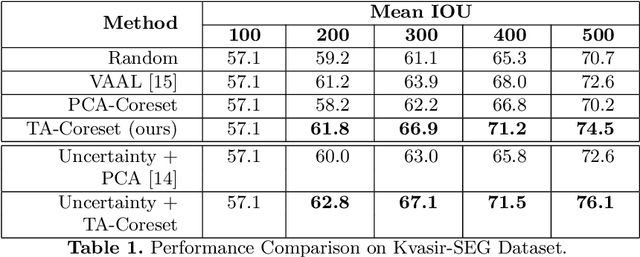

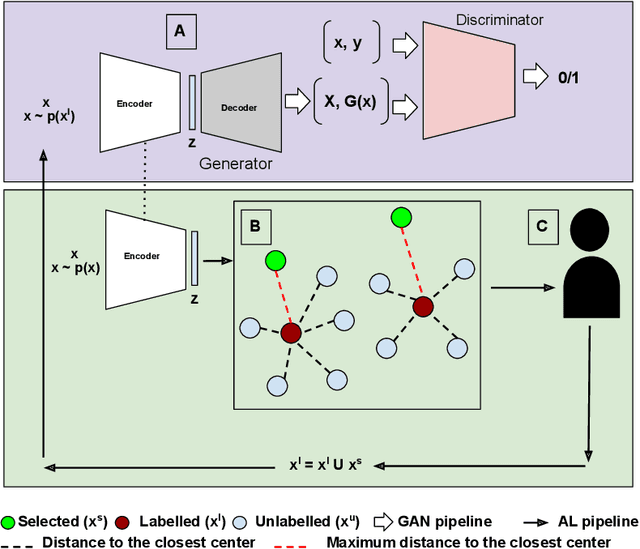

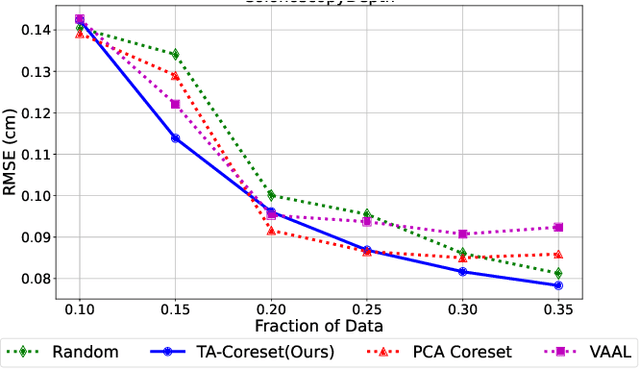

Abstract:Semantic segmentation of polyps and depth estimation are two important research problems in endoscopic image analysis. One of the main obstacles to research on these problems is the lack of annotated data. Endoscopic annotation requires the specialist knowledge of expert endoscopists, and as a result it can be difficult to organise, expensive, and time-consuming. To address this problem, we investigate an active learning paradigm to reduce the number of training examples by selecting the most discriminative and diverse unlabelled examples for the task under consideration. Most existing active learning pipelines are task-agnostic in nature and are often sub-optimal for the end task. In this paper, we propose a novel task-aware active learning pipeline and apply it to two important tasks in endoscopic image analysis: semantic segmentation and depth estimation. We compare our method with competitive baselines. From the experimental results, we observe a substantial improvement over the compared baselines. Code is available at https://github.com/thetna/endo-active-learn.
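To make "discriminative and diverse" selection concrete, here is a generic greedy sketch that scores each unlabelled example by its uncertainty plus its distance to the examples already picked. This is a textbook-style heuristic, not the task-aware pipeline proposed in the paper; `select_batch`, `trade_off`, and the toy data are assumptions.

```python
import math

def select_batch(features, uncertainty, budget, trade_off=1.0):
    """Greedily pick `budget` examples that are uncertain and far apart.

    features[i] is an embedding of example i; uncertainty[i] is any
    per-example informativeness score (higher means more informative).
    """
    selected = []
    candidates = set(range(len(features)))
    while candidates and len(selected) < budget:
        def score(i):
            if not selected:
                return uncertainty[i]
            # Diversity term: distance to the closest already-selected example.
            nearest = min(math.dist(features[i], features[j]) for j in selected)
            return uncertainty[i] + trade_off * nearest
        best = max(sorted(candidates), key=score)
        selected.append(best)
        candidates.remove(best)
    return selected

# Toy pool: examples 0 and 1 are near-duplicates, example 2 is far away.
features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0]]
uncertainty = [0.9, 0.8, 0.3]

picked = select_batch(features, uncertainty, budget=2)
```

Here the second pick skips the near-duplicate in favour of the distant example, which is the behaviour the diversity term is meant to encourage.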
