Paolo Paoletti

Supervised Visual Docking Network for Unmanned Surface Vehicles Using Auto-labeling in Real-world Water Environments

Mar 05, 2025

Abstract:Unmanned Surface Vehicles (USVs) are increasingly applied to water operations such as environmental monitoring and river-map modeling. They face a significant challenge in achieving precise autonomous docking at ports or stations, still relying on remote human control or external positioning systems for accuracy and safety, which limits the full potential of human-out-of-loop deployment for USVs. This paper introduces a novel supervised learning pipeline with an auto-labeling technique for autonomous visual docking of USVs. First, we design an auto-labeling data collection pipeline that appends relative pose and image pairs to the dataset. This step does not require the manual labeling conventionally needed for supervised learning. Second, the Neural Dock Pose Estimator (NDPE) is proposed to achieve relative dock pose prediction without the need for hand-crafted feature engineering, camera calibration, or peripheral markers. Moreover, the NDPE can accurately predict the relative dock pose in real-world water environments, facilitating the implementation of Position-Based Visual Servo (PBVS) and low-level motion controllers for efficient and autonomous docking. Experiments show that the NDPE is robust to disturbances in distance and USV velocity. The effectiveness of our proposed solution is tested and validated in real-world water environments, reflecting its capability to handle real-world autonomous docking tasks.

Multi-class Road Defect Detection and Segmentation using Spatial and Channel-wise Attention for Autonomous Road Repairing

Feb 06, 2024

Abstract:Road pavement detection and segmentation are critical for developing autonomous road repair systems. However, developing an instance segmentation method that simultaneously performs multi-class defect detection and segmentation is challenging due to the textural simplicity of road pavement images, the diversity of defect geometries, and the morphological ambiguity between classes. We propose a novel end-to-end method for multi-class road defect detection and segmentation. The proposed method comprises multiple spatial and channel-wise attention blocks that learn global representations across spatial and channel-wise dimensions. Through these attention blocks, more globally generalised representations of morphological information (spatial characteristics) of road defects and colour and depth information of images can be learned. To demonstrate the effectiveness of our framework, we conducted various ablation studies and comparisons with prior methods on a newly collected dataset annotated with nine road defect classes. The experiments show that our proposed method outperforms existing state-of-the-art methods for multi-class road defect detection and segmentation.

Road Surface Defect Detection -- From Image-based to Non-image-based: A Survey

Feb 06, 2024

Abstract:Ensuring traffic safety is crucial, which necessitates the detection and prevention of road surface defects. As a result, there has been a growing interest in the literature on the subject, leading to the development of various road surface defect detection methods. The methods for detecting road defects can be categorised in various ways depending on the input data types or training methodologies. The predominant approach involves image-based methods, which analyse pixel intensities and surface textures to identify defects. Despite their popularity, image-based methods share the distinct limitation of vulnerability to weather and lighting changes. To address this issue, researchers have explored the use of additional sensors, such as laser scanners or LiDARs, providing explicit depth information to enable the detection of defects in terms of scale and volume. However, data beyond images has not been sufficiently explored. In this survey paper, we provide a comprehensive review of road surface defect detection studies, categorising them based on input data types and methodologies used. Additionally, we review recently proposed non-image-based methods and discuss several challenges and open problems associated with these techniques.

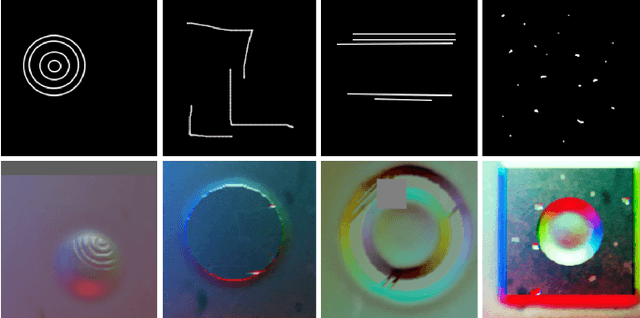

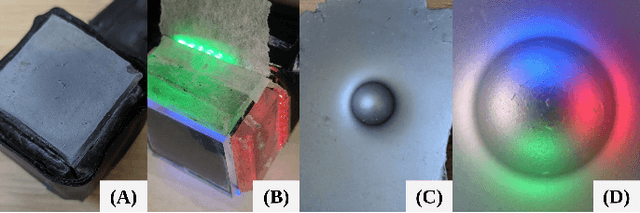

Beyond Flat GelSight Sensors: Simulation of Optical Tactile Sensors of Complex Morphologies for Sim2Real Learning

May 21, 2023

Abstract:Recently, several morphologies, each with its advantages, have been proposed for the GelSight high-resolution tactile sensors. However, existing simulation methods are limited to flat-surface sensors, which prevents their usage with the newer sensors of non-flat morphologies in Sim2Real experiments. In this paper, we extend a previously proposed GelSight simulation method developed for flat-surface sensors and propose a novel method for curved sensors. In particular, we address the simulation of light rays travelling through a curved tactile membrane in the form of geodesic paths. The method is validated by simulating the finger-shaped GelTip sensor and comparing the generated synthetic tactile images against the corresponding real images. Our extensive experiments show that combining the illumination generated from the geodesic paths with a background image from the real sensor produces the best results when compared to the lighting generated by direct linear paths in the same conditions. As the method is parameterised by the sensor mesh, it can be applied in principle to simulate a tactile sensor of any morphology. The proposed method not only unlocks simulating existing optical tactile sensors of complex morphologies but also enables experimenting with sensors of novel morphologies, before the fabrication of the real sensor. Project website: https://danfergo.github.io/geltip-sim

KIDS: kinematics-based (in)activity detection and segmentation in a sleep case study

Jan 04, 2023

Abstract:Sleep behaviour and in-bed movements contain rich information on the neurophysiological health of people, and have a direct link to the general well-being and quality of life. Standard clinical practices rely on polysomnography for sleep assessment; however, it is intrusive, performed in unfamiliar environments and requires trained personnel. Progress has been made on less invasive sensor technologies, such as actigraphy, but clinical validation raises concerns over their reliability and precision. Additionally, the field lacks a widely acceptable algorithm, with proposed approaches ranging from raw signal or feature thresholding to data-hungry classification models, many of which are unfamiliar to medical staff. This paper proposes an online Bayesian probabilistic framework for objective (in)activity detection and segmentation based on clinically meaningful joint kinematics, measured by a custom-made wearable sensor. Intuitive three-dimensional visualisations of kinematic timeseries were accomplished through dimension reduction based preprocessing, offering out-of-the-box framework explainability potentially useful for clinical monitoring and diagnosis. The proposed framework attained up to 99.2% F1-score and 0.96 Pearson's correlation coefficient in, respectively, the posture change detection and inactivity segmentation tasks. The work paves the way for a reliable home-based analysis of movements during sleep which would serve patient-centred longitudinal care plans.

Sleep Posture One-Shot Learning Framework Using Kinematic Data Augmentation: In-Silico and In-Vivo Case Studies

May 22, 2022

Abstract:Sleep posture is linked to several health conditions such as nocturnal cramps and more serious musculoskeletal issues. However, in-clinic sleep assessments are often limited to vital signs (e.g. brain waves). Wearable sensors with embedded inertial measurement units have been used for sleep posture classification; nonetheless, previous works consider only a few (commonly four) postures, which are inadequate for advanced clinical assessments. Moreover, posture learning algorithms typically require longitudinal data collection to function reliably, and often operate on raw inertial sensor readings unfamiliar to clinicians. This paper proposes a new framework for sleep posture classification based on a minimal set of joint angle measurements. The proposed framework is validated on a rich set of twelve postures in two experimental pipelines: computer animation to obtain synthetic postural data, and a human participant pilot study using custom-made miniature wearable sensors. Through fusing raw geo-inertial sensor measurements to compute a filtered estimate of relative segment orientations across the wrist and ankle joints, the body posture can be characterised in a way comprehensible to medical experts. The proposed sleep posture learning framework offers plug-and-play posture classification by capitalising on a novel kinematic data augmentation method that requires only one training example per posture. Additionally, a new metric together with data visualisations is employed to extract meaningful insights from the postures dataset, demonstrate the added value of the data augmentation method, and explain the classification performance. The proposed framework attained promising overall accuracy as high as 100% on synthetic data and 92.7% on real data, on par with state-of-the-art data-hungry algorithms available in the literature.

Generation of GelSight Tactile Images for Sim2Real Learning

Jan 18, 2021

Abstract:Most current works in Sim2Real learning for robotic manipulation tasks leverage camera vision that may be significantly occluded by robot hands during the manipulation. Tactile sensing offers complementary information to vision and can compensate for the information loss caused by the occlusion. However, the use of tactile sensing is restricted in Sim2Real research because no simulated tactile sensors are available. To mitigate the gap, we introduce a novel approach for simulating a GelSight tactile sensor in the commonly used Gazebo simulator. Similar to the real GelSight sensor, the simulated sensor can produce high-resolution images by an optical sensor from the interaction between the touched object and an opaque soft membrane. It can indirectly sense forces, geometry, texture and other properties of the object and enables Sim2Real learning with tactile sensing. Preliminary experimental results have shown that the simulated sensor could generate realistic outputs similar to the ones captured by a real GelSight sensor. All the materials used in this paper are available at https://danfergo.github.io/gelsight-simulation.

Agricultural Robotics: The Future of Robotic Agriculture

Aug 02, 2018

Abstract:Agri-Food is the largest manufacturing sector in the UK. It supports a food chain that generates over £108bn p.a., with 3.9m employees in a truly international industry and exports £20bn of UK manufactured goods. However, the global food chain is under pressure from population growth, climate change, political pressures affecting migration, population drift from rural to urban regions and the demographics of an aging global population. These challenges are recognised in the UK Industrial Strategy white paper and backed by significant investment via a Wave 2 Industrial Challenge Fund Investment ("Transforming Food Production: from Farm to Fork"). Robotics and Autonomous Systems (RAS) and associated digital technologies are now seen as enablers of this critical food chain transformation. To meet these challenges, this white paper reviews the state of the art in the application of RAS in Agri-Food production and explores research and innovation needs to ensure these technologies reach their full potential and deliver the necessary impacts in the Agri-Food sector.
