Nobutaka Ono

Incremental Averaging Method to Improve Graph-Based Time-Difference-of-Arrival Estimation

Jul 09, 2025

Abstract:Estimating the position of a speech source based on time-differences-of-arrival (TDOAs) is often adversely affected by background noise and reverberation. A popular method to estimate the TDOA between a microphone pair involves maximizing a generalized cross-correlation with phase transform (GCC-PHAT) function. Since the TDOAs across different microphone pairs satisfy consistency relations, generally only a small subset of microphone pairs are used for source position estimation. Although the set of microphone pairs is often determined based on a reference microphone, recently a more robust method has been proposed to determine the set of microphone pairs by computing the minimum spanning tree (MST) of a signal graph of GCC-PHAT function reliabilities. To reduce the influence of noise and reverberation on the TDOA estimation accuracy, in this paper we propose to compute the GCC-PHAT functions of the MST based on an average of multiple cross-power spectral densities (CPSDs) using an incremental method. In each step of the method, we increase the number of CPSDs over which we average by considering CPSDs computed indirectly via other microphones from previous steps. Using signals recorded in a noisy and reverberant laboratory with an array of spatially distributed microphones, the performance of the proposed method is evaluated in terms of TDOA estimation error and 2D source position estimation error. Experimental results for different source and microphone configurations and three reverberation conditions show that the proposed method considering multiple CPSDs improves the TDOA estimation and source position estimation accuracy compared to the reference microphone- and MST-based methods that rely on a single CPSD as well as steered-response power-based source position estimation.
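For readers unfamiliar with GCC-PHAT, the following is a minimal sketch of the standard single-pair estimator the abstract refers to. It is not the paper's incremental averaging method; the function name `gcc_phat_tdoa`, its parameters, and the sign convention (positive result means `x1` lags `x2`) are assumptions made for illustration, and NumPy is assumed to be available.

```python
import numpy as np

def gcc_phat_tdoa(x1, x2, fs, max_tau=None):
    """Estimate the TDOA (seconds) between two signals with GCC-PHAT.

    Hypothetical helper for illustration. A positive value means x1 is a
    delayed copy of x2.
    """
    # Zero-pad so that the circular correlation equals the linear one.
    n = len(x1) + len(x2)
    X1 = np.fft.rfft(x1, n=n)
    X2 = np.fft.rfft(x2, n=n)

    # Cross-power spectral density (CPSD) of the pair.
    cpsd = X1 * np.conj(X2)
    # Phase transform: keep only the phase, discard the magnitude.
    cpsd = cpsd / (np.abs(cpsd) + 1e-12)

    # Back to the lag domain: this is the GCC-PHAT function.
    cc = np.fft.irfft(cpsd, n=n)

    # Restrict the search to physically plausible lags if requested.
    max_shift = n // 2
    if max_tau is not None:
        max_shift = min(int(fs * max_tau), max_shift)

    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    lag = int(np.argmax(cc)) - max_shift
    return lag / fs

# Usage: white noise delayed by 5 samples at a 16 kHz sampling rate.
rng = np.random.default_rng(0)
s = rng.standard_normal(1024)
x2 = s
x1 = np.concatenate((np.zeros(5), s[:-5]))
tau = gcc_phat_tdoa(x1, x2, fs=16000)
```

In this sketch the CPSD comes from a single signal pair; the abstract's proposal is to average several CPSDs for the same pair before the phase transform, which would replace the single `cpsd` term above.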

Description and Discussion on DCASE 2025 Challenge Task 4: Spatial Semantic Segmentation of Sound Scenes

Jun 12, 2025

Abstract:Spatial Semantic Segmentation of Sound Scenes (S5) aims to enhance technologies for sound event detection and separation from multi-channel input signals that mix multiple sound events with spatial information. This is a fundamental basis of immersive communication. The ultimate goal is to separate sound event signals with 6 Degrees of Freedom (6DoF) information into dry sound object signals and metadata about the object type (sound event class) and representing spatial information, including direction. However, because several existing challenge tasks already provide some of the subset functions, this task for this year focuses on detecting and separating sound events from multi-channel spatial input signals. This paper outlines the S5 task setting of the Detection and Classification of Acoustic Scenes and Events (DCASE) 2025 Challenge Task 4 and the DCASE2025 Task 4 Dataset, newly recorded and curated for this task. We also report experimental results for an S5 system trained and evaluated on this dataset. The full version of this paper will be published after the challenge results are made public.

Mel-Spectrogram Inversion via Alternating Direction Method of Multipliers

Jan 09, 2025

Abstract:Signal reconstruction from its mel-spectrogram is known as mel-spectrogram inversion and has many applications, including speech and foley sound synthesis. In this paper, we propose a mel-spectrogram inversion method based on a rigorous optimization algorithm. To reconstruct a time-domain signal with inverse short-time Fourier transform (STFT), both full-band STFT magnitude and phase should be predicted from a given mel-spectrogram. Their joint estimation has outperformed the cascaded full-band magnitude prediction and phase reconstruction by preventing error accumulation. However, the existing joint estimation method requires many iterations, and there remains room for performance improvement. We present an alternating direction method of multipliers (ADMM)-based joint estimation method motivated by its success in various nonconvex optimization problems including phase reconstruction. An efficient update of each variable is derived by exploiting the conditional independence among the variables. Our experiments demonstrate the effectiveness of the proposed method on speech and foley sounds.

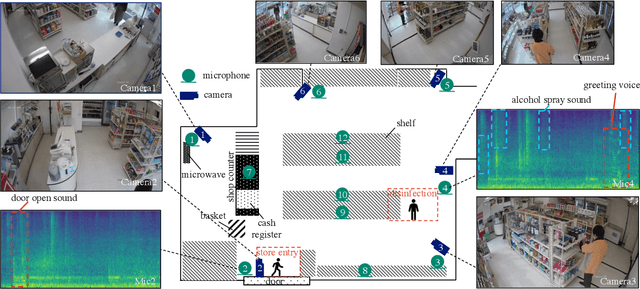

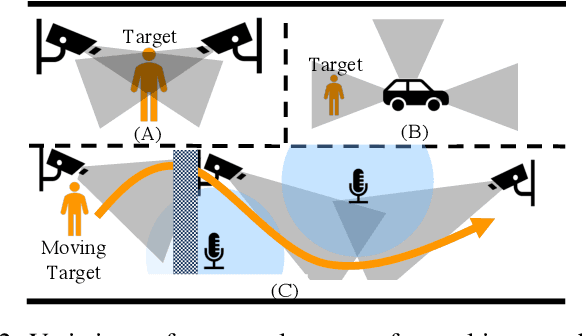

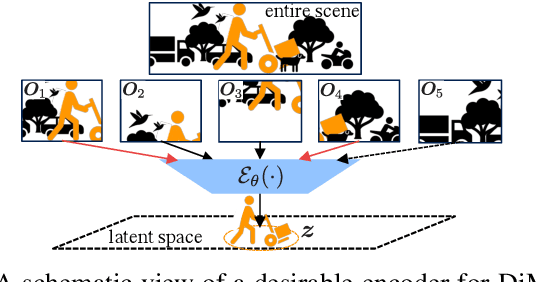

Guided Masked Self-Distillation Modeling for Distributed Multimedia Sensor Event Analysis

Apr 12, 2024

Abstract:Observations with distributed sensors are essential in analyzing a series of human and machine activities (referred to as 'events' in this paper) in complex and extensive real-world environments. This is because the information obtained from a single sensor is often missing or fragmented in such an environment; observations from multiple locations and modalities should be integrated to analyze events comprehensively. However, a learning method has yet to be established to extract joint representations that effectively combine such distributed observations. Therefore, we propose Guided Masked sELf-Distillation modeling (Guided-MELD) for inter-sensor relationship modeling. The basic idea of Guided-MELD is to learn to supplement the information from the masked sensor with information from other sensors needed to detect the event. Guided-MELD is expected to enable the system to effectively distill the fragmented or redundant target event information obtained by the sensors without being overly dependent on any specific sensors. To validate the effectiveness of the proposed method in novel tasks of distributed multimedia sensor event analysis, we recorded two new datasets that fit the problem setting: MM-Store and MM-Office. These datasets consist of human activities in a convenience store and an office, recorded using distributed cameras and microphones. Experimental results on these datasets show that the proposed Guided-MELD improves event tagging and detection performance and outperforms conventional inter-sensor relationship modeling methods. Furthermore, the proposed method performed robustly even when sensors were reduced.

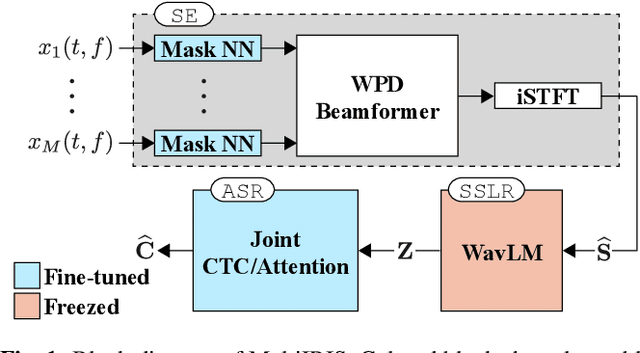

Exploring the Integration of Speech Separation and Recognition with Self-Supervised Learning Representation

Jul 23, 2023

Abstract:Neural speech separation has made remarkable progress and its integration with automatic speech recognition (ASR) is an important direction towards realizing multi-speaker ASR. This work provides an insightful investigation of speech separation in reverberant and noisy-reverberant scenarios as an ASR front-end. In detail, we explore multi-channel separation methods, mask-based beamforming and complex spectral mapping, as well as the best features to use in the ASR back-end model. We employ the recent self-supervised learning representation (SSLR) as a feature and improve the recognition performance over the case with filterbank features. To further improve multi-speaker recognition performance, we present a carefully designed training strategy for integrating speech separation and recognition with SSLR. The proposed integration using TF-GridNet-based complex spectral mapping and WavLM-based SSLR achieves a 2.5% word error rate on the reverberant WHAMR! test set, significantly outperforming an existing mask-based MVDR beamforming and filterbank integration (28.9%).

Signal Reconstruction from Mel-spectrogram Based on Bi-level Consistency of Full-band Magnitude and Phase

Jul 23, 2023

Abstract:We propose an optimization-based method for reconstructing a time-domain signal from a low-dimensional spectral representation such as a mel-spectrogram. Phase reconstruction has been studied to reconstruct a time-domain signal from the full-band short-time Fourier transform (STFT) magnitude. The Griffin-Lim algorithm (GLA) has been widely used because it relies only on the redundancy of STFT and is applicable to various audio signals. In this paper, we jointly reconstruct the full-band magnitude and phase by considering the bi-level relationships among the time-domain signal, its STFT coefficients, and its mel-spectrogram. The proposed method is formulated as a rigorous optimization problem and estimates the full-band magnitude based on the criterion used in GLA. Our experiments demonstrate the effectiveness of the proposed method on speech, music, and environmental signals.

Multi-Channel Target Speaker Extraction with Refinement: The WavLab Submission to the Second Clarity Enhancement Challenge

Feb 15, 2023

Abstract:This paper describes our submission to the Second Clarity Enhancement Challenge (CEC2), which consists of target speech enhancement for hearing-aid (HA) devices in noisy-reverberant environments with multiple interferers such as music and competing speakers. Our approach builds upon the powerful iterative neural/beamforming enhancement (iNeuBe) framework introduced in our recent work, and this paper extends it for target speaker extraction. We therefore name the proposed approach iNeuBe-X, where the X stands for extraction. To address the challenges encountered in the CEC2 setting, we introduce four major novelties: (1) we extend the state-of-the-art TF-GridNet model, originally designed for monaural speaker separation, for multi-channel, causal speech enhancement, and large improvements are observed by replacing the TCNDenseNet used in iNeuBe with this new architecture; (2) we leverage a recent dual window size approach with future-frame prediction to ensure that iNeuBe-X satisfies the 5 ms constraint on algorithmic latency required by CEC2; (3) we introduce a novel speaker-conditioning branch for TF-GridNet to achieve target speaker extraction; (4) we propose a fine-tuning step, where we compute an additional loss with respect to the target speaker signal compensated with the listener audiogram. Without using external data, on the official development set our best model reaches a hearing-aid speech perception index (HASPI) score of 0.942 and a scale-invariant signal-to-distortion ratio improvement (SI-SDRi) of 18.8 dB. These results are promising given the fact that the CEC2 data is extremely challenging (e.g., on the development set the mixture SI-SDR is -12.3 dB). A demo of our submitted system is available at WAVLab CEC2 demo.

End-to-End Integration of Speech Recognition, Dereverberation, Beamforming, and Self-Supervised Learning Representation

Oct 19, 2022

Abstract:Self-supervised learning representation (SSLR) has demonstrated its significant effectiveness in automatic speech recognition (ASR), mainly with clean speech. Recent work pointed out the strength of integrating SSLR with single-channel speech enhancement for ASR in noisy environments. This paper further advances this integration by dealing with multi-channel input. We propose a novel end-to-end architecture by integrating dereverberation, beamforming, SSLR, and ASR within a single neural network. Our system achieves the best performance reported in the literature on the CHiME-4 6-channel track with a word error rate (WER) of 1.77%. While the WavLM-based strong SSLR demonstrates promising results by itself, the end-to-end integration with the weighted power minimization distortionless response beamformer, which simultaneously performs dereverberation and denoising, improves WER significantly. Its effectiveness is also validated on the REVERB dataset.

Inverse-free Online Independent Vector Analysis with Flexible Iterative Source Steering

Sep 02, 2022

Abstract:In this paper, we propose a new online independent vector analysis (IVA) algorithm for real-time blind source separation (BSS). In many BSS algorithms, the iterative projection (IP) has been used for updating the demixing matrix, a parameter to be estimated in BSS. However, it requires matrix inversion, which can be costly, particularly in online processing. To improve this situation, we introduce iterative source steering (ISS) to online IVA. ISS does not require any matrix inversions, and thus its computational complexity is less than that of IP. Furthermore, when only part of the sources are moving, ISS enables us to update the demixing matrix flexibly and effectively so that the steering vectors of only the moving sources are updated. Numerical experiments under a dynamic condition confirm the efficacy of the proposed method.

Joint Analysis of Acoustic Scenes and Sound Events with Weakly labeled Data

Jul 10, 2022

Abstract:Considering that acoustic scenes and sound events are closely related to each other, in some previous papers, a joint analysis of acoustic scenes and sound events utilizing multitask learning (MTL)-based neural networks was proposed. In conventional methods, a strongly supervised scheme is applied to sound event detection in MTL models, which requires strong labels of sound events in model training; however, annotating strong event labels is quite time-consuming. In this paper, we thus propose a method for the joint analysis of acoustic scenes and sound events based on the MTL framework with weak labels of sound events. In particular, in the proposed method, we introduce the multiple-instance learning scheme for weakly supervised training of sound event detection and evaluate four pooling functions, namely, max pooling, average pooling, exponential softmax pooling, and attention pooling. Experimental results obtained using parts of the TUT Acoustic Scenes 2016/2017 and TUT Sound Events 2016/2017 datasets show that the proposed MTL-based method with weak labels outperforms the conventional single-task-based scene classification and event detection models with weak labels in terms of both the scene classification and event detection performances.
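As a rough illustration of the four pooling functions named in the abstract, the sketch below aggregates frame-level event probabilities into one clip-level probability, which is what multiple-instance learning with weak labels requires. The formulas are the definitions commonly used in the weakly supervised sound event detection literature and are assumed here rather than taken from the paper; all function names are hypothetical, and in practice the attention scores would come from a learned layer.

```python
import math

def max_pool(probs):
    """Clip-level probability is the largest frame-level probability."""
    return max(probs)

def average_pool(probs):
    """Clip-level probability is the plain mean over frames."""
    return sum(probs) / len(probs)

def exp_softmax_pool(probs):
    """Weighted mean where each frame is weighted by exp(its own probability)."""
    weights = [math.exp(p) for p in probs]
    return sum(p * w for p, w in zip(probs, weights)) / sum(weights)

def attention_pool(probs, scores):
    """Weighted mean with softmax-normalized attention scores.

    `scores` stands in for the output of a learned attention layer
    (an assumption for this sketch).
    """
    m = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(s - m) for s in scores]
    return sum(p * w for p, w in zip(probs, weights)) / sum(weights)

# Usage: three frames, one of which strongly indicates the event.
frame_probs = [0.1, 0.9, 0.2]
clip_max = max_pool(frame_probs)
clip_avg = average_pool(frame_probs)
clip_exp = exp_softmax_pool(frame_probs)
clip_att = attention_pool(frame_probs, scores=[0.0, 0.0, 0.0])
```

With equal attention scores, attention pooling reduces to average pooling, while exponential softmax pooling lands between the average and the maximum because high-probability frames receive larger weights.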
