Mohammadhadi Bagheri

MULAN: Multitask Universal Lesion Analysis Network for Joint Lesion Detection, Tagging, and Segmentation

Aug 12, 2019

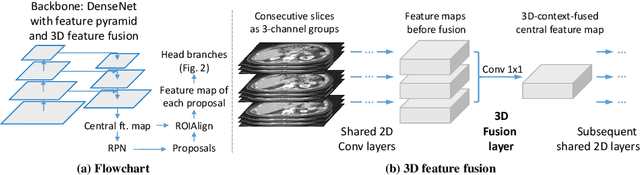

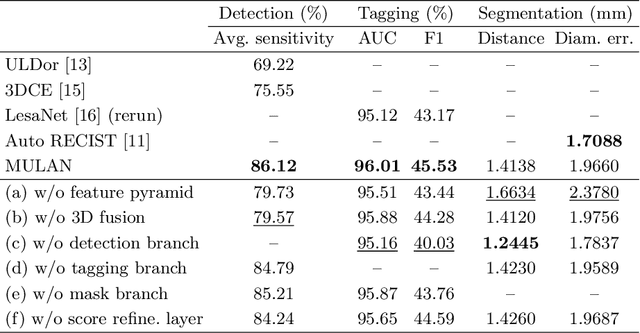

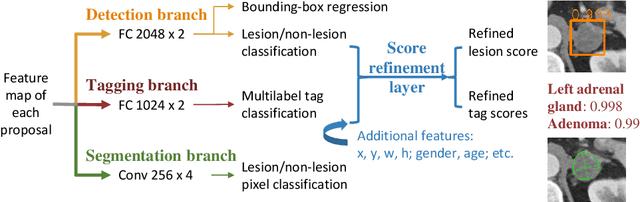

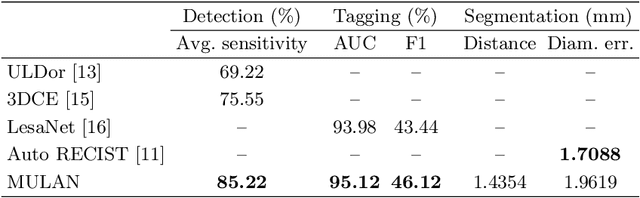

Abstract: When reading medical images such as a computed tomography (CT) scan, radiologists generally search across the image to find lesions, characterize and measure them, and then describe them in the radiological report. To automate this process, we propose a multitask universal lesion analysis network (MULAN) for joint detection, tagging, and segmentation of lesions in a variety of body parts, which greatly extends existing work on single-task lesion analysis of specific body parts. MULAN is based on an improved Mask R-CNN framework with three head branches and a 3D feature fusion strategy. It achieves state-of-the-art accuracy in the detection and tagging tasks on the DeepLesion dataset, which contains 32K lesions in the whole body. We also analyze the relationship between the three tasks and show that tag predictions can improve detection accuracy via a score refinement layer.

Holistic and Comprehensive Annotation of Clinically Significant Findings on Diverse CT Images: Learning from Radiology Reports and Label Ontology

Apr 27, 2019

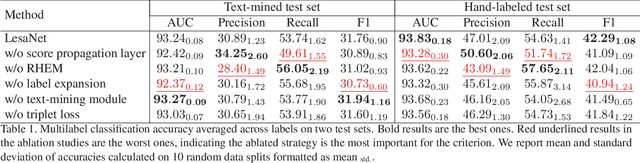

Abstract: In radiologists' routine work, one major task is to read a medical image, e.g., a CT scan, find significant lesions, and describe them in the radiology report. In this paper, we study the lesion description or annotation problem. Given a lesion image, our aim is to predict a comprehensive set of relevant labels, such as the lesion's body part, type, and attributes, which may assist downstream fine-grained diagnosis. To address this task, we first design a deep learning module to extract relevant semantic labels from the radiology reports associated with the lesion images. With the images and text-mined labels, we propose a lesion annotation network (LesaNet) based on a multilabel convolutional neural network (CNN) to learn all labels holistically. Hierarchical relations and mutually exclusive relations between the labels are leveraged to improve the label prediction accuracy. The relations are utilized in a label expansion strategy and a relational hard example mining algorithm. We also attach a simple score propagation layer to LesaNet to enhance recall and explore implicit relations between labels. Multilabel metric learning is combined with classification to enable interpretable prediction. We evaluated LesaNet on the public DeepLesion dataset, which contains over 32K diverse lesion images. Experiments show that LesaNet can precisely annotate the lesions using an ontology of 171 fine-grained labels with an average AUC of 0.9344.
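
The label expansion idea mentioned in this abstract can be illustrated with a minimal sketch. It assumes expansion means that a positive child label implies all of its ancestors in the hierarchy; the `PARENTS` table and the label names are hypothetical and are not taken from the LesaNet ontology.

```python
from typing import Dict, Iterable, Set

# Hypothetical child -> parents relations, for illustration only.
# The real ontology described in the abstract has 171 labels.
PARENTS: Dict[str, Set[str]] = {
    "left lung": {"lung"},
    "lung": {"chest"},
    "lung nodule": {"lung", "nodule"},
}


def expand_labels(labels: Iterable[str], parents: Dict[str, Set[str]]) -> Set[str]:
    """Return the given labels plus every ancestor reachable through `parents`."""
    expanded: Set[str] = set()
    stack = list(labels)
    while stack:
        label = stack.pop()
        if label in expanded:
            continue  # already visited, avoids repeated work on shared ancestors
        expanded.add(label)
        stack.extend(parents.get(label, ()))
    return expanded


if __name__ == "__main__":
    print(sorted(expand_labels({"left lung"}, PARENTS)))
```

Under this assumption, a lesion tagged only with a fine-grained label also receives its coarser ancestor labels as positives for training.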

Spatial-Temporal Convolutional LSTMs for Tumor Growth Prediction by Learning 4D Longitudinal Patient Data

Feb 23, 2019

Abstract: Prognostic tumor growth modeling via medical imaging observations is a challenging yet important problem in precision and predictive medicine. Traditionally, this problem is tackled through mathematical modeling and evaluated using relatively small patient datasets. Recent advances in convolutional networks (ConvNets) have demonstrated higher accuracy than mathematical models in predicting future tumor volumes. This indicates that deep learning may have great potential for addressing this problem. The state-of-the-art work models the cell invasion and mass-effect of tumor growth by training separate ConvNets on 2D image patches. Nevertheless, such a 2D modeling approach cannot make full use of the spatial-temporal imaging context of the tumor's longitudinal 4D (3D + time) patient data. Moreover, previous methods are incapable of predicting clinically relevant tumor properties other than tumor volume. In this paper, we formulate the tumor growth process through convolutional LSTMs (ConvLSTM) that extract the tumor's static imaging appearances and simultaneously capture its temporal dynamic changes within a single network. We extend ConvLSTM into the spatial-temporal domain (ST-ConvLSTM) by jointly learning the inter-slice 3D contexts and the longitudinal dynamics. Our approach can incorporate other non-imaging patient information in an end-to-end trainable manner. Experiments are conducted on the largest 4D longitudinal tumor dataset of 33 patients to date. Results validate that the proposed ST-ConvLSTM model produces a Dice score of 83.2% ± 5.1% and a relative volume difference (RVD) of 11.2% ± 10.8%, both statistically significantly outperforming (p<0.05) the compared methods (a traditional linear model, ConvLSTM, and a generative adversarial network (GAN)) under the metric of predicting future tumor volumes. Finally, our new method enables the prediction of both cell density and CT intensity numbers.

3D Context Enhanced Region-based Convolutional Neural Network for End-to-End Lesion Detection

Jul 29, 2018

Abstract: Detecting lesions from computed tomography (CT) scans is an important but difficult problem because non-lesions and true lesions can appear similar. 3D context is known to be helpful in this differentiation task. However, existing end-to-end detection frameworks of convolutional neural networks (CNNs) are mostly designed for 2D images. In this paper, we propose 3D context enhanced region-based CNN (3DCE) to incorporate 3D context information efficiently by aggregating feature maps of 2D images. 3DCE is easy to train and end-to-end in training and inference. A universal lesion detector is developed to detect all kinds of lesions in one algorithm using the DeepLesion dataset. Experimental results on this challenging task demonstrate the effectiveness of 3DCE. We have released the code of 3DCE at https://github.com/rsummers11/CADLab/tree/master/lesion_detector_3DCE.

Semi-Automatic RECIST Labeling on CT Scans with Cascaded Convolutional Neural Networks

Jun 25, 2018

Abstract: Response evaluation criteria in solid tumors (RECIST) is the standard measurement for tumor extent to evaluate treatment responses in cancer patients. As such, RECIST annotations must be accurate. However, RECIST annotations manually labeled by radiologists require professional knowledge and are time-consuming, subjective, and prone to inconsistency among different observers. To alleviate these problems, we propose a cascaded convolutional neural network based method to semi-automatically label RECIST annotations and drastically reduce annotation time. The proposed method consists of two stages: lesion region normalization and RECIST estimation. We employ the spatial transformer network (STN) for lesion region normalization, where a localization network is designed to predict the lesion region and the transformation parameters with a multi-task learning strategy. For RECIST estimation, we adapt the stacked hourglass network (SHN), introducing a relationship constraint loss to improve the estimation precision. STN and SHN can both be learned in an end-to-end fashion. We train our system on the DeepLesion dataset, obtaining a consensus model trained on RECIST annotations performed by multiple radiologists over a multi-year period. Importantly, when judged against the inter-reader variability of two additional radiologist raters, our system performs more stably and with less variability, suggesting that RECIST annotations can be reliably obtained with reduced labor and time.
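
As a small illustration of what a RECIST annotation encodes, the sketch below computes the long-axis and short-axis lengths from four endpoint coordinates and the angle between the two axes. It assumes endpoints are given as 2D pixel coordinates with isotropic pixel spacing; the function names and the example coordinates are hypothetical and do not come from the paper.

```python
import math
from typing import Tuple

Point = Tuple[float, float]
Axis = Tuple[Point, Point]


def recist_diameters(long_axis: Axis, short_axis: Axis,
                     spacing_mm: float = 1.0) -> Tuple[float, float]:
    """Return (long, short) diameters in mm, assuming isotropic pixel spacing."""
    long_mm = math.dist(*long_axis) * spacing_mm
    short_mm = math.dist(*short_axis) * spacing_mm
    return long_mm, short_mm


def axes_angle_deg(long_axis: Axis, short_axis: Axis) -> float:
    """Return the angle between the two axes as lines, in the range [0, 90] degrees."""
    (lx1, ly1), (lx2, ly2) = long_axis
    (sx1, sy1), (sx2, sy2) = short_axis
    u = (lx2 - lx1, ly2 - ly1)
    v = (sx2 - sx1, sy2 - sy1)
    norm = math.hypot(*u) * math.hypot(*v)
    if norm == 0:
        raise ValueError("an axis has zero length")
    cos_angle = min(1.0, abs(u[0] * v[0] + u[1] * v[1]) / norm)
    return math.degrees(math.acos(cos_angle))


if __name__ == "__main__":
    long_axis = ((10.0, 20.0), (40.0, 60.0))
    short_axis = ((33.0, 34.0), (17.0, 46.0))
    print(recist_diameters(long_axis, short_axis, spacing_mm=0.8))
    print(axes_angle_deg(long_axis, short_axis))
```

An angle close to 90 degrees indicates that the two measured diameters are roughly perpendicular, which is how the short axis is conventionally drawn relative to the long axis.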

Deep LOGISMOS: Deep Learning Graph-based 3D Segmentation of Pancreatic Tumors on CT scans

Jan 25, 2018

Abstract: This paper reports the Deep LOGISMOS approach to 3D tumor segmentation, which incorporates boundary information derived from deep contextual learning into LOGISMOS (layered optimal graph image segmentation of multiple objects and surfaces). Accurate and reliable tumor segmentation is essential to tumor growth analysis and treatment selection. A fully convolutional network (FCN), UNet, is first trained using three adjacent 2D patches centered at the tumor, providing a contextual UNet segmentation and a probability map for each 2D patch. The UNet segmentation is then refined by a Gaussian Mixture Model (GMM) and morphological operations. The refined UNet segmentation is used to provide the initial shape boundary to build a segmentation graph. The cost for each node of the graph is determined by the UNet probability maps. Finally, a max-flow algorithm is employed to find the globally optimal solution, thus obtaining the final segmentation. For evaluation, we applied the method to pancreatic tumor segmentation on a dataset of 51 CT scans, among which 30 scans were used for training and 21 for testing. With Deep LOGISMOS, the Dice Similarity Coefficient (DSC) and Relative Volume Difference (RVD) reached 83.2 ± 7.8% and 18.6 ± 17.4%, respectively; both are significantly improved (p<0.05) compared with contextual UNet and/or LOGISMOS alone.
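
The two evaluation metrics named in this abstract can be sketched in a few lines. The example assumes flattened binary masks of equal length and takes RVD as the absolute volume difference divided by the ground-truth volume; the paper may use a different convention, and the function names are illustrative only.

```python
from typing import Sequence


def dice_coefficient(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient of two flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same length")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    if total == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * intersection / total


def relative_volume_difference(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Assumed definition: |V_pred - V_truth| / V_truth, in voxel counts."""
    v_pred = sum(1 for p in pred if p)
    v_truth = sum(1 for t in truth if t)
    if v_truth == 0:
        raise ValueError("ground-truth volume is zero")
    return abs(v_pred - v_truth) / v_truth


if __name__ == "__main__":
    pred = [1, 1, 1, 1, 0, 0]
    truth = [0, 1, 1, 1, 0, 0]
    print(dice_coefficient(pred, truth))
    print(relative_volume_difference(pred, truth))
```

Dice rewards voxel-wise overlap, whereas RVD compares only total volumes, so a segmentation can score well on one and poorly on the other.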

Unsupervised Joint Mining of Deep Features and Image Labels for Large-scale Radiology Image Categorization and Scene Recognition

Dec 27, 2017

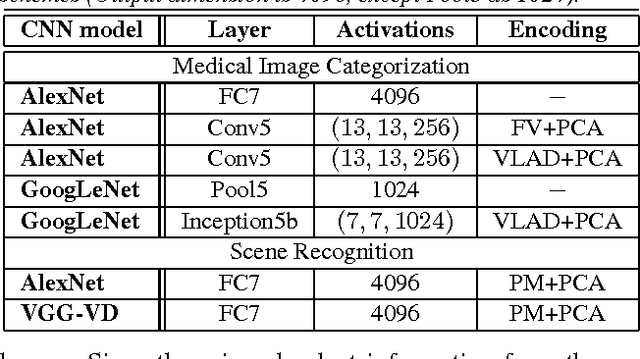

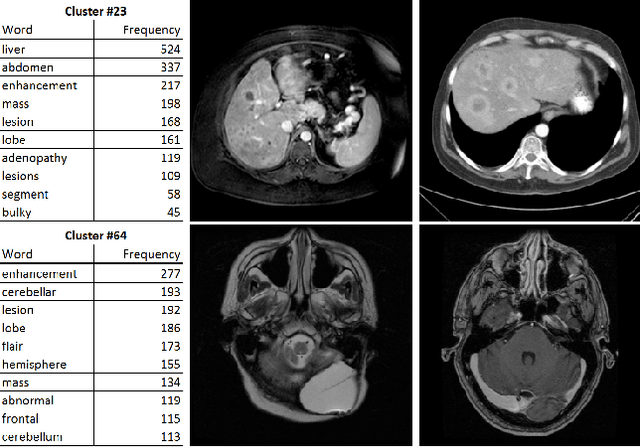

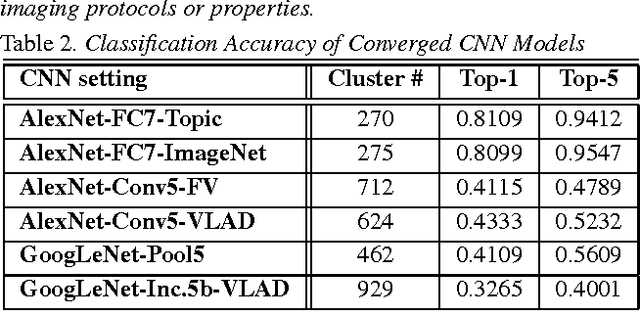

Abstract: The recent rapid and tremendous success of deep convolutional neural networks (CNN) on many challenging computer vision tasks largely derives from the accessibility of the well-annotated ImageNet and PASCAL VOC datasets. Nevertheless, unsupervised image categorization (i.e., without the ground-truth labeling) is much less investigated, yet critically important and difficult when annotations are extremely hard to obtain in the conventional way of "Google Search" and crowdsourcing. We address this problem by presenting a looped deep pseudo-task optimization (LDPO) framework for joint mining of deep CNN features and image labels. Our method is conceptually simple and rests upon the hypothesized "convergence" of better labels leading to better trained CNN models, which in turn feed more discriminative image representations to facilitate more meaningful clusters/labels. Our proposed method is validated in tackling two important applications: 1) Large-scale medical image annotation has always been a prohibitively expensive and easily-biased task even for well-trained radiologists. Significantly better image categorization results are achieved via our proposed approach compared to the previous state-of-the-art method. 2) Unsupervised scene recognition on representative and publicly available datasets with our proposed technique is examined. The LDPO achieves excellent quantitative scene classification results. On the MIT indoor scene dataset, it attains a clustering accuracy of 75.3%, compared to the state-of-the-art supervised classification accuracy of 81.0% (when both are based on the VGG-VD model).

NegBio: a high-performance tool for negation and uncertainty detection in radiology reports

Dec 27, 2017

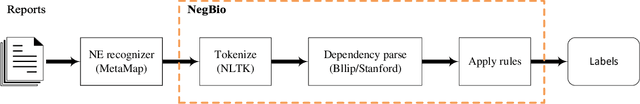

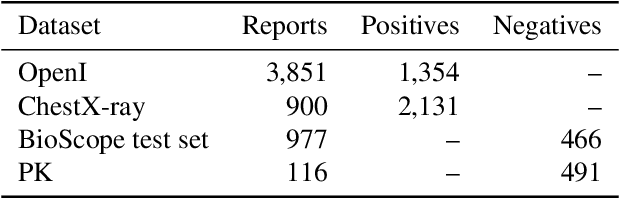

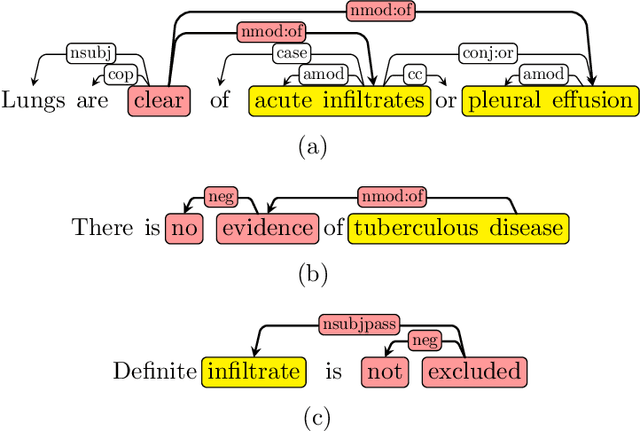

Abstract: Negative and uncertain medical findings are frequent in radiology reports, but discriminating them from positive findings remains challenging for information extraction. Here, we propose a new algorithm, NegBio, to detect negative and uncertain findings in radiology reports. Unlike previous rule-based methods, NegBio utilizes patterns on universal dependencies to identify the scope of triggers that are indicative of negation or uncertainty. We evaluated NegBio on four datasets, including two public benchmarking corpora of radiology reports, a new radiology corpus that we annotated for this work, and a public corpus of general clinical texts. Evaluation on these datasets demonstrates that NegBio is highly accurate for detecting negative and uncertain findings and compares favorably to a widely used state-of-the-art system, NegEx (an average of 9.5% improvement in precision and 5.1% in F1-score).

ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases

Dec 14, 2017

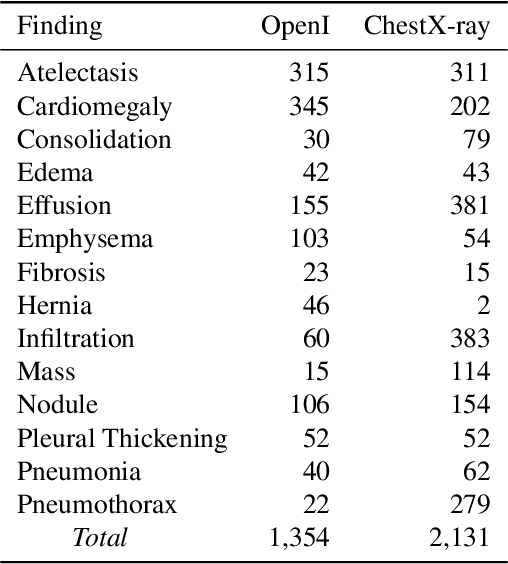

Abstract: The chest X-ray is one of the most commonly accessible radiological examinations for screening and diagnosis of many lung diseases. A tremendous number of X-ray imaging studies accompanied by radiological reports are accumulated and stored in many modern hospitals' Picture Archiving and Communication Systems (PACS). On the other hand, it is still an open question how this type of hospital-size knowledge database containing invaluable imaging informatics (i.e., loosely labeled) can be used to facilitate the data-hungry deep learning paradigms in building truly large-scale high precision computer-aided diagnosis (CAD) systems. In this paper, we present a new chest X-ray database, namely "ChestX-ray8", which comprises 108,948 frontal-view X-ray images of 32,717 unique patients with the text-mined eight disease image labels (where each image can have multi-labels), from the associated radiological reports using natural language processing. Importantly, we demonstrate that these commonly occurring thoracic diseases can be detected and even spatially located via a unified weakly-supervised multi-label image classification and disease localization framework, which is validated using our proposed dataset. Although the initial quantitative results are promising as reported, deep convolutional neural network based "reading chest X-rays" (i.e., recognizing and locating the common disease patterns trained with only image-level labels) remains a strenuous task for fully-automated high precision CAD systems. Data download link: https://nihcc.app.box.com/v/ChestXray-NIHCC
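
Because each image can carry several of the eight labels, a multi-label classifier is typically trained on multi-hot target vectors. The sketch below shows one possible encoding. It assumes labels arrive as a pipe-separated string with "No Finding" marking a normal image; that format, the label order, and the function name `encode_labels` are assumptions made for illustration rather than details stated in the abstract.

```python
from typing import List

# The eight disease labels of ChestX-ray8; the order here is an arbitrary choice.
LABELS: List[str] = [
    "Atelectasis", "Cardiomegaly", "Effusion", "Infiltration",
    "Mass", "Nodule", "Pneumonia", "Pneumothorax",
]


def encode_labels(finding: str, sep: str = "|") -> List[int]:
    """Convert a separator-joined label string into a multi-hot vector."""
    names = {name.strip() for name in finding.split(sep) if name.strip()}
    names.discard("No Finding")  # assumed marker for images with no disease label
    unknown = names - set(LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    return [1 if label in names else 0 for label in LABELS]


if __name__ == "__main__":
    print(encode_labels("Cardiomegaly|Effusion"))
    print(encode_labels("No Finding"))
```

Each position of the vector is then an independent binary target, which matches the multi-label setting described in the abstract.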
