Mingyang Yang

FPSAttention: Training-Aware FP8 and Sparsity Co-Design for Fast Video Diffusion

Jun 06, 2025

Abstract:Diffusion generative models have become the standard for producing high-quality, coherent video content, yet their slow inference speeds and high computational demands hinder practical deployment. Although both quantization and sparsity can independently accelerate inference while maintaining generation quality, naively combining these techniques in existing training-free approaches leads to significant performance degradation due to the lack of joint optimization. We introduce FPSAttention, a novel training-aware co-design of FP8 quantization and sparsity for video generation, with a focus on the 3D bi-directional attention mechanism. Our approach features three key innovations: 1) A unified 3D tile-wise granularity that simultaneously supports both quantization and sparsity; 2) A denoising step-aware strategy that adapts to the noise schedule, addressing the strong correlation between quantization/sparsity errors and denoising steps; 3) A native, hardware-friendly kernel that leverages FlashAttention and is implemented with optimized Hopper architecture features for highly efficient execution. Trained on Wan2.1's 1.3B and 14B models and evaluated on the VBench benchmark, FPSAttention achieves a 7.09x kernel speedup for attention operations and a 4.96x end-to-end speedup for video generation compared to the BF16 baseline at 720p resolution, without sacrificing generation quality.

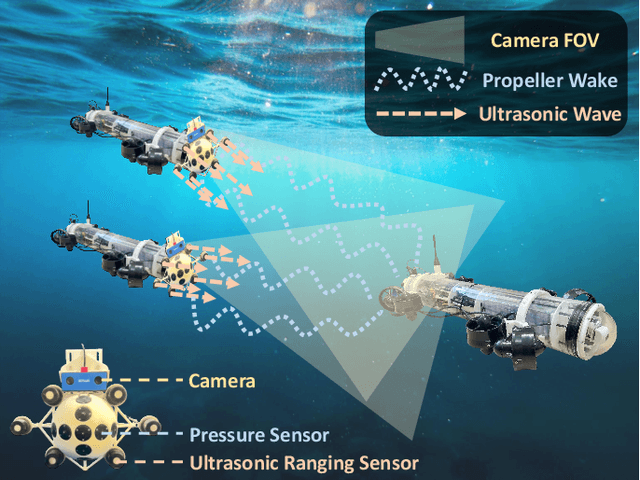

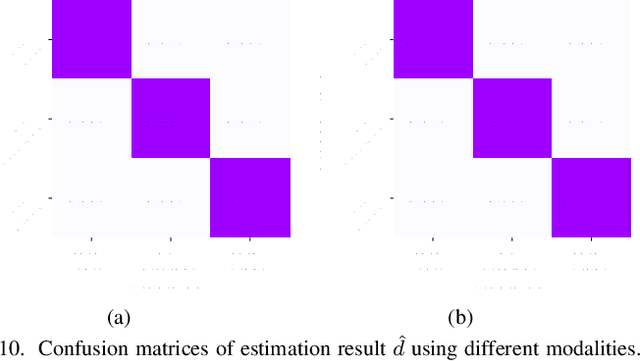

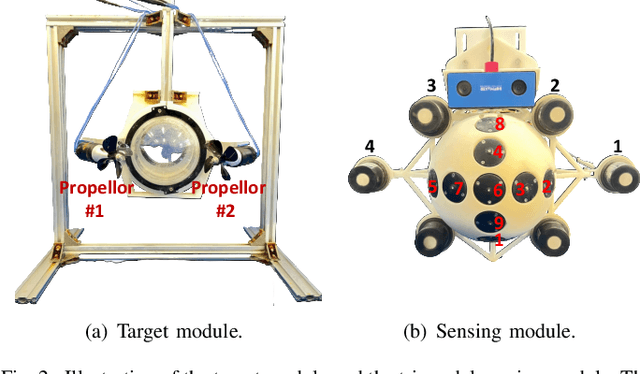

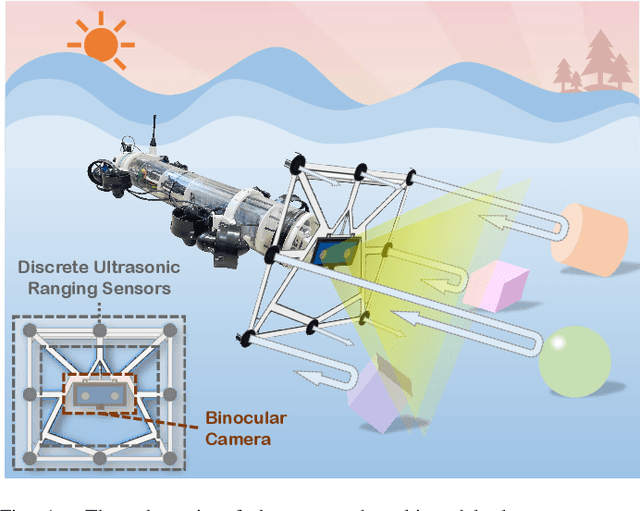

Learning-Based Leader Localization for Underwater Vehicles With Optical-Acoustic-Pressure Sensor Fusion

Feb 28, 2025

Abstract:Underwater vehicles have emerged as a critical technology for exploring and monitoring aquatic environments. The deployment of multi-vehicle systems has gained substantial interest due to their capability to perform collaborative tasks with improved efficiency. However, achieving precise localization of a leader underwater vehicle within a multi-vehicle configuration remains a significant challenge, particularly in dynamic and complex underwater conditions. To address this issue, this paper presents a novel tri-modal sensor fusion neural network approach that integrates optical, acoustic, and pressure sensors to localize the leader vehicle. The proposed method leverages the unique strengths of each sensor modality to improve localization accuracy and robustness. Specifically, optical sensors provide high-resolution imaging for precise relative positioning, acoustic sensors enable long-range detection and ranging, and pressure sensors offer environmental context awareness. The fusion of these sensor modalities is implemented using a deep learning architecture designed to extract and combine complementary features from raw sensor data. The effectiveness of the proposed method is validated through a custom-designed testing platform. Extensive data collection and experimental evaluations demonstrate that the tri-modal approach significantly improves the accuracy and robustness of leader localization, outperforming both single-modal and dual-modal methods.

TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks

Dec 18, 2024

Abstract:We interact with computers on an everyday basis, be it in everyday life or work, and many aspects of work can be done entirely with access to a computer and the Internet. At the same time, thanks to improvements in large language models (LLMs), there has also been a rapid development in AI agents that interact with and effect change in their surrounding environments. But how performant are AI agents at helping to accelerate or even autonomously perform work-related tasks? The answer to this question has important implications for both industry looking to adopt AI into their workflows, and for economic policy to understand the effects that adoption of AI may have on the labor market. To measure the progress of these LLM agents' performance on real-world professional tasks, in this paper, we introduce TheAgentCompany, an extensible benchmark for evaluating AI agents that interact with the world in similar ways to those of a digital worker: by browsing the Web, writing code, running programs, and communicating with other coworkers. We build a self-contained environment with internal web sites and data that mimics a small software company environment, and create a variety of tasks that may be performed by workers in such a company. We test baseline agents powered by both closed API-based and open-weights language models (LMs), and find that with the most competitive agent, 24% of the tasks can be completed autonomously. This paints a nuanced picture on task automation with LM agents -- in a setting simulating a real workplace, a good portion of simpler tasks could be solved autonomously, but more difficult long-horizon tasks are still beyond the reach of current systems.

RealisDance: Equip controllable character animation with realistic hands

Sep 10, 2024

Abstract:Controllable character animation is an emerging task that generates character videos controlled by pose sequences from given character images. Although character consistency has made significant progress via reference UNet, another crucial factor, pose control, has not been well studied by existing methods yet, resulting in several issues: 1) The generation may fail when the input pose sequence is corrupted. 2) The hands generated using the DWPose sequence are blurry and unrealistic. 3) The generated video will be shaky if the pose sequence is not smooth enough. In this paper, we present RealisDance to handle all the above issues. RealisDance adaptively leverages three types of poses, avoiding failed generation caused by corrupted pose sequences. Among these pose types, HaMeR provides accurate 3D and depth information of hands, enabling RealisDance to generate realistic hands even for complex gestures. Besides using temporal attention in the main UNet, RealisDance also inserts temporal attention into the pose guidance network, smoothing the video from the pose condition aspect. Moreover, we introduce pose shuffle augmentation during training to further improve generation robustness and video smoothness. Qualitative experiments demonstrate the superiority of RealisDance over other existing methods, especially in hand quality.

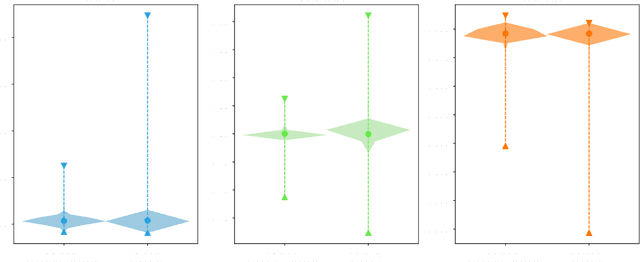

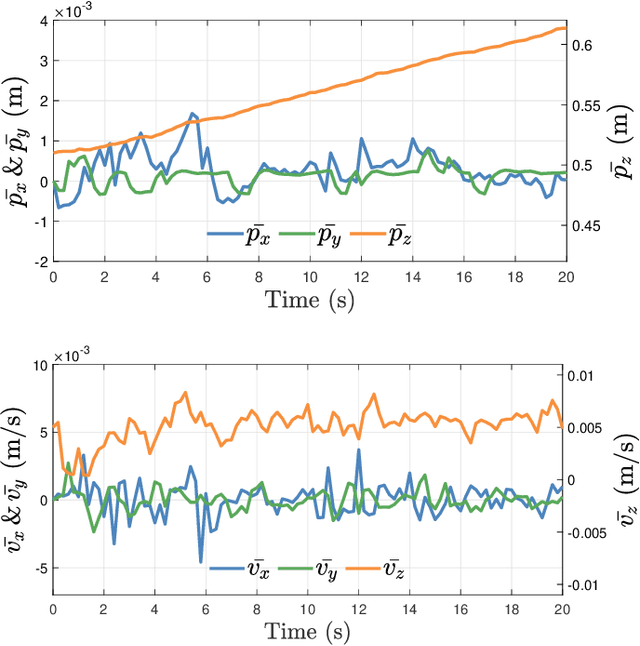

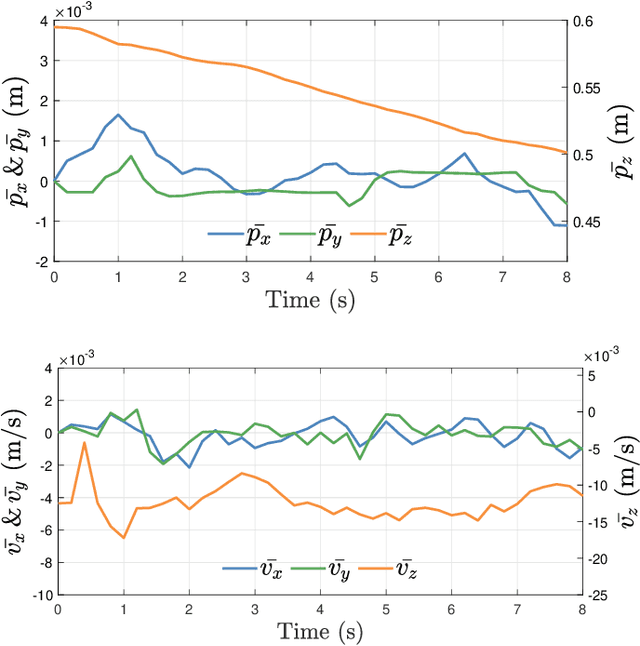

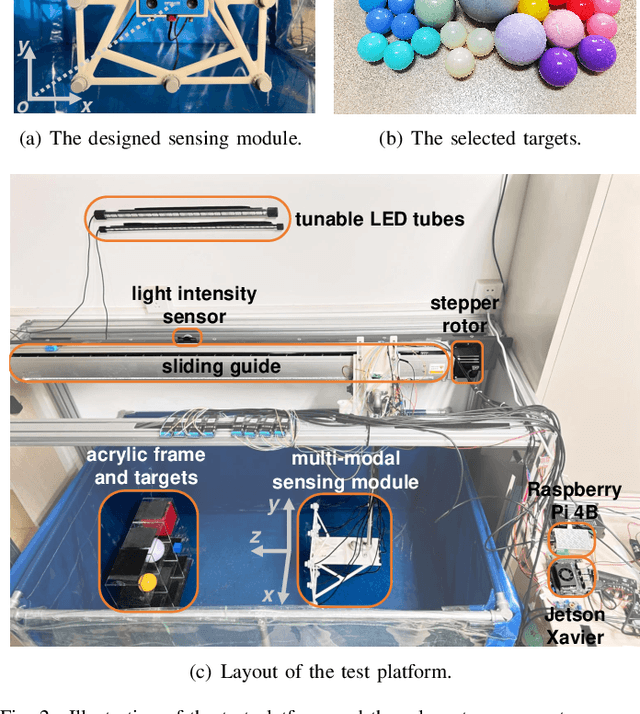

A Multi-Modal Approach Based on Large Vision Model for Close-Range Underwater Target Localization

Jan 09, 2024

Abstract:Underwater target localization uses real-time sensory measurements to estimate the position of underwater objects of interest, providing critical feedback information for underwater robots. While acoustic sensing is the most acknowledged method in underwater robots and possibly the only effective approach for long-range underwater target localization, such a sensing modality generally suffers from low resolution, high cost and high energy consumption, thus leading to mediocre performance when applied to close-range underwater target localization. On the other hand, optical sensing has attracted increasing attention in the underwater robotics community for its advantages of high resolution and low cost, holding great potential, particularly in close-range underwater target localization. However, most existing studies in underwater optical sensing are restricted to specific types of targets due to the limited training data available. In addition, these studies typically focus on the design of estimation algorithms and ignore the influence of illumination conditions on the sensing performance, thus hindering wider applications in the real world. To address the aforementioned issues, this paper proposes a novel target localization method that assimilates both optical and acoustic sensory measurements to estimate the 3D positions of close-range underwater targets. A test platform with controllable illumination conditions is designed and developed to experimentally investigate the proposed multi-modal sensing approach. A large vision model is applied to process the optical imaging measurements, eliminating the requirement for training data acquisition, thus significantly expanding the scope of potential applications. Extensive experiments are conducted, the results of which validate the effectiveness of the proposed underwater target localization method.
