A Multi-Modal Approach Based on Large Vision Model for Close-Range Underwater Target Localization

Jan 09, 2024

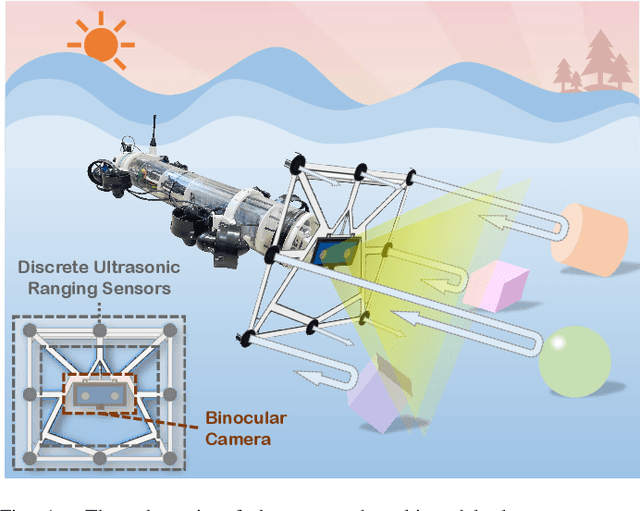

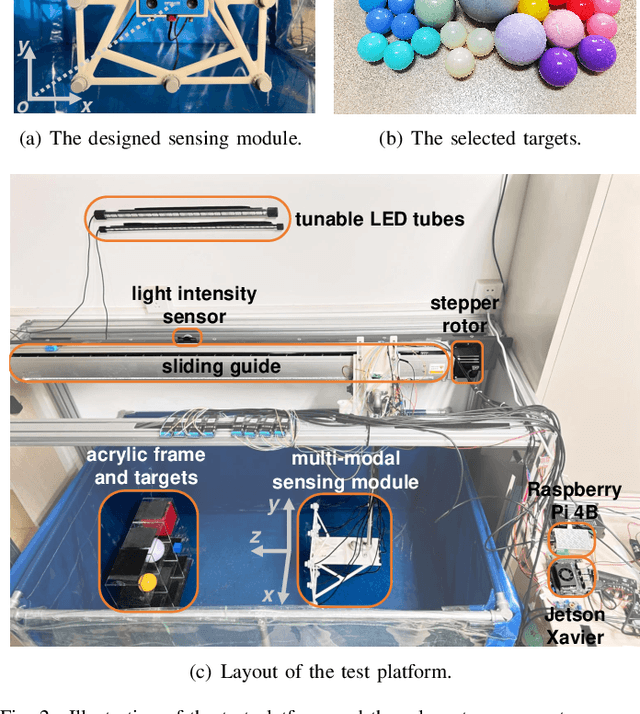

Underwater target localization uses real-time sensory measurements to estimate the position of underwater objects of interest, providing critical feedback for underwater robots. While acoustic sensing is the most widely adopted modality in underwater robotics and possibly the only effective approach for long-range underwater target localization, it generally suffers from low resolution, high cost, and high energy consumption, leading to mediocre performance when applied to close-range underwater target localization. Optical sensing, on the other hand, has attracted increasing attention in the underwater robotics community for its high resolution and low cost, and it holds great potential particularly for close-range underwater target localization. However, most existing studies in underwater optical sensing are restricted to specific types of targets because of the limited training data available. In addition, these studies typically focus on the design of estimation algorithms and ignore the influence of illumination conditions on sensing performance, which hinders wider application in the real world. To address these issues, this paper proposes a novel target localization method that assimilates both optical and acoustic sensory measurements to estimate the 3D positions of close-range underwater targets. A test platform with controllable illumination conditions is designed and developed to experimentally investigate the proposed multi-modal sensing approach. A large vision model is applied to process the optical imaging measurements, eliminating the need for training data acquisition and thus significantly expanding the scope of potential applications. Extensive experiments are conducted, and the results validate the effectiveness of the proposed underwater target localization method.
