Mathieu Labbé

Appearance-Based Loop Closure Detection for Online Large-Scale and Long-Term Operation

Jul 22, 2024

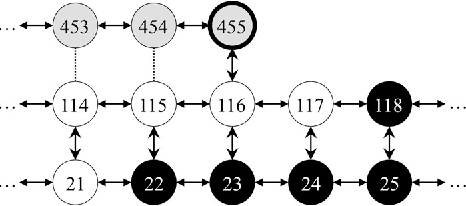

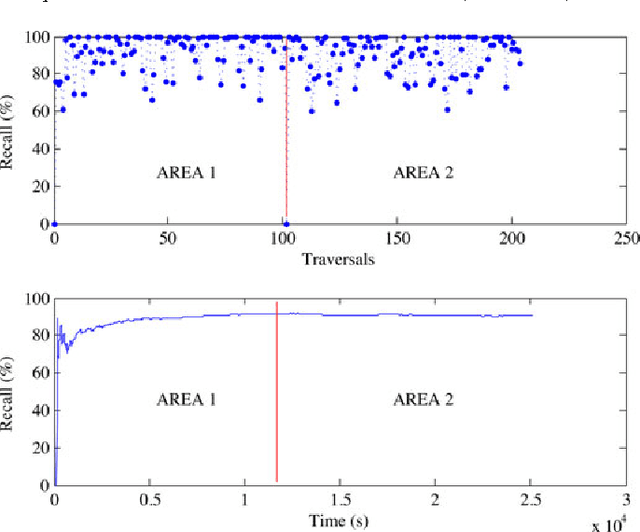

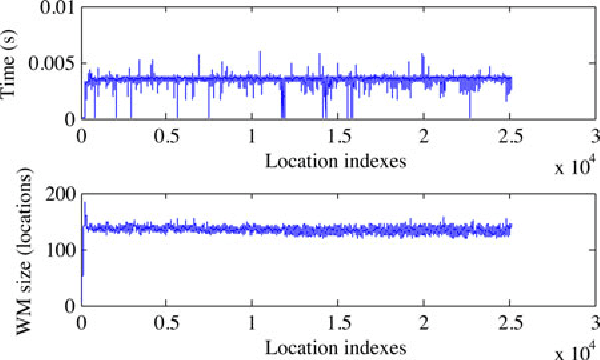

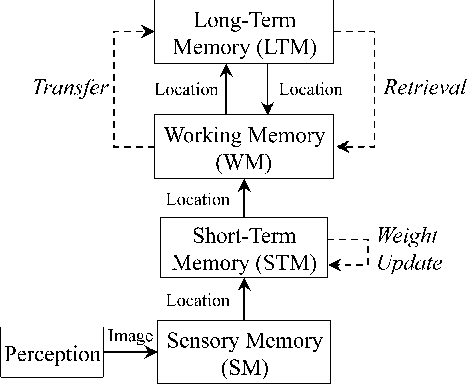

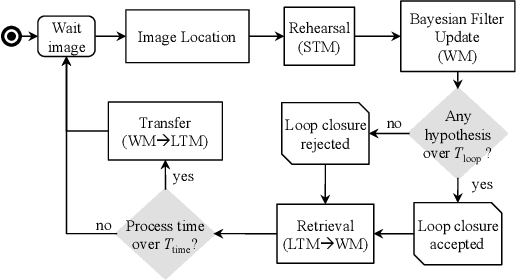

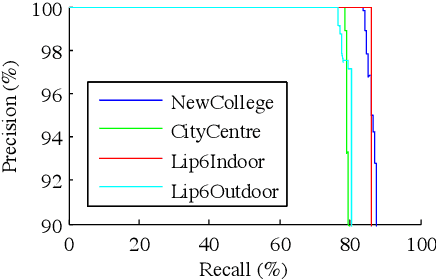

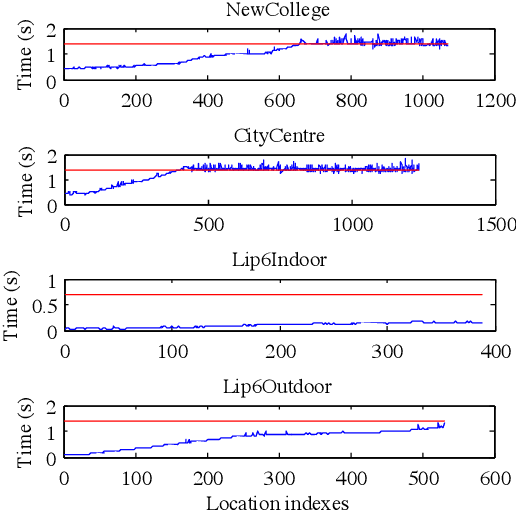

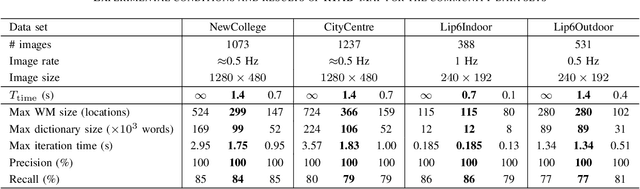

Abstract: In appearance-based localization and mapping, loop closure detection is the process used to determine whether the current observation comes from a previously visited location or a new one. As the size of the internal map increases, so does the time required to compare new observations with all stored locations, eventually limiting online processing. This paper presents an online loop closure detection approach for large-scale and long-term operation. The approach is based on a memory management method, which limits the number of locations used for loop closure detection so that the computation time remains under real-time constraints. The idea consists of keeping the most recent and frequently observed locations in a Working Memory (WM) used for loop closure detection, and transferring the others into a Long-Term Memory (LTM). When a match is found between the current location and one stored in WM, associated locations stored in LTM can be updated and remembered for additional loop closure detections. Results demonstrate the approach's adaptability and scalability using ten standard data sets from other appearance-based loop closure approaches, one custom data set using real images taken over a 2 km loop of our university campus, and one custom data set (7 hours) using virtual images from the racing video game "Need for Speed: Most Wanted".

* 12 pages, 11 figures
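
The WM/LTM idea described in the abstract above can be illustrated with a minimal sketch. This is not RTAB-Map's code or API: the names `Location`, `Memory`, `wm_capacity` and `weight` are hypothetical, and a fixed location count stands in for the real-time constraint that the paper uses to trigger transfers. The sketch also assumes that the newest location and the neighbors of a matched location are protected from transfer.

```python
from dataclasses import dataclass, field


@dataclass
class Location:
    loc_id: int
    weight: int = 0                      # how often the location was matched again
    neighbors: set = field(default_factory=set)


class Memory:
    """Toy Working Memory / Long-Term Memory manager (illustrative only)."""

    def __init__(self, wm_capacity):
        # Assumption: a location count is used as a proxy for a time budget.
        self.wm_capacity = wm_capacity
        self.wm = {}    # locations compared against for loop closure detection
        self.ltm = {}   # locations set aside, not used for comparison

    def add(self, loc_id, neighbors=()):
        """Insert a new location into WM, then transfer if WM is too large."""
        self.wm[loc_id] = Location(loc_id, neighbors=set(neighbors))
        self._transfer(protected={loc_id})

    def observe_match(self, loc_id):
        """A loop closure was found with a WM location: reinforce it and
        bring its neighbors stored in LTM back into WM."""
        loc = self.wm[loc_id]
        loc.weight += 1
        for n in sorted(loc.neighbors):
            if n in self.ltm:
                self.wm[n] = self.ltm.pop(n)
        self._transfer(protected={loc_id} | loc.neighbors)

    def _transfer(self, protected=frozenset()):
        """Move the least observed, then oldest, locations from WM to LTM."""
        while len(self.wm) > self.wm_capacity:
            candidates = [l for l in self.wm.values() if l.loc_id not in protected]
            if not candidates:
                break
            victim = min(candidates, key=lambda l: (l.weight, l.loc_id))
            self.ltm[victim.loc_id] = self.wm.pop(victim.loc_id)
```

In this sketch, a location that keeps being matched accumulates weight and stays in WM, while rarely seen locations drift to LTM and return only when a matched neighbor pulls them back.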

Memory Management for Real-Time Appearance-Based Loop Closure Detection

Jul 22, 2024

Abstract: Loop closure detection is the process of finding a match between the current location and previously visited locations in SLAM. The time required to process new observations increases with the size of the internal map, which may affect real-time processing. In this paper, we present a novel real-time loop closure detection approach for large-scale and long-term SLAM. Our approach is based on a memory management method that keeps computation time for each new observation under a fixed limit. Results demonstrate the approach's adaptability and scalability using four standard data sets.

* 6 pages, 3 figures. arXiv admin note: substantial text overlap with arXiv:2407.15304

RTAB-Map as an Open-Source Lidar and Visual SLAM Library for Large-Scale and Long-Term Online Operation

Mar 10, 2024

Abstract: Distributed as an open source library since 2013, RTAB-Map started as an appearance-based loop closure detection approach with memory management to deal with large-scale and long-term online operation. It then grew to implement Simultaneous Localization and Mapping (SLAM) on various robots and mobile platforms. As each application brings its own set of constraints on sensors, processing capabilities and locomotion, it raises the question of which SLAM approach is the most appropriate to use in terms of cost, accuracy, computation power and ease of integration. Since most SLAM approaches are either visual or lidar-based, comparison is difficult. Therefore, we decided to extend RTAB-Map to support both visual and lidar SLAM, providing in one package a tool allowing users to implement and compare a variety of 3D and 2D solutions for a wide range of applications with different robots and sensors. This paper presents this extended version of RTAB-Map and its use in comparing, both quantitatively and qualitatively, a large selection of popular real-world datasets (e.g., KITTI, EuRoC, TUM RGB-D, MIT Stata Center on PR2 robot), outlining strengths and limitations of visual and lidar SLAM configurations from a practical perspective for autonomous navigation applications.

* 40 pages, 19 figures

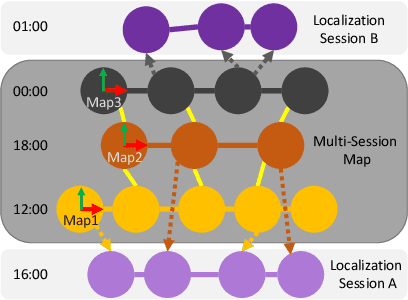

Long-Term Online Multi-Session Graph-Based SPLAM with Memory Management

Dec 30, 2022

Abstract: For long-term simultaneous planning, localization and mapping (SPLAM), a robot should be able to continuously update its map according to the dynamic changes of the environment and the new areas explored. With limited onboard computation capabilities, a robot should also be able to limit the size of the map used for online localization and mapping. This paper addresses these challenges using a memory management mechanism, which distinguishes locations that should remain in a Working Memory (WM) for online processing from locations that should be transferred to a Long-Term Memory (LTM). When revisiting previously mapped areas that are in LTM, the mechanism can retrieve these locations and place them back in WM for online SPLAM. The approach is tested on a robot equipped with a short-range laser rangefinder and an RGB-D camera, patrolling autonomously for 10.5 km in an indoor environment over 11 sessions and encountering 139 people.

* 19 pages, 19 figures

Have I been here before? Learning to Close the Loop with LiDAR Data in Graph-Based SLAM

Mar 11, 2021

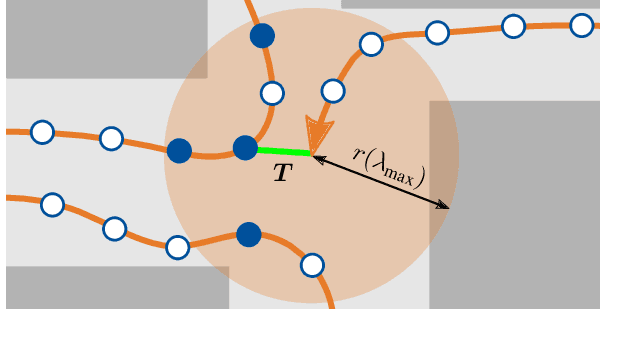

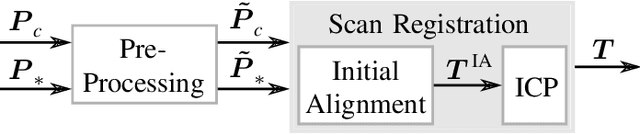

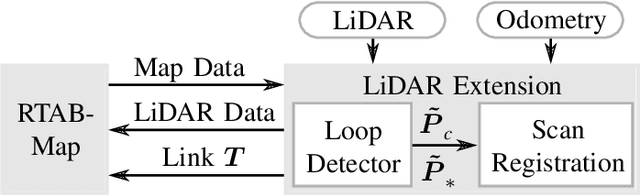

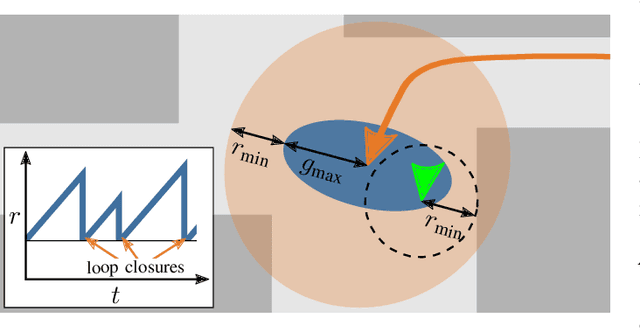

Abstract: This work presents an extension of graph-based SLAM methods to exploit the potential of 3D laser scans for loop detection. Every high-dimensional point cloud is replaced by a compact global descriptor, whereby a trained detector decides whether a loop exists. Searching for loops is performed locally in a variable space to consider the odometry drift. Since closing a wrong loop has fatal consequences, an extensive verification is performed before acceptance. The proposed algorithm is implemented as an extension of the widely used state-of-the-art library RTAB-Map, and several experiments show the improvement: during SLAM with a mobile service robot in changing indoor and outdoor campus environments, our approach improves RTAB-Map regarding the total number of closed loops. Especially in the presence of significant environmental changes, which typically lead to failure, localization becomes possible with our extension. Experiments with a car in traffic (KITTI benchmark) show the general applicability of our approach. These results are comparable to the state-of-the-art LiDAR method LOAM. The developed ROS package is freely available.

OpenTera: A Microservice Architecture Solution for Rapid Prototyping of Robotic Solutions to COVID-19 Challenges in Care Facilities

Mar 10, 2021

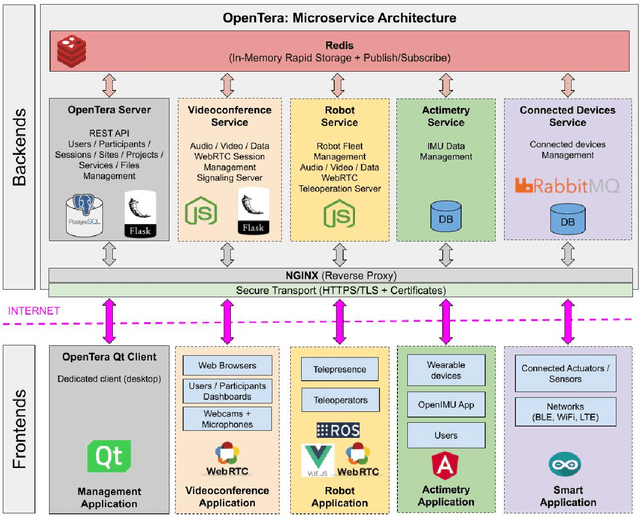

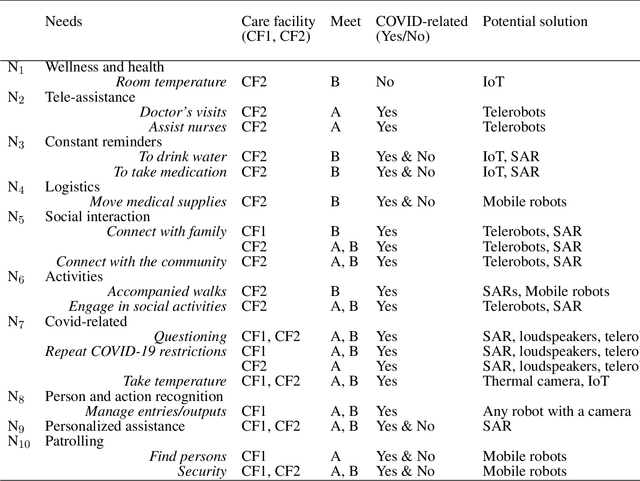

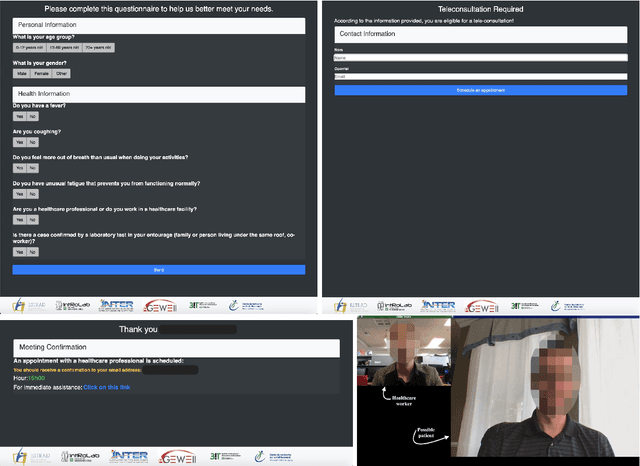

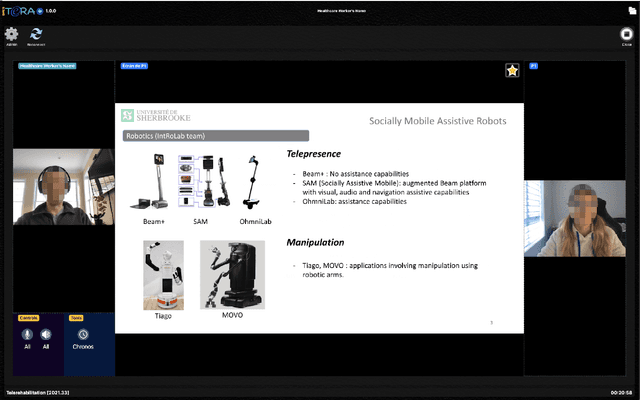

Abstract: As telecommunications technology progresses, telehealth frameworks are becoming more widely adopted in the context of long-term care (LTC) for older adults, both in care facilities and in homes. Today, robots could assist healthcare workers when they provide care to elderly patients, who constitute a particularly vulnerable population during the COVID-19 pandemic. Previous work on user-centered design of assistive technologies in LTC facilities for seniors has identified positive impacts. The need to deal with the effects of the COVID-19 pandemic emphasizes the benefits of this approach, but also highlights some new challenges for which robots could be interesting solutions to be deployed in LTC facilities. This requires customization of telecommunication and audio/video/data processing to address specific clinical requirements and needs. This paper presents OpenTera, an open source telehealth framework, aiming to facilitate prototyping of such solutions by software and robotic designers. Designed as a microservice-oriented platform, OpenTera is an end-to-end solution that employs a series of independent modules for tasks such as data and session management, telehealth, daily assistive tasks/actions, together with smart devices and environments, all connected through the framework. After explaining the framework, we illustrate how OpenTera can be used to implement robotic solutions for different applications identified in LTC facilities and homes, and we describe how we plan to validate them through field trials.

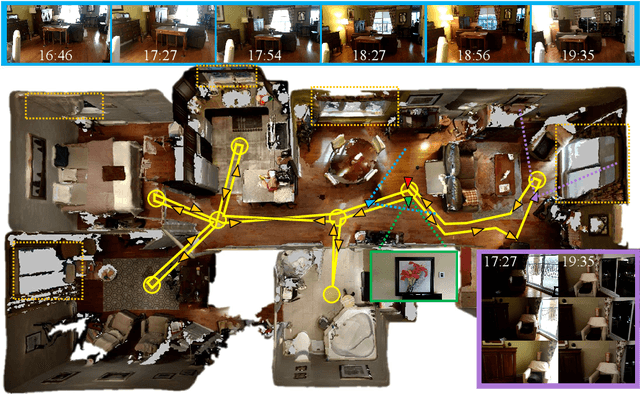

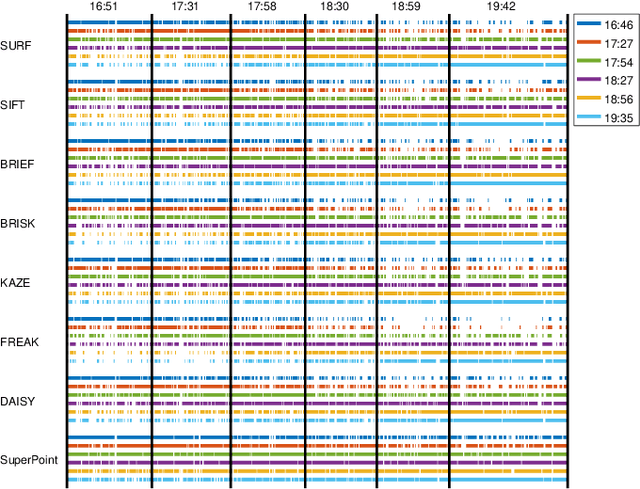

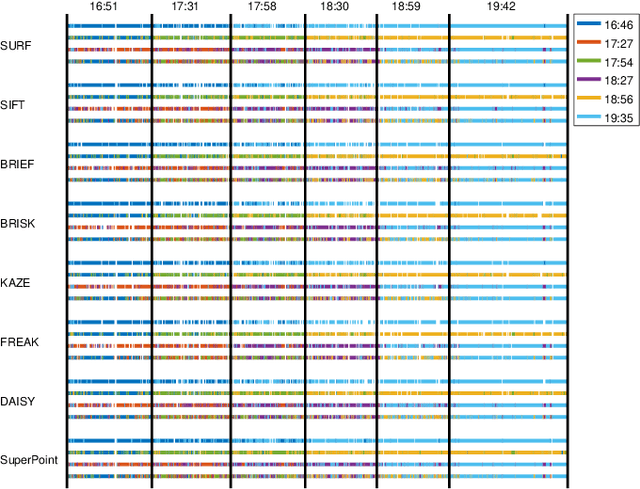

Multi-Session Visual SLAM for Illumination Invariant Localization in Indoor Environments

Mar 05, 2021

Abstract: For robots navigating using only a camera, illumination changes in indoor environments can cause localization failures during autonomous navigation. In this paper, we present a multi-session visual SLAM approach to create a map made of multiple variations of the same locations in different illumination conditions. The multi-session map can then be used at any hour of the day for improved localization capability. The approach presented is independent of the visual features used, and this is demonstrated by comparing localization performance between multi-session maps created using the RTAB-Map library with SURF, SIFT, BRIEF, FREAK, BRISK, KAZE, DAISY and SuperPoint visual features. The approach is tested on six mapping and six localization sessions recorded at 30-minute intervals during sunset using a Google Tango phone in a real apartment.

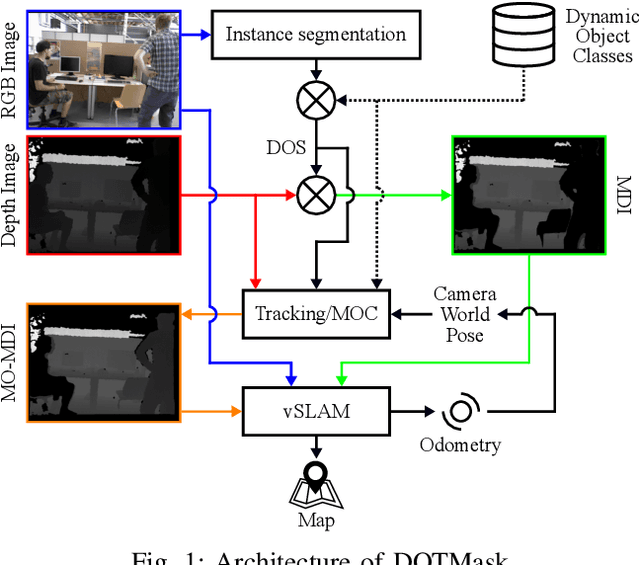

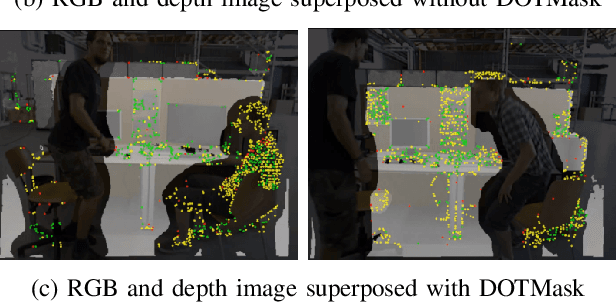

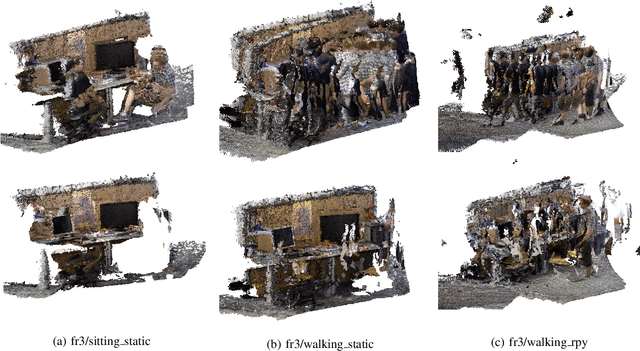

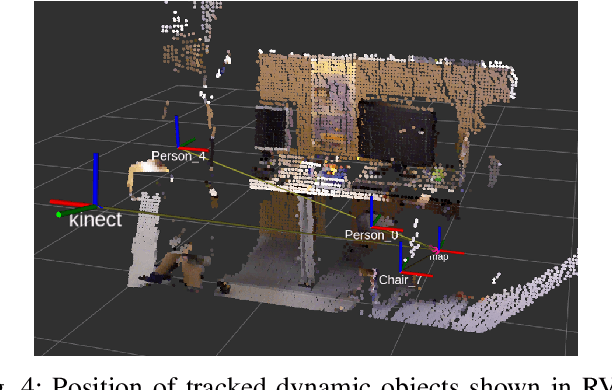

Dynamic Object Tracking and Masking for Visual SLAM

Jul 31, 2020

Abstract: In dynamic environments, performance of visual SLAM techniques can be impaired by visual features taken from moving objects. One solution is to identify those objects so that their visual features can be removed for localization and mapping. This paper presents a simple and fast pipeline that uses deep neural networks, extended Kalman filters and visual SLAM to improve both localization and mapping in dynamic environments (around 14 fps on a GTX 1080). Results on the dynamic sequences from the TUM dataset using RTAB-Map as visual SLAM suggest that the approach achieves similar localization performance compared to other state-of-the-art methods, while also providing the position of the tracked dynamic objects, a 3D map free of those dynamic objects, and better loop closure detection, with the whole pipeline able to run on a robot moving at moderate speed.
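
The masking step mentioned in the abstract above, removing visual features that fall on detected moving objects, might look roughly like the following sketch. It is not the paper's pipeline: the function name `mask_dynamic_keypoints` is hypothetical, and it assumes that detections arrive as axis-aligned bounding boxes `(x_min, y_min, x_max, y_max)` and that keypoints are plain `(x, y)` pixel coordinates.

```python
def mask_dynamic_keypoints(keypoints, boxes):
    """Return the keypoints that lie outside every bounding box.

    keypoints: list of (x, y) pixel coordinates
    boxes: list of (x_min, y_min, x_max, y_max) for detected dynamic objects
    """
    def inside(point, box):
        x, y = point
        x_min, y_min, x_max, y_max = box
        return x_min <= x <= x_max and y_min <= y <= y_max

    # Keep a keypoint only if no detected object covers it.
    return [kp for kp in keypoints if not any(inside(kp, b) for b in boxes)]
```

Only the surviving keypoints would then be passed on to localization and mapping, so features from moving objects do not enter the map.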

Cheap or Robust? The Practical Realization of Self-Driving Wheelchair Technology

Jul 13, 2018

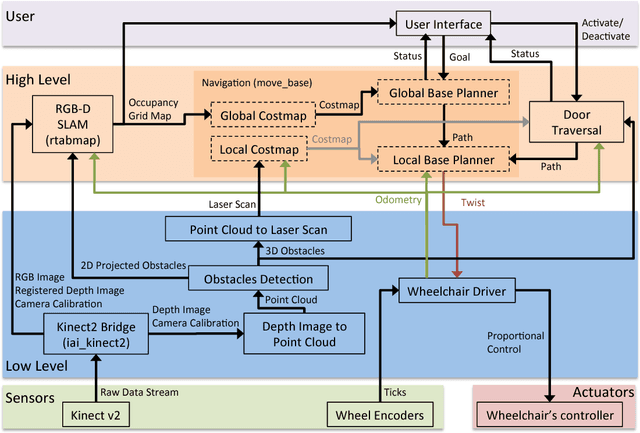

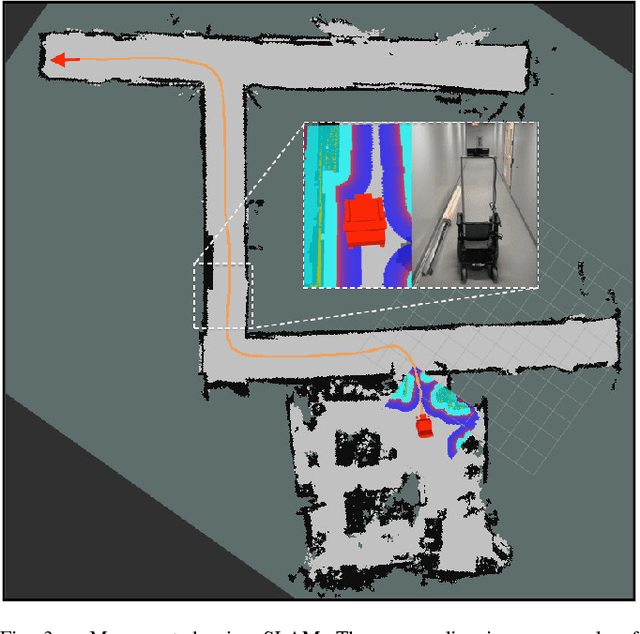

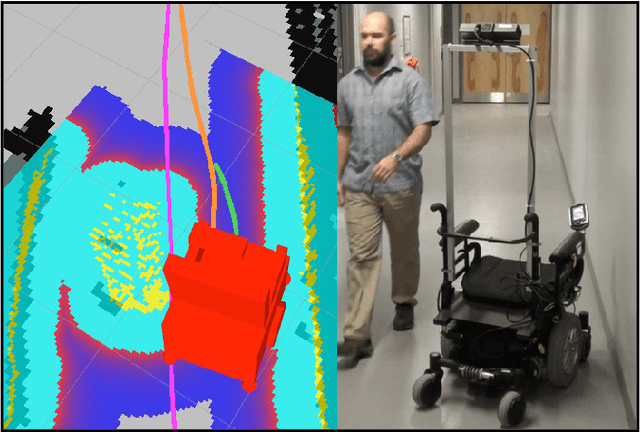

Abstract: To date, self-driving experimental wheelchair technologies have been either inexpensive or robust, but not both. Yet, in order to achieve real-world acceptance, both qualities are essential. We present a unique approach to achieve inexpensive and robust autonomous and semi-autonomous assistive navigation for existing fielded wheelchairs, of which there are approximately 5 million units in Canada and the United States alone. Our prototype wheelchair platform is capable of localization and mapping, as well as robust obstacle avoidance, using only a commodity RGB-D sensor and wheel odometry. As a specific example of the navigation capabilities, we focus on the single most common navigation problem: the traversal of narrow doorways in arbitrary environments. The software we have developed is generalizable to corridor following, desk docking, and other navigation tasks that are either extremely difficult or impossible for people with upper-body mobility impairments.
