Jonathan Vincent

Fast Cross-Correlation for TDoA Estimation on Small Aperture Microphone Arrays

Apr 28, 2022

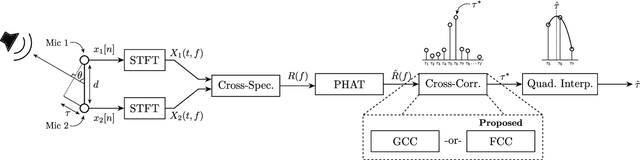

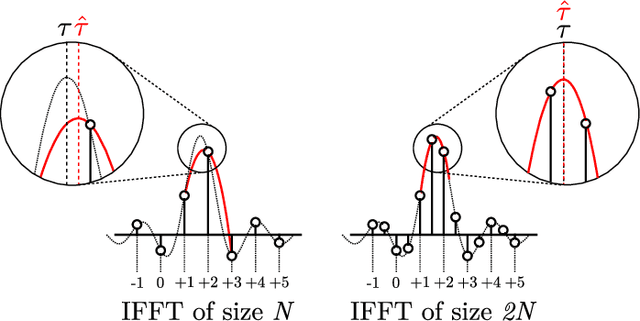

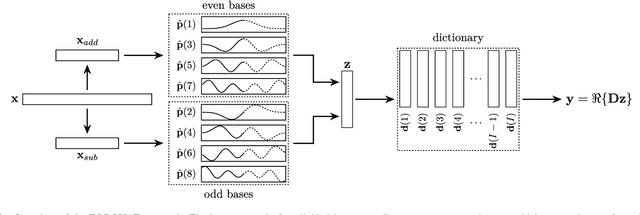

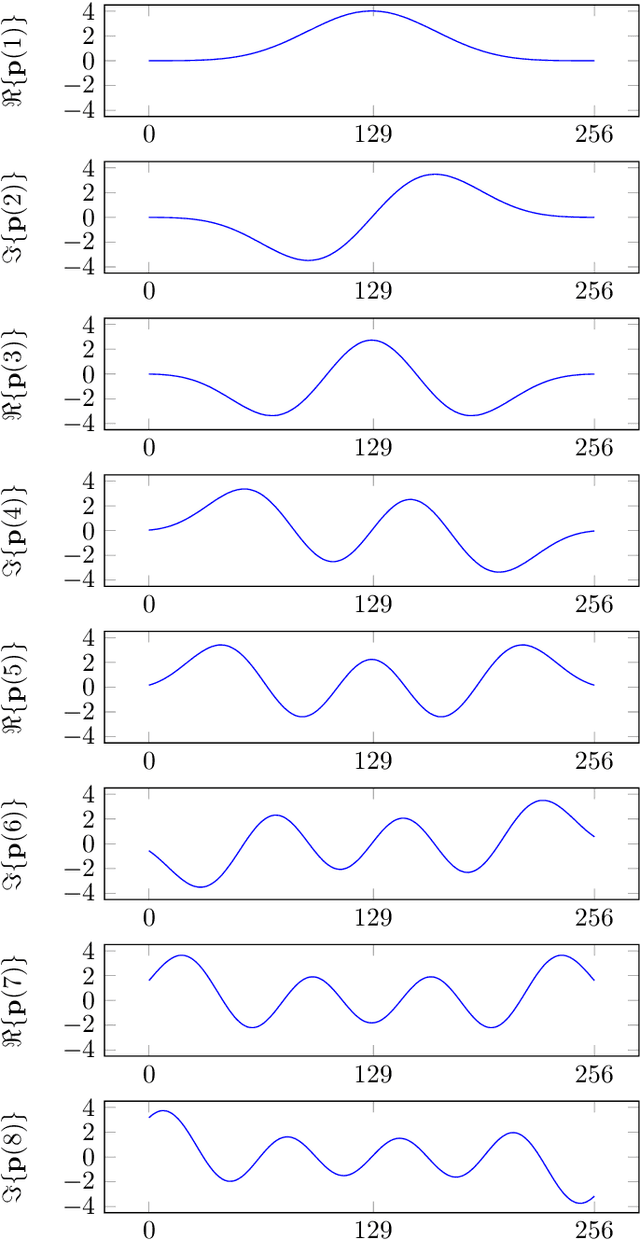

Abstract: This paper introduces the Fast Cross-Correlation (FCC) method for Time Difference of Arrival (TDoA) estimation for pairs of microphones on a small-aperture microphone array. FCC relies on low-rank decomposition and exploits symmetry in even and odd bases to speed up computation while preserving TDoA accuracy. FCC reduces the number of flops by a factor of 4.5 and the execution time by factors of 8.2, 2.6 and 2.7 on a Raspberry Pi Zero, a Raspberry Pi 4 and an Nvidia Jetson TX2, respectively, compared to the state-of-the-art Generalized Cross-Correlation (GCC) method that relies on the Fast Fourier Transform (FFT). This improvement can provide portable microphone arrays with extended battery life and allow real-time processing on low-cost hardware.
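For context, the FFT-based GCC baseline mentioned in the abstract can be sketched as follows. This is a minimal illustration of generic GCC with PHAT weighting, not the FCC method and not code from the paper; the function name `gcc_phat_tdoa`, its parameters, and the choice of PHAT weighting are assumptions made for the example.

```python
import numpy as np

def gcc_phat_tdoa(x1, x2, fs, max_tau=None):
    """Estimate the delay of x2 relative to x1, in seconds (illustrative sketch)."""
    # Zero-pad so the circular correlation behaves like a linear one.
    n = len(x1) + len(x2)
    X1 = np.fft.rfft(x1, n=n)
    X2 = np.fft.rfft(x2, n=n)

    # Cross-spectrum, normalised to unit magnitude (PHAT weighting).
    cross = X2 * np.conj(X1)
    cross /= np.abs(cross) + 1e-12

    # Back to the lag domain.
    cc = np.fft.irfft(cross, n=n)

    # On a small-aperture array only a few lags are physically possible,
    # so the search can be restricted to |lag| <= fs * max_tau.
    max_shift = n // 2
    if max_tau is not None:
        max_shift = max(1, min(int(fs * max_tau), max_shift))

    # Reorder so that index 0 corresponds to lag -max_shift.
    cc = np.concatenate((cc[-max_shift:], cc[:max_shift + 1]))
    lag = int(np.argmax(cc)) - max_shift
    return lag / fs
```

With a 10 cm microphone spacing and a 16 kHz sampling rate, `max_tau = 0.1 / 343.0` limits the search to a handful of lags, which is the regime the abstract targets.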

SMP-PHAT: Lightweight DoA Estimation by Merging Microphone Pairs

Mar 27, 2022

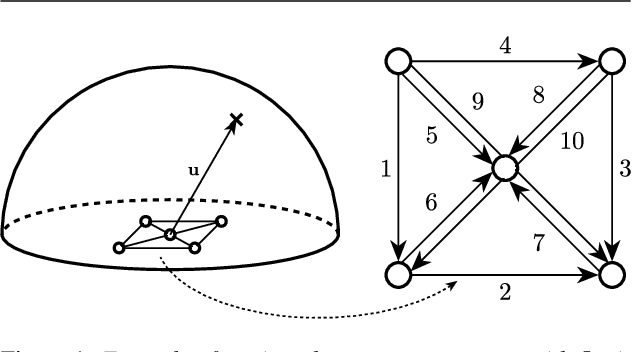

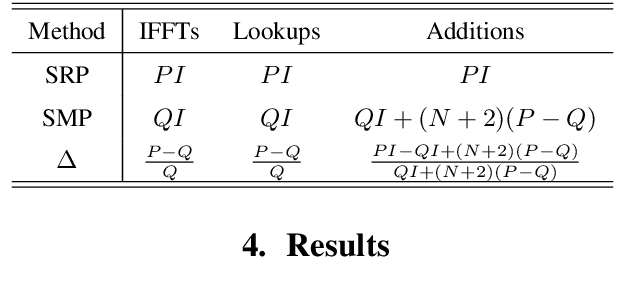

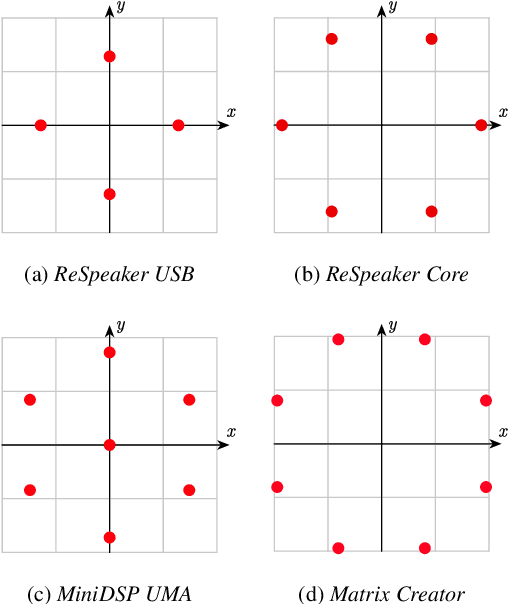

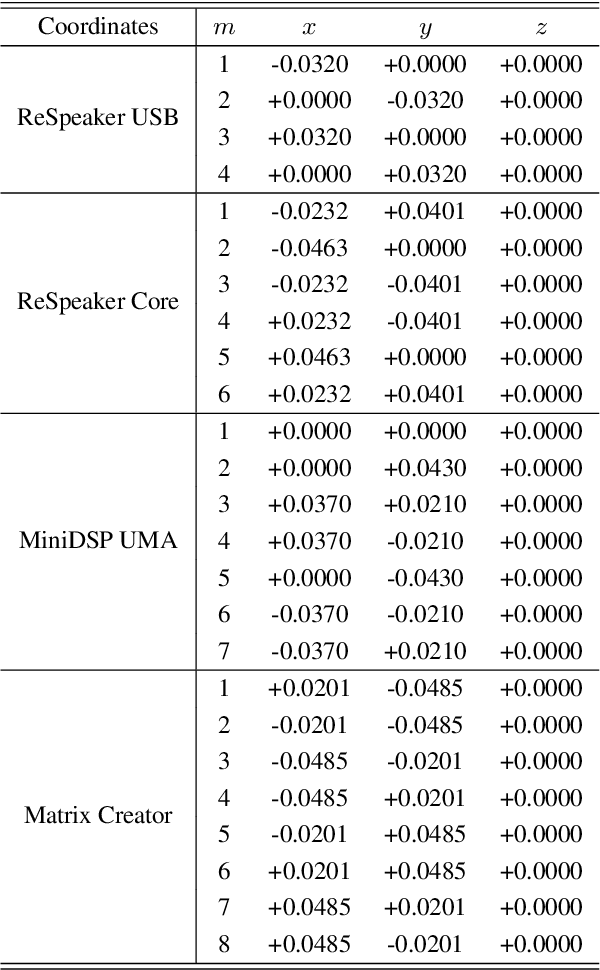

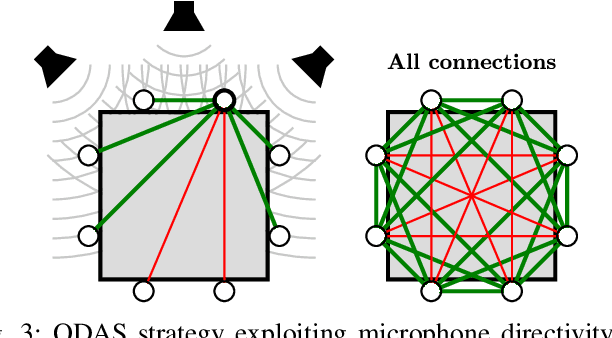

Abstract: This paper introduces SMP-PHAT, which estimates the direction of arrival (DoA) of sound with a microphone array by merging pairs of microphones that are parallel in space. This approach reduces the number of pairwise cross-correlation computations, and brings down the number of flops and memory lookups when searching for DoA. Experiments on low-cost hardware with commonly used microphone arrays show that the proposed method provides the same accuracy as the former SRP-PHAT approach, while reducing the computational load by 39% in some cases.
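To illustrate why parallel pairs can be merged, the sketch below groups microphone pairs that share the same displacement vector. Under a far-field assumption, such pairs see the same TDoA for any source direction, so their cross-correlations are candidates for merging. This is a hypothetical illustration and not the paper's procedure: the function name `group_parallel_pairs`, the equal-displacement criterion, and the rounding tolerance are all assumptions.

```python
import itertools
import numpy as np

def group_parallel_pairs(mics, decimals=6):
    """Group microphone pairs by displacement vector (illustrative sketch).

    mics: sequence of microphone positions in metres.
    Returns a dict mapping a displacement vector (as a tuple) to the list
    of (i, j) index pairs that share it.
    """
    tol = 10.0 ** -decimals
    groups = {}
    for i, j in itertools.combinations(range(len(mics)), 2):
        d = np.asarray(mics[j], dtype=float) - np.asarray(mics[i], dtype=float)
        pair = (i, j)

        # Pick a canonical sign so that (i, j) and (j, i) land in the same group:
        # the first non-zero component of the displacement is made positive.
        nonzero = np.flatnonzero(np.abs(d) > tol)
        if nonzero.size and d[nonzero[0]] < 0:
            d, pair = -d, (j, i)

        # Round to absorb floating-point noise; adding 0.0 normalises -0.0.
        key = tuple(float(v) for v in np.round(d, decimals) + 0.0)
        groups.setdefault(key, []).append(pair)
    return groups
```

For a square four-microphone array, the six pairs fall into four groups under this criterion: the two horizontal edges share one group, the two vertical edges another, and each diagonal stands alone.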

ODAS: Open embeddeD Audition System

Mar 05, 2021

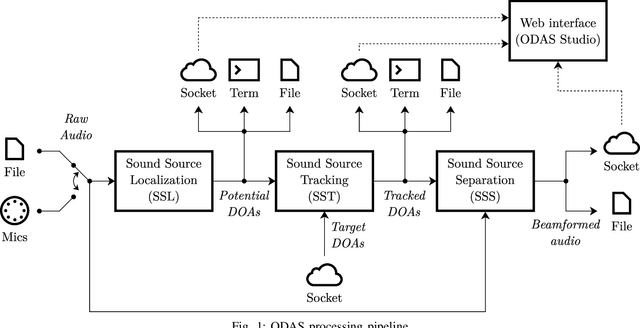

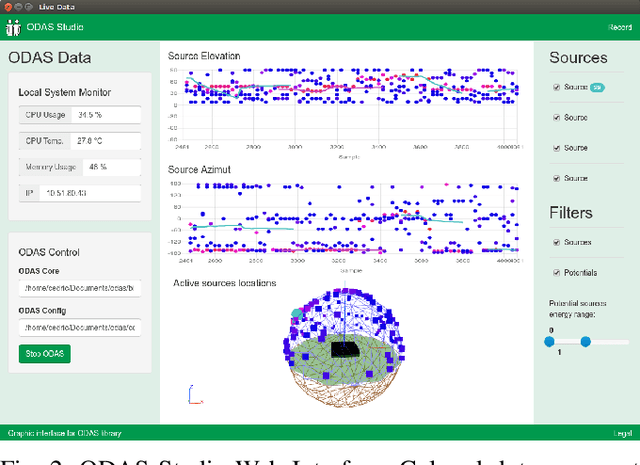

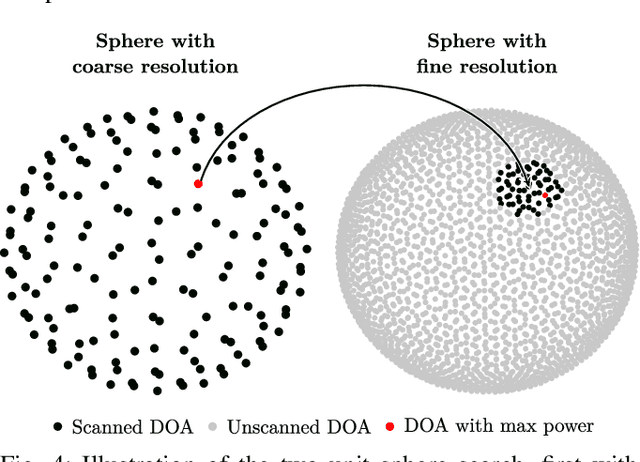

Abstract: Artificial audition aims at providing hearing capabilities to machines, computers and robots. Existing frameworks in robot audition offer interesting sound source localization, tracking and separation performance, but involve a significant amount of computation that limits their use on robots with embedded computing capabilities. This paper presents ODAS, the Open embeddeD Audition System framework, which includes strategies to reduce the computational load and perform robot audition tasks on low-cost embedded computing systems. It presents key features of ODAS, along with cases illustrating its uses in different robots and artificial audition applications.

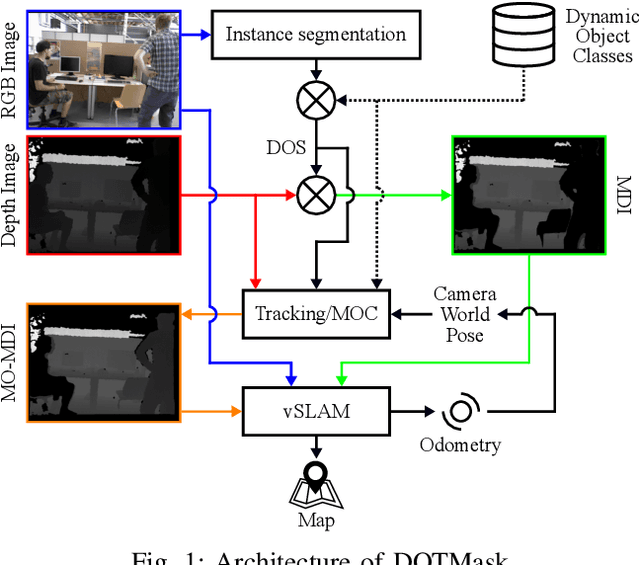

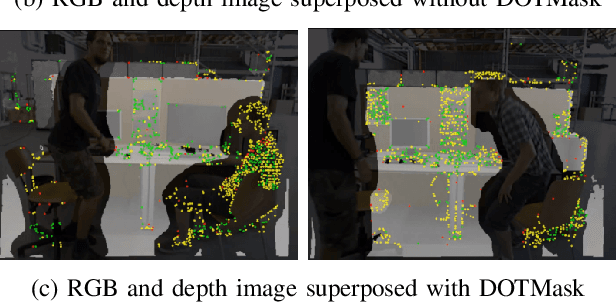

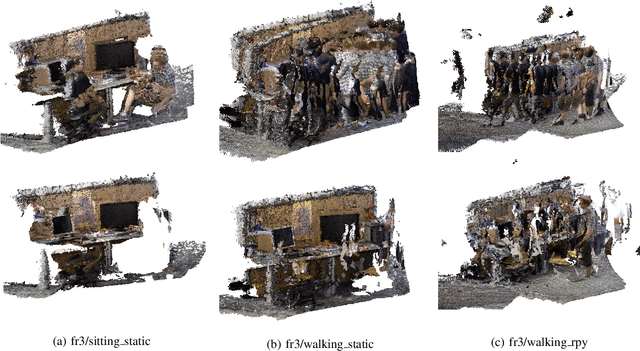

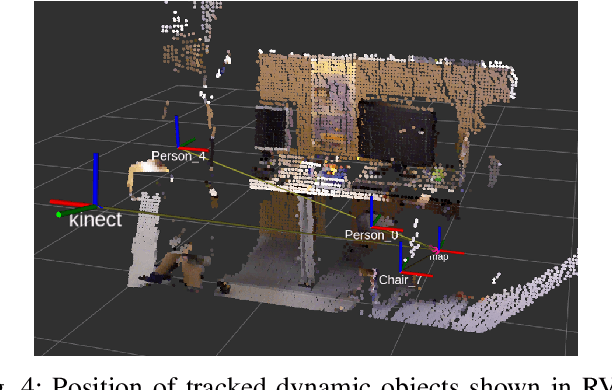

Dynamic Object Tracking and Masking for Visual SLAM

Jul 31, 2020

Abstract: In dynamic environments, the performance of visual SLAM techniques can be impaired by visual features taken from moving objects. One solution is to identify those objects so that their visual features can be removed for localization and mapping. This paper presents a simple and fast pipeline that uses deep neural networks, extended Kalman filters and visual SLAM to improve both localization and mapping in dynamic environments (around 14 fps on a GTX 1080). Results on the dynamic sequences from the TUM dataset using RTAB-Map as visual SLAM suggest that the approach achieves localization performance similar to other state-of-the-art methods, while also providing the position of the tracked dynamic objects, a 3D map free of those dynamic objects, and better loop closure detection, with the whole pipeline able to run on a robot moving at moderate speed.
