Marija Ivanovska

Second Competition on Presentation Attack Detection on ID Card

Jul 27, 2025

Abstract: This work summarises and reports the results of the second Presentation Attack Detection competition on ID cards. This new version includes new elements compared to the previous one: (1) an automatic evaluation platform was enabled for automatic benchmarking; (2) two tracks were proposed in order to evaluate algorithms and datasets, respectively; and (3) a new ID card dataset was shared with Track 1 teams to serve as the baseline dataset for training and optimisation. The Hochschule Darmstadt, Fraunhofer-IGD, and Facephi company jointly organised this challenge. Twenty teams registered, and 74 submitted models were evaluated. For Track 1, the "Dragons" team reached first place with an Average Ranking (AV-Rank) of 40.48% and an Equal Error Rate (EER) of 11.44%. For the more challenging approach in Track 2, the "Incode" team reached the best results with an AV-Rank of 14.76% and an EER of 6.36%, improving on the first edition's results of 74.30% and 21.87%, respectively. These results suggest that PAD on ID cards is improving, but it remains a challenging problem related to the number of images, especially of bona fide images.
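The EER quoted above is the operating point at which the attack presentation classification error rate (APCER) equals the bona fide presentation classification error rate (BPCER). The following is a minimal sketch, not the competition's evaluation code: it assumes higher scores mean "more likely an attack", uses made-up scores, and the helper names `error_rates` and `equal_error_rate` are hypothetical.

```python
def error_rates(attack_scores, bona_fide_scores, threshold):
    """Return (APCER, BPCER); a sample is classified as an attack when score >= threshold."""
    # APCER: share of attack presentations wrongly classified as bona fide
    apcer = sum(s < threshold for s in attack_scores) / len(attack_scores)
    # BPCER: share of bona fide presentations wrongly classified as attacks
    bpcer = sum(s >= threshold for s in bona_fide_scores) / len(bona_fide_scores)
    return apcer, bpcer


def equal_error_rate(attack_scores, bona_fide_scores):
    """Approximate the EER by trying every observed score as a threshold.

    Returns (eer, threshold), where eer is the mean of APCER and BPCER at the
    threshold where the two rates are closest.
    """
    thresholds = sorted(set(attack_scores) | set(bona_fide_scores))
    thresholds.append(float("inf"))  # also consider classifying everything as bona fide
    best_gap, best_eer, best_t = None, None, None
    for t in thresholds:
        apcer, bpcer = error_rates(attack_scores, bona_fide_scores, t)
        gap = abs(apcer - bpcer)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer, best_t = gap, (apcer + bpcer) / 2, t
    return best_eer, best_t


# Hypothetical detector scores (higher = more likely an attack).
attacks = [0.9, 0.8, 0.7, 0.3]
bona_fide = [0.1, 0.2, 0.4, 0.75]
eer, threshold = equal_error_rate(attacks, bona_fide)
print(f"EER = {eer:.2%} at threshold {threshold}")  # EER = 25.00% at threshold 0.7
```

When APCER and BPCER never cross exactly, averaging the closest pair is one common approximation; benchmark tooling may interpolate between thresholds instead.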

SelfMAD: Enhancing Generalization and Robustness in Morphing Attack Detection via Self-Supervised Learning

Apr 07, 2025

Abstract: With the continuous advancement of generative models, face morphing attacks have become a significant challenge for existing face verification systems due to their potential use in identity fraud and other malicious activities. Contemporary Morphing Attack Detection (MAD) approaches frequently rely on supervised, discriminative models trained on examples of bona fide and morphed images. These models typically perform well with morphs generated with techniques seen during training, but often lead to sub-optimal performance when subjected to novel unseen morphing techniques. While unsupervised models have been shown to perform better in terms of generalizability, they typically result in higher error rates, as they struggle to effectively capture features of subtle artifacts. To address these shortcomings, we present SelfMAD, a novel self-supervised approach that simulates general morphing attack artifacts, allowing classifiers to learn generic and robust decision boundaries without overfitting to the specific artifacts induced by particular face morphing methods. Through extensive experiments on widely used datasets, we demonstrate that SelfMAD significantly outperforms current state-of-the-art MADs, reducing the detection error by more than 64% in terms of EER when compared to the strongest unsupervised competitor, and by more than 66% when compared to the best-performing discriminative MAD model, tested in cross-morph settings. The source code for SelfMAD is available at https://github.com/LeonTodorov/SelfMAD.

MADation: Face Morphing Attack Detection with Foundation Models

Jan 08, 2025

Abstract: Despite the considerable performance improvements of face recognition algorithms in recent years, the same scientific advances responsible for this progress can also be used to create efficient ways to attack them, posing a threat to their secure deployment. Morphing attack detection (MAD) systems aim to detect a specific type of threat, morphing attacks, at an early stage, preventing them from being considered for verification in critical processes. Foundation models (FMs) learn from extensive amounts of unlabeled data, achieving remarkable zero-shot generalization to unseen domains. Although this generalization capacity might be weak when dealing with domain-specific downstream tasks such as MAD, FMs can easily adapt to these settings while retaining the built-in knowledge acquired during pre-training. In this work, we recognize the potential of FMs to perform well in the MAD task when properly adapted to its specificities. To this end, we adapt FM CLIP architectures with LoRA weights while simultaneously training a classification head. The proposed framework, MADation, surpasses our alternative FM and transformer-based frameworks and constitutes the first adaptation of FMs to the MAD task. MADation presents competitive results with current MAD solutions in the literature and even surpasses them in several evaluation scenarios. To encourage reproducibility and facilitate further research in MAD, we publicly release the implementation of MADation at https://github.com/gurayozgur/MADation.
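The abstract adapts CLIP with LoRA weights. As a generic illustration of LoRA itself (not MADation's implementation), a frozen weight W is augmented with a trainable low-rank product, so the adapted layer computes y = W x + (alpha / r) B (A x). The class name `LoRALinear`, the 2x3 weight, rank r = 1, and alpha = 2 below are hypothetical; plain Python lists stand in for tensors, and no training loop is shown.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Linear layer y = W x + (alpha / r) * B (A x), with W frozen and A, B trainable."""

    def __init__(self, weight, lora_a, lora_b, alpha):
        self.weight = weight  # frozen pre-trained weight, shape (d_out, d_in)
        self.lora_a = lora_a  # trainable down-projection, shape (r, d_in)
        self.lora_b = lora_b  # trainable up-projection, shape (d_out, r)
        self.scale = alpha / len(lora_a)  # alpha / r, where r = number of rows of A

    def forward(self, x):
        base = matvec(self.weight, x)  # frozen path
        delta = matvec(self.lora_b, matvec(self.lora_a, x))  # low-rank path
        return [b + self.scale * d for b, d in zip(base, delta)]


W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]  # hypothetical frozen 2x3 weight
A = [[1.0, 1.0, 1.0]]  # rank r = 1
x = [1.0, 2.0, 3.0]

# B starts at zero, so the adapted layer initially reproduces the frozen one.
print(LoRALinear(W, A, [[0.0], [0.0]], alpha=2.0).forward(x))  # [1.0, 2.0]
# After (hypothetical) training, only A and B have changed; W is untouched.
print(LoRALinear(W, A, [[0.5], [0.0]], alpha=2.0).forward(x))  # [7.0, 2.0]
```

Only r * (d_in + d_out) parameters are trained per adapted layer, which is why LoRA suits adapting a large foundation model to a narrow task while keeping its pre-trained weights intact.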

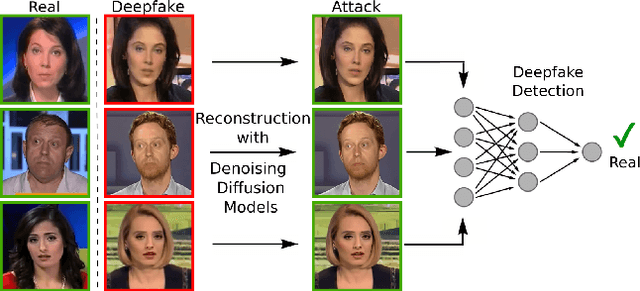

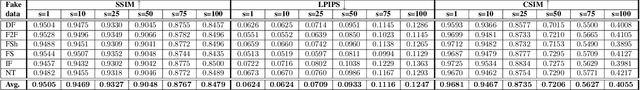

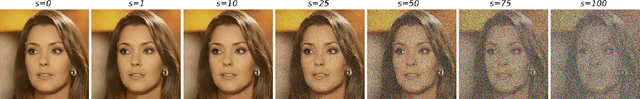

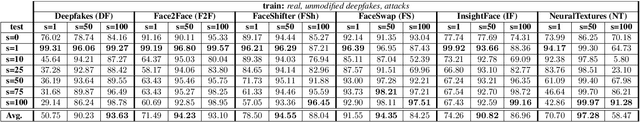

On the Vulnerability of DeepFake Detectors to Attacks Generated by Denoising Diffusion Models

Jul 11, 2023

Abstract: The detection of malicious Deepfakes is a constantly evolving problem that requires continuous monitoring of detectors to ensure they are able to detect image manipulations generated by the latest emerging models. In this paper, we present a preliminary study that investigates the vulnerability of single-image Deepfake detectors to attacks created by a representative of the newest generation of generative methods, i.e., Denoising Diffusion Models (DDMs). Our experiments are run on FaceForensics++, a commonly used benchmark dataset consisting of Deepfakes generated with various techniques for face swapping and face reenactment. The analysis shows that reconstructing existing Deepfakes with only one denoising diffusion step significantly decreases the accuracy of all tested detectors, without introducing visually perceptible image changes.
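The attack above reconstructs an existing Deepfake with a single denoising diffusion step. A minimal sketch of the standard DDPM relations such a step relies on, under stated assumptions: the noise level `alpha_bar` is hypothetical, the abstract does not specify the paper's schedule, and `predict_noise` is a placeholder for a trained noise-prediction network, which this sketch does not include. The image is noised once with q(x_t | x_0), then x0_hat is recovered from the predicted noise.

```python
import math
import random


def add_noise(x0, alpha_bar, rng):
    """Sample x_t from q(x_t | x_0) = N(sqrt(alpha_bar) * x_0, (1 - alpha_bar) * I)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * e
          for p, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, eps_hat, alpha_bar):
    """Single denoising step: x0_hat = (x_t - sqrt(1 - alpha_bar) * eps_hat) / sqrt(alpha_bar)."""
    return [(v - math.sqrt(1.0 - alpha_bar) * e) / math.sqrt(alpha_bar)
            for v, e in zip(xt, eps_hat)]


def one_step_reconstruction(x0, alpha_bar, predict_noise, seed=0):
    """Noise a (flattened) image once, then reconstruct it with one denoising step."""
    rng = random.Random(seed)
    xt, _ = add_noise(x0, alpha_bar, rng)
    eps_hat = predict_noise(xt)  # hypothetical stand-in for a trained DDM's noise predictor
    return estimate_x0(xt, eps_hat, alpha_bar)


# Usage with a placeholder predictor that always outputs zero noise (not a real model).
pixels = [0.2, 0.5, 0.9]
recon = one_step_reconstruction(pixels, alpha_bar=0.9999, predict_noise=lambda xt: [0.0] * len(xt))
print(max(abs(a - b) for a, b in zip(recon, pixels)))
```

With `alpha_bar` close to 1 the reconstruction stays within a small perturbation of the input; a perfect noise predictor would recover x_0 exactly. Any effect on detectors comes from the trained model's imperfect prediction, which the placeholder here does not reproduce.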

TomatoDIFF: On-plant Tomato Segmentation with Denoising Diffusion Models

Jul 03, 2023

Abstract: Artificial intelligence applications enable farmers to optimize crop growth and production while reducing costs and environmental impact. Computer vision-based algorithms, in particular, are commonly used for fruit segmentation, enabling in-depth analysis of the harvest quality and accurate yield estimation. In this paper, we propose TomatoDIFF, a novel diffusion-based model for semantic segmentation of on-plant tomatoes. When evaluated against other competitive methods, our model demonstrates state-of-the-art (SOTA) performance, even in challenging environments with highly occluded fruits. Additionally, we introduce Tomatopia, a new, large and challenging dataset of greenhouse tomatoes. The dataset comprises high-resolution RGB-D images and pixel-level annotations of the fruits.

Face Morphing Attack Detection with Denoising Diffusion Probabilistic Models

Jun 27, 2023

Abstract: Morphed face images have recently become a growing concern for existing face verification systems, as they are relatively easy to generate and can be used to impersonate someone's identity for various malicious purposes. Efficient Morphing Attack Detection (MAD) that generalizes well across different morphing techniques is, therefore, of paramount importance. Existing MAD techniques predominantly rely on discriminative models that learn from examples of bona fide and morphed images and, as a result, often exhibit sub-optimal generalization performance when confronted with unknown types of morphing attacks. To address this problem, in this paper we propose a novel diffusion-based MAD method that learns only from the characteristics of bona fide images. Our model then detects various forms of morphing attacks as out-of-distribution samples. We perform rigorous experiments over four different datasets (CASIA-WebFace, FRLL-Morphs, FERET-Morphs and FRGC-Morphs) and compare the proposed solution to both discriminatively-trained and one-class MAD models. The experimental results show that our MAD model achieves highly competitive results on all considered datasets.

* Published at IWBF 2023
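The method above learns only from bona fide images and flags morphs as out-of-distribution samples. The decision step shared by such one-class detectors can be sketched generically: calibrate a threshold on anomaly scores of bona fide images alone, then flag anything above it. This is an assumed calibration rule, not the paper's; the scores are hypothetical (in the paper they would come from the diffusion model), and `fit_threshold` and `is_morph` are illustrative names.

```python
def fit_threshold(bona_fide_scores, target_bpcer=0.05):
    """Choose a decision threshold from bona fide scores alone.

    Assumes higher scores mean 'less like the bona fide training data' and
    distinct scores; about target_bpcer of the training scores lie above it.
    """
    ranked = sorted(bona_fide_scores)
    n_above = int(target_bpcer * len(ranked))  # bona fide samples allowed above the threshold
    return ranked[max(0, len(ranked) - 1 - n_above)]


def is_morph(score, threshold):
    """Flag a sample as out-of-distribution (a suspected morph) when its score exceeds the threshold."""
    return score > threshold


bona_fide_train = list(range(1, 21))  # hypothetical anomaly scores of 20 bona fide images
threshold = fit_threshold(bona_fide_train, target_bpcer=0.05)
print(threshold)  # 19
print([is_morph(s, threshold) for s in (12, 19, 27)])  # [False, False, True]
```

Because no morphs are used for calibration, the threshold controls only the bona fide error rate directly; detection of unseen morphing techniques depends entirely on how far their scores fall from the bona fide distribution.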

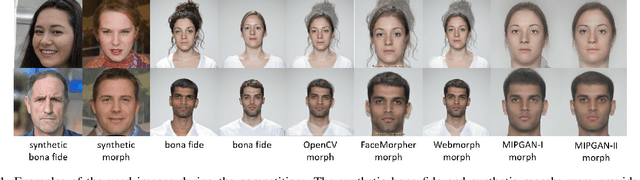

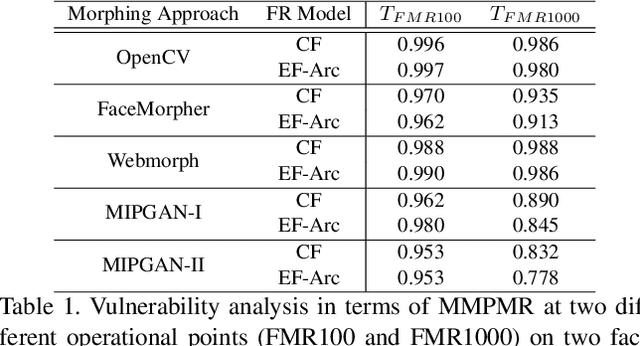

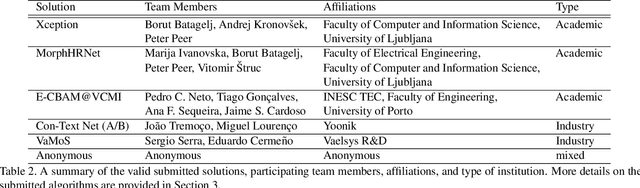

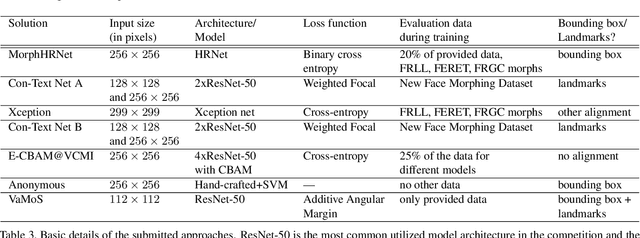

SYN-MAD 2022: Competition on Face Morphing Attack Detection Based on Privacy-aware Synthetic Training Data

Aug 15, 2022

Abstract: This paper presents a summary of the Competition on Face Morphing Attack Detection Based on Privacy-aware Synthetic Training Data (SYN-MAD) held at the 2022 International Joint Conference on Biometrics (IJCB 2022). The competition attracted a total of 12 participating teams from both academia and industry, based in 11 different countries. In the end, the participating teams submitted seven valid solutions, which the organizers evaluated. The competition was held to present and attract solutions that detect face morphing attacks while protecting people's privacy for ethical and legal reasons. To ensure this, the training data was limited to synthetic data provided by the organizers. The submitted solutions presented innovations that outperformed the considered baseline in many experimental settings. The evaluation benchmark is now available at: https://github.com/marcohuber/SYN-MAD-2022.

Face Morphing Attack Detection Using Privacy-Aware Training Data

Jul 02, 2022

Abstract: Images of morphed faces pose a serious threat to face recognition-based security systems, as they can be used to illegally verify the identity of multiple people with a single morphed image. Modern detection algorithms learn to identify such morphing attacks using authentic images of real individuals. This approach raises various privacy concerns and limits the amount of publicly available training data. In this paper, we explore the efficacy of detection algorithms that are trained only on faces of non-existing people and their respective morphs. To this end, two dedicated algorithms are trained with synthetic data and then evaluated on three real-world datasets, i.e., FRLL-Morphs, FERET-Morphs and FRGC-Morphs. Our results show that synthetic facial images can be successfully employed to train detection algorithms that generalize well to real-world scenarios.

Y-GAN: Learning Dual Data Representations for Efficient Anomaly Detection

Sep 28, 2021

Abstract: We propose a novel reconstruction-based model for anomaly detection, called Y-GAN. The model consists of a Y-shaped auto-encoder and represents images in two separate latent spaces. The first captures meaningful image semantics, key for representing (normal) training data, whereas the second encodes low-level residual image characteristics. To ensure the dual representations encode mutually exclusive information, a disentanglement procedure is designed around a latent (proxy) classifier. Additionally, a novel consistency loss is proposed to prevent information leakage between the latent spaces. The model is trained in a one-class learning setting using normal training data only. Due to the separation of semantically relevant and residual information, Y-GAN is able to derive informative data representations that allow for efficient anomaly detection across a diverse set of anomaly detection tasks. The model is evaluated in comprehensive experiments against several recent anomaly detection models using four popular datasets, i.e., MNIST, FMNIST, CIFAR10, and PlantVillage.
