On the Vulnerability of DeepFake Detectors to Attacks Generated by Denoising Diffusion Models

Paper and Code

Jul 11, 2023

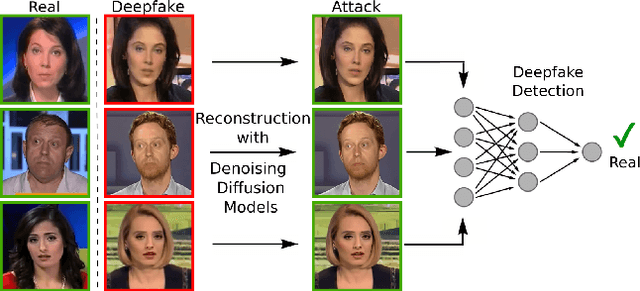

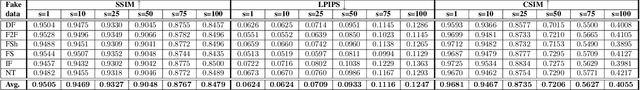

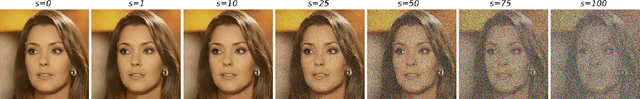

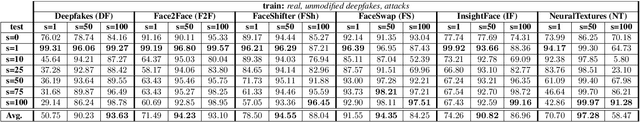

The detection of malicious Deepfakes is a constantly evolving problem that requires continuous monitoring of detectors to ensure they can detect image manipulations generated by the latest emerging models. In this paper, we present a preliminary study that investigates the vulnerability of single-image Deepfake detectors to attacks created by a representative of the newest generation of generative methods, i.e., Denoising Diffusion Models (DDMs). Our experiments are run on FaceForensics++, a commonly used benchmark dataset consisting of Deepfakes generated with various techniques for face swapping and face reenactment. The analysis shows that reconstructing existing Deepfakes with only one denoising diffusion step significantly decreases the accuracy of all tested detectors, without introducing visually perceptible image changes.
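The one-step reconstruction mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the noise schedule, the timestep, or the network used, so everything here is an assumption: a standard DDPM-style linear beta schedule, a small timestep `t`, and a hypothetical `predict_noise` callable standing in for a trained diffusion model. The image is noised to step `t` and then mapped back to a clean estimate in a single denoising step.

```python
import numpy as np


def linear_alpha_bar(num_steps=1000, beta_start=1e-4, beta_end=2e-2):
    """Cumulative signal-retention terms for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def one_step_reconstruct(x0, t, alpha_bar, predict_noise, eps):
    """Noise x0 to step t, then estimate the clean image in one denoising step.

    x0            : image array scaled to [-1, 1]
    predict_noise : hypothetical stand-in for a trained noise-prediction network,
                    called as predict_noise(x_t, t)
    eps           : Gaussian noise with the same shape as x0
    """
    a = alpha_bar[t]
    # Forward diffusion: x_t = sqrt(a) * x0 + sqrt(1 - a) * eps
    x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps
    # Single reverse step: invert the forward equation using the predicted noise
    eps_hat = predict_noise(x_t, t)
    x0_hat = (x_t - np.sqrt(1.0 - a) * eps_hat) / np.sqrt(a)
    return np.clip(x0_hat, -1.0, 1.0)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha_bar = linear_alpha_bar()
    image = rng.uniform(-1.0, 1.0, size=(3, 64, 64))  # placeholder for a Deepfake frame
    noise = rng.standard_normal(image.shape)

    # An oracle predictor that returns the true noise; a real model only approximates it.
    oracle = lambda x_t, t: noise
    recon = one_step_reconstruct(image, 10, alpha_bar, oracle, noise)
    print("max abs difference:", float(np.abs(recon - image).max()))
```

With the oracle predictor the reconstruction matches the input up to floating-point error. A trained network's prediction is only approximate, so the output would differ slightly from the input; the abstract reports that changes of this kind are not visually perceptible yet reduce detector accuracy.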
