Deepak Kumar Jain

Dalian University of Technology, China

SelfMAD: Enhancing Generalization and Robustness in Morphing Attack Detection via Self-Supervised Learning

Apr 07, 2025

Abstract:With the continuous advancement of generative models, face morphing attacks have become a significant challenge for existing face verification systems due to their potential use in identity fraud and other malicious activities. Contemporary Morphing Attack Detection (MAD) approaches frequently rely on supervised, discriminative models trained on examples of bona fide and morphed images. These models typically perform well with morphs generated with techniques seen during training, but often lead to sub-optimal performance when subjected to novel unseen morphing techniques. While unsupervised models have been shown to perform better in terms of generalizability, they typically result in higher error rates, as they struggle to effectively capture features of subtle artifacts. To address these shortcomings, we present SelfMAD, a novel self-supervised approach that simulates general morphing attack artifacts, allowing classifiers to learn generic and robust decision boundaries without overfitting to the specific artifacts induced by particular face morphing methods. Through extensive experiments on widely used datasets, we demonstrate that SelfMAD significantly outperforms current state-of-the-art MADs, reducing the detection error by more than 64% in terms of EER when compared to the strongest unsupervised competitor, and by more than 66%, when compared to the best performing discriminative MAD model, tested in cross-morph settings. The source code for SelfMAD is available at https://github.com/LeonTodorov/SelfMAD.

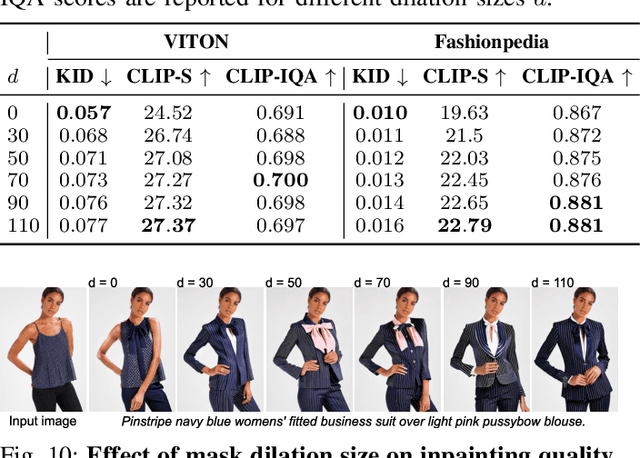

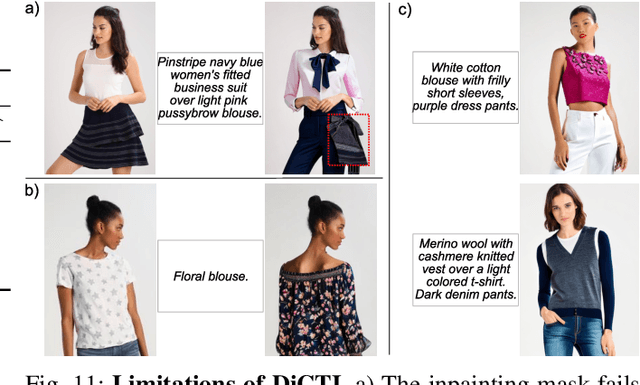

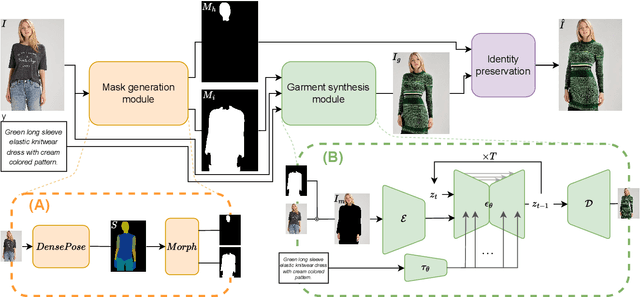

DiCTI: Diffusion-based Clothing Designer via Text-guided Input

Jul 04, 2024

Abstract:Recent developments in deep generative models have opened up a wide range of opportunities for image synthesis, leading to significant changes in various creative fields, including the fashion industry. While numerous methods have been proposed to benefit buyers, particularly in virtual try-on applications, there has been relatively less focus on facilitating fast prototyping for designers and customers seeking to order new designs. To address this gap, we introduce DiCTI (Diffusion-based Clothing Designer via Text-guided Input), a straightforward yet highly effective approach that allows designers to quickly visualize fashion-related ideas using text inputs only. Given an image of a person and a description of the desired garments as input, DiCTI automatically generates multiple high-resolution, photorealistic images that capture the expressed semantics. By leveraging a powerful diffusion-based inpainting model conditioned on text inputs, DiCTI is able to synthesize convincing, high-quality images with varied clothing designs that viably follow the provided text descriptions, while being able to process very diverse and challenging inputs, captured in completely unconstrained settings. We evaluate DiCTI in comprehensive experiments on two different datasets (VITON-HD and Fashionpedia) and in comparison to the state-of-the-art (SoTA). The results of our experiments show that DiCTI convincingly outperforms the SoTA competitor in generating higher quality images with more elaborate garments and superior text prompt adherence, both according to standard quantitative evaluation measures and human ratings, generated as part of a user study.

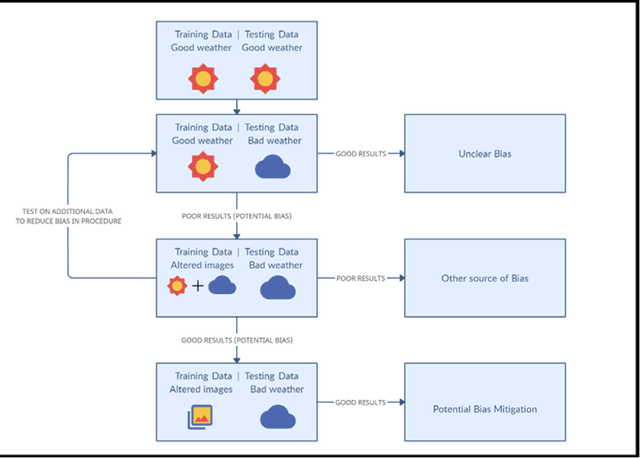

In Rain or Shine: Understanding and Overcoming Dataset Bias for Improving Robustness Against Weather Corruptions for Autonomous Vehicles

Apr 03, 2022

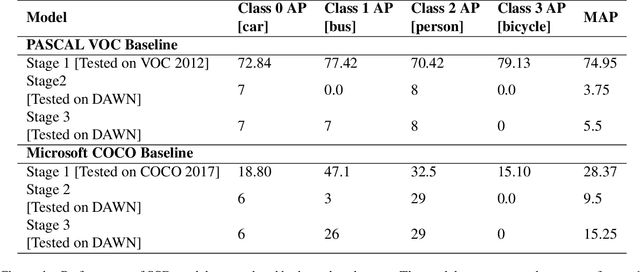

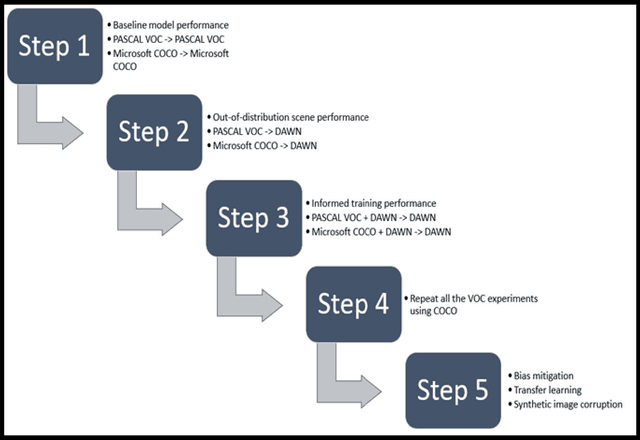

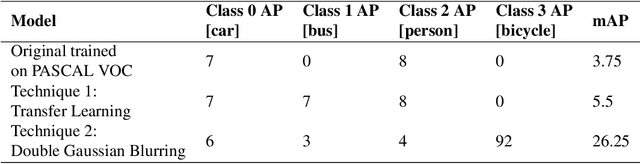

Abstract:Several popular computer vision (CV) datasets, specifically those employed for Object Detection (OD) in autonomous driving tasks, exhibit biases due to a range of factors including weather and lighting conditions. These biases may impair a model's generalizability, rendering it ineffective for OD in novel and unseen datasets. In autonomous driving especially, this may prove extremely risky and unsafe for the vehicle and its surroundings. This work focuses on understanding these datasets better by identifying such "good-weather" bias. Methods to mitigate such bias, which allow OD models to perform better and improve their robustness, are also demonstrated. A simple yet effective OD framework for studying bias mitigation is proposed. Using this framework, the performance on popular datasets is analyzed and a significant difference in model performance is observed. Additionally, a knowledge transfer technique and a synthetic image corruption technique are proposed to mitigate the identified bias. Finally, using the DAWN dataset, the findings are validated on the OD task, demonstrating the effectiveness of our techniques in mitigating real-world "good-weather" bias. The experiments show that the proposed techniques outperform baseline methods by a fourfold improvement on average.
