Lizhao You

User Centric Semantic Communications

Nov 05, 2024

Abstract:Current studies on semantic communications mainly focus on efficiently extracting semantic information to reduce bandwidth usage between a transmitter and a user. Although significant progress has been made in semantic communications, a fundamental design problem is that the semantic information is extracted based on certain criteria at the transmitter side alone, without considering the user's actual requirements. As a result, critical information that is of primary concern to the user may be lost. In such cases, the semantic transmission becomes meaningless to the user, as all received information is irrelevant to the user's interests. To solve this problem, this paper presents a user centric semantic communication system, where the user sends its request for the desired semantic information to the transmitter at the start of each transmission. Then, the transmitter extracts the required semantic information accordingly. A key challenge is how the transmitter can understand the user's requests for semantic information and extract the required semantic information in a reasonable and robust manner. We address this challenge by designing a well-structured framework and leveraging off-the-shelf products, such as GPT-4, along with several specialized tools for detection and estimation. Evaluation results demonstrate the feasibility and effectiveness of the proposed user centric semantic communication system.

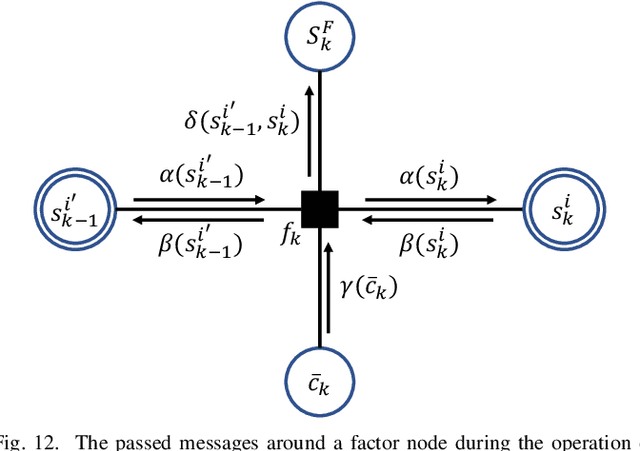

Rethinking Grant-Free Protocol in mMTC

Apr 24, 2024

Abstract:This paper revisits the identity detection problem under the current grant-free protocol in massive machine-type communications (mMTC) by asking the following question: for stable identity detection performance, is it enough to permit active devices to transmit preambles without any handshaking with the base station (BS)? Specifically, in the current grant-free protocol, the BS blindly allocates a fixed-length preamble to devices for identity detection, as it lacks prior information on the number of active devices $K$. However, in practice, $K$ varies dynamically over time, resulting in degraded identity detection performance, especially when $K$ is large. Consequently, the current grant-free protocol fails to ensure stable identity detection performance. To address this issue, we propose a two-stage communication protocol which consists of estimation of $K$ in Phase I and detection of the identities of active devices in Phase II. The preamble length for identity detection in Phase II is dynamically allocated via table lookup based on the $K$ estimated in Phase I, so that the identity detection performance always exceeds a predefined threshold. In addition, we design an algorithm for estimating $K$ in Phase I, and exploit the estimated $K$ to reduce the computational complexity of the identity detector in Phase II. Numerical results demonstrate the effectiveness of the proposed two-stage communication protocol and algorithms.
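The table-lookup step of Phase II can be sketched roughly as follows. This is a minimal illustration only: the function name `preamble_length_for`, the table boundaries, and the preamble lengths are hypothetical placeholders, not values from the paper, and the estimate of $K$ is assumed to be supplied by a separate Phase I estimator.

```python
import bisect

# Hypothetical lookup table (illustrative values, not from the paper):
# each entry pairs an upper bound on the estimated number of active
# devices K with the preamble length assumed to meet the target
# detection performance for any K up to that bound.
K_UPPER_BOUNDS = [10, 50, 100, 200]
PREAMBLE_LENGTHS = [32, 64, 128, 256]


def preamble_length_for(k_est: int) -> int:
    """Return the preamble length for an estimated device count k_est."""
    if k_est < 0:
        raise ValueError("estimated K must be non-negative")
    # Find the first table row whose upper bound is >= k_est.
    idx = bisect.bisect_left(K_UPPER_BOUNDS, k_est)
    if idx == len(K_UPPER_BOUNDS):
        raise ValueError("estimated K exceeds the range covered by the table")
    return PREAMBLE_LENGTHS[idx]


if __name__ == "__main__":
    for k in (3, 10, 11, 150):
        print(k, preamble_length_for(k))
```

In such a design the table would be built offline, for example from simulations of detection performance versus preamble length for each range of $K$, so that the online cost in Phase II is a single lookup.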

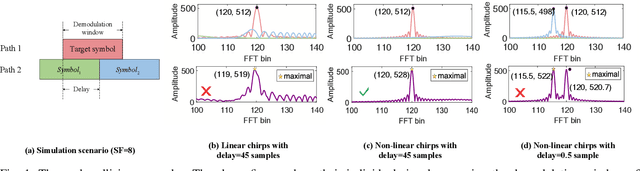

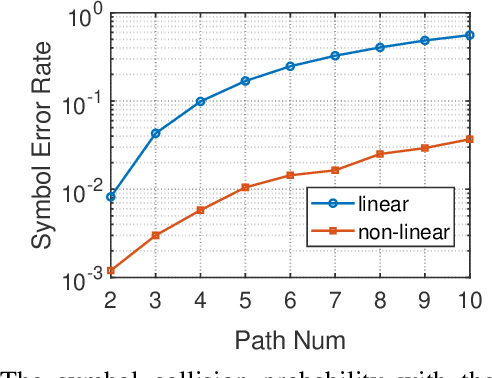

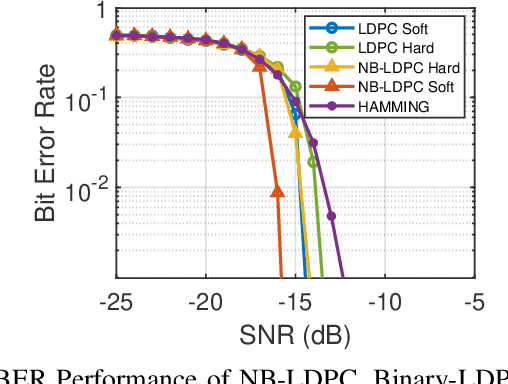

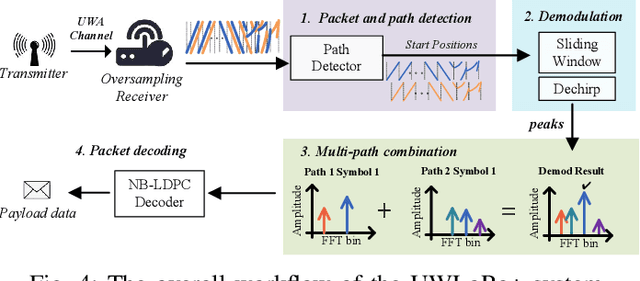

Combating Multi-path Interference to Improve Chirp-based Underwater Acoustic Communication

Nov 29, 2023

Abstract:Linear chirp-based underwater acoustic communication has been widely used due to its reliability and long-range transmission capability. However, unlike the counterpart chirp technology in wireless -- LoRa, its throughput is severely limited by the number of modulated chirps in a symbol. The fundamental challenge lies in the underwater multi-path channel, where the delayed copies of one symbol may cause inter-symbol and intra-symbol interference. In this paper, we present UWLoRa+, a system that realizes the same chirp modulation as LoRa with a higher data rate, and enhances LoRa's design to address the multi-path challenge via the following designs: a) we replace the linear chirp used by LoRa with a non-linear chirp to reduce the signal interference range and the collision probability; b) we design an algorithm that first demodulates each path and then combines the demodulation results of the detected paths; and c) we replace the Hamming codes used by LoRa with non-binary LDPC codes to mitigate the impact of the inevitable collisions. Experiment results show that the new designs improve the bit error rate (BER) by 3x, and the packet error rate (PER) significantly, compared with LoRa's naive design. Compared with a state-of-the-art system for decoding underwater LoRa chirp signals, UWLoRa+ improves the throughput by up to 50 times.
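For readers unfamiliar with the baseline, the following is a minimal sketch of standard LoRa-style linear chirp modulation and dechirp-based demodulation, not of UWLoRa+ itself (which uses a non-linear chirp and per-path combining). It assumes one sample per chip, an even number of samples per symbol, and an ideal single-path, noise-free channel; all function names are illustrative.

```python
import cmath


def upchirp(n_samples: int) -> list:
    """Base linear up-chirp, one sample per chip: exp(j*pi*n^2/N).

    N must be even so that the sequence is periodic with period N.
    """
    if n_samples % 2 != 0:
        raise ValueError("n_samples must be even")
    return [cmath.exp(1j * cmath.pi * n * n / n_samples)
            for n in range(n_samples)]


def modulate(symbol: int, n_samples: int) -> list:
    """Encode a symbol as a cyclic shift of the base up-chirp."""
    base = upchirp(n_samples)
    return [base[(n + symbol) % n_samples] for n in range(n_samples)]


def demodulate(samples: list) -> int:
    """Dechirp with the conjugate base chirp, then pick the DFT peak."""
    n_samples = len(samples)
    base = upchirp(n_samples)
    # After dechirping, a shift of s becomes a pure tone at DFT bin s.
    dechirped = [x * b.conjugate() for x, b in zip(samples, base)]
    best_bin, best_mag = 0, -1.0
    for k in range(n_samples):
        acc = sum(d * cmath.exp(-2j * cmath.pi * k * n / n_samples)
                  for n, d in enumerate(dechirped))
        if abs(acc) > best_mag:
            best_bin, best_mag = k, abs(acc)
    return best_bin


if __name__ == "__main__":
    N = 16  # samples per symbol, i.e. 4 bits per chirp
    print([demodulate(modulate(s, N)) for s in range(N)])
```

In a multi-path channel, delayed copies of a symbol appear as additional peaks after dechirping, which is the interference the abstract refers to.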

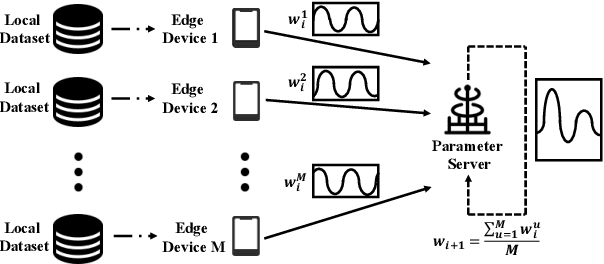

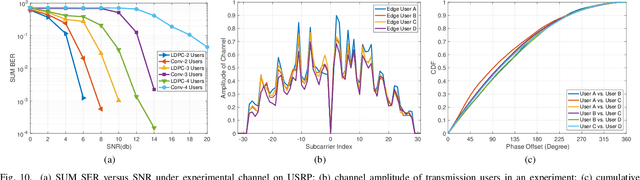

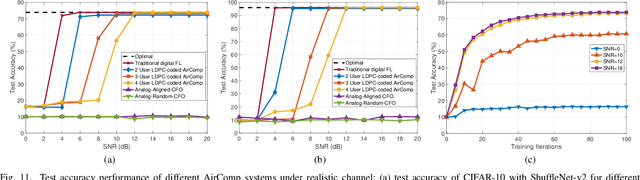

Broadband Digital Over-the-Air Computation for Wireless Federated Edge Learning

Dec 13, 2022

Abstract:This paper presents the first orthogonal frequency-division multiplexing (OFDM)-based digital over-the-air computation (AirComp) system for wireless federated edge learning, where multiple edge devices transmit model data simultaneously using non-orthogonal wireless resources, and the edge server aggregates data directly from the superimposed signal. Existing analog AirComp systems often assume perfect phase alignment via channel precoding and utilize uncoded analog transmission for model aggregation. In contrast, our digital AirComp system leverages digital modulation and channel codes to overcome phase asynchrony, thereby achieving accurate model aggregation for phase-asynchronous multi-user OFDM systems. To realize a digital AirComp system, we develop a medium access control (MAC) protocol that allows simultaneous transmissions from different users using non-orthogonal OFDM subcarriers, and put forth joint channel decoding and aggregation decoders tailored for convolutional and LDPC codes. To verify the proposed system design, we build a digital AirComp prototype on the USRP software-defined radio platform, and demonstrate a real-time LDPC-coded AirComp system with up to four users. Trace-driven simulation results on test accuracy versus SNR show that: 1) analog AirComp is sensitive to phase asynchrony in practical multi-user OFDM systems, and the test accuracy performance fails to improve even at high SNRs; 2) our digital AirComp system outperforms two analog AirComp systems at all SNRs, and approaches the optimal performance when SNR $\geq$ 6 dB for two-user LDPC-coded AirComp, demonstrating the advantage of digital AirComp in phase-asynchronous multi-user OFDM systems.

Quick and Reliable LoRa Physical-layer Data Aggregation through Multi-Packet Reception

Dec 13, 2022

Abstract:This paper presents a Long Range (LoRa) physical-layer data aggregation system (LoRaPDA) that aggregates data (e.g., sum, average, min, max) directly in the physical layer. In particular, after coordinating a few nodes to transmit their data simultaneously, the gateway leverages a new multi-packet reception (MPR) approach to compute aggregate data from the phase-asynchronous superimposed signal. Different from the analog approach, which requires additional power synchronization and phase synchronization, our MPR-based digital approach is compatible with commercial LoRa nodes and is more reliable. Different from traditional MPR approaches that are designed for the collision decoding scenario, our new MPR approach allows simultaneous transmissions with small packet arrival time offsets, and addresses a new co-located peak problem through the following components: 1) an improved channel and offset estimation algorithm that enables accurate phase tracking in each symbol, 2) a new symbol demodulation algorithm that finds the maximum likelihood sequence of nodes' data, and 3) a soft-decision packet decoding algorithm that utilizes the likelihoods of several sequences to improve decoding performance. Trace-driven simulation results show that the symbol demodulation algorithm outperforms the state-of-the-art MPR decoder by 5.3$\times$ in terms of physical-layer throughput, and the soft decoder is more robust to unavoidable adverse phase misalignment and estimation error in practice. Moreover, LoRaPDA outperforms the state-of-the-art MPR scheme by at least 2.1$\times$ for all SNRs in terms of network throughput, demonstrating quick and reliable data aggregation.

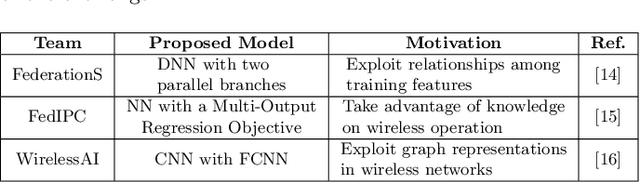

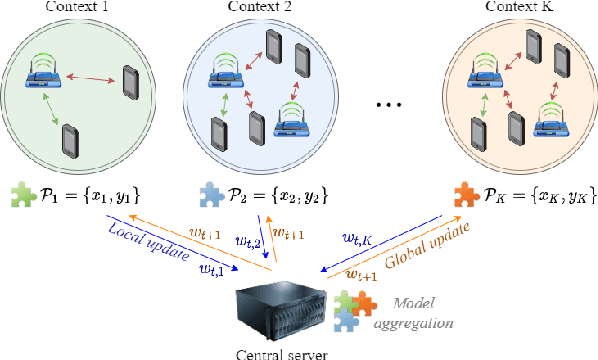

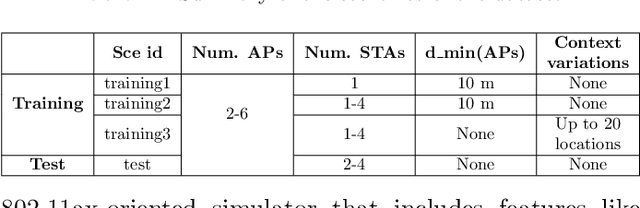

Federated Spatial Reuse Optimization in Next-Generation Decentralized IEEE 802.11 WLANs

Mar 20, 2022

Abstract:As wireless standards evolve, more complex functionalities are introduced to address the increasing requirements in terms of throughput, latency, security, and efficiency. To unleash the potential of such new features, artificial intelligence (AI) and machine learning (ML) are currently being exploited for deriving models and protocols from data, rather than by hand-programming. In this paper, we explore the feasibility of applying ML in next-generation wireless local area networks (WLANs). More specifically, we focus on the IEEE 802.11ax spatial reuse (SR) problem and predict its performance through federated learning (FL) models. The set of FL solutions overviewed in this work is part of the 2021 International Telecommunication Union (ITU) AI for 5G Challenge.
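As a rough illustration of the aggregation step common to many FL schemes, the sketch below implements sample-weighted federated averaging (FedAvg-style). The abstract does not state which aggregation rule the overviewed solutions use, so this is an assumption, and the function name and data layout are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Sample-weighted average of per-client model parameter vectors.

    client_weights: list of equal-length lists of floats, one per client.
    client_sizes: number of local training samples held by each client.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all clients must share the same model shape")
    # Each parameter is averaged with weights proportional to local data size.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical clients, e.g. two WLAN deployments with local models.
    print(federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3]))
```

Only model parameters leave each client in this scheme; the raw measurements used for local training stay on the device.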
