Lie Lu

SPUR: A Plug-and-Play Framework for Integrating Spatial Audio Understanding and Reasoning into Large Audio-Language Models

Nov 13, 2025

Abstract: Spatial perception is central to auditory intelligence, enabling accurate understanding of real-world acoustic scenes and advancing human-level perception of the world around us. While recent large audio-language models (LALMs) show strong reasoning over complex audio, most operate on monaural inputs and lack the ability to capture spatial cues such as direction, elevation, and distance. We introduce SPUR, a lightweight, plug-in approach that equips LALMs with spatial perception through minimal architectural changes. SPUR consists of: (i) a First-Order Ambisonics (FOA) encoder that maps (W, X, Y, Z) channels to rotation-aware, listener-centric spatial features, integrated into target LALMs via a multimodal adapter; and (ii) SPUR-Set, a spatial QA dataset combining open-source FOA recordings with controlled simulations, emphasizing relative direction, elevation, distance, and overlap for supervised spatial reasoning. Fine-tuning our model on SPUR-Set consistently improves spatial QA and multi-speaker attribution while preserving general audio understanding. SPUR provides a simple recipe that transforms monaural LALMs into spatially aware models. Extensive ablations validate the effectiveness of our approach.
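As a rough illustration of what the (W, X, Y, Z) channels carry, the sketch below encodes a mono signal as a plane wave, assuming SN3D normalization as in the AmbiX convention (FOA recordings may use other conventions; FuMa, for example, scales W by 1/√2). It is not SPUR's learned encoder. The helpers `encode_foa`, `rotate_yaw`, and `estimate_direction` are hypothetical: they show that azimuth and elevation are recoverable from the four channels, and that a yaw rotation of the listener only mixes X and Y.

```python
import numpy as np

def encode_foa(signal, azimuth_deg, elevation_deg):
    """Encode a mono signal as a plane wave into FOA channels (W, X, Y, Z).

    Assumes SN3D normalization (as in AmbiX). Channels are returned in
    (W, X, Y, Z) order to match the text, not AmbiX's ACN order (W, Y, Z, X).
    Azimuth is counter-clockwise from the front, elevation upward from the horizon.
    """
    az, el = np.deg2rad(azimuth_deg), np.deg2rad(elevation_deg)
    s = np.asarray(signal, dtype=float)
    w = s                              # omnidirectional
    x = s * np.cos(az) * np.cos(el)    # front/back dipole
    y = s * np.sin(az) * np.cos(el)    # left/right dipole
    z = s * np.sin(el)                 # up/down dipole
    return np.stack([w, x, y, z])

def rotate_yaw(foa, angle_deg):
    """Rotate the sound field about the vertical axis; W and Z are unchanged."""
    t = np.deg2rad(angle_deg)
    w, x, y, z = foa
    return np.stack([w, x * np.cos(t) - y * np.sin(t), x * np.sin(t) + y * np.cos(t), z])

def estimate_direction(foa):
    """Azimuth and elevation (degrees) from the time-averaged products W*X, W*Y, W*Z."""
    w, x, y, z = foa
    ix, iy, iz = np.mean(w * x), np.mean(w * y), np.mean(w * z)
    return np.degrees(np.arctan2(iy, ix)), np.degrees(np.arctan2(iz, np.hypot(ix, iy)))

# Usage: a source at 30 degrees azimuth and 10 degrees elevation, then a 45-degree yaw.
sig = np.random.default_rng(0).standard_normal(4800)
foa = encode_foa(sig, 30.0, 10.0)
az, el = estimate_direction(foa)                       # recovers about 30 and 10 degrees
az_rot, el_rot = estimate_direction(rotate_yaw(foa, 45.0))  # about 75 and 10 degrees
```

Because a head turn corresponds to a simple linear mix of X and Y, rotation can be handled analytically on FOA input rather than by re-recording.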

Transformation of audio embeddings into interpretable, concept-based representations

Apr 18, 2025

Abstract: Advancements in audio neural networks have established state-of-the-art results on downstream audio tasks. However, the black-box structure of these models makes it difficult to interpret the information encoded in their internal audio representations. In this work, we explore the semantic interpretability of audio embeddings extracted from these neural networks by leveraging CLAP, a contrastive learning model that brings audio and text into a shared embedding space. We implement a post-hoc method to transform CLAP embeddings into concept-based, sparse representations with semantic interpretability. Qualitative and quantitative evaluations show that the concept-based representations match or outperform the original audio embeddings on downstream tasks while providing interpretability. Additionally, we demonstrate that fine-tuning the concept-based representations can further improve their performance on downstream tasks. Lastly, we publish three audio-specific vocabularies for concept-based interpretability of audio embeddings.
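One simple way to picture a concept-based, sparse representation is to score an audio embedding against the text embeddings of a concept vocabulary and keep only the strongest matches. The sketch below does this with cosine similarity and top-k sparsification; it is an assumed, minimal stand-in rather than the paper's post-hoc method, and the vocabulary and random vectors are placeholders for real CLAP audio and text embeddings. `concept_representation` is a hypothetical name.

```python
import numpy as np

def l2_normalize(v, axis=-1):
    """Scale vectors to unit length along the given axis."""
    return v / np.linalg.norm(v, axis=axis, keepdims=True)

def concept_representation(audio_emb, concept_embs, k):
    """Sparse concept scores for one audio embedding (assumed top-k scheme).

    audio_emb:    (d,) audio embedding, e.g. from a CLAP audio encoder
    concept_embs: (n_concepts, d) text embeddings of the vocabulary terms
    Returns (n_concepts,) with cosine similarities for the k best concepts, zeros elsewhere.
    """
    sims = l2_normalize(concept_embs) @ l2_normalize(audio_emb)
    top = np.argsort(sims)[-k:]        # indices of the k largest similarities
    sparse = np.zeros_like(sims)
    sparse[top] = sims[top]
    return sparse

# Usage with random placeholders standing in for real CLAP embeddings.
rng = np.random.default_rng(0)
vocab = ["dog bark", "siren", "rain", "speech", "guitar"]
concept_embs = rng.standard_normal((len(vocab), 512))
audio_emb = concept_embs[1] + 0.1 * rng.standard_normal(512)   # close to "siren"
rep = concept_representation(audio_emb, concept_embs, k=2)
top_concept = vocab[int(np.argmax(rep))]   # "siren"
```

Each nonzero entry is tied to a named vocabulary term, which is what makes such a vector readable in a way the dense embedding is not.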

XAttnMark: Learning Robust Audio Watermarking with Cross-Attention

Feb 07, 2025

Abstract: The rapid proliferation of generative audio synthesis and editing technologies has raised significant concerns about copyright infringement, data provenance, and the spread of misinformation through deepfake audio. Watermarking offers a proactive solution by embedding imperceptible, identifiable, and traceable marks into audio content. While recent neural network-based watermarking methods like WavMark and AudioSeal have improved robustness and quality, they struggle to achieve both robust detection and accurate attribution simultaneously. This paper introduces Cross-Attention Robust Audio Watermark (XAttnMark), which bridges this gap by leveraging partial parameter sharing between the generator and the detector, a cross-attention mechanism for efficient message retrieval, and a temporal conditioning module for improved message distribution. Additionally, we propose a psychoacoustic-aligned temporal-frequency masking loss that captures fine-grained auditory masking effects, enhancing watermark imperceptibility. Our approach achieves state-of-the-art performance in both detection and attribution, demonstrating superior robustness against a wide range of audio transformations, including challenging generative edits at high editing strength. The project webpage is available at https://liuyixin-louis.github.io/xattnmark/.

CiTrus: Squeezing Extra Performance out of Low-data Bio-signal Transfer Learning

Dec 16, 2024

Abstract: Transfer learning for bio-signals has recently become an important technique to improve prediction performance on downstream tasks with small bio-signal datasets. Recent works have shown that pre-training a neural network model on a large dataset (e.g., EEG) with a self-supervised task, replacing the self-supervised head with a linear classification head, and fine-tuning the model on different downstream bio-signal datasets (e.g., EMG or ECG) can dramatically improve the performance on those datasets. In this paper, we propose a new convolution-transformer hybrid model architecture with masked auto-encoding for low-data bio-signal transfer learning, introduce a frequency-based masked auto-encoding task, employ a more comprehensive evaluation framework, and evaluate how much and when (multimodal) pre-training improves fine-tuning performance. We also introduce a dramatically more performant method of aligning a downstream dataset with a different temporal length and sampling rate to the original pre-training dataset. Our findings indicate that the convolution-only part of our hybrid model can achieve state-of-the-art performance on some low-data downstream tasks. The performance is often improved even further with our full model. In the case of transformer-based models, we find that pre-training especially improves performance on downstream datasets, multimodal pre-training often increases those gains further, and our frequency-based pre-training performs best on average in the lowest and highest data regimes.
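The abstract does not detail the frequency-based masked auto-encoding task, so the sketch below is only a generic, assumed variant: it zeroes a random contiguous band of rFFT bins, returns the masked signal as the model input, and scores reconstruction only on the hidden bins, as masked auto-encoders usually do. `frequency_mask` and `masked_spectral_loss` are hypothetical names, and the random segment stands in for a bio-signal window.

```python
import numpy as np

def frequency_mask(x, mask_ratio, rng):
    """Zero a random contiguous band of rFFT bins of a 1-D signal (assumed scheme).

    Returns the masked time-domain signal (model input), the full spectrum
    (reconstruction target), and a boolean mask marking the hidden bins.
    """
    spec = np.fft.rfft(x)
    n_bins = spec.shape[0]
    width = max(1, int(round(mask_ratio * n_bins)))
    start = rng.integers(0, n_bins - width + 1)   # band always fits inside the spectrum
    mask = np.zeros(n_bins, dtype=bool)
    mask[start:start + width] = True
    x_masked = np.fft.irfft(np.where(mask, 0.0, spec), n=x.shape[0])
    return x_masked, spec, mask

def masked_spectral_loss(pred_spec, target_spec, mask):
    """Mean squared error over the masked bins only."""
    diff = pred_spec[mask] - target_spec[mask]
    return float(np.mean(np.abs(diff) ** 2))

# Usage: hide a quarter of the spectrum of a 256-sample segment (129 rFFT bins).
rng = np.random.default_rng(0)
segment = rng.standard_normal(256)
masked, target, mask = frequency_mask(segment, 0.25, rng)
```

Masking a band of frequencies rather than a span of time forces the model to infer spectral content from the remaining bands, which is one plausible reading of "frequency-based".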

Audio Match Cutting: Finding and Creating Matching Audio Transitions in Movies and Videos

Aug 20, 2024

Abstract: A "match cut" is a common video editing technique where a pair of shots that have a similar composition transition fluidly from one to another. Although match cuts are often visual, certain match cuts involve the fluid transition of audio, where sounds from different sources merge into one indistinguishable transition between two shots. In this paper, we explore the ability to automatically find and create "audio match cuts" within videos and movies. We create a self-supervised audio representation for audio match cutting and develop a coarse-to-fine audio match pipeline that recommends matching shots and creates the blended audio. We further annotate a dataset for the proposed audio match cut task and compare the ability of multiple audio representations to find audio match cut candidates. Finally, we evaluate multiple methods to blend two matching audio candidates with the goal of creating a smooth transition. Project page and examples are available at: https://denfed.github.io/audiomatchcut/
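The two ends of the pipeline, candidate retrieval and blending, can be sketched as follows. The sketch assumes each shot already has a fixed-size audio embedding (the paper learns its own self-supervised representation) and uses an equal-power crossfade as the blend, which is a common baseline and not necessarily the best of the blending methods the paper compares. `rank_candidates` and `equal_power_crossfade` are hypothetical helpers.

```python
import numpy as np

def rank_candidates(query_emb, cand_embs, top_k):
    """Coarse stage: rank candidate shots by cosine similarity of audio embeddings."""
    q = query_emb / np.linalg.norm(query_emb)
    c = cand_embs / np.linalg.norm(cand_embs, axis=1, keepdims=True)
    sims = c @ q
    order = np.argsort(-sims)[:top_k]      # most similar first
    return order, sims[order]

def equal_power_crossfade(a, b, fade_len):
    """Blend the tail of clip a into the head of clip b over fade_len samples.

    The cosine/sine gains satisfy g_out**2 + g_in**2 == 1, which keeps the
    perceived level roughly constant when the two clips are uncorrelated.
    """
    if not 0 < fade_len <= min(len(a), len(b)):
        raise ValueError("fade_len must be between 1 and the shorter clip length")
    t = np.linspace(0.0, np.pi / 2, fade_len)
    g_out, g_in = np.cos(t), np.sin(t)
    overlap = a[-fade_len:] * g_out + b[:fade_len] * g_in
    return np.concatenate([a[:-fade_len], overlap, b[fade_len:]])
```

A finer stage would then search for the best alignment offset between the top candidates before crossfading; that step is omitted here.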

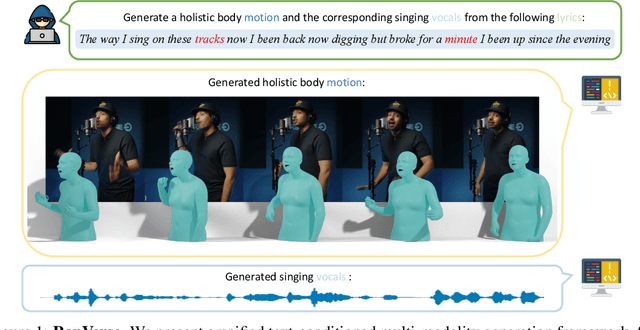

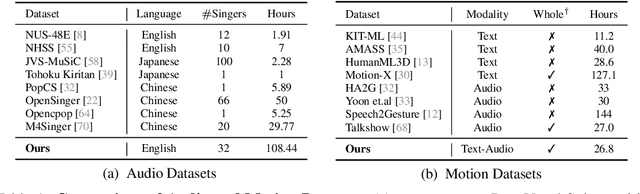

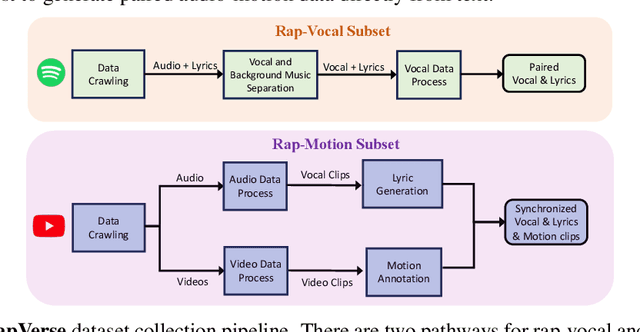

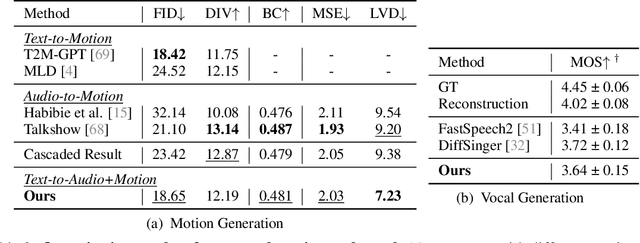

RapVerse: Coherent Vocals and Whole-Body Motions Generations from Text

May 30, 2024

Abstract: In this work, we introduce a challenging task of simultaneously generating 3D holistic body motions and singing vocals directly from textual lyrics, advancing beyond existing works that typically address these two modalities in isolation. To facilitate this, we first collect the RapVerse dataset, a large dataset containing synchronous rapping vocals, lyrics, and high-quality 3D holistic body meshes. With the RapVerse dataset, we investigate the extent to which scaling autoregressive multimodal transformers across language, audio, and motion can enhance the coherent and realistic generation of vocals and whole-body human motions. For modality unification, a vector-quantized variational autoencoder is employed to encode whole-body motion sequences into discrete motion tokens, while a vocal-to-unit model is leveraged to obtain quantized audio tokens preserving content, prosodic information, and singer identity. By jointly performing transformer modeling on these three modalities in a unified way, our framework ensures a seamless and realistic blend of vocals and human motions. Extensive experiments demonstrate that our unified generation framework not only produces coherent and realistic singing vocals alongside human motions directly from textual inputs but also rivals the performance of specialized single-modality generation systems, establishing new benchmarks for joint vocal-motion generation. The project page is available for research purposes at https://vis-www.cs.umass.edu/RapVerse.
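The quantization step that turns continuous motion features into discrete tokens can be sketched as a nearest-neighbor lookup in a codebook, as in a standard VQ-VAE. The second helper shows one common way (an assumption here, not stated in the abstract) to place text, audio, and motion tokens in one shared vocabulary: offset each modality's ids by the sizes of the vocabularies before it. The vocabulary sizes in the usage are hypothetical.

```python
import numpy as np

def quantize(features, codebook):
    """Nearest-codebook lookup, as in the quantization step of a VQ-VAE.

    features: (T, d) continuous encoder outputs, e.g. one vector per motion segment
    codebook: (K, d) code vectors
    Returns (tokens, quantized): (T,) integer ids and the (T, d) selected codes.
    """
    # Squared Euclidean distances between every feature and every code: (T, K)
    d2 = (
        np.sum(features ** 2, axis=1, keepdims=True)
        - 2.0 * features @ codebook.T
        + np.sum(codebook ** 2, axis=1)
    )
    tokens = np.argmin(d2, axis=1)
    return tokens, codebook[tokens]

def to_shared_vocab(tokens, offset):
    """Shift modality-specific ids into one shared vocabulary (hypothetical layout)."""
    return np.asarray(tokens) + offset

# Usage: a tiny 3-entry codebook; motion ids placed after a hypothetical
# 1000-token text vocabulary and 500-token audio vocabulary.
codebook = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
frames = np.array([[0.9, 0.1], [0.1, 0.2], [0.2, 0.8]])
tokens, quantized = quantize(frames, codebook)           # tokens == [1, 0, 2]
motion_ids = to_shared_vocab(tokens, offset=1000 + 500)  # [1501, 1500, 1502]
```

In a trained VQ-VAE the codebook is learned jointly with the encoder and decoder; here it is simply an input array.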

DeepSpace: Dynamic Spatial and Source Cue Based Source Separation for Dialog Enhancement

Feb 22, 2023

Abstract: Dialog Enhancement (DE) is a feature which allows a user to increase the level of dialog in TV or movie content relative to non-dialog sounds. When only the original mix is available, DE is "unguided," and requires source separation. In this paper, we describe the DeepSpace system, which performs source separation using both dynamic spatial cues and source cues to support unguided DE. Its technologies include spatio-level filtering (SLF) and deep-learning based dialog classification and denoising. Using subjective listening tests, we show that DeepSpace demonstrates significantly improved overall performance relative to state-of-the-art systems available for testing. We explore the feasibility of using existing automated metrics to evaluate unguided DE systems.

High Quality Audio Coding with MDCTNet

Dec 08, 2022

Abstract: We propose a neural audio generative model, MDCTNet, operating in the perceptually weighted domain of an adaptive modified discrete cosine transform (MDCT). The architecture of the model captures correlations in both time and frequency directions with recurrent layers (RNNs). An audio coding system is obtained by training MDCTNet on a diverse set of fullband monophonic audio signals at 48 kHz sampling, conditioned by a perceptual audio encoder. In a subjective listening test with ten excerpts chosen to be balanced across content types, yet stressful for both codecs, the mean performance of the proposed system at 24 kb/s variable bitrate (VBR) is similar to that of Opus at twice the bitrate (48 kb/s).
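For reference, a plain fixed-size MDCT with a sine window and 50% overlap is sketched below; MDCTNet operates in an adaptive, perceptually weighted MDCT domain, which this does not reproduce. The sketch shows the two properties that make the MDCT attractive for coding: each hop of N samples yields exactly N coefficients (critical sampling), and overlap-adding the windowed inverse transforms cancels the time-domain aliasing. Function names are hypothetical.

```python
import numpy as np

def mdct_basis(N):
    """(N, 2N) cosine basis: C[k, n] = cos(pi/N * (n + 0.5 + N/2) * (k + 0.5))."""
    n = np.arange(2 * N)
    k = np.arange(N)[:, None]
    return np.cos(np.pi / N * (n + 0.5 + N / 2) * (k + 0.5))

def sine_window(N):
    """Sine window of length 2N; satisfies w[n]**2 + w[n+N]**2 == 1 (Princen-Bradley)."""
    n = np.arange(2 * N)
    return np.sin(np.pi * (n + 0.5) / (2 * N))

def mdct_frames(x, N):
    """Windowed MDCT with hop N: each 2N-sample frame becomes N coefficients."""
    C, w = mdct_basis(N), sine_window(N)
    n_frames = len(x) // N - 1
    frames = np.stack([x[i * N:i * N + 2 * N] * w for i in range(n_frames)])
    return frames @ C.T                       # (n_frames, N)

def imdct_overlap_add(coeffs, N):
    """Inverse MDCT, synthesis window, and overlap-add (time-domain aliasing cancellation)."""
    C, w = mdct_basis(N), sine_window(N)
    out = np.zeros((coeffs.shape[0] + 1) * N)
    for i, X in enumerate(coeffs):
        # The 2/N scale pairs with a Princen-Bradley window to give unit overall gain.
        out[i * N:i * N + 2 * N] += (2.0 / N) * (X @ C) * w
    return out

# Usage: reconstruct the samples covered by two overlapping frames.
rng = np.random.default_rng(0)
N = 16
x = rng.standard_normal(8 * N)
coeffs = mdct_frames(x, N)           # shape (7, 16): N coefficients per hop
y = imdct_overlap_add(coeffs, N)     # y[N:7*N] matches x[N:7*N]
```

Only the first and last N samples differ from the input, because each is covered by a single frame and its alias term is not cancelled.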

Stereo Speech Enhancement Using Custom Mid-Side Signals and Monaural Processing

Nov 25, 2022

Abstract: Speech Enhancement (SE) systems typically operate on monaural input and are used for applications including voice communications and capture cleanup for user-generated content. Recent advancements and changes in the devices used for these applications are likely to increase the amount of two-channel content for the same applications. However, SE systems are typically designed for monaural input; stereo results produced using trivial methods such as channel-independent or mid-side processing may be unsatisfactory, including substantial speech distortions. To address this, we propose a system that creates a novel representation of stereo signals called Custom Mid-Side Signals (CMSS). CMSS extend the benefits of mid-side signals for center-panned speech to a much larger class of input signals. This in turn allows any existing monaural SE system to operate as an efficient stereo system by processing the custom mid signal. We describe how the parameters needed for CMSS can be efficiently estimated by a component of the spatio-level filtering source separation system. Subjective listening tests using state-of-the-art deep learning-based SE systems on stereo content with various speech mixing styles show that CMSS processing leads to improved speech quality at approximately half the cost of channel-independent processing.
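The abstract does not give the CMSS equations, so the sketch below is a hypothetical illustration of the idea rather than the paper's method. Instead of the fixed mid = (L + R)/2, it rotates (L, R) by an estimated pan angle so that a single panned speech source lands entirely in the custom mid signal, runs one monaural enhancer on that signal, and inverts the rotation. The pan estimate from channel energies stands in for the spatio-level filtering component and assumes one dominant source; all function names are hypothetical.

```python
import numpy as np

def mid_side(left, right):
    """Conventional mid/side: M = (L + R) / 2, S = (L - R) / 2."""
    return (left + right) / 2.0, (left - right) / 2.0

def custom_mid_side(left, right, theta):
    """Hypothetical panning-aware mid/side: rotate (L, R) by the pan angle theta.

    A source panned with gains (cos(theta), sin(theta)) ends up entirely in
    the returned mid signal; theta = pi/4 gives an orthonormal mid/side.
    """
    c, s = np.cos(theta), np.sin(theta)
    return c * left + s * right, -s * left + c * right

def inverse_custom_mid_side(mid, side, theta):
    """Undo the rotation to recover (L, R)."""
    c, s = np.cos(theta), np.sin(theta)
    return c * mid - s * side, s * mid + c * side

def estimate_pan_angle(left, right):
    """Pan angle in [0, pi/2] from channel energies (assumes one dominant source)."""
    return np.arctan2(np.linalg.norm(right), np.linalg.norm(left))

def stereo_enhance(left, right, enhance_mono):
    """Run a monaural enhancer once, on the custom mid signal only."""
    theta = estimate_pan_angle(left, right)
    mid, side = custom_mid_side(left, right, theta)
    return inverse_custom_mid_side(enhance_mono(mid), side, theta)
```

Only one monaural pass is needed instead of one per channel, which is consistent with the roughly halved cost reported above.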