Lachlan Tychsen-Smith

Heterogeneous robot teams with unified perception and autonomy: How Team CSIRO Data61 tied for the top score at the DARPA Subterranean Challenge

Feb 26, 2023

Abstract: The DARPA Subterranean Challenge was designed for competitors to develop and deploy teams of autonomous robots to explore difficult unknown underground environments. The environments were categorised into human-made tunnels, underground urban infrastructure and natural caves, and each of these subdomains had many challenging elements for robot perception, locomotion, navigation and autonomy. These included degraded wireless communication, poor visibility due to smoke, narrow passages and doorways, clutter, uneven ground, slippery and loose terrain, stairs, ledges, overhangs, dripping water, and dynamic obstacles that move to block paths, among others. In the Final Event of this challenge, held in September 2021, the course consisted of all three subdomains. The task was for the robot team to perform a scavenger hunt for a number of pre-defined artefacts within a limited time frame. Only one human supervisor was allowed to communicate with the robots once they were in the course. Points were scored when accurate detections and their locations were communicated back to the scoring server. A total of 8 teams competed in the finals held at the Mega Cavern in Louisville, KY, USA. This article describes the systems deployed by Team CSIRO Data61 that tied for the top score and won second place at the event.

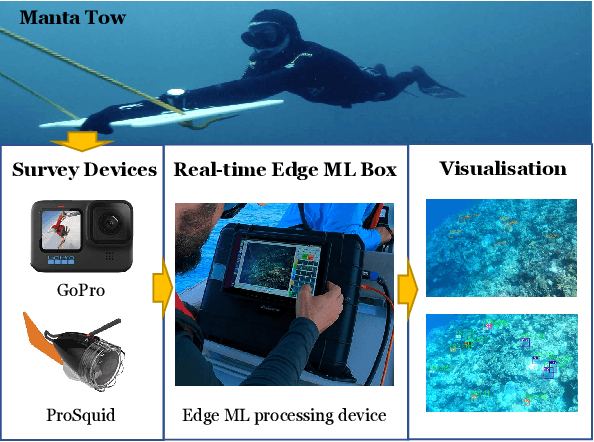

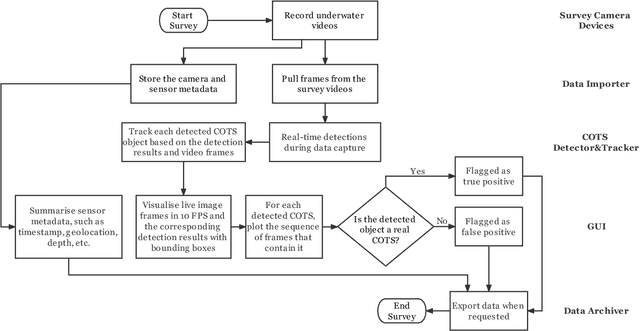

A Real-time Edge-AI System for Reef Surveys

Aug 01, 2022

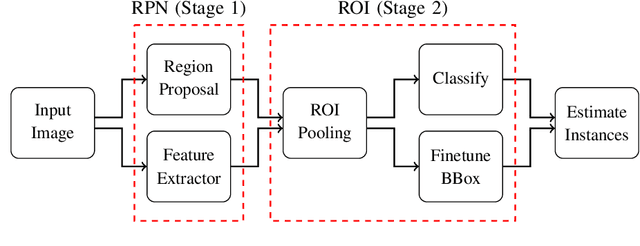

Abstract: Crown-of-Thorn Starfish (COTS) outbreaks are a major cause of coral loss on the Great Barrier Reef (GBR) and substantial surveillance and control programs are ongoing to manage COTS populations to ecologically sustainable levels. In this paper, we present a comprehensive real-time machine learning-based underwater data collection and curation system on edge devices for COTS monitoring. In particular, we leverage the power of deep learning-based object detection techniques, and propose a resource-efficient COTS detector that performs detection inferences on the edge device to assist marine experts with COTS identification during the data collection phase. The preliminary results show that several strategies for improving computational efficiency (e.g., batch-wise processing, frame skipping, model input size) can be combined to run the proposed detection model on edge hardware with low resource consumption and low information loss.
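Two of the efficiency strategies named in the abstract, frame skipping and batch-wise processing, can be illustrated with a minimal Python sketch. This is not the authors' pipeline: the function names `skip_frames` and `batched`, the `stride` and `batch_size` parameters, and the use of integers as stand-in frames are all assumptions made for illustration.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def skip_frames(frames: Iterable[T], stride: int) -> Iterator[T]:
    """Yield every `stride`-th frame, starting with the first (hypothetical helper)."""
    if stride < 1:
        raise ValueError("stride must be >= 1")
    for index, frame in enumerate(frames):
        if index % stride == 0:
            yield frame


def batched(frames: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Group frames into lists of up to `batch_size` items (hypothetical helper)."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    batch: List[T] = []
    for frame in frames:
        batch.append(frame)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        # Emit the final, possibly smaller, batch so no frame is dropped.
        yield batch


if __name__ == "__main__":
    # Integers stand in for video frames; a real system would pass image arrays
    # and hand each batch to a detector in a single inference call.
    for batch in batched(skip_frames(range(10), stride=3), batch_size=2):
        print(batch)
```

Here a larger `stride` reduces how many frames reach the detector, while a larger `batch_size` reduces how many inference calls are made; how these trade off against information loss is the question the abstract says the paper evaluates.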

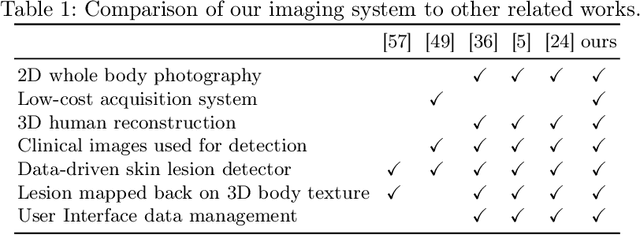

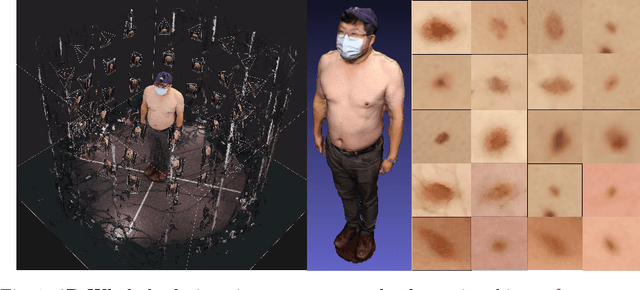

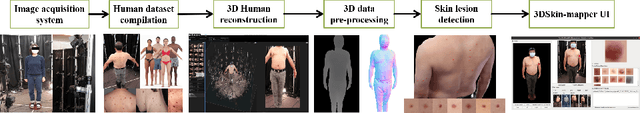

Monitoring of Pigmented Skin Lesions Using 3D Whole Body Imaging

May 14, 2022

Abstract: Modern data-driven machine learning research that enables revolutionary advances in image analysis has now become a critical tool to redefine how skin lesions are documented, mapped, and tracked. We propose a 3D whole body imaging prototype to enable rapid evaluation and mapping of skin lesions. A modular camera rig arranged in a cylindrical configuration is designed to automatically capture synchronised images from multiple angles for entire body scanning. We develop algorithms for 3D body image reconstruction, data processing and skin lesion detection based on deep convolutional neural networks. We also propose a customised, intuitive and flexible interface that allows the user to interact and collaborate with the machine to understand the data. The hybrid of the human and computer is represented by the analysis of 2D lesion detection, 3D mapping and data management. The experimental results using synthetic and real images demonstrate the effectiveness of the proposed solution by providing multiple views of the target skin lesion, enabling further 3D geometry analysis. Skin lesions are identified as outliers which deserve more attention from a skin cancer physician. Our detector identifies lesions at a performance level comparable to that of a physician. The proposed 3D whole body imaging system can be used by dermatological clinics, allowing for fast documentation of lesions and quick and accurate analysis of the entire body to detect suspicious lesions. Because of its fast examination, the method might be used for screening or epidemiological investigations. 3D data analysis has the potential to change the paradigm of total-body photography with many applications in skin diseases, including inflammatory and pigmentary disorders.

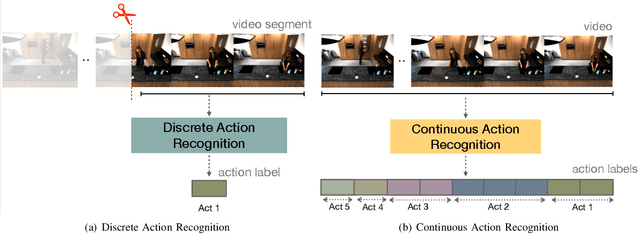

Continuous Human Action Recognition for Human-Machine Interaction: A Review

Feb 26, 2022

Abstract: With advances in data-driven machine learning research, a wide variety of prediction models have been proposed to capture spatio-temporal features for the analysis of video streams. Recognising actions and detecting action transitions within an input video are challenging but necessary tasks for applications that require real-time human-machine interaction. By reviewing a large body of recent related work in the literature, we thoroughly analyse, explain and compare action segmentation methods and provide details on the feature extraction and learning strategies that are used in most state-of-the-art methods. We cover the impact of the performance of object detection and tracking techniques on human action segmentation methodologies. We investigate the application of such models to real-world scenarios and discuss several limitations and key research directions towards improving interpretability, generalisation, optimisation and deployment.

The CSIRO Crown-of-Thorn Starfish Detection Dataset

Nov 29, 2021

Abstract: Crown-of-Thorn Starfish (COTS) outbreaks are a major cause of coral loss on the Great Barrier Reef (GBR) and substantial surveillance and control programs are underway in an attempt to manage COTS populations to ecologically sustainable levels. We release a large-scale, annotated underwater image dataset from a COTS outbreak area on the GBR, to encourage research on Machine Learning and AI-driven technologies to improve the detection, monitoring, and management of COTS populations at reef scale. The dataset is released and hosted in a Kaggle competition that challenges the international Machine Learning community with the task of COTS detection from these underwater images.

Heterogeneous Ground and Air Platforms, Homogeneous Sensing: Team CSIRO Data61's Approach to the DARPA Subterranean Challenge

Apr 19, 2021

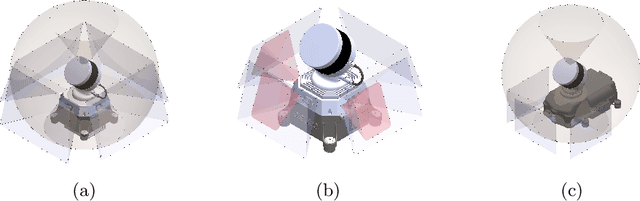

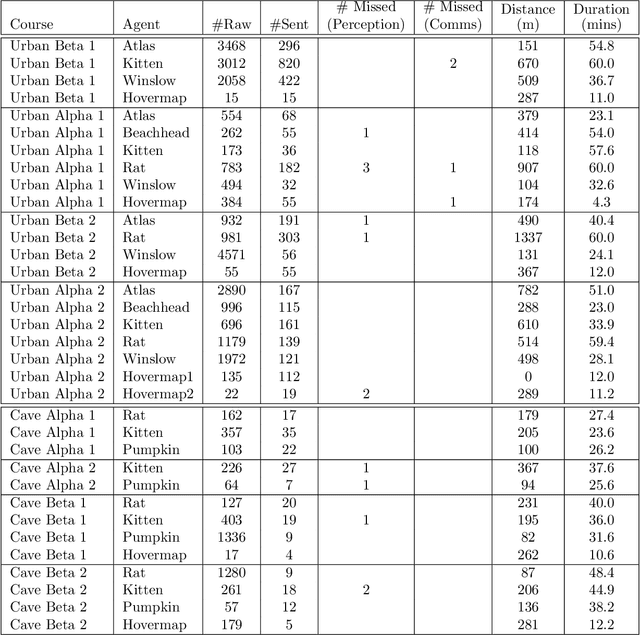

Abstract: Heterogeneous teams of robots, leveraging a balance between autonomy and human interaction, bring powerful capabilities to the problem of exploring dangerous, unstructured subterranean environments. Here we describe the solution developed by Team CSIRO Data61, consisting of CSIRO, Emesent and Georgia Tech, during the DARPA Subterranean Challenge. The presented systems were fielded in the Tunnel Circuit in August 2019, the Urban Circuit in February 2020, and in our own Cave event, conducted in September 2020. A unique capability of the fielded team is the homogeneous sensing of the platforms utilised, which is leveraged to obtain a decentralised multi-agent SLAM solution on each platform (both ground agents and UAVs) using peer-to-peer communications. This enabled a shift in focus from constructing a pervasive communications network to relying on multi-agent autonomy, motivated by experiences in early circuit events. These experiences also showed the surprising capability of rugged tracked platforms for challenging terrain, which in turn led to the heterogeneous team structure based on a BIA5 OzBot Titan ground robot and an Emesent Hovermap UAV, supplemented by smaller tracked or legged ground robots. The ground agents use a common CatPack perception module, which allowed reuse of the perception and autonomy stack across all ground agents with minimal adaptation.

Improving Object Localization with Fitness NMS and Bounded IoU Loss

Mar 12, 2018

Abstract: We demonstrate that many detection methods are designed to identify only a sufficiently accurate bounding box, rather than the best available one. To address this issue, we propose a simple and fast modification to the existing methods called Fitness NMS. This method is tested with the DeNet model and obtains a significantly improved MAP at greater localization accuracies without a loss in evaluation rate, and can be used in conjunction with Soft NMS for additional improvements. Next, we derive a novel bounding box regression loss based on a set of IoU upper bounds that better matches the goal of IoU maximization while still providing good convergence properties. Following these novelties, we investigate RoI clustering schemes for improving evaluation rates for the DeNet wide model variants and provide an analysis of localization performance at various input image dimensions. We obtain a MAP of 33.6%@79Hz and 41.8%@5Hz for MSCOCO and a Titan X (Maxwell). Source code available from: https://github.com/lachlants/denet
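For readers unfamiliar with the terms, the following minimal Python sketch shows intersection-over-union (IoU) and the standard greedy non-maximum suppression (NMS) procedure that the abstract refers to as an existing method. It does not implement Fitness NMS or the Bounded IoU loss; the `(x0, y0, x1, y1)` box layout, the function names `iou` and `greedy_nms`, and the default threshold of 0.5 are assumptions chosen for illustration.

```python
from typing import List, Sequence, Tuple

# Assumed box layout: (x0, y0, x1, y1) with x0 <= x1 and y0 <= y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Standard greedy NMS: return indices of kept boxes, highest score first.

    Boxes are ranked by score alone; a box is kept only if it does not overlap
    an already-kept box by more than `iou_threshold`.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))
```

In this baseline, the ranking depends only on the detector's score for each box, so among heavily overlapping candidates the one with the highest score survives regardless of how well it is localized. That is the behaviour the abstract describes as selecting a sufficiently accurate box rather than the best available one.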

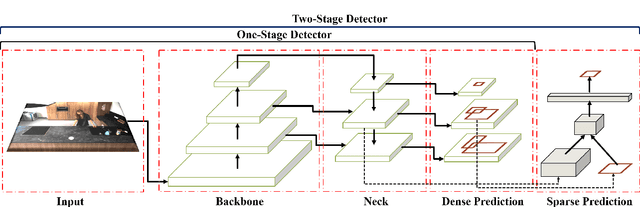

DeNet: Scalable Real-time Object Detection with Directed Sparse Sampling

Jul 21, 2017

Abstract: We define the object detection from imagery problem as estimating a very large but extremely sparse bounding box dependent probability distribution. Subsequently, we identify a sparse distribution estimation scheme, Directed Sparse Sampling, and employ it in a single end-to-end CNN based detection model. This methodology extends and formalizes previous state-of-the-art detection models with an additional emphasis on high evaluation rates and reduced manual engineering. We introduce two novelties, a corner based region-of-interest estimator and a deconvolution based CNN model. The resulting model is scene adaptive, does not require manually defined reference bounding boxes and produces highly competitive results on MSCOCO, Pascal VOC 2007 and Pascal VOC 2012 with real-time evaluation rates. Further analysis suggests our model performs particularly well when fine-grained object localization is desirable. We argue that this advantage stems from the significantly larger set of available regions-of-interest relative to other methods. Source code is available from: https://github.com/lachlants/denet
