Jintao Fei

Citrus: Leveraging Expert Cognitive Pathways in a Medical Language Model for Advanced Medical Decision Support

Feb 26, 2025

Abstract: Large language models (LLMs), particularly those with reasoning capabilities, have rapidly advanced in recent years, demonstrating significant potential across a wide range of applications. However, their deployment in healthcare, especially in disease reasoning tasks, is hindered by the challenge of acquiring expert-level cognitive data. In this paper, we introduce Citrus, a medical language model that bridges the gap between clinical expertise and AI reasoning by emulating the cognitive processes of medical experts. The model is trained on a large corpus of simulated expert disease reasoning data, synthesized using a novel approach that accurately captures the decision-making pathways of clinicians. This approach enables Citrus to better simulate the complex reasoning processes involved in diagnosing and treating medical conditions. To further address the lack of publicly available datasets for medical reasoning tasks, we release the last-stage training data, including a custom-built medical diagnostic dialogue dataset. This open-source contribution aims to support further research and development in the field. Evaluations using authoritative benchmarks such as MedQA, covering tasks in medical reasoning and language understanding, show that Citrus achieves superior performance compared to other models of similar size. These results highlight Citrus's potential to significantly enhance medical decision support systems, providing a more accurate and efficient tool for clinical decision-making.

JoyVASA: Portrait and Animal Image Animation with Diffusion-Based Audio-Driven Facial Dynamics and Head Motion Generation

Nov 14, 2024

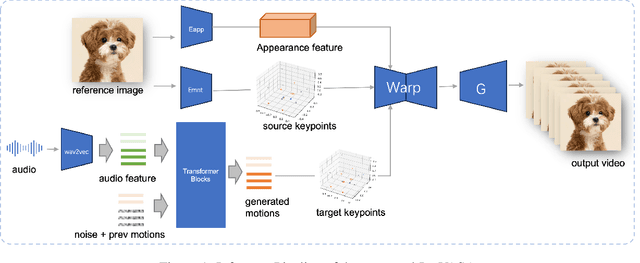

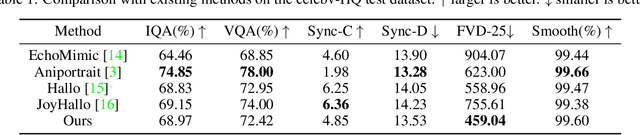

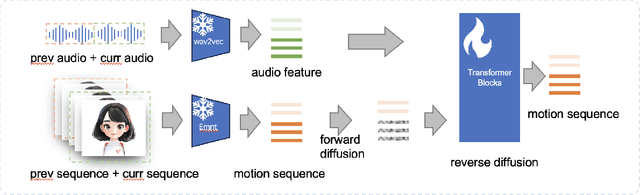

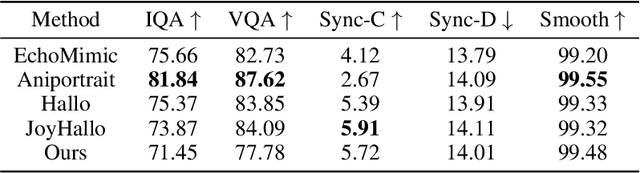

Abstract: Audio-driven portrait animation has made significant advances with diffusion-based models, improving video quality and lip-sync accuracy. However, the increasing complexity of these models has led to inefficiencies in training and inference, as well as constraints on video length and inter-frame continuity. In this paper, we propose JoyVASA, a diffusion-based method for generating facial dynamics and head motion in audio-driven facial animation. Specifically, in the first stage, we introduce a decoupled facial representation framework that separates dynamic facial expressions from static 3D facial representations. This decoupling allows the system to generate longer videos by combining any static 3D facial representation with dynamic motion sequences. Then, in the second stage, a diffusion transformer is trained to generate motion sequences directly from audio cues, independent of character identity. Finally, a generator trained in the first stage uses the 3D facial representation and the generated motion sequences as inputs to render high-quality animations. With the decoupled facial representation and the identity-independent motion generation process, JoyVASA extends beyond human portraits to animate animal faces seamlessly. The model is trained on a hybrid dataset of private Chinese and public English data, enabling multilingual support. Experimental results validate the effectiveness of our approach. Future work will focus on improving real-time performance and refining expression control, further expanding the applications in portrait animation. The code will be available at: https://jdhalgo.github.io/JoyVASA.

MHAD: Multimodal Home Activity Dataset with Multi-Angle Videos and Synchronized Physiological Signals

Sep 14, 2024

Abstract: Video-based physiology, exemplified by remote photoplethysmography (rPPG), extracts physiological signals such as pulse and respiration by analyzing subtle changes in video recordings. This non-contact, real-time monitoring method holds great potential for home settings. Despite the valuable contributions of public benchmark datasets to this technology, there is currently no dataset specifically designed for passive home monitoring. Existing datasets are often limited to close-up, static, frontal recordings and typically include only 1-2 physiological signals. To advance video-based physiology in real home settings, we introduce the MHAD dataset. It comprises 1,440 videos from 40 subjects, capturing 6 typical activities from 3 angles in a real home environment. Additionally, 5 physiological signals were recorded, making it a comprehensive video-based physiology dataset. MHAD is compatible with the rPPG-toolbox and has been validated using several unsupervised and supervised methods. Our dataset is publicly available at https://github.com/jdh-algo/MHAD-Dataset.
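
To make the rPPG idea in the abstract above concrete, here is a minimal sketch of one simple unsupervised baseline: take the mean green-channel intensity of the skin region in each frame and pick the dominant frequency within a plausible heart-rate band. This is an illustration under stated assumptions, not necessarily one of the methods used to validate MHAD. The function name `estimate_pulse_bpm`, the 0.7-4.0 Hz band, and the synthetic trace are all hypothetical choices made for the example.

```python
import math


def estimate_pulse_bpm(green_means, fps, low_hz=0.7, high_hz=4.0):
    """Estimate pulse rate (beats per minute) from per-frame mean green values.

    green_means: one number per video frame (assumed already averaged over a
                 face or skin region; the region detection is out of scope).
    fps:         video frame rate in frames per second.
    low_hz/high_hz: assumed heart-rate band (0.7-4.0 Hz is 42-240 bpm).
    """
    n = len(green_means)
    mean = sum(green_means) / n
    x = [v - mean for v in green_means]  # remove the DC component

    best_freq, best_power = 0.0, -1.0
    # Plain discrete Fourier transform, evaluated only on in-band bins.
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(x[i] * math.cos(2 * math.pi * k * i / n) for i in range(n))
        im = sum(x[i] * math.sin(2 * math.pi * k * i / n) for i in range(n))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


# Synthetic 10-second trace at 30 fps with a 1.2 Hz (72 bpm) pulse component.
fps = 30
trace = [100.0 + 0.5 * math.sin(2 * math.pi * 1.2 * i / fps) for i in range(300)]
bpm = estimate_pulse_bpm(trace, fps)
```

Real recordings need more than this, for example motion handling, detrending, and band-pass filtering, which is part of why home-setting data with movement and multiple camera angles is harder than close-up frontal video.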

MVKT-ECG: Efficient Single-lead ECG Classification on Multi-Label Arrhythmia by Multi-View Knowledge Transferring

Jan 28, 2023

Abstract: The widespread emergence of smart ECG devices has sparked demand for intelligent single-lead ECG-based diagnostic systems. However, developing a single-lead ECG interpretation model for multi-disease diagnosis is challenging because a single lead lacks some key disease information. In this work, we propose inter-lead Multi-View Knowledge Transferring of ECG (MVKT-ECG) to boost single-lead ECG's ability for multi-label disease diagnosis. This training strategy transfers disease knowledge from multiple views of ECG (e.g., 12-lead ECG) to a single-lead ECG interpretation model, helping it mine details in single-lead ECG signals that neural networks easily overlook. MVKT-ECG uses this lead variety as a supervision signal within a teacher-student paradigm, where a teacher that observes multi-lead ECG educates a student that observes only single-lead ECG. Since the mutual disease information between the single-lead ECG and multi-lead ECG plays a key role in knowledge transfer, we present a new disease-aware Contrastive Lead-information Transferring (CLT) to improve the mutual disease information between the single-lead ECG and multi-lead ECG. Moreover, we modify traditional Knowledge Distillation into multi-label disease Knowledge Distillation (MKD) to make it applicable to multi-label disease diagnosis. Comprehensive experiments verify that MVKT-ECG is highly effective at improving the diagnostic performance of single-lead ECG.
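
The abstract above does not spell out the MKD loss, so the following is only a sketch of one common way to adapt knowledge distillation to the multi-label case: treat each disease label as an independent binary problem, soften both teacher and student logits with a temperature, and average the per-label binary KL divergence. The function name `multilabel_kd_loss`, the temperature value, and the example logits are assumptions for illustration, not the paper's formulation.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def multilabel_kd_loss(student_logits, teacher_logits, temperature=2.0):
    """Mean per-label binary KL(teacher || student) on temperature-softened outputs.

    student_logits: per-label logits from the single-lead student (assumed).
    teacher_logits: per-label logits from the multi-lead teacher (assumed).
    """
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for s, t in zip(student_logits, teacher_logits):
        p = sigmoid(t / temperature)  # softened teacher probability
        q = sigmoid(s / temperature)  # softened student probability
        total += p * math.log((p + eps) / (q + eps))
        total += (1.0 - p) * math.log((1.0 - p + eps) / (1.0 - q + eps))
    return total / len(student_logits)


# Hypothetical logits for three arrhythmia labels.
teacher = [3.0, -2.0, 0.5]
student = [1.0, -0.5, 0.0]
loss = multilabel_kd_loss(student, teacher)
```

Unlike softmax-based distillation, the sigmoid form does not force the labels to compete, which matters when one recording can carry several diagnoses at once. In practice this term would be combined with the usual supervised multi-label loss on the ground-truth annotations.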
