Guijin Wang

PRISM: Probabilistic and Robust Inverse Solver with Measurement-Conditioned Diffusion Prior for Blind Inverse Problems

Sep 19, 2025

Abstract:Diffusion models are now commonly used to solve inverse problems in computational imaging. However, most diffusion-based inverse solvers require complete knowledge of the forward operator. In this work, we introduce a novel probabilistic and robust inverse solver with measurement-conditioned diffusion prior (PRISM) to effectively address blind inverse problems. PRISM offers a technical advancement over current methods by incorporating a powerful measurement-conditioned diffusion model into a theoretically principled posterior sampling scheme. Experiments on blind image deblurring validate the effectiveness of the proposed method, demonstrating the superior performance of PRISM over state-of-the-art baselines in both image and blur kernel recovery.

VISTA: Unsupervised 2D Temporal Dependency Representations for Time Series Anomaly Detection

Apr 03, 2025

Abstract:Time Series Anomaly Detection (TSAD) is essential for uncovering rare and potentially harmful events in unlabeled time series data. Existing methods are highly dependent on clean, high-quality inputs, making them susceptible to noise and real-world imperfections. Additionally, intricate temporal relationships in time series data are often inadequately captured in traditional 1D representations, leading to suboptimal modeling of dependencies. We introduce VISTA, a training-free, unsupervised TSAD algorithm designed to overcome these challenges. VISTA features three core modules: 1) Time Series Decomposition using Seasonal and Trend Decomposition via Loess (STL) to decompose noisy time series into trend, seasonal, and residual components; 2) Temporal Self-Attention, which transforms 1D time series into 2D temporal correlation matrices for richer dependency modeling and anomaly detection; and 3) Multivariate Temporal Aggregation, which uses a pretrained feature extractor to integrate cross-variable information into a unified, memory-efficient representation. VISTA's training-free approach enables rapid deployment and easy hyperparameter tuning, making it suitable for industrial applications. It achieves state-of-the-art performance on five multivariate TSAD benchmarks.
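As a rough illustration of the second module, the sketch below turns a 1D series into a 2D window-to-window similarity matrix using scaled dot products followed by a row-wise softmax. It is not taken from the VISTA paper or its code: the windowing scheme, the function names (`sliding_windows`, `temporal_attention_matrix`), and the `width` and `stride` parameters are assumptions chosen for the example, and the STL decomposition step is omitted.

```python
import math

def sliding_windows(series, width, stride):
    """Split a 1D series into overlapping windows (hypothetical patching step)."""
    return [series[i:i + width] for i in range(0, len(series) - width + 1, stride)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_attention_matrix(series, width=4, stride=2):
    """Return an N x N matrix whose entry (i, j) weights window j for window i.

    Scores are scaled dot products between windows; each row is normalized
    with a softmax, so every row sums to 1.
    """
    windows = sliding_windows(series, width, stride)
    scale = math.sqrt(width)
    scores = [
        [sum(a * b for a, b in zip(query, key)) / scale for key in windows]
        for query in windows
    ]
    return [softmax(row) for row in scores]

# Toy input: a sine wave sampled at 20 points.
series = [math.sin(0.5 * t) for t in range(20)]
attn = temporal_attention_matrix(series)
```

With 20 samples, a width of 4, and a stride of 2, the series yields 9 windows, so `attn` is a 9 x 9 matrix. A window that behaves unlike the rest of the series would show up as a row or column with an unusual weight pattern, which is the kind of 2D structure a 1D view does not expose.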

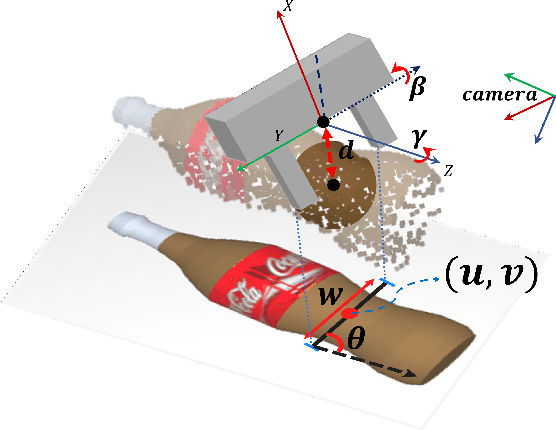

Efficient End-to-End 6-Dof Grasp Detection Framework for Edge Devices with Hierarchical Heatmaps and Feature Propagation

Oct 30, 2024

Abstract:6-DoF grasp detection is critically important for the advancement of intelligent embodied systems, as it provides feasible robot poses for object grasping. Various methods have been proposed to detect 6-DoF grasps through the extraction of 3D geometric features from RGBD or point cloud data. However, most of these approaches encounter challenges during real robot deployment due to their significant computational demands, which can be particularly problematic for mobile robot platforms, especially those reliant on edge computing devices. This paper presents an Efficient End-to-End Grasp Detection Network (E3GNet) for 6-DoF grasp detection utilizing hierarchical heatmap representations. E3GNet effectively identifies high-quality and diverse grasps in cluttered real-world environments. Benefiting from our end-to-end methodology and efficient network design, our approach surpasses previous methods in model inference efficiency and achieves real-time 6-DoF grasp detection on edge devices. Furthermore, real-world experiments validate the effectiveness of our method, achieving a 94% object grasping success rate.

Diffusion-Based Depth Inpainting for Transparent and Reflective Objects

Oct 11, 2024

Abstract:Transparent and reflective objects, which are common in our everyday lives, present a significant challenge to 3D imaging techniques due to their unique visual and optical properties. Faced with these types of objects, RGB-D cameras fail to capture true depth values and accurate spatial information. To address this issue, we propose DITR, a diffusion-based Depth Inpainting framework specifically designed for Transparent and Reflective objects. This network consists of two stages: a Region Proposal stage and a Depth Inpainting stage. DITR dynamically analyzes the optical and geometric depth loss and inpaints the missing depth automatically. Furthermore, comprehensive experimental results demonstrate that DITR is highly effective in depth inpainting tasks of transparent and reflective objects with robust adaptability.

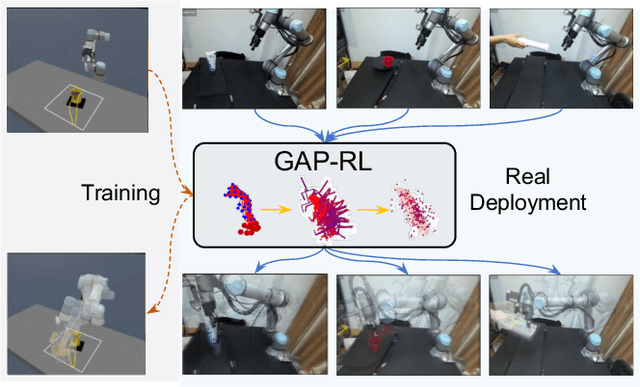

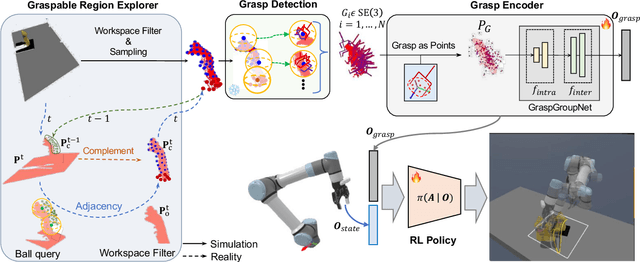

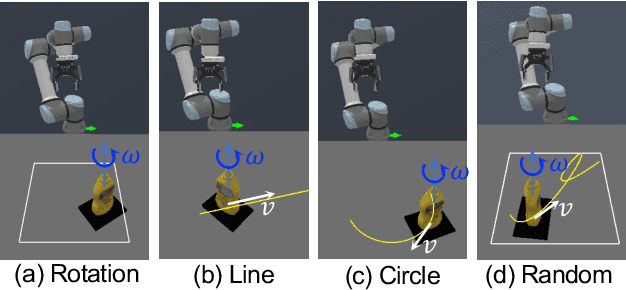

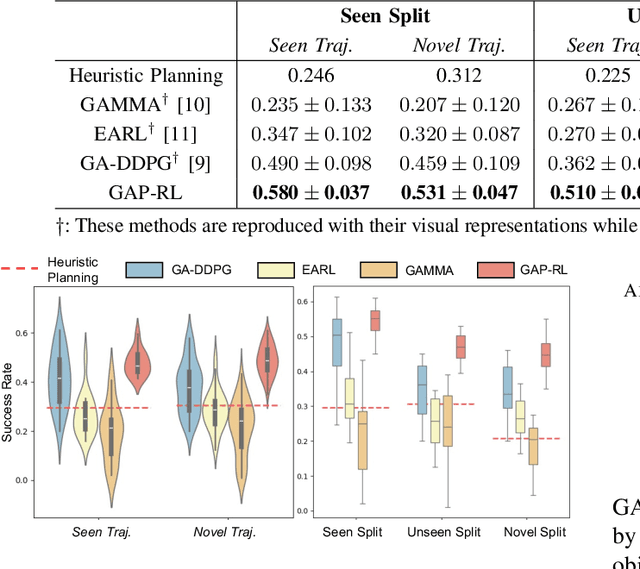

GAP-RL: Grasps As Points for RL Towards Dynamic Object Grasping

Oct 04, 2024

Abstract:Dynamic grasping of moving objects in complex, continuous motion scenarios remains challenging. Reinforcement Learning (RL) has been applied in various robotic manipulation tasks, benefiting from its closed-loop property. However, existing RL-based methods do not fully explore the potential for enhancing visual representations. In this letter, we propose a novel framework called Grasps As Points for RL (GAP-RL) to effectively and reliably grasp moving objects. By implementing a fast region-based grasp detector, we build a Grasp Encoder by transforming 6D grasp poses into Gaussian points and extracting grasp features as a higher-level abstraction than the original object point features. Additionally, we develop a Graspable Region Explorer for real-world deployment, which searches for consistent graspable regions, enabling smoother grasp generation and stable policy execution. To assess the performance fairly, we construct a simulated dynamic grasping benchmark involving objects with various complex motions. Experimental results demonstrate that our method generalizes to novel objects and unseen dynamic motions more effectively than the baselines. Real-world experiments further validate the framework's sim-to-real transferability.

Target-Oriented Object Grasping via Multimodal Human Guidance

Aug 20, 2024

Abstract:In the context of human-robot interaction and collaboration scenarios, robotic grasping still encounters numerous challenges. Traditional grasp detection methods generally analyze the entire scene to predict grasps, leading to redundancy and inefficiency. In this work, we reconsider 6-DoF grasp detection from a target-referenced perspective and propose a Target-Oriented Grasp Network (TOGNet). TOGNet specifically targets local, object-agnostic region patches to predict grasps more efficiently. It integrates seamlessly with multimodal human guidance, including language instructions, pointing gestures, and interactive clicks. Thus our system comprises two primary functional modules: a guidance module that identifies the target object in 3D space and TOGNet, which detects region-focal 6-DoF grasps around the target, facilitating subsequent motion planning. Through 50 target-grasping simulation experiments in cluttered scenes, our system achieves a success rate improvement of about 13.7%. In real-world experiments, we demonstrate that our method excels in various target-oriented grasping scenarios.

Region-aware Grasp Framework with Normalized Grasp Space for 6-DoF Grasping in Cluttered Scene

Jun 03, 2024

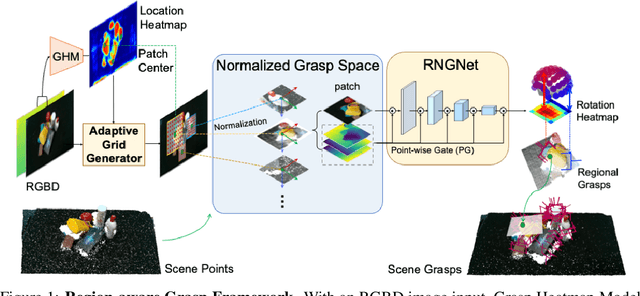

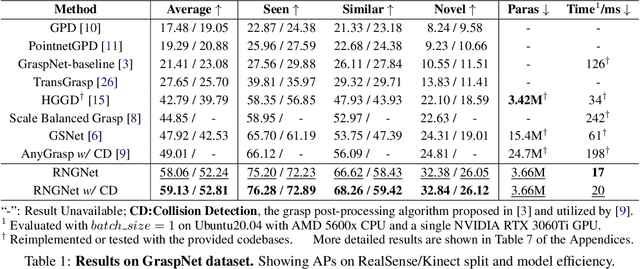

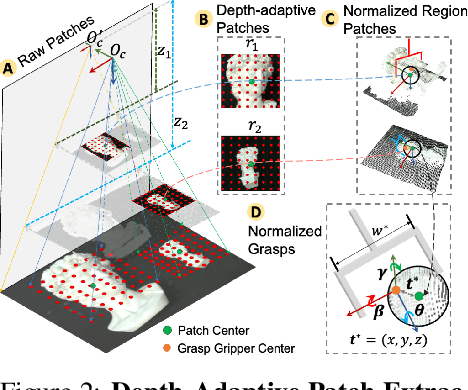

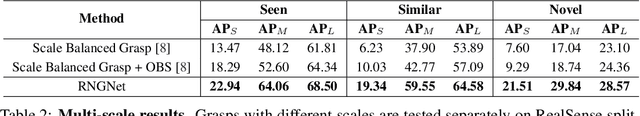

Abstract:Regional geometric information is crucial for determining grasp poses. A series of region-based methods succeed in extracting regional features and enhancing grasp detection quality. However, faced with a cluttered scene with multiple objects and potential collision, the definition of the grasp-relevant region remains inconsistent among methods, and the relationship between grasps and regional spaces remains incompletely investigated. In this paper, from a novel region-aware and grasp-centric viewpoint, we propose Normalized Grasp Space (NGS), unifying the grasp representation within a normalized regional space. The relationship among the grasp widths, region scales, and gripper sizes is considered and empowers our method to generalize to grippers and scenes with different scales. Leveraging the characteristics of the NGS, we find that 2D CNNs are surprisingly underestimated for complicated 6-DoF grasp detection tasks in cluttered scenes and build a highly efficient Region-aware Normalized Grasp Network (RNGNet). Experiments conducted on the public benchmark show that our method achieves the best grasp detection results compared to the previous state-of-the-art methods while attaining a real-time inference speed of approximately 50 FPS. Real-world cluttered scene clearance experiments underscore the effectiveness of our method with a higher success rate than other methods. Further human-to-robot handover and moving object grasping experiments demonstrate the potential of our proposed method for closed-loop grasping in dynamic scenarios.

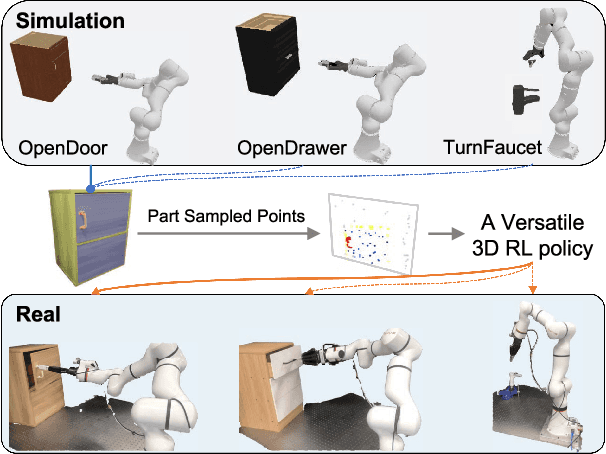

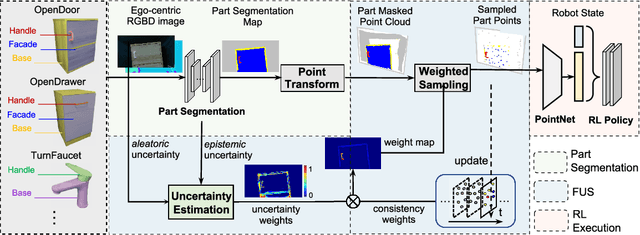

Part-Guided 3D RL for Sim2Real Articulated Object Manipulation

Apr 26, 2024

Abstract:Manipulating unseen articulated objects through visual feedback is a critical but challenging task for real robots. Existing learning-based solutions mainly focus on visual affordance learning or other pre-trained visual models to guide manipulation policies, which face challenges for novel instances in real-world scenarios. In this paper, we propose a novel part-guided 3D RL framework, which can learn to manipulate articulated objects without demonstrations. We combine the strengths of 2D segmentation and 3D RL to improve the efficiency of RL policy training. To improve the stability of the policy on real robots, we design a Frame-consistent Uncertainty-aware Sampling (FUS) strategy to get a condensed and hierarchical 3D representation. In addition, a single versatile RL policy can be trained on multiple articulated object manipulation tasks simultaneously in simulation and shows great generalizability to novel categories and instances. Experimental results demonstrate the effectiveness of our framework in both simulation and real-world settings. Our code is available at https://github.com/THU-VCLab/Part-Guided-3D-RL-for-Sim2Real-Articulated-Object-Manipulation.

TOP-Nav: Legged Navigation Integrating Terrain, Obstacle and Proprioception Estimation

Apr 24, 2024

Abstract:Legged navigation is typically examined within open-world, off-road, and challenging environments. In these scenarios, estimating external disturbances requires a complex synthesis of multi-modal information. This underlines a major limitation in existing works that primarily focus on avoiding obstacles. In this work, we propose TOP-Nav, a novel legged navigation framework that integrates a comprehensive path planner with Terrain awareness, Obstacle avoidance and closed-loop Proprioception. TOP-Nav underscores the synergies between vision and proprioception in both path and motion planning. Within the path planner, we present and integrate a terrain estimator that enables the robot to select waypoints on terrains with higher traversability while effectively avoiding obstacles. At the motion planning level, we not only implement a locomotion controller to track the navigation commands, but also construct a proprioception advisor to provide motion evaluations for the path planner. Based on the closed-loop motion feedback, we make online corrections for the vision-based terrain and obstacle estimations. Consequently, TOP-Nav achieves open-world navigation in which the robot can handle terrains or disturbances beyond the distribution of prior knowledge and overcome constraints imposed by visual conditions. Building upon extensive experiments conducted in both simulation and real-world environments, TOP-Nav demonstrates superior performance in open-world navigation compared to existing methods.

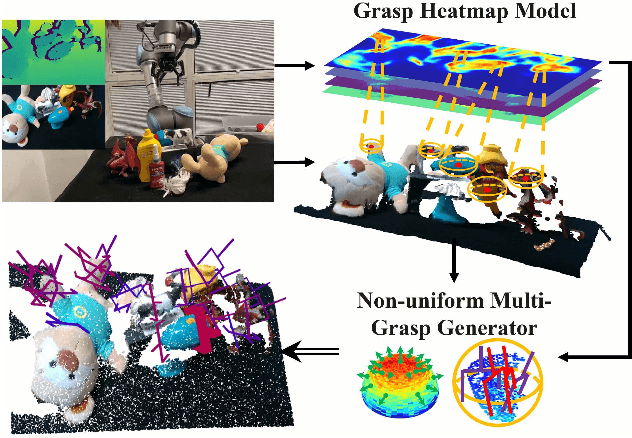

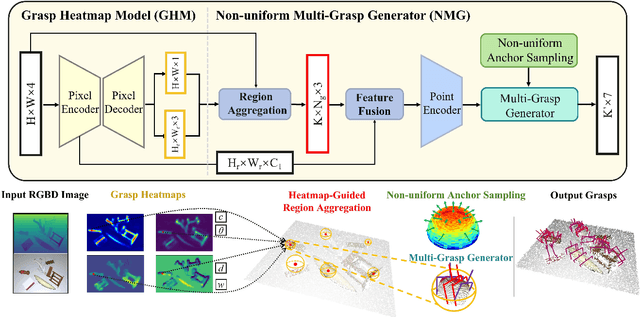

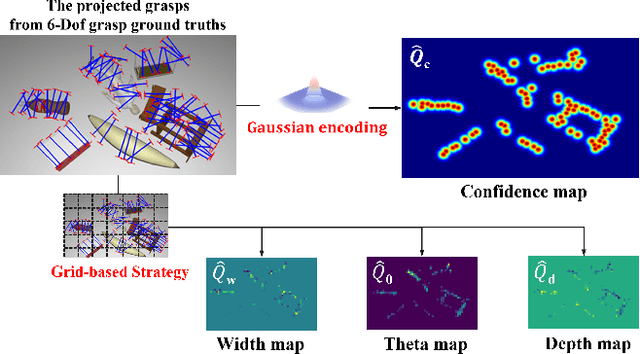

Efficient Heatmap-Guided 6-Dof Grasp Detection in Cluttered Scenes

Mar 27, 2024

Abstract:Fast and robust object grasping in clutter is a crucial component of robotics. Most current works resort to the whole observed point cloud for 6-DoF grasp generation, ignoring the guidance information excavated from global semantics, thus limiting high-quality grasp generation and real-time performance. In this work, we show that the widely used heatmaps are underestimated in the efficiency of 6-DoF grasp generation. Therefore, we propose an effective local grasp generator combined with grasp heatmaps as guidance, which infers in a global-to-local, semantic-to-point manner. Specifically, Gaussian encoding and the grid-based strategy are applied to predict grasp heatmaps as guidance to aggregate local points into graspable regions and provide global semantic information. Further, a novel non-uniform anchor sampling mechanism is designed to improve grasp accuracy and diversity. Benefiting from the high-efficiency encoding in the image space and focusing on points in local graspable regions, our framework can perform high-quality grasp detection in real-time and achieve state-of-the-art results. In addition, real robot experiments demonstrate the effectiveness of our method with a success rate of 94% and a clutter completion rate of 100%. Our code is available at https://github.com/THU-VCLab/HGGD.
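To make the idea of Gaussian-encoded grasp heatmaps concrete, here is a minimal sketch that renders grasp centers on an image grid as Gaussian bumps and then picks the cells above a threshold as candidate graspable regions. It is an illustration only and is not drawn from the HGGD repository: the function names (`gaussian_heatmap`, `top_cells`), the `sigma` and `threshold` values, and the use of a per-cell maximum to merge overlapping bumps are all assumptions.

```python
import math

def gaussian_heatmap(height, width, centers, sigma=1.5):
    """Encode (row, col) grasp centers as Gaussian bumps on a height x width grid.

    Overlapping bumps are merged with a per-cell maximum (an assumed choice),
    so every value stays in [0, 1] and each center cell equals exactly 1.0.
    """
    heatmap = [[0.0] * width for _ in range(height)]
    for cy, cx in centers:
        for y in range(height):
            for x in range(width):
                dist_sq = (y - cy) ** 2 + (x - cx) ** 2
                value = math.exp(-dist_sq / (2.0 * sigma ** 2))
                heatmap[y][x] = max(heatmap[y][x], value)
    return heatmap

def top_cells(heatmap, threshold=0.5):
    """Return the (row, col) cells whose heat is at least the threshold."""
    return [
        (y, x)
        for y, row in enumerate(heatmap)
        for x, value in enumerate(row)
        if value >= threshold
    ]

# Two hypothetical grasp centers on an 8 x 8 grid.
heatmap = gaussian_heatmap(8, 8, [(2, 3), (6, 6)])
regions = top_cells(heatmap)
```

In a pipeline of the kind the abstract describes, cells selected this way would indicate where to gather local points for grasp generation, so that the expensive per-point work is limited to a few regions instead of the whole scene.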
