Huachun Tan

Safe and Stylized Trajectory Planning for Autonomous Driving via Diffusion Model

Feb 04, 2026

Abstract:Achieving safe and stylized trajectory planning in complex real-world scenarios remains a critical challenge for autonomous driving systems. This paper proposes the SDD Planner, a diffusion-based framework designed to effectively reconcile safety constraints with driving styles in real time. The framework integrates two core modules: a Multi-Source Style-Aware Encoder, which employs distance-sensitive attention to fuse dynamic agent data and environmental contexts for heterogeneous safety-style perception; and a Style-Guided Dynamic Trajectory Generator, which adaptively modulates priority weights within the diffusion denoising process to generate user-preferred yet safe trajectories. Extensive experiments demonstrate that SDD Planner achieves state-of-the-art performance. On the StyleDrive benchmark, it improves the SM-PDMS metric by 3.9% over WoTE, the strongest baseline. Furthermore, on the NuPlan Test14 and Test14-hard benchmarks, SDD Planner ranks first with overall scores of 91.76 and 80.32, respectively, outperforming leading methods such as PLUTO. Real-vehicle closed-loop tests further confirm that SDD Planner maintains high safety standards while aligning with preset driving styles, validating its practical applicability for real-world deployment.

Improving speech recognition models with small samples for air traffic control systems

Feb 16, 2021

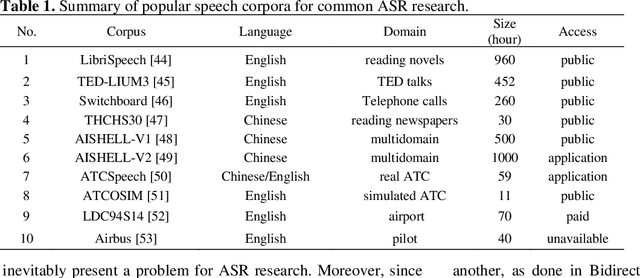

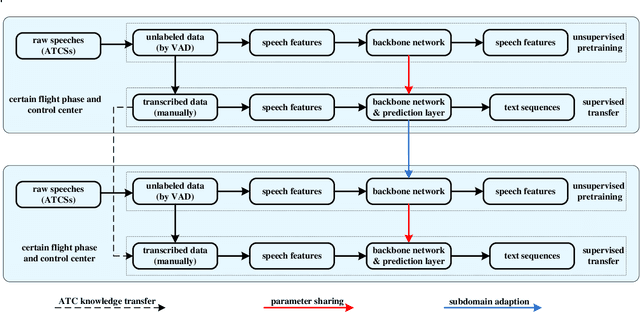

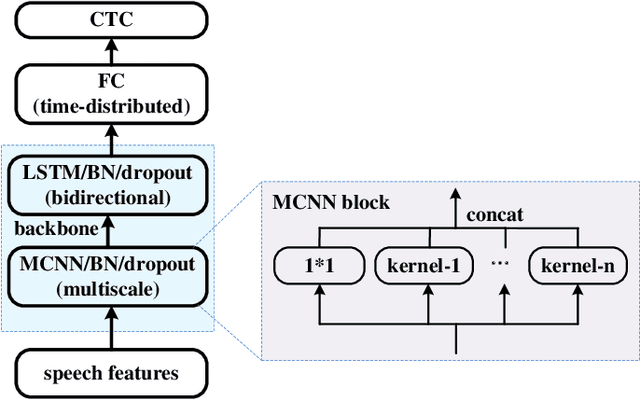

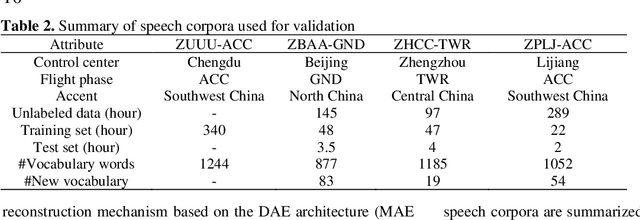

Abstract:In the domain of air traffic control (ATC) systems, efforts to train a practical automatic speech recognition (ASR) model always face the problem of small training samples, since the collection and annotation of speech samples are expert- and domain-dependent tasks. In this work, a novel training approach based on pretraining and transfer learning is proposed to address this issue, and an improved end-to-end deep learning model is developed to address the specific challenges of ASR in the ATC domain. An unsupervised pretraining strategy is first proposed to learn speech representations from unlabeled samples for a certain dataset. Specifically, a masking strategy is applied to improve the diversity of the samples without losing their general patterns. Subsequently, transfer learning is applied to fine-tune a pretrained or otherwise optimized baseline model to finally achieve the supervised ASR task. By virtue of the common terminology used in the ATC domain, the transfer learning task can be regarded as a sub-domain adaptation task, in which the transferred model is optimized using a joint corpus consisting of baseline samples and new transcribed samples from the target dataset. This joint corpus construction strategy enriches the size and diversity of the training samples, which is important for addressing the issue of the small transcribed corpus. In addition, speed perturbation is applied to augment the new transcribed samples to further improve the quality of the speech corpus. Three real ATC datasets are used to validate the proposed ASR model and training strategies. The experimental results demonstrate that the ASR performance is significantly improved on all three datasets, with an absolute character error rate only one-third of that achieved through supervised training. The applicability of the proposed strategies to other ASR approaches is also validated.
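The speed perturbation step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the perturbation factors or the resampling method, so the factors 0.9, 1.0 and 1.1 and the linear-interpolation resampler below are assumptions chosen for illustration, and `speed_perturb` and `augment` are hypothetical helper names.

```python
import numpy as np

def speed_perturb(waveform: np.ndarray, factor: float) -> np.ndarray:
    """Resample a 1-D waveform so that it plays `factor` times faster.

    Linear interpolation is used here for simplicity (an assumption);
    a production pipeline would normally use a band-limited resampler.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_in = len(waveform)
    n_out = max(1, int(round(n_in / factor)))
    # Positions in the original signal from which output samples are read.
    src_positions = np.linspace(0.0, n_in - 1, num=n_out)
    return np.interp(src_positions, np.arange(n_in), waveform)

def augment(waveform: np.ndarray, factors=(0.9, 1.0, 1.1)) -> list:
    """Return one perturbed copy of the waveform per speed factor."""
    return [speed_perturb(waveform, f) for f in factors]
```

Each transcribed utterance keeps its original transcript, so applying `augment` with three factors triples the number of labeled samples without any new annotation effort.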

Non-recurrent Traffic Congestion Detection with a Coupled Scalable Bayesian Robust Tensor Factorization Model

May 10, 2020

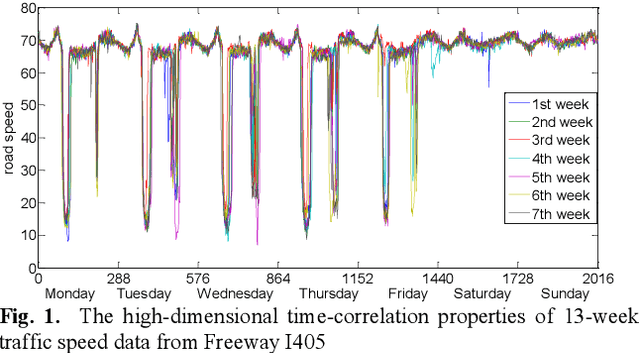

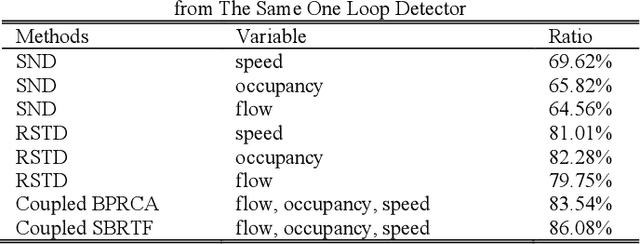

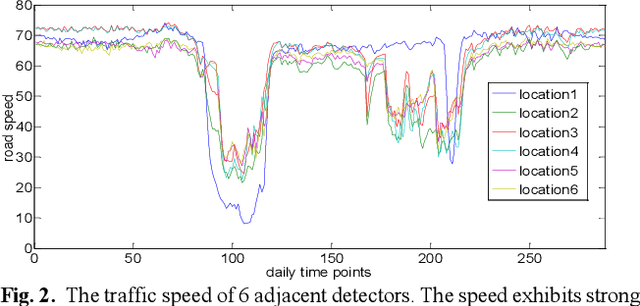

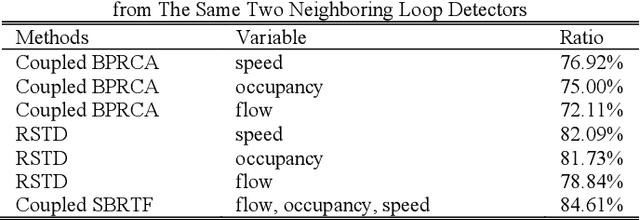

Abstract:Non-recurrent traffic congestion (NRTC) usually brings unexpected delays to commuters. Hence, it is critical to accurately detect and recognize NRTC in real time. The advancement of road traffic detectors and loop detectors provides researchers with large-scale multivariable temporal-spatial traffic data, which allows in-depth research on NRTC to be conducted. However, it remains a challenging task to construct an analytical framework through which the natural spatial-temporal structural properties of multivariable traffic information can be effectively represented and exploited to better understand and detect NRTC. In this paper, we present a novel analytical training-free framework based on coupled scalable Bayesian robust tensor factorization (Coupled SBRTF). The framework can couple multivariable traffic data, including traffic flow, road speed, and occupancy, through sharing a similar or the same sparse structure. It also naturally captures the high-dimensional spatial-temporal structural properties of traffic data by tensor factorization. With its entries revealing the distribution and magnitude of NRTC, the shared sparse structure of the framework encompasses abundant information about NRTC. Meanwhile, the low-rank part of the framework expresses the distribution of the general expected traffic condition as an auxiliary product. Experimental results on real-world traffic data show that the proposed method outperforms coupled Bayesian robust principal component analysis (coupled BRPCA), the rank sparsity tensor decomposition (RSTD), and standard normal deviates (SND) in detecting NRTC. The proposed method performs even better when only weekday traffic data are utilized, and hence can provide more precise estimation of NRTC for daily commuters.

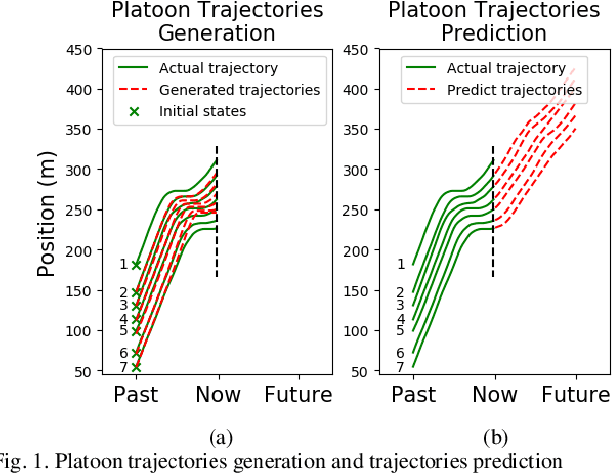

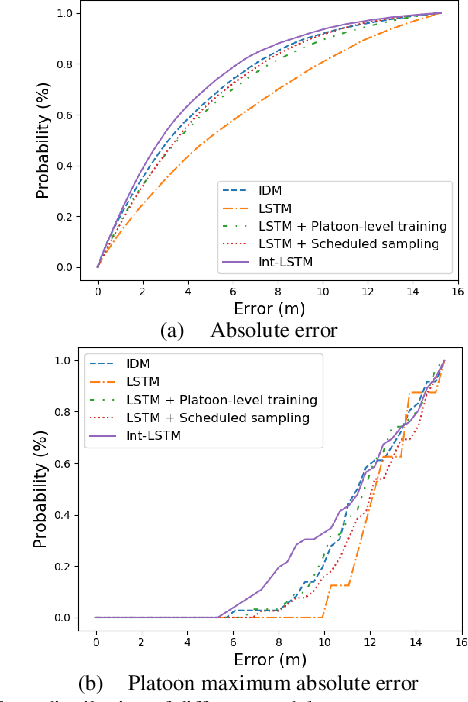

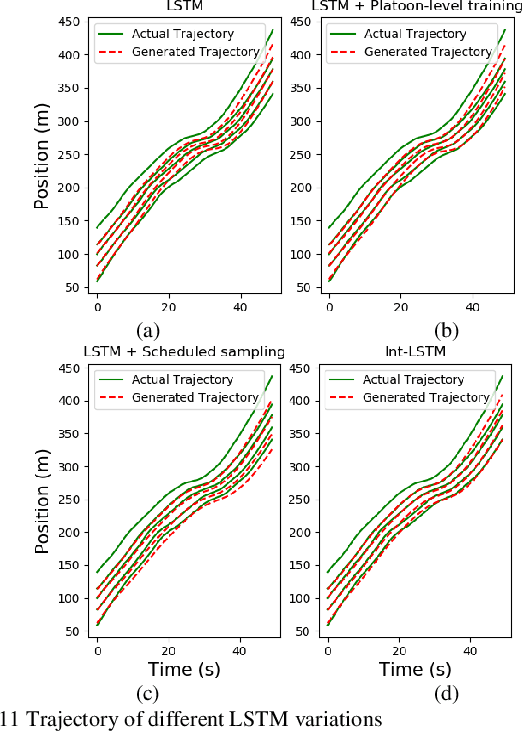

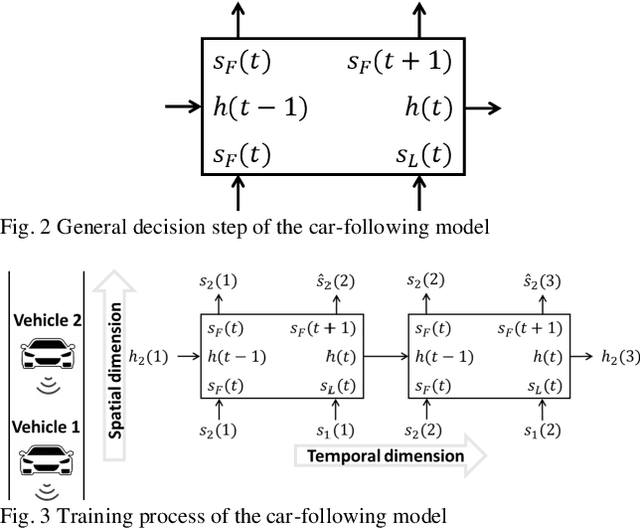

Platoon trajectories generation: A unidirectional interconnected LSTM-based car following model

Oct 25, 2019

Abstract:Car following models have been widely applied and have made remarkable achievements in traffic engineering. However, the traffic micro-simulation accuracy of car following models at the platoon level, especially during traffic oscillations, still needs to be enhanced. Rather than using traditional individual car following models, we propose a new trajectory generation approach to generate platoon level trajectories given the first leading vehicle's trajectory. In this paper, we discuss the temporal and spatial error propagation issue of the traditional approach using a car following block diagram representation. Based on the analysis, we point out that the error comes from the training method and the model structure. To fix this, we make two improvements to the traditional LSTM based car following model. We utilize a scheduled sampling technique during the training process to address error propagation in the temporal dimension. Furthermore, we develop a unidirectional interconnected LSTM model structure to extract trajectory features from the perspective of the platoon. As indicated by systematic empirical experiments, the proposed structure can efficiently reduce the temporal and spatial error propagation. Compared with the traditional LSTM based car following model, the proposed model has almost 40% less error. The findings will benefit the design and analysis of micro-simulation for platoon level car following models.
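The scheduled sampling idea referred to in the abstract can be sketched as follows. During training, each step's input is either the ground-truth value or the model's own previous prediction, and the probability of using the ground truth is lowered over time so that the model gradually learns to cope with its own errors. The exponential decay schedule, the `decay` value, and the function names `teacher_forcing_prob` and `rollout` are assumptions for illustration; the abstract does not specify them, and `step_fn` stands in for one step of any car following model.

```python
import random

def teacher_forcing_prob(epoch: int, decay: float = 0.9) -> float:
    """Probability of feeding the ground truth at a given epoch.

    Exponential decay is an assumed schedule, not one taken from the paper.
    """
    return decay ** epoch

def rollout(step_fn, ground_truth, p_truth, rng):
    """Roll a one-step model forward along a trajectory.

    At every step the next input is the ground truth with probability
    `p_truth`, otherwise the model's own prediction is fed back in.
    """
    preds = []
    prev = ground_truth[0]
    for t in range(1, len(ground_truth)):
        pred = step_fn(prev)
        preds.append(pred)
        prev = ground_truth[t] if rng.random() < p_truth else pred
    return preds

# Usage: a toy "model" that adds 1.0 to its input.
rng = random.Random(0)
truth = [0.0, 2.0, 4.0, 6.0]
fully_guided = rollout(lambda x: x + 1.0, truth, 1.0, rng)
free_running = rollout(lambda x: x + 1.0, truth, 0.0, rng)
```

With `p_truth = 1.0` the rollout is pure teacher forcing, and with `p_truth = 0.0` it is a free-running simulation in which errors accumulate over time, which is the temporal error propagation the abstract describes.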

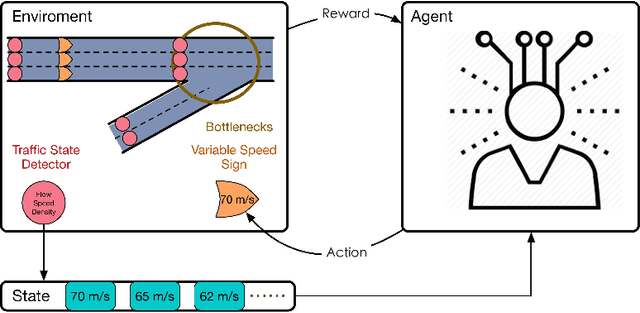

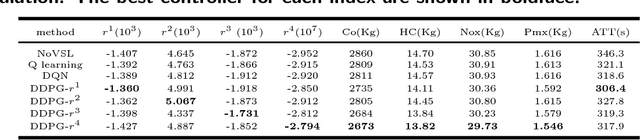

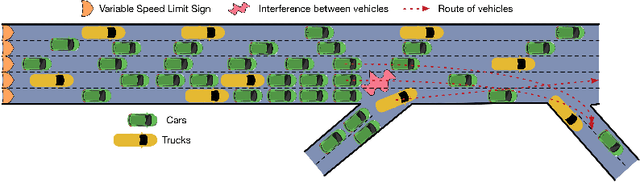

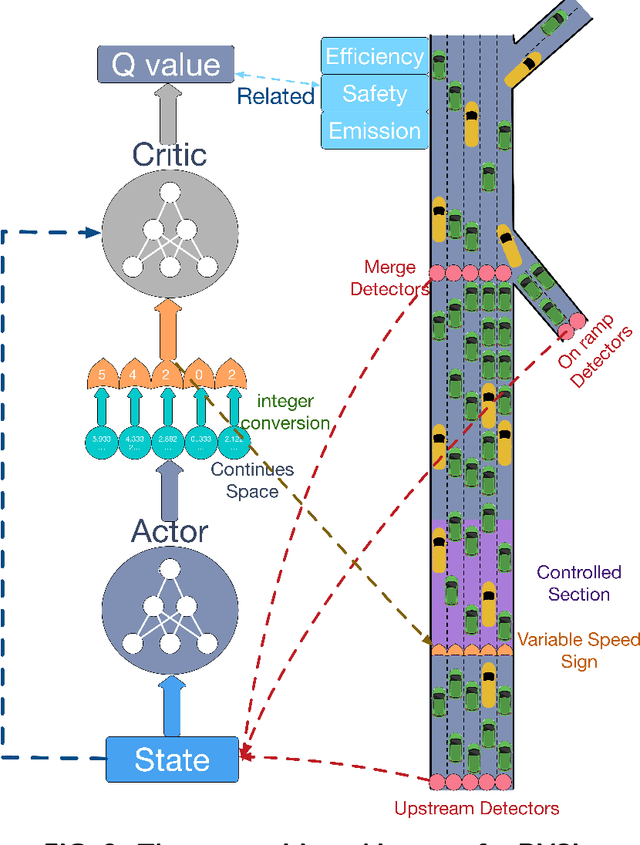

Differential Variable Speed Limits Control for Freeway Recurrent Bottlenecks via Deep Reinforcement learning

Oct 25, 2018

Abstract:Variable speed limits (VSL) control is a flexible way to improve traffic conditions, increase safety and reduce emissions. There is an emerging trend of using reinforcement learning techniques for VSL control, and recent studies have shown promising results. Currently, deep learning is enabling reinforcement learning to develop autonomous control agents for problems that were previously intractable. In this paper, we propose a more effective deep reinforcement learning (DRL) model for differential variable speed limits (DVSL) control, in which dynamic and different speed limits among lanes can be imposed. The proposed DRL models use a novel actor-critic architecture which can learn a large number of discrete speed limits in a continuous action space. Different reward signals, e.g. total travel time, bottleneck speed, emergency braking, and vehicular emission, are used to train the DVSL controller, and a comparison between these reward signals is conducted. We test the proposed DRL-based DVSL controllers on a simulated freeway recurrent bottleneck. Results show that efficiency, safety and emissions can be improved by the proposed method. We also show some interesting findings through the visualization of the control policies generated by the DRL models.
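One simple way to obtain discrete per-lane speed limits from a continuous actor output is to scale and round each action component to the nearest allowed value. The sketch below illustrates that general idea only; the abstract does not describe how the paper's architecture performs the mapping, and the set of allowed limits, the number of lanes, and the name `to_speed_limits` are all hypothetical.

```python
import numpy as np

# Hypothetical set of allowed speed limits (mph); not taken from the paper.
SPEED_LIMITS_MPH = np.array([40, 45, 50, 55, 60, 65, 70])

def to_speed_limits(action: np.ndarray, limits: np.ndarray = SPEED_LIMITS_MPH) -> np.ndarray:
    """Map continuous actor outputs in [-1, 1], one per lane, to discrete limits."""
    a = np.clip(action, -1.0, 1.0)
    # Rescale [-1, 1] to [0, len(limits) - 1] and round to the nearest index.
    idx = np.rint((a + 1.0) / 2.0 * (len(limits) - 1)).astype(int)
    return limits[idx]

# Usage: a three-lane action vector yields one speed limit per lane.
lane_limits = to_speed_limits(np.array([-1.0, 0.0, 1.0]))
```

Under this scheme the action dimension equals the number of lanes, so the actor's output size grows linearly with the lane count even though the number of joint discrete limit combinations grows exponentially.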

Variational Deep Embedding: An Unsupervised and Generative Approach to Clustering

Jun 28, 2017

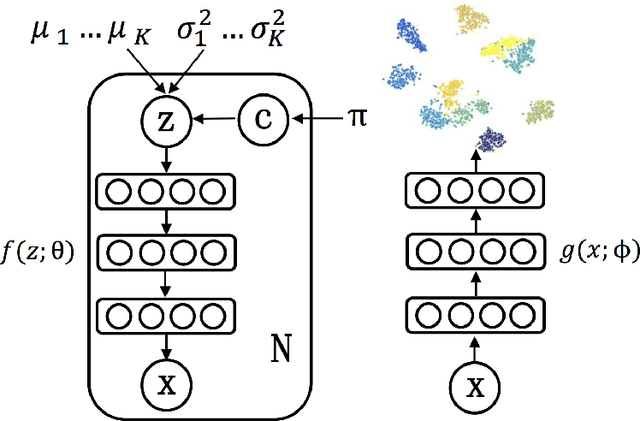

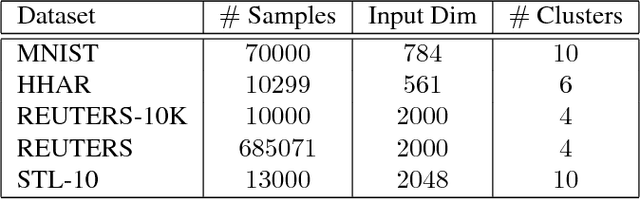

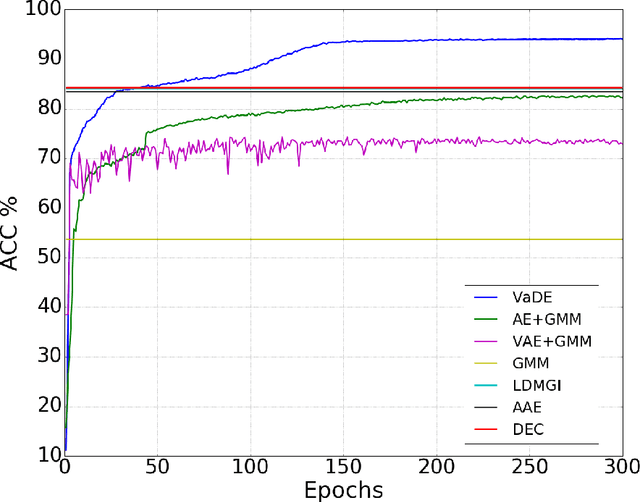

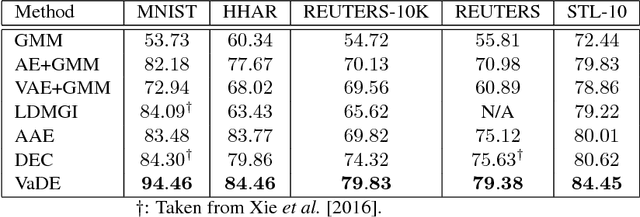

Abstract:Clustering is among the most fundamental tasks in computer vision and machine learning. In this paper, we propose Variational Deep Embedding (VaDE), a novel unsupervised generative clustering approach within the framework of Variational Auto-Encoder (VAE). Specifically, VaDE models the data generative procedure with a Gaussian Mixture Model (GMM) and a deep neural network (DNN): 1) the GMM picks a cluster; 2) from which a latent embedding is generated; 3) then the DNN decodes the latent embedding into observables. Inference in VaDE is done in a variational way: a different DNN is used to encode observables to latent embeddings, so that the evidence lower bound (ELBO) can be optimized using the Stochastic Gradient Variational Bayes (SGVB) estimator and the reparameterization trick. Quantitative comparisons with strong baselines are included in this paper, and experimental results show that VaDE significantly outperforms the state-of-the-art clustering methods on 4 benchmarks from various modalities. Moreover, by VaDE's generative nature, we show its capability of generating highly realistic samples for any specified cluster, without using supervised information during training. Lastly, VaDE is a flexible and extensible framework for unsupervised generative clustering: more general mixture models than GMM can be easily plugged in.
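The three-step generative procedure listed in the abstract can be written out as a short sketch. The decoder here is a toy single-layer network with random weights standing in for the trained DNN, and the names `make_toy_decoder` and `sample_vade`, the diagonal-covariance parameterization, and all dimensions are assumptions made for illustration.

```python
import numpy as np

def make_toy_decoder(latent_dim: int, data_dim: int, rng: np.random.Generator):
    """Return a stand-in decoder: one random linear layer followed by tanh."""
    W = rng.normal(size=(data_dim, latent_dim))
    b = np.zeros(data_dim)
    return lambda z: np.tanh(W @ z + b)

def sample_vade(pi, mu, sigma, decoder, rng):
    """Draw one sample following the generative story in the abstract.

    pi:    (K,) mixture weights; mu, sigma: (K, latent_dim) per-cluster
    means and standard deviations (diagonal covariance is assumed here).
    """
    c = rng.choice(len(pi), p=pi)      # 1) the GMM picks a cluster
    z = rng.normal(mu[c], sigma[c])    # 2) a latent embedding is drawn from it
    x = decoder(z)                     # 3) the decoder maps it to an observable
    return c, z, x

# Usage with two clusters in a 2-D latent space and 4-D observables.
rng = np.random.default_rng(0)
pi = np.array([0.0, 1.0])
mu = np.array([[0.0, 0.0], [5.0, 5.0]])
sigma = np.full((2, 2), 0.1)
decoder = make_toy_decoder(2, 4, rng)
c, z, x = sample_vade(pi, mu, sigma, decoder, rng)
```

Fixing `c` instead of sampling it corresponds to generating samples for a specified cluster, as the abstract describes.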

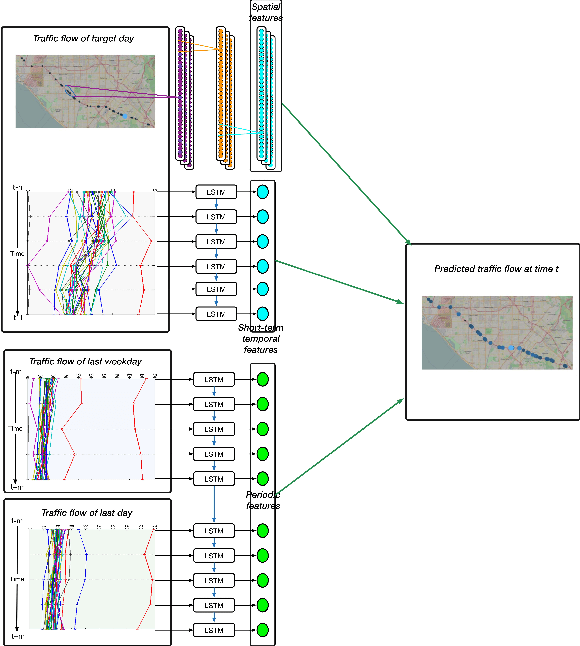

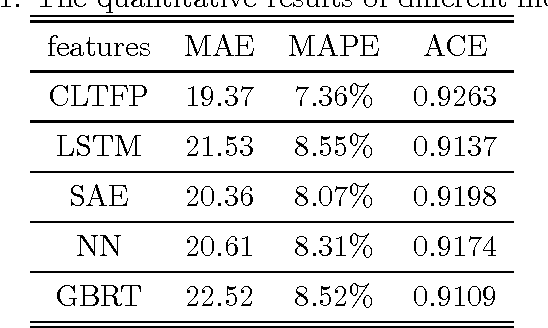

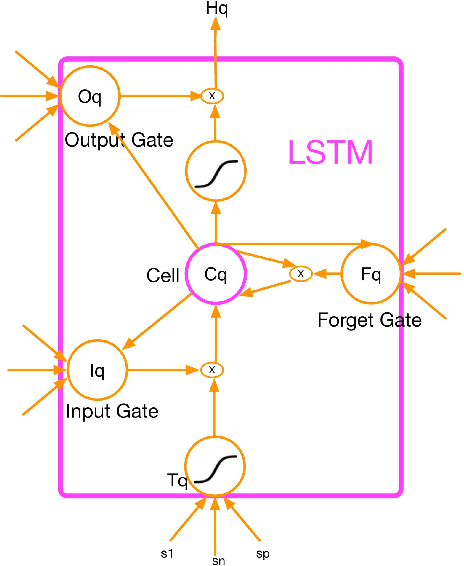

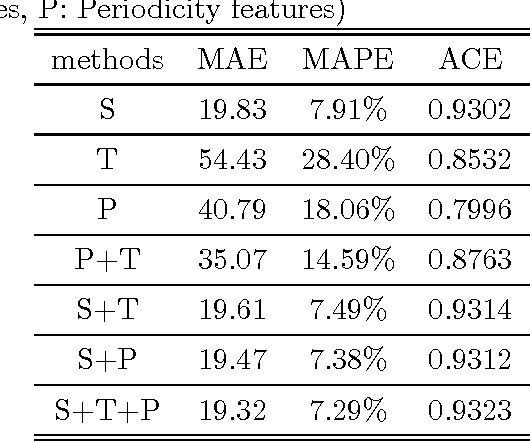

Short-term traffic flow forecasting with spatial-temporal correlation in a hybrid deep learning framework

Dec 03, 2016

Abstract:Deep learning approaches have reached celebrity status in the artificial intelligence field, and their success has mostly relied on Convolutional Networks (CNN) and Recurrent Networks (RNN). By exploiting fundamental spatial properties of images and videos, the CNN always achieves dominant performance on visual tasks. RNNs, especially long short-term memory (LSTM) methods, can successfully characterize temporal correlation and thus exhibit superior capability for time series tasks. Traffic flow data have plentiful characteristics in both the time and space domains. However, applications of CNN and LSTM approaches to traffic flow are limited. In this paper, we propose a novel deep architecture that combines CNN and LSTM to forecast future traffic flow (CLTFP). A 1-dimensional CNN is exploited to capture spatial features of traffic flow, and two LSTMs are utilized to mine the short-term variability and periodicities of traffic flow. Given those meaningful features, feature-level fusion is performed to achieve short-term forecasting. The proposed CLTFP is compared with other popular forecasting methods on an open dataset. Experimental results indicate that the CLTFP has considerable advantages in traffic flow forecasting. In addition, the proposed CLTFP is analyzed from the view of Granger Causality, and several interesting properties of CLTFP are discovered and discussed.
