Differential Variable Speed Limits Control for Freeway Recurrent Bottlenecks via Deep Reinforcement Learning


Oct 25, 2018

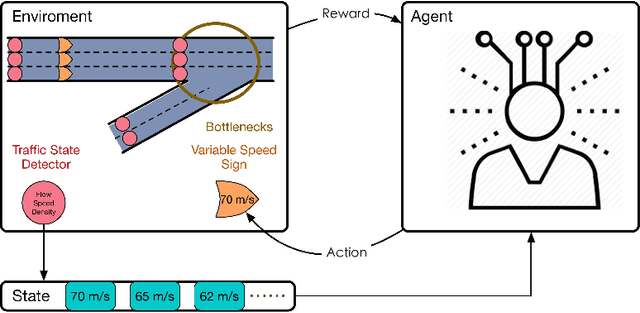

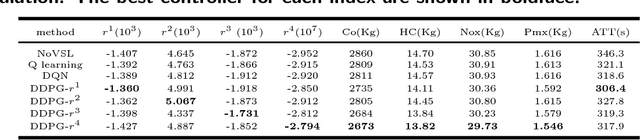

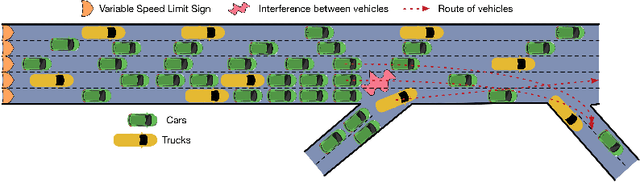

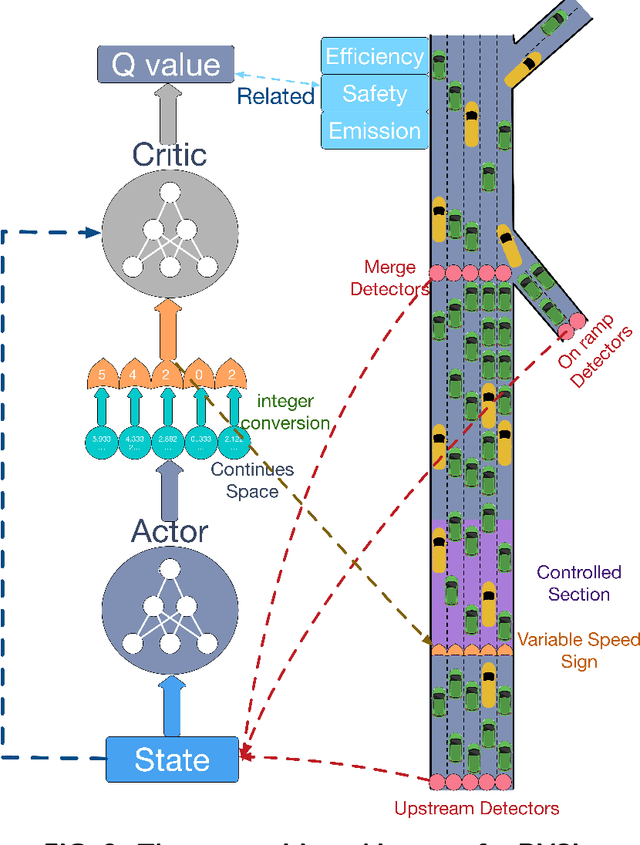

Variable speed limits (VSL) control is a flexible way to improve traffic conditions, increase safety, and reduce emissions. There is an emerging trend of using reinforcement learning techniques for VSL control, and recent studies have shown promising results. Currently, deep learning is enabling reinforcement learning to develop autonomous control agents for problems that were previously intractable. In this paper, we propose a more effective deep reinforcement learning (DRL) model for differential variable speed limits (DVSL) control, in which dynamic and distinct speed limits can be imposed on individual lanes. The proposed DRL models use a novel actor-critic architecture that can learn a large number of discrete speed limits in a continuous action space. Different reward signals, e.g., total travel time, bottleneck speed, emergency braking, and vehicular emissions, are used to train the DVSL controller, and these reward signals are compared. We test the proposed DRL-based DVSL controllers on a simulated freeway recurrent bottleneck. Results show that efficiency, safety, and emissions can be improved by the proposed method. We also show some interesting findings through visualization of the control policies generated by the DRL models.
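To make the idea of learning discrete speed limits in a continuous action space more concrete, the following is a minimal sketch of one possible mapping step, not the paper's implementation. It assumes the actor emits one value per lane in [-1, 1] and snaps each value to the nearest entry of an allowed set. The function name `to_discrete_limits`, the list `ALLOWED_LIMITS`, and the specific speed values are hypothetical; the abstract does not state them.

```python
from typing import List, Sequence

# Hypothetical set of allowed speed limits (mph); the abstract does not list the real values.
ALLOWED_LIMITS: List[int] = [40, 45, 50, 55, 60, 65, 70, 75, 80]


def to_discrete_limits(
    actions: Sequence[float],
    allowed: Sequence[int] = ALLOWED_LIMITS,
) -> List[int]:
    """Map each continuous per-lane action in [-1, 1] to the nearest allowed limit."""
    limits = []
    for a in actions:
        # Clip the raw actor output to the assumed action range.
        a = max(-1.0, min(1.0, a))
        # Rescale [-1, 1] to an index in [0, len(allowed) - 1] and round to the nearest slot.
        idx = int(round((a + 1.0) / 2.0 * (len(allowed) - 1)))
        limits.append(allowed[idx])
    return limits


# One continuous action per lane yields one discrete speed limit per lane.
per_lane_limits = to_discrete_limits([-1.0, 0.0, 1.0])
```

Under these assumptions, the actor can be trained with a continuous-action method while the simulator still receives a valid discrete limit for each lane, which is one way differing limits across lanes could arise.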
