Hongbin Zhang

Information-Theoretic Multi-Model Fusion for Target-Oriented Adaptive Sampling in Materials Design

Feb 03, 2026

Abstract:Target-oriented discovery under limited evaluation budgets requires making reliable progress in high-dimensional, heterogeneous design spaces where each new measurement, whether an experiment or a high-fidelity simulation, is costly. We present an information-theoretic framework for target-oriented adaptive sampling that reframes optimization as trajectory discovery: instead of approximating the full response surface, the method maintains and refines a low-entropy information state that concentrates search on target-relevant directions. The approach couples data, model beliefs, and physics/structure priors through dimension-aware information budgeting, adaptive bootstrapped distillation over a heterogeneous surrogate reservoir, and structure-aware candidate manifold analysis with Kalman-inspired multi-model fusion to balance consensus-driven exploitation and disagreement-driven exploration. Evaluated under a single unified protocol without dataset-specific tuning, the framework improves sample efficiency and reliability across 14 single- and multi-objective materials design tasks spanning candidate pools from $600$ to $4 \times 10^6$ and feature dimensions from $10$ to $10^3$, typically reaching top-performing regions within 100 evaluations. Complementary 20-dimensional synthetic benchmarks (Ackley, Rastrigin, Schwefel) further demonstrate robustness to rugged and multimodal landscapes.
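The abstract names Kalman-inspired multi-model fusion that balances consensus-driven exploitation against disagreement-driven exploration. The sketch below illustrates that idea under stated assumptions and is not the authors' algorithm: each surrogate is assumed to report a mean and a variance for a candidate, the predictions are combined by inverse-variance (precision) weighting as in a scalar Kalman update, and the hypothetical score rewards a fused mean close to the target plus a `beta`-weighted exploration bonus from fused uncertainty and inter-model disagreement. The names `fuse`, `target_score`, `select_next`, and `beta` are illustrative.

```python
import math
from typing import Dict, Sequence, Tuple

def fuse(means: Sequence[float], variances: Sequence[float]) -> Tuple[float, float, float]:
    """Precision-weighted (Kalman-style) fusion of per-surrogate predictions.

    Returns (fused_mean, fused_variance, disagreement)."""
    if not means or len(means) != len(variances):
        raise ValueError("need one variance per mean")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]
    total = sum(precisions)
    fused_mean = sum(p * m for p, m in zip(precisions, means)) / total
    fused_var = 1.0 / total  # combined uncertainty shrinks as models are added
    # Precision-weighted spread of the model means: large when surrogates disagree
    disagreement = sum(p * (m - fused_mean) ** 2 for p, m in zip(precisions, means)) / total
    return fused_mean, fused_var, disagreement

def target_score(means, variances, target: float, beta: float = 1.0) -> float:
    """Hypothetical acquisition: consensus term rewards closeness to the target,
    the beta term rewards fused uncertainty plus disagreement (exploration)."""
    mu, var, dis = fuse(means, variances)
    return -abs(mu - target) + beta * math.sqrt(var + dis)

def select_next(candidates: Dict[str, Tuple[Sequence[float], Sequence[float]]],
                target: float, beta: float = 1.0) -> str:
    """Pick the candidate whose fused prediction scores highest."""
    return max(candidates, key=lambda c: target_score(*candidates[c], target, beta))
```

With `beta = 0` the rule is purely exploitative; raising `beta` shifts evaluations toward candidates where the surrogates disagree, which is the trade-off the abstract describes.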

Exploring the Feasibility of End-to-End Large Language Model as a Compiler

Nov 06, 2025

Abstract:In recent years, end-to-end Large Language Model (LLM) technology has shown substantial advantages across various domains. Compilers, as critical system software and infrastructure, are responsible for transforming source code into target code. While LLMs have been leveraged to assist in compiler development and maintenance, their potential to act as end-to-end compilers remains largely unexplored. This paper explores the feasibility of LLM as a Compiler (LaaC) and its future directions. We designed the CompilerEval dataset and framework specifically to evaluate the capabilities of mainstream LLMs in source code comprehension and assembly code generation. In the evaluation, we analyzed various errors, explored multiple methods to improve LLM-generated code, and evaluated cross-platform compilation capabilities. Experimental results demonstrate that LLMs exhibit basic capabilities as compilers but currently achieve low compilation success rates. By optimizing prompts, scaling up the model, and incorporating reasoning methods, the quality of assembly code generated by LLMs can be significantly enhanced. Based on these findings, we maintain an optimistic outlook for LaaC and propose practical architectural designs and future research directions. We believe that with targeted training, knowledge-rich prompts, and specialized infrastructure, LaaC has the potential to generate high-quality assembly code and drive a paradigm shift in the field of compilation.

NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images: Methods and Results

Apr 19, 2025

Abstract:This paper reviews the NTIRE 2025 Challenge on Day and Night Raindrop Removal for Dual-Focused Images. The challenge received a wide range of impressive solutions, which were developed and evaluated using our collected real-world Raindrop Clarity dataset. Unlike existing deraining datasets, Raindrop Clarity is more diverse and challenging in degradation types and content, covering day raindrop-focused, day background-focused, night raindrop-focused, and night background-focused degradations. The dataset is divided into three subsets for the competition: 14,139 images for training, 240 images for validation, and 731 images for testing. The primary objective of this challenge is to establish a new and powerful benchmark for removing raindrops under varying lighting and focus conditions. The competition attracted 361 participants, and 32 teams submitted valid solutions and fact sheets for the final testing phase. These submissions achieved state-of-the-art (SOTA) performance on the Raindrop Clarity dataset. The project can be found at https://lixinustc.github.io/CVPR-NTIRE2025-RainDrop-Competition.github.io/.

Exploring Translation Mechanism of Large Language Models

Feb 17, 2025

Abstract:Large language models (LLMs) have achieved remarkable success in multilingual translation tasks. However, the inherent translation mechanisms of LLMs remain poorly understood, largely due to their sophisticated architectures and vast parameter scales. In response, this study explores the translation mechanism of LLMs from the perspective of computational components (e.g., attention heads and MLPs). Path patching is used to explore causal relationships between components, detecting those crucial for translation and then analyzing their behavioral patterns in human-interpretable terms. Comprehensive analysis reveals that translation is predominantly facilitated by a sparse subset of specialized attention heads (less than 5%), which extract source-language, indicator, and positional features. MLPs subsequently integrate and process these features by transitioning toward English-centric latent representations. Notably, building on these findings, targeted fine-tuning of only 64 heads achieves translation improvements comparable to full-parameter tuning while preserving general capabilities.
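The core patching idea behind this analysis can be illustrated on a toy model. The sketch below is a simplified activation-patching example, not path patching on a real LLM: it assumes a hypothetical additive model whose output is the sum of named component contributions, replaces one component's activation with its value on a corrupted input, and ranks components by how much the clean output drops. The component names (`h0`, `h1`, `mlp`) and the helpers `run`, `patching_effects`, and `top_k` are illustrative.

```python
from typing import Callable, Dict, List, Optional, Sequence

# A "component" maps the input to a scalar contribution to the output
# (standing in for, e.g., one head's contribution to the correct-token logit).
Component = Callable[[Sequence[float]], float]

def run(components: Dict[str, Component], x: Sequence[float],
        patched: Optional[Dict[str, float]] = None) -> float:
    """Toy additive model; components listed in `patched` use the stored activation."""
    patched = patched or {}
    return sum(patched[name] if name in patched else f(x) for name, f in components.items())

def patching_effects(components: Dict[str, Component], clean: Sequence[float],
                     corrupt: Sequence[float]) -> Dict[str, float]:
    """Drop in the clean output when one component's activation is replaced by
    its value on the corrupted input (a larger drop means a more important component)."""
    clean_out = run(components, clean)
    return {name: clean_out - run(components, clean, {name: f(corrupt)})
            for name, f in components.items()}

def top_k(effects: Dict[str, float], k: int) -> List[str]:
    """Names of the k components with the largest patching effect."""
    return sorted(effects, key=effects.get, reverse=True)[:k]
```

In a real transformer, components interact nonlinearly, which is why path patching restricts the intervention to specific paths; the additive toy only conveys the "swap in a corrupted activation and measure the change" step. Selecting a sparse top-k set in this way mirrors, in spirit, the choice of a small number of heads to fine-tune.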

LinguaLIFT: An Effective Two-stage Instruction Tuning Framework for Low-Resource Language Tasks

Dec 17, 2024

Abstract:Large language models (LLMs) have demonstrated impressive multilingual understanding and reasoning capabilities, driven by extensive multilingual pre-training corpora and instruction fine-tuning data. However, a performance gap persists between high-resource and low-resource language tasks due to language imbalance in the pre-training corpus, even when more low-resource data is used during fine-tuning. To alleviate this issue, we propose LinguaLIFT, a two-stage instruction tuning framework for advancing low-resource language tasks. In the first stage, an additional language alignment layer is integrated into the LLM to adapt a pre-trained multilingual encoder, enhancing multilingual alignment through code-switched fine-tuning. The second stage fine-tunes the LLM with English-only instruction data while freezing the language alignment layer, allowing the LLM to transfer task-specific capabilities from English to low-resource language tasks. Additionally, we introduce the Multilingual Math World Problem (MMWP) benchmark, which spans 21 low-resource, 17 medium-resource, and 10 high-resource languages, enabling comprehensive evaluation of multilingual reasoning. Experimental results show that LinguaLIFT outperforms several competitive baselines on MMWP and other widely used benchmarks.

Paying More Attention to Source Context: Mitigating Unfaithful Translations from Large Language Model

Jun 11, 2024

Abstract:Large language models (LLMs) have showcased impressive multilingual machine translation ability. However, unlike encoder-decoder models, decoder-only LLMs lack an explicit alignment between source and target contexts. Analyzing contribution scores during generation revealed that LLMs can be biased toward previously generated tokens over the corresponding source tokens, leading to unfaithful translations. To address this issue, we propose to encourage LLMs to pay more attention to the source context from both the source and target perspectives in zero-shot prompting: 1) adjusting source context attention weights and 2) suppressing irrelevant target prefix influence. Additionally, we propose 3) avoiding over-reliance on the target prefix in instruction tuning. Experimental results on both human-collected unfaithfulness test sets, which focus on LLM-generated unfaithful translations, and general test sets verify our methods' effectiveness across multiple language pairs. Further human evaluation shows our method's efficacy in reducing hallucinatory translations and facilitating faithful translation generation.
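Step 1) in this abstract adjusts source context attention weights. One simple way to picture such an adjustment, assumed here for illustration and not taken from the paper, is to multiply the attention mass on source positions by a factor `alpha > 1` and renormalize. The hypothetical helper `reweight_source_attention` shows this, and `softmax` is included to make explicit that the same effect arises from adding `log(alpha)` to the pre-softmax scores of source positions.

```python
import math
from typing import List, Sequence

def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def reweight_source_attention(weights: Sequence[float], is_source: Sequence[bool],
                              alpha: float = 1.5) -> List[float]:
    """Scale attention on source-context positions by alpha (alpha > 1 boosts the
    source) and renormalize so the weights still sum to 1."""
    if len(weights) != len(is_source):
        raise ValueError("need one flag per position")
    if alpha <= 0:
        raise ValueError("alpha must be positive")
    scaled = [w * alpha if src else w for w, src in zip(weights, is_source)]
    total = sum(scaled)
    return [w / total for w in scaled]
```

Working in probability space keeps the operation easy to reason about; applying the shift to the scores before the softmax gives identical weights and fits more naturally inside an attention kernel.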

Improving CTC-AED model with integrated-CTC and auxiliary loss regularization

Aug 15, 2023

Abstract:Joint training of connectionist temporal classification (CTC) and attention-based encoder-decoder (AED) models has been widely applied in automatic speech recognition (ASR). Unlike most hybrid models, which calculate the CTC and AED losses separately, our proposed integrated-CTC uses the attention mechanism of the AED to guide the output of CTC. In this paper, we employ two fusion methods: direct addition of logits (DAL) and preserving the maximum probability (PMP). We achieve dimensional consistency by applying an adaptive affine transformation that maps the attention results to the dimensions of the CTC output. To accelerate model convergence and improve accuracy, we introduce auxiliary loss regularization. Experimental results demonstrate that the DAL method performs better in attention rescoring, while the PMP method excels in CTC prefix beam search and greedy search.
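The two fusion methods and the greedy search mentioned above can be sketched per frame as follows. This is an illustration under assumptions, not the paper's implementation: `affine` stands in for the adaptive affine projection (its weights are hypothetical inputs), `dal` adds projected attention logits to CTC logits, and `pmp` reflects one assumed reading of "preserving the maximum probability", namely keeping the larger of the two per-token probabilities and renormalizing. `ctc_greedy` is standard greedy CTC decoding (per-frame argmax, merge repeats, drop blanks).

```python
import math
from typing import List, Sequence

def softmax(z: Sequence[float]) -> List[float]:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def affine(vec: Sequence[float], weight: Sequence[Sequence[float]],
           bias: Sequence[float]) -> List[float]:
    """W @ vec + b: maps a d_att-dim attention output to the CTC vocabulary size."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weight, bias)]

def dal(ctc_logits: Sequence[float], att_logits: Sequence[float]) -> List[float]:
    """Direct addition of logits for one frame."""
    return [c + a for c, a in zip(ctc_logits, att_logits)]

def pmp(ctc_logits: Sequence[float], att_logits: Sequence[float]) -> List[float]:
    """Assumed reading of PMP: keep the larger of the two per-token probabilities,
    renormalize, and return log-probabilities."""
    p, q = softmax(ctc_logits), softmax(att_logits)
    kept = [max(a, b) for a, b in zip(p, q)]
    total = sum(kept)
    return [math.log(k / total) for k in kept]

def ctc_greedy(frames: Sequence[Sequence[float]], blank: int = 0) -> List[int]:
    """Greedy CTC decoding: argmax per frame, merge consecutive repeats, drop blanks."""
    out: List[int] = []
    prev = None
    for frame in frames:
        k = max(range(len(frame)), key=lambda i: frame[i])
        if k != blank and k != prev:
            out.append(k)
        prev = k
    return out
```

Because a blank separates the two identical labels in a sequence such as `1 1 blank 1`, greedy decoding keeps both occurrences, which is how CTC represents repeated characters.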

A BERT-based Unsupervised Grammatical Error Correction Framework

Mar 30, 2023

Abstract:Grammatical error correction (GEC) is a challenging natural language processing task. While most efforts have targeted widely used languages such as English and Chinese, relatively little work has been done on low-resource languages owing to the lack of large annotated corpora. For low-resource languages, current unsupervised GEC based on language model scoring performs well; however, pre-trained language models remain underexplored in this context. This study proposes a BERT-based unsupervised GEC framework in which GEC is viewed as a multi-class classification task. The framework contains three modules: a data flow construction module, a sentence perplexity scoring module, and an error detection and correction module. We propose a novel pseudo-perplexity scoring method to evaluate a sentence's probable correctness and construct a Tagalog corpus for Tagalog GEC research. The framework obtains competitive performance on our Tagalog corpus and on an open-source Indonesian corpus, and the results show that it is complementary to the baseline method for low-resource GEC.
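For context on the scoring module, the sketch below computes the standard pseudo-perplexity, PPPL = exp(-(1/N) Σ log P(w_i | w_without_i)), where each token is scored by a masked language model with that token hidden. It is not the novel scoring method proposed in the paper. Since loading BERT is out of scope, `make_toy_mlm` is a hypothetical stand-in that uses add-one smoothed bigram counts conditioned only on the left neighbour; a real masked LM would condition on both sides.

```python
import math
from collections import Counter
from typing import Callable, List, Sequence

# masked_prob(tokens, i) -> P(tokens[i] | all other tokens), as a masked LM would give
MaskedProb = Callable[[Sequence[str], int], float]

def pseudo_perplexity(tokens: Sequence[str], masked_prob: MaskedProb) -> float:
    """Standard PPPL; lower values indicate a more plausible sentence."""
    if not tokens:
        raise ValueError("empty sentence")
    log_sum = 0.0
    for i in range(len(tokens)):
        p = masked_prob(tokens, i)
        if not 0.0 < p <= 1.0:
            raise ValueError("probabilities must lie in (0, 1]")
        log_sum += math.log(p)
    return math.exp(-log_sum / len(tokens))

def make_toy_mlm(corpus: List[List[str]]) -> MaskedProb:
    """Hypothetical stand-in for BERT: add-one smoothed P(token | left neighbour)."""
    bigrams, unigrams, vocab = Counter(), Counter(), set()
    for sent in corpus:
        padded = ["<s>"] + sent
        vocab.update(padded)
        for a, b in zip(padded, padded[1:]):
            bigrams[(a, b)] += 1
            unigrams[a] += 1
    vocab_size = len(vocab)

    def masked_prob(tokens: Sequence[str], i: int) -> float:
        left = tokens[i - 1] if i > 0 else "<s>"
        return (bigrams[(left, tokens[i])] + 1) / (unigrams[left] + vocab_size)

    return masked_prob
```

Trained on the single Tagalog sentence `ako ay masaya`, the toy scorer gives that sentence a PPPL of 2.5 and the scrambled `ay ako masaya` a PPPL of 5.0, illustrating how a lower score can flag the more probable correction candidate.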

MNL-Bandits under Inventory and Limited Switches Constraints

Apr 22, 2022

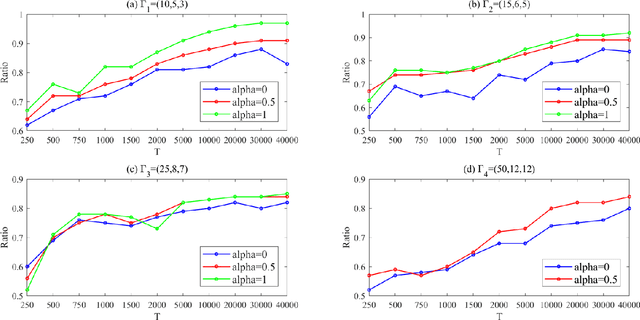

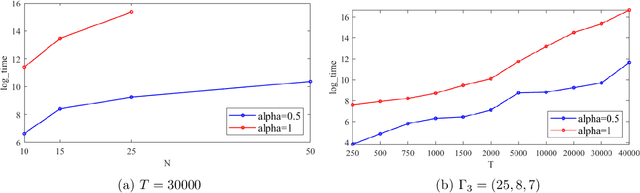

Abstract:Optimizing the assortment of products displayed to customers is key to increasing revenue for both offline and online retailers. To trade off between exploring customers' preferences and exploiting customers' choices learned from data, we adopt the Multinomial Logit (MNL) choice model to capture customers' choices over products and study the problem of optimizing assortments over a planning horizon $T$ to maximize the retailer's profit. To make the problem setting more practical, we consider both an inventory constraint and a limited switches constraint: the retailer cannot use up the resource inventory before time $T$ and is forbidden to switch the assortment shown to customers too many times. This setting suits an online retailer that wants to dynamically optimize assortment selection for a population of customers. We develop an efficient UCB-like algorithm to optimize the assortments while learning customers' choices from data. We prove that our algorithm achieves a sub-linear regret bound of $\tilde{O}\left(T^{1-\alpha/2}\right)$ if $O(T^\alpha)$ switches are allowed. Extensive numerical experiments show that our algorithm outperforms baselines and that the gap between our algorithm's performance and the theoretical upper bound is small.
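The MNL choice model used in this paper has a compact closed form: for an assortment $S$ with preference weights $v_i$, a customer buys item $i$ with probability $v_i / (1 + \sum_{j \in S} v_j)$, where the 1 represents the no-purchase option. The sketch below evaluates that model and brute-forces the revenue-maximizing assortment for a small, fully known instance. It assumes known weights and ignores the inventory and switching constraints; in the bandit setting the weights are unknown and a UCB-like method would plug in optimistic estimates instead. The helper names are illustrative.

```python
from itertools import combinations
from typing import Dict, Sequence, Tuple

def choice_probabilities(assortment: Sequence[int], v: Sequence[float]) -> Dict[int, float]:
    """MNL: P(i | S) = v_i / (1 + sum_{j in S} v_j); the 1 is the no-purchase option."""
    denom = 1.0 + sum(v[i] for i in assortment)
    return {i: v[i] / denom for i in assortment}

def expected_revenue(assortment: Sequence[int], v: Sequence[float], r: Sequence[float]) -> float:
    """Sum of per-item revenue times purchase probability."""
    return sum(r[i] * p for i, p in choice_probabilities(assortment, v).items())

def best_assortment(v: Sequence[float], r: Sequence[float],
                    max_size: int) -> Tuple[Tuple[int, ...], float]:
    """Brute-force the revenue-maximizing assortment of at most max_size products
    (exponential in the number of products; only for small illustrative instances)."""
    best, best_rev = (), 0.0
    for k in range(1, max_size + 1):
        for s in combinations(range(len(v)), k):
            rev = expected_revenue(s, v, r)
            if rev > best_rev:
                best, best_rev = s, rev
    return best, best_rev
```

The example behind the tests shows why assortment choice matters under MNL: with equal preferences but revenues 10 and 1, offering only the high-revenue item (expected revenue 5.0) beats offering both (about 3.67), because the cheap item cannibalizes purchases.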

Deep Learning Interfacial Momentum Closures in Coarse-Mesh CFD Two-Phase Flow Simulation Using Validation Data

May 07, 2020

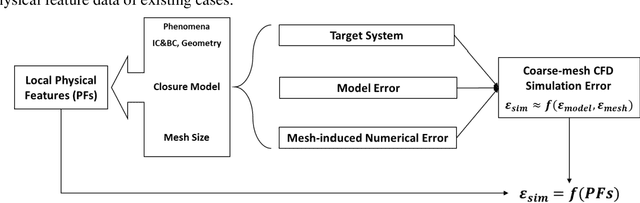

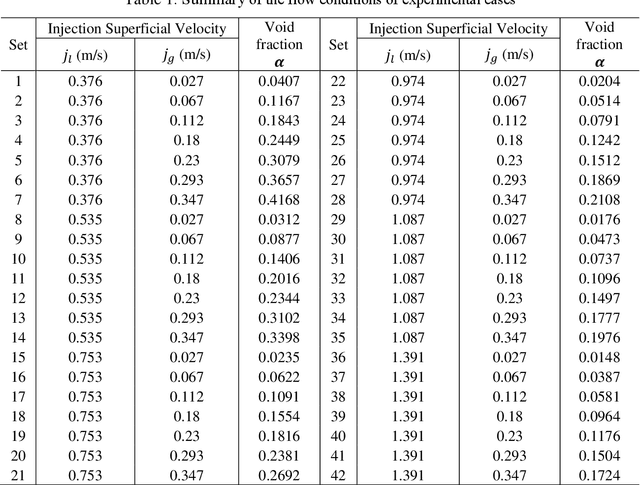

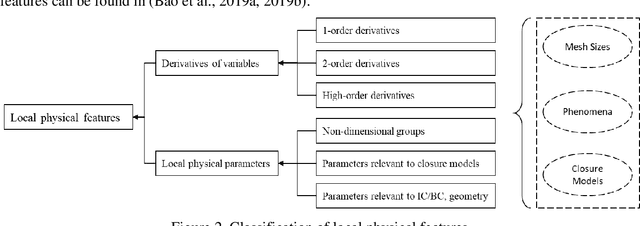

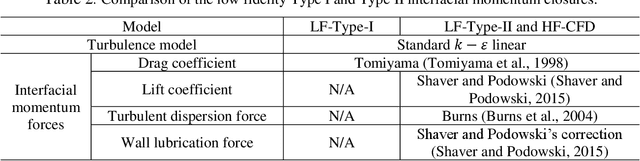

Abstract:Multiphase flow phenomena are widely observed in industrial applications, yet modeling them remains a challenging, unsolved problem. Three-dimensional computational fluid dynamics (CFD) approaches resolve the flow fields on finer spatial and temporal scales, which can complement dedicated experimental studies. However, closures must be introduced to reflect the underlying physics of multiphase flow. Among them, the interfacial forces, including drag, lift, turbulent-dispersion, and wall-lubrication forces, play an important role in bubble distribution and migration in liquid-vapor two-phase flows. Development of these closures has traditionally relied on experimental data and analytical derivations with simplified assumptions, which usually cannot deliver a universal solution across a wide range of flow conditions. In this paper, a data-driven approach named feature-similarity measurement (FSM) is developed and applied to improve the simulation capability of two-phase flow with a coarse-mesh CFD approach. Interfacial momentum transfer in adiabatic bubbly flow is the focus of the present study. Both a mature and a simplified set of interfacial closures are taken as the low-fidelity data. Validation data (including relevant experimental data and validated fine-mesh CFD simulation results) are adopted as high-fidelity data. Qualitative and quantitative analyses reveal that FSM can substantially improve the predictions of the coarse-mesh CFD model, regardless of the choice of interfacial closures, and that it provides scalability and consistency across discontinuous flow regimes. These results demonstrate that data-driven methods can aid multiphase flow modeling by exploring the connections between local physical features and simulation errors.
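The abstract does not detail how FSM maps local physical features to simulation errors, so the sketch below uses a generic stand-in: inverse-distance-weighted k-nearest-neighbour regression. It learns the error (high-fidelity value minus coarse-mesh value) at training points described by local feature vectors, then adds the predicted error to a new coarse-mesh value. The feature vectors (for example, local void fraction or velocity gradients) and the helpers `predict_error` and `corrected_prediction` are illustrative assumptions, not the authors' FSM.

```python
import math
from typing import Sequence

def predict_error(train_features: Sequence[Sequence[float]], train_errors: Sequence[float],
                  query: Sequence[float], k: int = 3) -> float:
    """Inverse-distance-weighted k-nearest-neighbour estimate of the coarse-mesh
    error at a query point, based on similarity of local physical features."""
    if not train_features or len(train_features) != len(train_errors):
        raise ValueError("need one error per feature vector")
    ranked = sorted(zip((math.dist(f, query) for f in train_features), train_errors))[:k]
    for d, e in ranked:
        if d == 0.0:  # identical feature vector: reuse its error directly
            return e
    weights = [1.0 / d for d, _ in ranked]
    return sum(w * e for w, (_, e) in zip(weights, ranked)) / sum(weights)

def corrected_prediction(coarse_value: float, train_features: Sequence[Sequence[float]],
                         train_errors: Sequence[float], query: Sequence[float], k: int = 3) -> float:
    """Coarse-mesh CFD value plus the predicted (high-fidelity minus coarse) error."""
    return coarse_value + predict_error(train_features, train_errors, query, k)
```

Correcting the error rather than predicting the flow quantity directly keeps the coarse-mesh physics in the loop; the data-driven part only has to learn the discrepancy, which matches the abstract's framing of connecting local features to simulation errors.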
