Haoyao Chen

HEATS: A Hierarchical Framework for Efficient Autonomous Target Search with Mobile Manipulators

Mar 11, 2025

Abstract:Utilizing robots for autonomous target search in complex and unknown environments can greatly improve the efficiency of search and rescue missions. However, existing methods have shown inadequate performance due to hardware platform limitations, inefficient viewpoint selection strategies, and conservative motion planning. In this work, we propose HEATS, which enhances the search capability of mobile manipulators in complex and unknown environments. We design a target viewpoint planner tailored to the strengths of mobile manipulators, ensuring efficient and comprehensive viewpoint planning. Supported by this, a whole-body motion planner integrates global path search with local IPC optimization, enabling the mobile manipulator to safely and agilely visit target viewpoints, significantly improving search performance. We present extensive simulated and real-world tests, in which our method demonstrates reduced search time, higher target search completeness, and lower movement cost compared to classic and state-of-the-art approaches. Our method will be open-sourced for community benefit.

Real-Time LiDAR Point Cloud Compression and Transmission for Resource-constrained Robots

Feb 10, 2025

Abstract:LiDARs are widely used in autonomous robots due to their ability to provide accurate environment structural information. However, the large size of point clouds poses challenges in terms of data storage and transmission. In this paper, we propose a novel point cloud compression and transmission framework for resource-constrained robotic applications, called RCPCC. We iteratively fit the surface of point clouds with a similar range value and eliminate redundancy through their spatial relationships. Then, we use Shape-adaptive DCT (SA-DCT) to transform the unfit points and reduce the data volume by quantizing the transformed coefficients. We design an adaptive bitrate control strategy based on QoE as the optimization goal to control the quality of the transmitted point cloud. Experiments show that our framework achieves compression rates of 40× to 80× while maintaining high accuracy for downstream applications. Our method significantly outperforms other baselines in terms of accuracy when the compression rate exceeds 70×. Furthermore, in situations of reduced communication bandwidth, our adaptive bitrate control strategy demonstrates significant QoE improvements. The code will be available at https://github.com/HITSZ-NRSL/RCPCC.git.
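The transform-then-quantize step mentioned in the abstract can be illustrated with a minimal sketch. It uses a plain orthonormal 1-D DCT-II rather than the shape-adaptive DCT of the paper, and the function names, the `step` parameter, and the sample residuals are all assumptions made for illustration, not part of RCPCC.

```python
import math

def dct2(x):
    """Orthonormal 1-D DCT-II of a list of floats."""
    n = len(x)
    out = []
    for k in range(n):
        s = sum(x[i] * math.cos(math.pi * (i + 0.5) * k / n) for i in range(n))
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        out.append(scale * s)
    return out

def idct2(c):
    """Inverse of dct2 (orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        s = c[0] * math.sqrt(1.0 / n)
        s += sum(
            c[k] * math.sqrt(2.0 / n) * math.cos(math.pi * (i + 0.5) * k / n)
            for k in range(1, n)
        )
        out.append(s)
    return out

def quantize(coeffs, step):
    """Map each coefficient to an integer index; a larger step keeps fewer bits."""
    return [round(c / step) for c in coeffs]

def dequantize(indices, step):
    """Recover approximate coefficients from the integer indices."""
    return [q * step for q in indices]

# Hypothetical range residuals (metres) left over after surface fitting.
residuals = [0.02, 0.05, 0.03, -0.01, -0.04, 0.00, 0.01, 0.02]
step = 0.01  # assumed quantization step
indices = quantize(dct2(residuals), step)
restored = idct2(dequantize(indices, step))
max_error = max(abs(a - b) for a, b in zip(residuals, restored))
```

Because the transform is orthonormal, the reconstruction error of any sample is bounded by `sqrt(n) * step / 2`, so the step size trades accuracy directly against the size of the integers that must be entropy-coded and transmitted.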

Generating 6-D Trajectories for Omnidirectional Multirotor Aerial Vehicles in Cluttered Environments

Apr 16, 2024

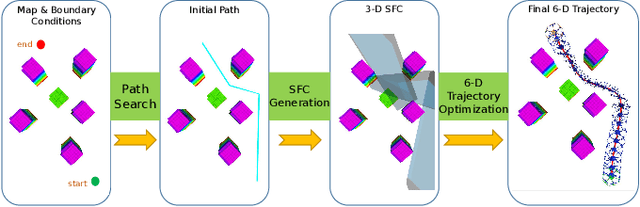

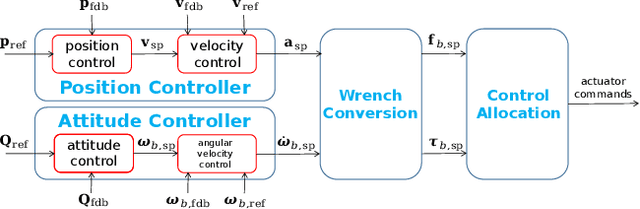

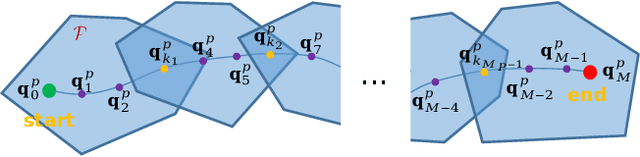

Abstract:As fully-actuated systems, omnidirectional multirotor aerial vehicles (OMAVs) have more flexible maneuverability and advantages in aggressive flight in cluttered environments than traditional underactuated MAVs. Due to the high dimensionality of configuration space, making the designed trajectory generation algorithm efficient is challenging. This paper aims to achieve safe flight of OMAVs in cluttered environments. Considering existing static obstacles, an efficient optimization-based framework is proposed to generate 6-D SE(3) trajectories for OMAVs. Given the kinodynamic constraints and the 3D collision-free region represented by a series of intersecting convex polyhedra, the proposed method finally generates a safe and dynamically feasible 6-D trajectory. First, we parameterize the vehicle's attitude into a free 3D vector using stereographic projection to eliminate the constraints inherent in the SO(3) manifold, while the complete SE(3) trajectory is represented as a 6-D polynomial in time without inherent constraints. The vehicle's shape is modeled as a cuboid attached to the body frame to achieve whole-body collision evaluation. Then, we formulate the original trajectory generation problem as a constrained optimization problem. The original constrained problem is finally transformed into an unconstrained one that can be solved efficiently. To verify the proposed framework's performance, simulations and real-world experiments based on a tilt-rotor hexarotor aerial vehicle are carried out.
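The idea of replacing a constrained attitude variable with a free 3D vector can be sketched with the standard inverse stereographic projection from R^3 onto the unit quaternions. This is one common form of the map, chosen here as an assumption; the paper's exact parameterization and conventions may differ, and the function names are hypothetical.

```python
import math

def vector_to_quaternion(v):
    """Inverse stereographic projection: any 3D vector -> unit quaternion (w, x, y, z).

    Projects from the pole w = -1, so the zero vector maps to the identity rotation.
    """
    s = v[0] * v[0] + v[1] * v[1] + v[2] * v[2]
    w = (1.0 - s) / (1.0 + s)
    k = 2.0 / (1.0 + s)
    return (w, k * v[0], k * v[1], k * v[2])

def quaternion_to_vector(q):
    """Stereographic projection back to the free 3D vector (undefined at w = -1)."""
    w, x, y, z = q
    return (x / (1.0 + w), y / (1.0 + w), z / (1.0 + w))

# Any unconstrained vector yields a valid unit quaternion, so an optimizer
# can update v freely without a separate normalization constraint.
v = (0.3, -1.2, 0.7)
q = vector_to_quaternion(v)
norm = math.sqrt(sum(c * c for c in q))
```

The unit-norm property holds by construction, which is what allows the attitude part of the trajectory to be treated as an ordinary polynomial in the free vector.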

Robot Safe Planning In Dynamic Environments Based On Model Predictive Control Using Control Barrier Function

Apr 09, 2024

Abstract:Implementing obstacle avoidance in dynamic environments is a challenging problem for robots. Model predictive control (MPC) is a popular strategy for dealing with this type of problem, and recent work mainly uses control barrier functions (CBFs) as hard constraints to ensure that the system state remains in the safe set. However, in crowded scenarios, effective solutions may not be obtained due to infeasibility problems, resulting in degraded controller performance. We propose a new MPC framework that integrates CBFs to tackle the issue of obstacle avoidance in dynamic environments, in which the infeasibility problem induced by hard constraints operating over the whole prediction horizon is solved by softening the constraints and introducing an exact penalty, prompting the robot to actively seek out new paths. At the same time, a generalized CBF is extended as a single-step safety constraint of the controller to enhance the safety of the robot during navigation. The efficacy of the proposed method is first shown through simulation experiments, in which a double-integrator system and a unicycle system are employed, and the proposed method outperforms other controllers in terms of safety, feasibility, and navigation efficiency. Furthermore, a real-world experiment on an MR1000 robot is conducted to demonstrate the effectiveness of the proposed method.
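Softening a barrier constraint with a penalty can be sketched as follows. The example uses the generic discrete-time CBF condition h(x_{k+1}) >= (1 - gamma) h(x_k) with a circular obstacle, and charges an L1 penalty on the slack needed to satisfy it. The barrier function, `gamma`, `rho`, and all function names are assumptions for illustration; this is not the paper's generalized CBF or its MPC formulation.

```python
def barrier(p, obstacle, radius):
    """h(p) = squared distance to the obstacle centre minus radius^2 (>= 0 is safe)."""
    dx = p[0] - obstacle[0]
    dy = p[1] - obstacle[1]
    return dx * dx + dy * dy - radius * radius

def cbf_slack(p_now, p_next, obstacle, radius, gamma):
    """Smallest slack s >= 0 such that h(p_next) - (1 - gamma) * h(p_now) + s >= 0."""
    residual = barrier(p_next, obstacle, radius) - (1.0 - gamma) * barrier(p_now, obstacle, radius)
    return max(0.0, -residual)

def soft_cbf_penalty(path, obstacle, radius, gamma=0.3, rho=100.0):
    """L1 penalty on the slacks along a candidate path; zero when every step satisfies the CBF."""
    return rho * sum(
        cbf_slack(a, b, obstacle, radius, gamma) for a, b in zip(path, path[1:])
    )

obstacle, radius = (0.0, 0.0), 1.0
safe_path = [(3.0, 0.0), (3.0, 1.0), (3.0, 2.0)]  # moves past the obstacle at a distance
risky_path = [(3.0, 0.0), (1.5, 0.0)]             # approaches the obstacle too quickly
safe_cost = soft_cbf_penalty(safe_path, obstacle, radius)
risky_cost = soft_cbf_penalty(risky_path, obstacle, radius)
```

With a hard constraint the risky path would simply make the problem infeasible; with the penalty it stays feasible but expensive, so an optimizer is pushed toward alternatives such as the safe path.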

MSI-NeRF: Linking Omni-Depth with View Synthesis through Multi-Sphere Image aided Generalizable Neural Radiance Field

Mar 16, 2024

Abstract:Panoramic observation using fisheye cameras is significant in robot perception, reconstruction, and remote operation. However, panoramic images synthesized by traditional methods lack depth information and can only provide three degrees-of-freedom (3DoF) rotation rendering in virtual reality applications. To fully preserve and exploit the parallax information within the original fisheye cameras, we introduce MSI-NeRF, which combines deep learning omnidirectional depth estimation and novel view rendering. We first construct a multi-sphere image as a cost volume through feature extraction and warping of the input images. It is then processed by geometry and appearance decoders, respectively. Unlike methods that regress depth maps directly, we further build an implicit radiance field using spatial points and interpolated 3D feature vectors as input. In this way, we can simultaneously realize omnidirectional depth estimation and 6DoF view synthesis. Our method is trained in a semi-self-supervised manner. It does not require target view images and only uses depth data for supervision. Our network has the generalization ability to reconstruct unknown scenes efficiently using only four images. Experimental results show that our method outperforms existing methods in depth estimation and novel view synthesis tasks.

Multi-object Detection, Tracking and Prediction in Rugged Dynamic Environments

Aug 23, 2023

Abstract:Multi-object tracking (MOT) has important applications in monitoring, logistics, and other fields. This paper develops a real-time multi-object tracking and prediction system for rugged environments. A 3D object detection algorithm based on LiDAR-camera fusion is designed to detect the target objects. Based on the Hungarian algorithm, this paper designs a 3D multi-object tracking algorithm with an adaptive threshold to realize stable matching and tracking of the objects. We combine Memory Augmented Neural Networks (MANN) and a Kalman filter to achieve 3D trajectory prediction on rugged terrains. In addition, we realize a new dynamic SLAM by using the results of multi-object tracking to remove dynamic points, yielding better SLAM performance and a cleaner static map. To verify the effectiveness of the proposed multi-object tracking and prediction system, several simulations and physical experiments are conducted. The results show that the proposed system can track dynamic objects and provide future trajectories and a cleaner static map in real time.

Towards Real-time Scalable Dense Mapping using Robot-centric Implicit Representation

Jun 18, 2023

Abstract:Real-time and high-quality dense mapping is essential for robots to perform fine tasks. However, most existing methods cannot achieve both speed and quality. Recent works have shown that implicit neural representations of 3D scenes can produce remarkable results, but they are limited to small scenes and lack real-time performance. To address these limitations, we propose a real-time scalable mapping method using robot-centric implicit representation. We train implicit features with a multi-resolution local map and decode them as signed distance values through a shallow neural network. We maintain the learned features in a scalable manner using a global map that consists of a hash table and a submap set. We exploit the characteristics of the local map to achieve highly efficient training and mitigate the catastrophic forgetting problem in incremental implicit mapping. Extensive experiments validate that our method outperforms existing methods in reconstruction quality, real-time performance, and applicability. The code of our system will be available at https://github.com/HITSZ-NRSL/RIM.git.

Hierarchical Adaptive Voxel-guided Sampling for Real-time Applications in Large-scale Point Clouds

May 23, 2023

Abstract:While point-based neural architectures have demonstrated their efficacy, the time-consuming sampler currently prevents them from performing real-time reasoning on scene-level point clouds. Existing methods attempt to overcome this issue by using a random sampling strategy instead of the commonly adopted farthest point sampling (FPS), but at the expense of lower performance. As a result, the effectiveness/efficiency trade-off remains under-explored. In this paper, we reveal that the key to high-quality sampling is ensuring an even spacing between points in the subset, which can be naturally obtained through a grid. Based on this insight, we propose a hierarchical adaptive voxel-guided point sampler with linear complexity and high parallelization for real-time applications. Extensive experiments on large-scale point cloud detection and segmentation tasks demonstrate that our method achieves performance competitive with the most powerful FPS while running more than 100 times faster. This breakthrough in efficiency addresses the bottleneck of the sampling step when handling scene-level point clouds. Furthermore, our sampler can be easily integrated into existing models and achieves a 20% to 80% reduction in runtime with minimal effort. The code will be available at https://github.com/OuyangJunyuan/pointcloud-3d-detector-tensorrt
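The observation that a grid naturally yields evenly spaced samples can be sketched with a single-level voxel sampler that keeps, for each occupied voxel, the point closest to the voxel centre. This is a simplified stand-in under stated assumptions: it is neither hierarchical nor adaptive, and the function name and the fixed `voxel_size` are hypothetical rather than taken from the paper.

```python
import math

def voxel_sample(points, voxel_size):
    """Keep one point per occupied voxel in a single pass over the input (linear time)."""
    chosen = {}  # voxel index -> (squared distance to voxel centre, point)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        centre = [(k + 0.5) * voxel_size for k in key]
        d2 = sum((c - m) ** 2 for c, m in zip(p, centre))
        if key not in chosen or d2 < chosen[key][0]:
            chosen[key] = (d2, p)
    return [p for _, p in chosen.values()]

cloud = [
    (0.1, 0.1, 0.1),
    (0.4, 0.5, 0.5),
    (1.2, 0.1, 0.1),
    (0.9, 0.9, 0.9),
]
subset = voxel_sample(cloud, voxel_size=1.0)
```

Unlike farthest point sampling, which repeatedly scans the cloud to pick each new point, this pass touches every point once, and each voxel can be processed independently, which is what makes grid-based schemes easy to parallelize.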

Proxy-based Super Twisting Control Algorithm for Aerial Manipulators

May 06, 2023

Abstract:Aerial manipulators are composed of an aerial multi-rotor that is equipped with a 6-DOF servo robot arm. To achieve precise position and attitude control during the arm's motion, it is critical for the system to have high-performance control capabilities. However, the coupling between the multi-rotor UAV's movement and the manipulator's motion poses a challenge to the entire system's control capability. We propose a new proxy-based super twisting control approach for quadrotor UAVs that mitigates the disturbance caused by moving manipulators. This approach helps improve the stability of the aerial manipulation system when carrying out hovering or trajectory tracking tasks. The controller's effectiveness has been validated through numerical simulation and further tested in the Gazebo simulation environment.

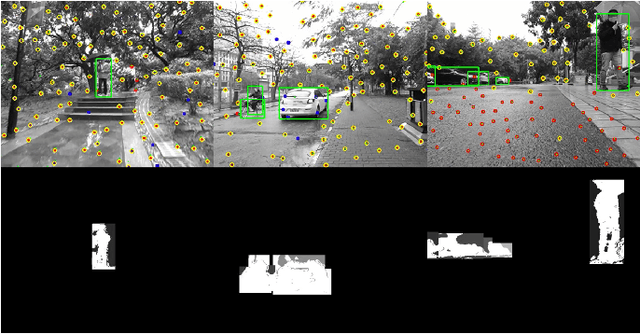

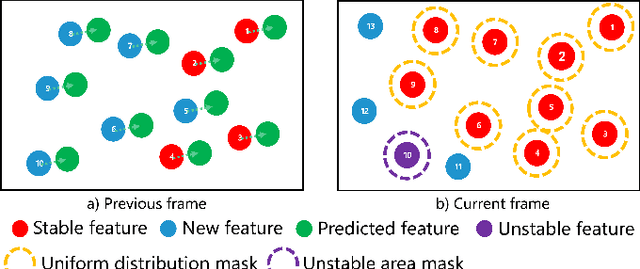

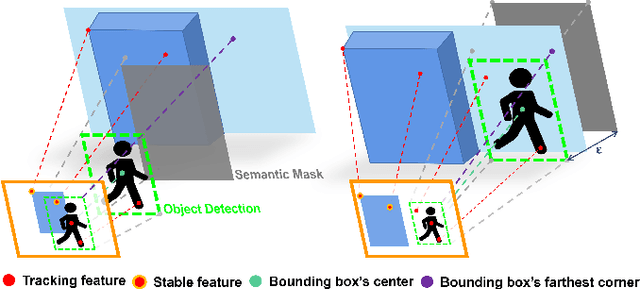

RGB-D Inertial Odometry for a Resource-Restricted Robot in Dynamic Environments

Apr 21, 2023

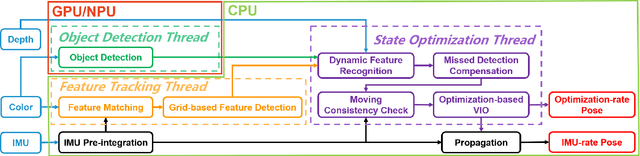

Abstract:Current simultaneous localization and mapping (SLAM) algorithms perform well in static environments but easily fail in dynamic environments. Recent works introduce deep learning-based semantic information to SLAM systems to reduce the influence of dynamic objects. However, it is still challenging to achieve robust localization in dynamic environments for resource-restricted robots. This paper proposes a real-time RGB-D inertial odometry system for resource-restricted robots in dynamic environments named Dynamic-VINS. Three main threads run in parallel: object detection, feature tracking, and state optimization. The proposed Dynamic-VINS combines object detection and depth information for dynamic feature recognition and achieves performance comparable to semantic segmentation. Dynamic-VINS adopts grid-based feature detection and proposes a fast and efficient method to extract high-quality FAST feature points. IMU measurements are applied to predict motion for feature tracking and the moving consistency check. The proposed method is evaluated on both public datasets and real-world applications and shows competitive localization accuracy and robustness in dynamic environments. To the best of our knowledge, it is currently the best-performing real-time RGB-D inertial odometry for resource-restricted platforms in dynamic environments. The proposed system is open source at: https://github.com/HITSZ-NRSL/Dynamic-VINS.git
