Hannes Nickisch

Multi-Resolution 3D Convolutional Neural Networks for Automatic Coronary Centerline Extraction in Cardiac CT Angiography Scans

Oct 02, 2020

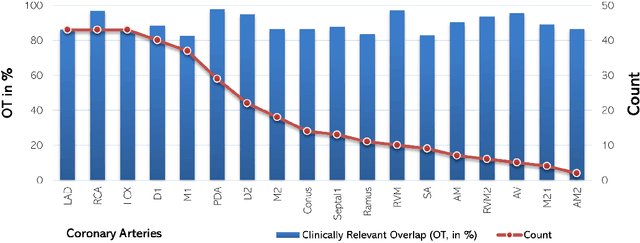

Abstract: We propose a deep learning-based automatic coronary artery tree centerline tracker (AuCoTrack) extending the vessel tracker by Wolterink (arXiv:1810.03143). A dual pathway Convolutional Neural Network (CNN) operating on multi-scale 3D inputs predicts the direction of the coronary arteries as well as the presence of a bifurcation. A similar multi-scale dual pathway 3D CNN is trained to identify coronary artery endpoints for terminating the tracking process. Two or more continuation directions are derived based on the bifurcation detection. The iterative tracker detects the entire left and right coronary artery trees based on only two ostium landmarks derived from a model-based segmentation of the heart. The 3D CNNs were trained on a proprietary dataset consisting of 43 CCTA scans. An average sensitivity of 87.1% and a clinically relevant overlap of 89.1% were obtained relative to a refined manual segmentation. In addition, the MICCAI 2008 Coronary Artery Tracking Challenge (CAT08) training and test datasets were used to benchmark the algorithm and to assess its generalization. An average overlap of 93.6% and a clinically relevant overlap of 96.4% were obtained. The proposed method achieved better overlap scores than the current state-of-the-art automatic centerline extraction techniques on the CAT08 dataset with a vessel detection rate of 95%.

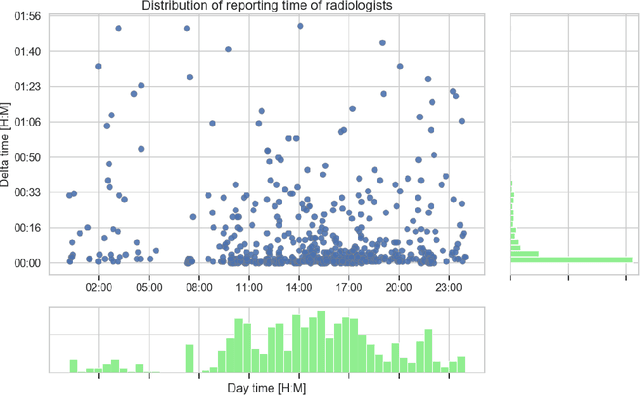

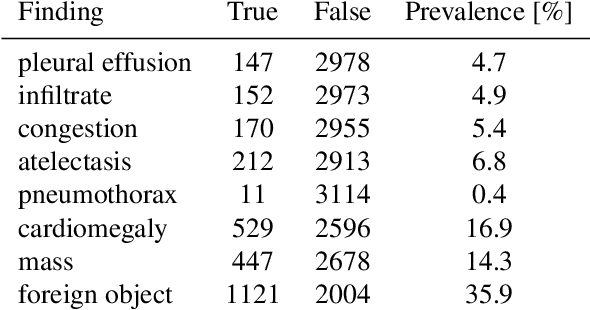

Intelligent Chest X-ray Worklist Prioritization by CNNs: A Clinical Workflow Simulation

Jan 23, 2020

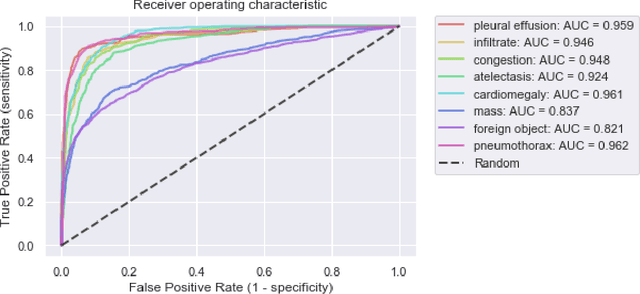

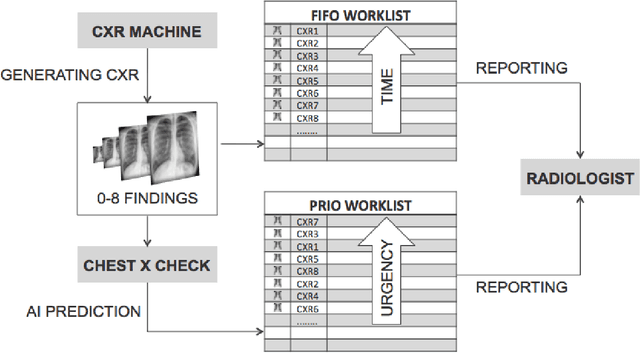

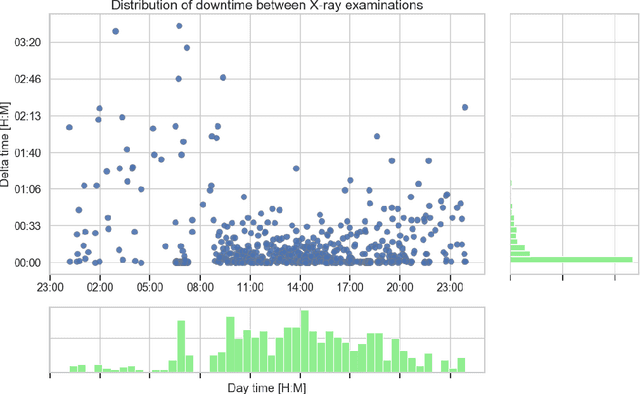

Abstract: Growing radiologic workload and a worldwide shortage of medical experts often lead to delayed or even unreported examinations, which poses a risk to patient safety in case of unrecognized findings in chest radiographs (CXR). The aim was to evaluate whether deep learning algorithms for intelligent worklist prioritization can optimize the radiology workflow and reduce report turnaround times (RTAT) for critical findings, compared with reporting according to the First-In-First-Out (FIFO) principle. Furthermore, we investigated the problem of false negative predictions in the context of worklist prioritization. To assess the potential benefit of an intelligent worklist prioritization, three different workflow simulations based on our analysis were run and their RTAT were compared: FIFO (non-prioritized), Prio1 (prioritized) and Prio2 (prioritized, with RTATmax). Examination triage was performed by "ChestXCheck", a convolutional neural network classifying eight different pathological findings ranked in descending order of urgency: pneumothorax, pleural effusion, infiltrate, congestion, atelectasis, cardiomegaly, mass and foreign object. The average RTAT for all critical findings was significantly reduced by both Prio simulations compared to the FIFO simulation (e.g. pneumothorax: 32.1 min vs. 69.7 min; p < 0.0001), while the average RTAT for normal examinations increased at the same time (69.5 min vs. 90.0 min; p < 0.0001). Both effects were slightly smaller with Prio2 than with Prio1, whereas the maximum RTAT with Prio1 was substantially higher for all classes, due to individual examinations rated false negative. Our Prio2 simulation demonstrated that intelligent worklist prioritization by deep learning algorithms reduces the average RTAT for critical findings in chest X-ray while maintaining a maximum RTAT similar to that of FIFO.
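The three reading strategies compared in this abstract can be illustrated with a toy single-reader queue. This is only a sketch under stated assumptions, not the simulation used in the study: the `Exam` class, the `simulate` function, the fixed `report_time`, and the specific rule used for `rtat_max` (read the oldest waiting exam first once it has waited at least `rtat_max` minutes) are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Exam:
    arrival: float  # arrival time in minutes
    urgency: int    # 0 = most urgent predicted finding; larger = less urgent


def simulate(exams, report_time, prioritize=True, rtat_max=None):
    """Toy single-reader worklist; returns the RTAT of each exam in input order.

    prioritize=False               -> FIFO
    prioritize=True, rtat_max=None -> urgency-first (Prio1-like)
    prioritize=True, rtat_max=x    -> urgency-first, but the oldest exam is read
                                      first once it has waited >= x minutes
                                      (Prio2-like, assumed rule)
    """
    order = sorted(range(len(exams)), key=lambda i: exams[i].arrival)
    waiting, rtat = [], [0.0] * len(exams)
    clock, nxt = 0.0, 0
    while nxt < len(order) or waiting:
        if not waiting:
            # Reader is idle: jump ahead to the next arrival.
            clock = max(clock, exams[order[nxt]].arrival)
        while nxt < len(order) and exams[order[nxt]].arrival <= clock:
            waiting.append(order[nxt])
            nxt += 1
        oldest = min(waiting, key=lambda i: exams[i].arrival)
        if not prioritize:
            pick = oldest
        elif rtat_max is not None and clock - exams[oldest].arrival >= rtat_max:
            pick = oldest
        else:
            pick = min(waiting, key=lambda i: (exams[i].urgency, exams[i].arrival))
        waiting.remove(pick)
        clock += report_time
        rtat[pick] = clock - exams[pick].arrival
    return rtat
```

In this toy setting, a low-urgency exam that keeps being overtaken by later urgent exams accumulates a long RTAT under pure prioritization, which is the effect the waiting-time cap is meant to bound.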

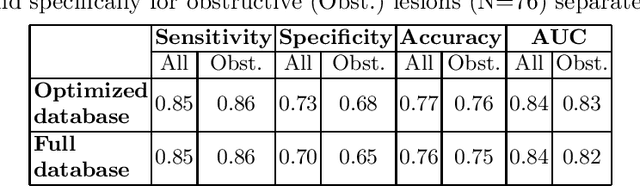

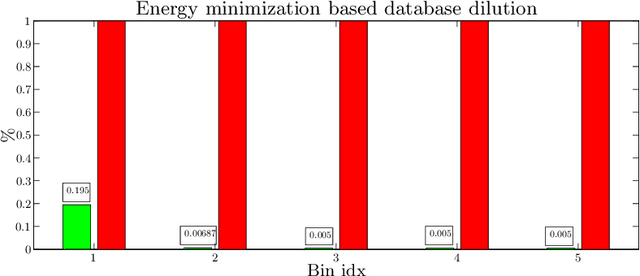

Learning a sparse database for patch-based medical image segmentation

Jun 25, 2019

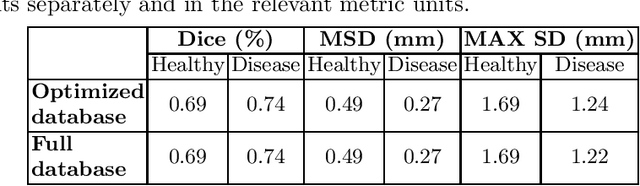

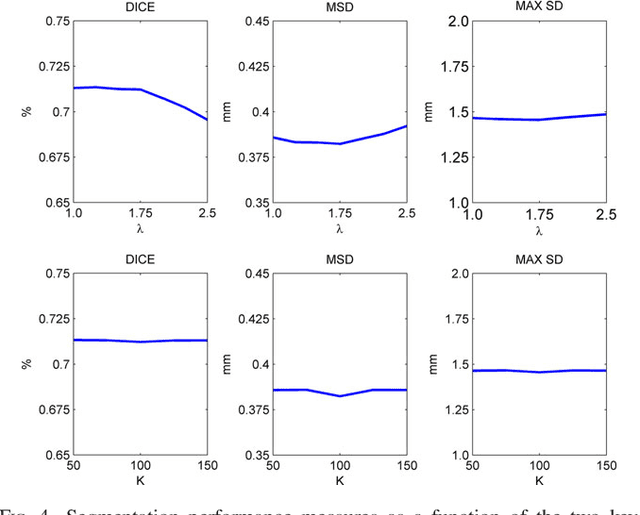

Abstract: We introduce a functional for the learning of an optimal database for patch-based image segmentation with application to coronary lumen segmentation from coronary computed tomography angiography (CCTA) data. The proposed functional consists of fidelity, sparseness and robustness to small-variations terms and their associated weights. Existing work addresses database optimization by prototype selection, aiming to optimize the database by either adding or removing prototypes according to a set of predefined rules. In contrast, we formulate the database optimization task as an energy minimization problem that can be solved using standard numerical tools. We apply the proposed database optimization functional to the task of optimizing a database for patch-based coronary lumen segmentation. Our experiments using the publicly available MICCAI 2012 coronary lumen segmentation challenge data show that optimizing the database using the proposed approach reduced database size by 96% while maintaining the same level of lumen segmentation accuracy. Moreover, we show that the optimized database yields an improved specificity of CCTA-based fractional flow reserve (0.73 vs 0.7 for all lesions and 0.68 vs 0.65 for obstructive lesions) using a training set of 132 (76 obstructive) coronary lesions with invasively measured FFR as the reference.

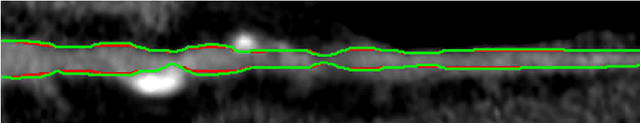

Improving CCTA based lesions' hemodynamic significance assessment by accounting for partial volume modeling in automatic coronary lumen segmentation

Jun 24, 2019

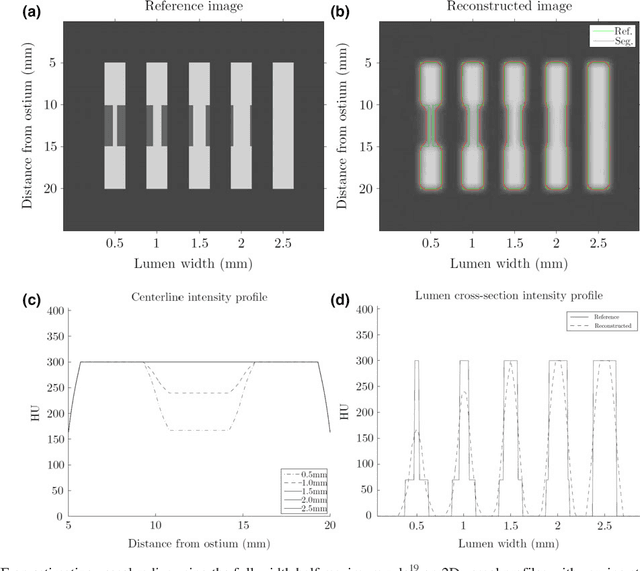

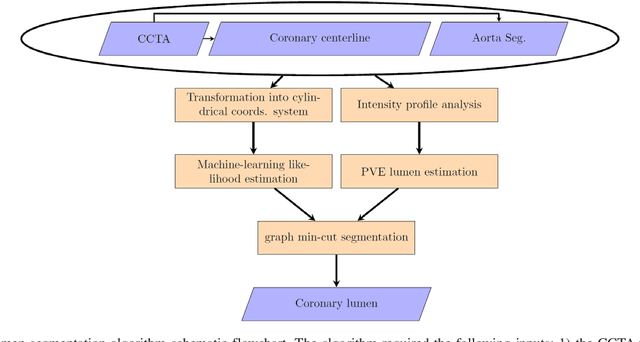

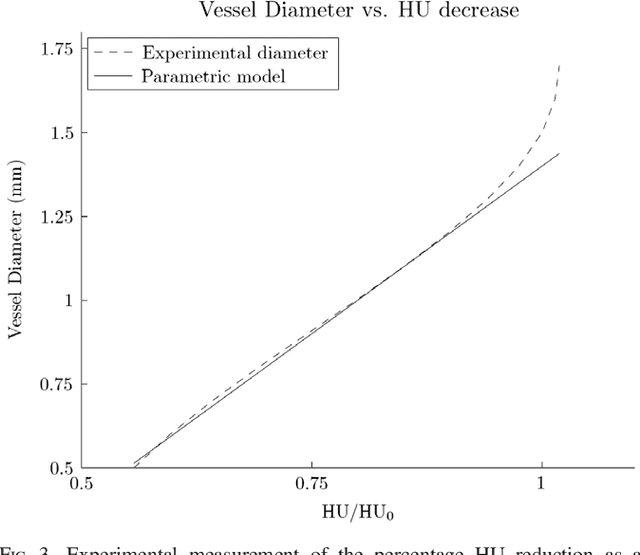

Abstract: Purpose: The goal of this study was to assess the potential added benefit of accounting for partial volume effects (PVE) in an automatic coronary lumen segmentation algorithm from coronary computed tomography angiography (CCTA). Materials and methods: We assessed the potential added value of PVE integration as a part of the automatic coronary lumen segmentation algorithm by means of segmentation accuracy using the MICCAI 2012 challenge framework and by means of flow simulation overall accuracy, sensitivity, specificity, negative and positive predictive values and the receiver operating characteristic (ROC) area under the curve. We also evaluated the potential benefit of accounting for PVE in automatic segmentation for flow simulation for lesions that were diagnosed as obstructive based on CCTA, which could have indicated a need for an invasive exam and revascularization. Results: Our segmentation algorithm improves the maximal surface distance error by ~39% compared to a previously published method on the 18 datasets from the MICCAI 2012 challenge, with comparable Dice and mean surface distance. Results with and without accounting for PVE were comparable. In contrast, integrating PVE analysis into an automatic coronary lumen segmentation algorithm improved the flow simulation specificity from 0.6 to 0.68 with the same sensitivity of 0.83. Also, accounting for PVE improved the area under the ROC curve for detecting hemodynamically significant CAD from 0.76 to 0.8 compared to automatic segmentation without PVE analysis, with an invasive FFR threshold of 0.8 as the reference standard. The improvement in the AUC was statistically significant (N=76, Delong's test, p=0.012). Conclusion: Accounting for the partial volume effects in automatic coronary lumen segmentation algorithms has the potential to improve the accuracy of CCTA-based hemodynamic assessment of coronary artery lesions.

Change Surfaces for Expressive Multidimensional Changepoints and Counterfactual Prediction

Oct 30, 2018

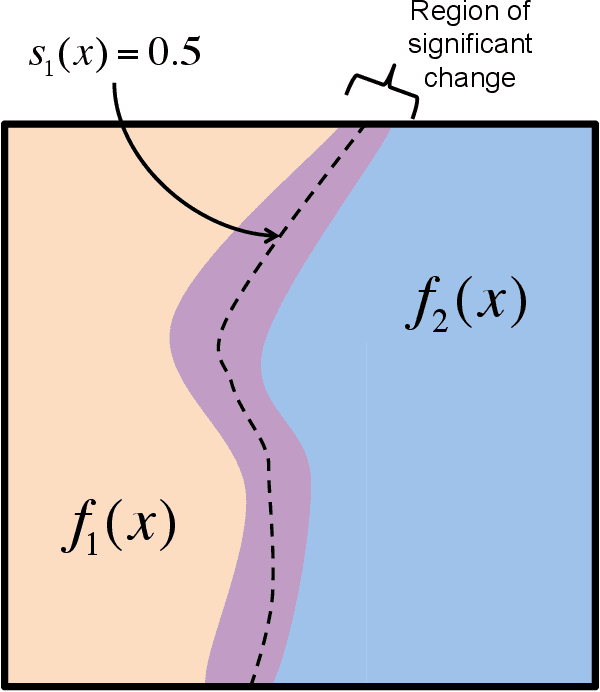

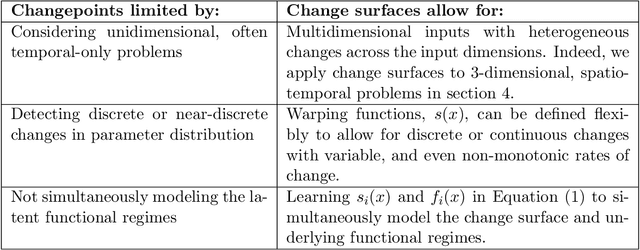

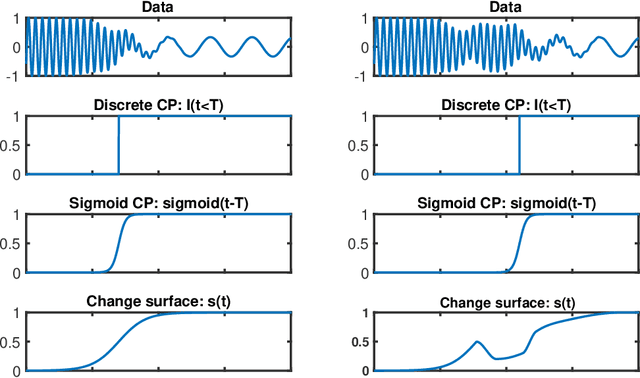

Abstract: Identifying changes in model parameters is fundamental in machine learning and statistics. However, standard changepoint models are limited in expressiveness, often addressing unidimensional problems and assuming instantaneous changes. We introduce change surfaces as a multidimensional and highly expressive generalization of changepoints. We provide a model-agnostic formalization of change surfaces, illustrating how they can provide variable, heterogeneous, and non-monotonic rates of change across multiple dimensions. Additionally, we show how change surfaces can be used for counterfactual prediction. As a concrete instantiation of the change surface framework, we develop Gaussian Process Change Surfaces (GPCS). We demonstrate counterfactual prediction with Bayesian posterior mean and credible sets, as well as massive scalability by introducing novel methods for additive non-separable kernels. Using two large spatio-temporal datasets we employ GPCS to discover and characterize complex changes that can provide scientific and policy-relevant insights. Specifically, we analyze twentieth-century measles incidence across the United States and discover previously unknown heterogeneous changes after the introduction of the measles vaccine. Additionally, we apply the model to requests for lead testing kits in New York City, discovering distinct spatial and demographic patterns.

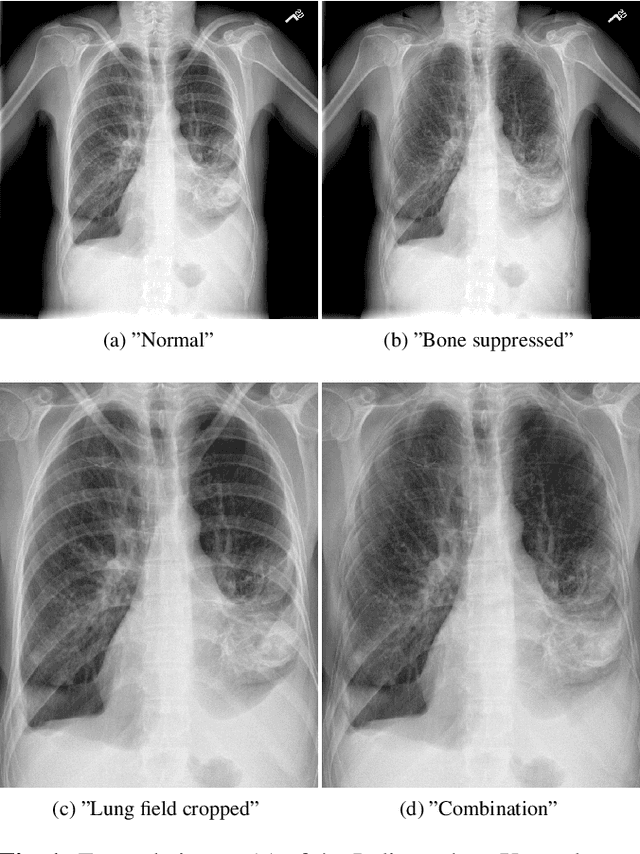

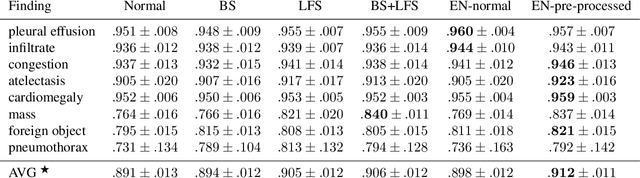

When does Bone Suppression and Lung Field Segmentation Improve Chest X-Ray Disease Classification?

Oct 17, 2018

Abstract: Chest radiography is the most common clinical examination type. To improve the quality of patient care and to reduce workload, methods for automatic pathology classification have been developed. In this contribution we investigate the usefulness of two advanced image pre-processing techniques, initially developed for image reading by radiologists, for the performance of Deep Learning methods. First, we use bone suppression, an algorithm to artificially remove the rib cage. Second, we employ an automatic lung field detection to crop the image to the lung area. Furthermore, we consider the combination of both in the context of an ensemble approach. In a five-times re-sampling scheme, we use Receiver Operating Characteristic (ROC) statistics to evaluate the effect of the pre-processing approaches. Using a Convolutional Neural Network (CNN) optimized for X-ray analysis, we achieve a good performance with respect to all pathologies on average. Superior results are obtained for selected pathologies when using pre-processing, e.g. for mass the area under the ROC curve increased by 9.95%. The ensemble with pre-processed trained models yields the best overall results.

State Space Gaussian Processes with Non-Gaussian Likelihood

Jul 05, 2018

Abstract: We provide a comprehensive overview and tooling for GP modeling with non-Gaussian likelihoods using state space methods. The state space formulation allows for solving one-dimensional GP models in $\mathcal{O}(n)$ time and memory complexity. While existing literature has focused on the connection between GP regression and state space methods, the computational primitives allowing for inference using general likelihoods in combination with the Laplace approximation (LA), variational Bayes (VB), and assumed density filtering (ADF, a.k.a. single-sweep expectation propagation, EP) schemes have been largely overlooked. We present means of combining the efficient $\mathcal{O}(n)$ state space methodology with existing inference methods. We extend existing methods, and provide unifying code implementing all approaches.
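To make the $\mathcal{O}(n)$ claim concrete, the following minimal sketch covers only the Gaussian-likelihood base case, not the non-Gaussian inference schemes of the paper. It assumes a one-dimensional GP with an exponential (Ornstein-Uhlenbeck) kernel $k(t,t') = s^2 \exp(-|t-t'|/\ell)$, whose state space form is scalar, and evaluates the log marginal likelihood with a Kalman filter in a single pass. The function names and parameters (`s2`, `ell`, `r`) are illustrative choices, not the authors' code; a dense $\mathcal{O}(n^3)$ evaluation is included for comparison.

```python
import numpy as np


def ou_kalman_loglik(t, y, s2, ell, r):
    """O(n) log marginal likelihood of y = f(t) + noise, f ~ GP(0, OU kernel).

    t must be sorted in ascending order; r is the Gaussian noise variance.
    """
    m, P = 0.0, s2          # stationary prior mean and variance of the state
    ll, t_prev = 0.0, t[0]
    for tk, yk in zip(t, y):
        a = np.exp(-(tk - t_prev) / ell)            # discrete-time transition
        m, P = a * m, a * a * P + s2 * (1.0 - a * a)  # predict step
        S = P + r                                   # innovation variance
        v = yk - m                                  # innovation
        ll += -0.5 * (np.log(2.0 * np.pi * S) + v * v / S)
        k = P / S                                   # Kalman gain
        m, P = m + k * v, (1.0 - k) * P             # update step
        t_prev = tk
    return ll


def ou_dense_loglik(t, y, s2, ell, r):
    """Reference O(n^3) evaluation with the full kernel matrix."""
    K = s2 * np.exp(-np.abs(t[:, None] - t[None, :]) / ell) + r * np.eye(len(t))
    _, logdet = np.linalg.slogdet(K)
    return -0.5 * (y @ np.linalg.solve(K, y) + logdet + len(t) * np.log(2.0 * np.pi))
```

Both functions should agree up to floating-point error on sorted inputs; the filter touches each observation once, which is where the linear cost comes from.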

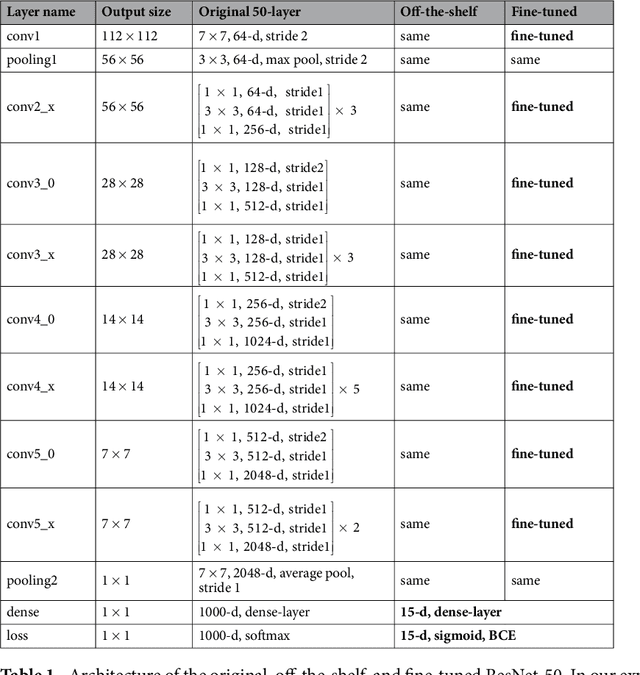

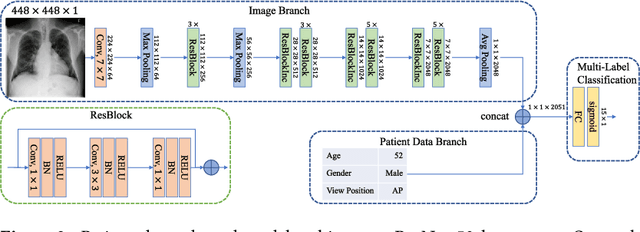

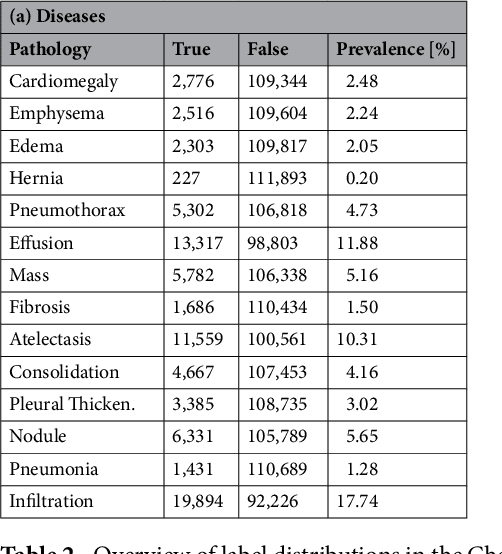

Comparison of Deep Learning Approaches for Multi-Label Chest X-Ray Classification

Mar 06, 2018

Abstract: The increased availability of X-ray image archives (e.g. the ChestX-ray14 dataset from the NIH Clinical Center) has triggered a growing interest in deep learning techniques. To provide better insight into the different approaches and their applications to chest X-ray classification, we investigate a powerful network architecture in detail: the ResNet-50. Building on prior work in this domain, we consider transfer learning with and without fine-tuning as well as the training of a dedicated X-ray network from scratch. To leverage the high spatial resolution of X-ray data, we also include an extended ResNet-50 architecture, and a network integrating non-image data (patient age, gender and acquisition type) in the classification process. In a systematic evaluation, using 5-fold re-sampling and a multi-label loss function, we evaluate the performance of the different approaches for pathology classification by ROC statistics and analyze differences between the classifiers using rank correlation. We observe a considerable spread in the achieved performance and conclude that the X-ray-specific ResNet-50 integrating non-image data yields the best overall results.

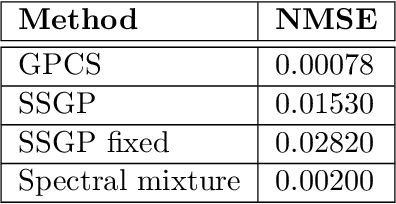

Scalable Log Determinants for Gaussian Process Kernel Learning

Nov 09, 2017

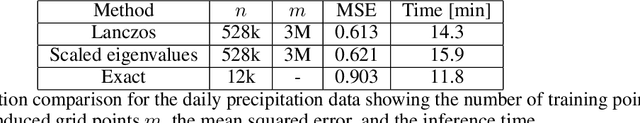

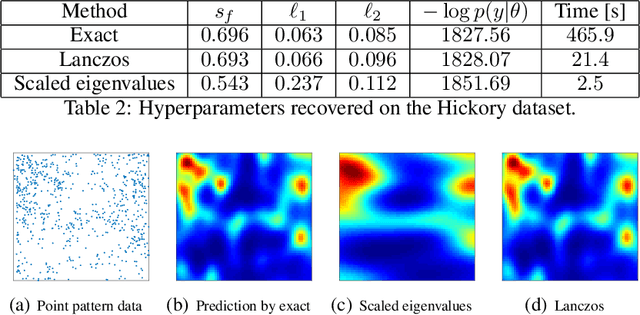

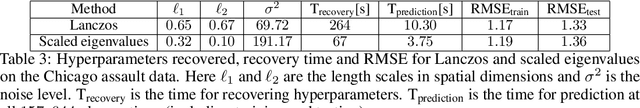

Abstract: For applications as varied as Bayesian neural networks, determinantal point processes, elliptical graphical models, and kernel learning for Gaussian processes (GPs), one must compute a log determinant of an $n \times n$ positive definite matrix, and its derivatives, leading to prohibitive $\mathcal{O}(n^3)$ computations. We propose novel $\mathcal{O}(n)$ approaches to estimating these quantities from only fast matrix vector multiplications (MVMs). These stochastic approximations are based on Chebyshev, Lanczos, and surrogate models, and converge quickly even for kernel matrices that have challenging spectra. We leverage these approximations to develop a scalable Gaussian process approach to kernel learning. We find that Lanczos is generally superior to Chebyshev for kernel learning, and that a surrogate approach can be highly efficient and accurate with popular kernels.

* Appears at Advances in Neural Information Processing Systems 30 (NIPS), 2017
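As an illustration of the MVM-only idea in the abstract above, here is a minimal sketch of a Lanczos-based stochastic log determinant estimate (Hutchinson probes combined with Gauss quadrature on the Lanczos tridiagonal matrix). It is not the authors' implementation: the function names, the Rademacher probes, and the full reorthogonalization step are assumptions chosen for clarity, and the overall cost is only linear in $n$ when the supplied `matvec` itself is fast.

```python
import numpy as np


def lanczos(matvec, z, num_steps):
    """Lanczos recurrence started at z; returns diagonal and off-diagonal of T."""
    q = z / np.linalg.norm(z)
    q_prev = np.zeros_like(q)
    basis, alphas, betas = [q], [], []
    beta = 0.0
    for _ in range(num_steps):
        w = matvec(q) - beta * q_prev
        alpha = q @ w
        w = w - alpha * q
        for v in basis:                 # full reorthogonalization (for stability)
            w = w - (v @ w) * v
        alphas.append(alpha)
        beta = np.linalg.norm(w)
        if beta < 1e-10:                # invariant subspace found: stop early
            break
        betas.append(beta)
        q_prev, q = q, w / beta
        basis.append(q)
    return np.array(alphas), np.array(betas[: len(alphas) - 1])


def slq_logdet(matvec, n, num_probes=30, num_steps=30, seed=0):
    """Estimate log det(A) for symmetric positive definite A using only MVMs."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(num_probes):
        z = rng.choice([-1.0, 1.0], size=n)         # Rademacher probe, ||z||^2 = n
        alphas, betas = lanczos(matvec, z, num_steps)
        T = np.diag(alphas) + np.diag(betas, 1) + np.diag(betas, -1)
        theta, V = np.linalg.eigh(T)                # quadrature nodes and vectors
        total += n * np.sum(V[0, :] ** 2 * np.log(theta))  # approx. z^T log(A) z
    return total / num_probes
```

A call such as `slq_logdet(lambda v: A @ v, n=A.shape[0])` can be compared against `np.linalg.slogdet(A)` on a small matrix; with a dense `A` each MVM costs $\mathcal{O}(n^2)$, so the speed-up only materializes with structured or otherwise fast MVMs.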

Can Pretrained Neural Networks Detect Anatomy?

Dec 18, 2015

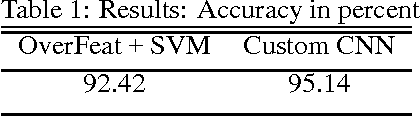

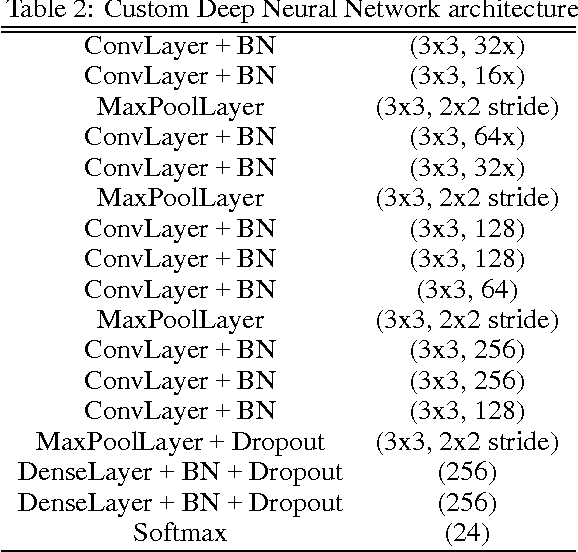

Abstract: Convolutional neural networks have demonstrated outstanding empirical results in computer vision and speech recognition tasks where labeled training data is abundant. In medical imaging, there is a huge variety of possible imaging modalities and contrasts, and annotated data is usually very scarce. We present two approaches to deal with this challenge: a network pretrained in a different domain with abundant data that is used as a feature extractor, with a subsequent classifier trained on a small target dataset; and a deep architecture trained with heavy augmentation and equipped with sophisticated regularization methods. We test the approaches on a corpus of X-ray images to design an anatomy detection system.