Liran Goshen

Non Parametric Data Augmentations Improve Deep-Learning based Brain Tumor Segmentation

Nov 25, 2021

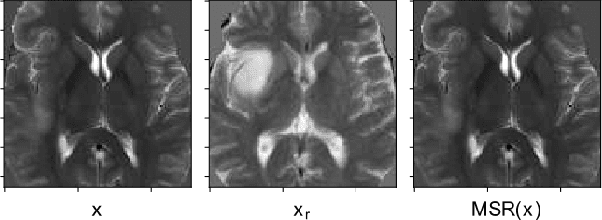

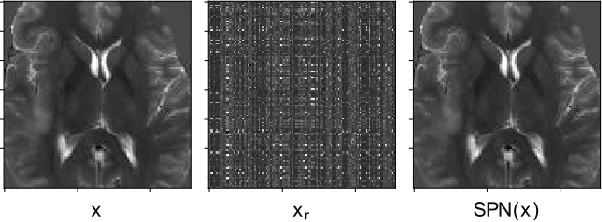

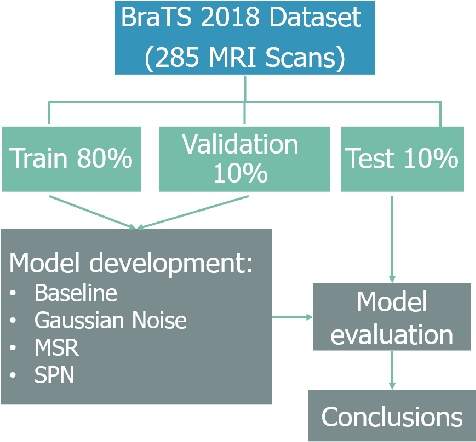

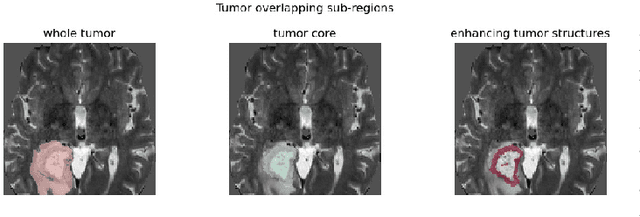

Abstract: Automatic brain tumor segmentation from Magnetic Resonance Imaging (MRI) data plays an important role in assessing tumor response to therapy and in personalized treatment stratification. Manual segmentation is tedious and subjective. Deep-learning-based algorithms for brain tumor segmentation have the potential to provide objective and fast tumor segmentation. However, training such algorithms requires large datasets, which are not always available. Data augmentation techniques may reduce the need for large datasets. However, current approaches are mostly parametric and may result in suboptimal performance. We introduce two non-parametric methods of data augmentation for brain tumor segmentation: mixed structure regularization (MSR) and shuffle pixels noise (SPN). We evaluated the added value of the MSR and SPN augmentations on the brain tumor segmentation (BraTS) 2018 challenge dataset, with the encoder-decoder nnU-Net architecture as the segmentation algorithm. Both MSR and SPN improve the nnU-Net segmentation accuracy compared to parametric Gaussian noise augmentation. The mean Dice score increased from 80% to 82%, with p-values of 0.0022 and 0.0028 when comparing MSR to parametric augmentation for the tumor core and whole tumor experiments, respectively. The proposed MSR and SPN augmentations have the potential to improve neural-network performance in other tasks as well.
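For readers unfamiliar with the metric reported above, the Dice score measures the overlap between a predicted and a reference segmentation mask as twice the intersection divided by the sum of the two mask sizes. The following is a minimal sketch of that standard definition, not the evaluation code used in the paper; the function name `dice_score` and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
from typing import Iterable


def dice_score(pred: Iterable[int], truth: Iterable[int]) -> float:
    """Dice overlap between two flattened binary masks (illustrative helper)."""
    pred_mask = [bool(p) for p in pred]
    truth_mask = [bool(t) for t in truth]
    if len(pred_mask) != len(truth_mask):
        raise ValueError("masks must have the same number of voxels")

    # Voxels labelled as tumor in both masks.
    overlap = sum(p and t for p, t in zip(pred_mask, truth_mask))
    total = sum(pred_mask) + sum(truth_mask)

    # Assumption: two empty masks count as perfect agreement.
    if total == 0:
        return 1.0
    return 2.0 * overlap / total


if __name__ == "__main__":
    # Toy masks, not data from the paper.
    print(dice_score([1, 1, 0, 0], [1, 0, 0, 0]))
```

A 3D volume would be flattened to a single sequence of voxel labels before being passed to this helper.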

Learning a sparse database for patch-based medical image segmentation

Jun 25, 2019

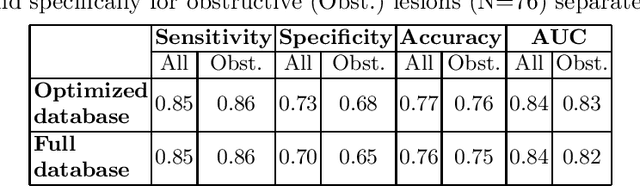

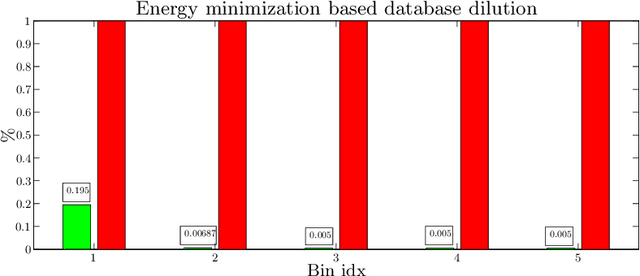

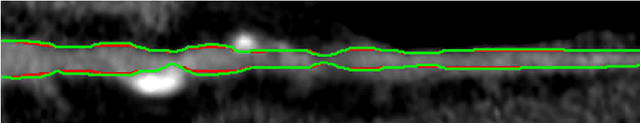

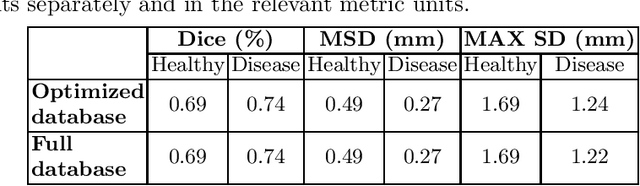

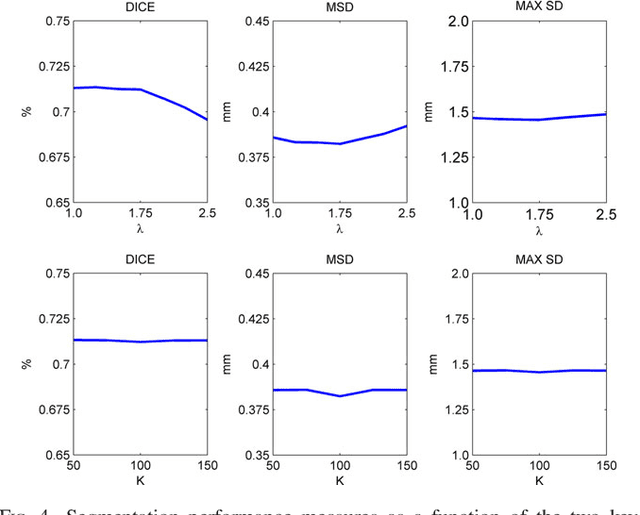

Abstract: We introduce a functional for learning an optimal database for patch-based image segmentation, with application to coronary lumen segmentation from coronary computed tomography angiography (CCTA) data. The proposed functional consists of fidelity, sparseness, and robustness-to-small-variations terms and their associated weights. Existing work addresses database optimization by prototype selection, aiming to optimize the database by either adding or removing prototypes according to a set of predefined rules. In contrast, we formulate the database optimization task as an energy minimization problem that can be solved using standard numerical tools. We apply the proposed database optimization functional to the task of optimizing a database for patch-based coronary lumen segmentation. Our experiments using the publicly available MICCAI 2012 coronary lumen segmentation challenge data show that optimizing the database using the proposed approach reduced the database size by 96% while maintaining the same level of lumen segmentation accuracy. Moreover, we show that the optimized database yields an improved specificity of CCTA-based fractional flow reserve (0.73 vs 0.7 for all lesions and 0.68 vs 0.65 for obstructive lesions) using a training set of 132 (76 obstructive) coronary lesions with invasively measured FFR as the reference.

Improving CCTA based lesions' hemodynamic significance assessment by accounting for partial volume modeling in automatic coronary lumen segmentation

Jun 24, 2019

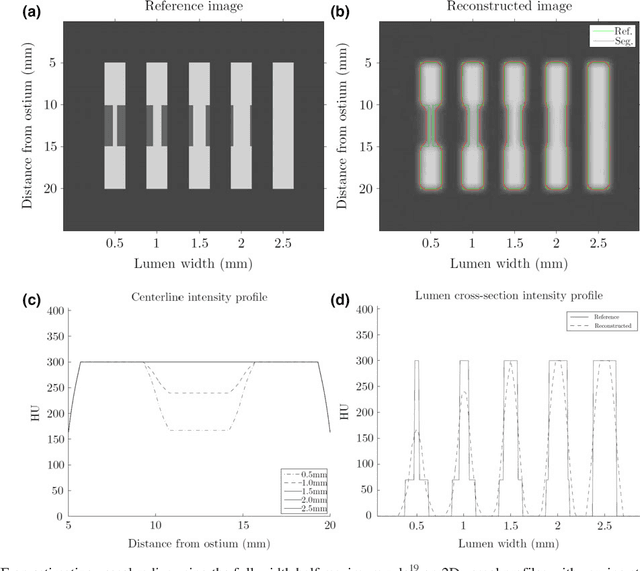

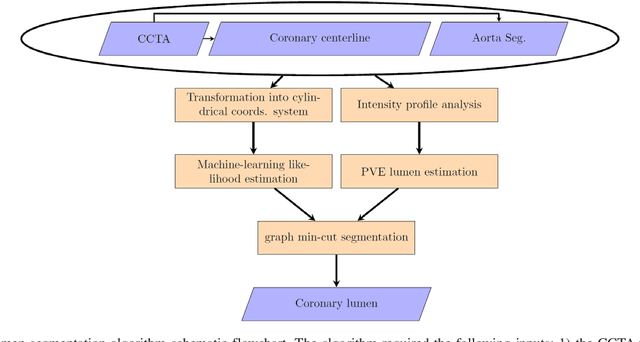

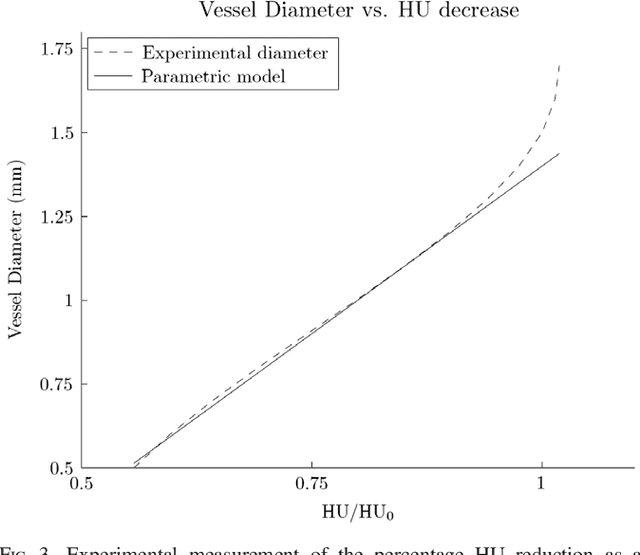

Abstract: Purpose: The goal of this study was to assess the potential added benefit of accounting for partial volume effects (PVE) in an automatic coronary lumen segmentation algorithm from coronary computed tomography angiography (CCTA). Materials and methods: We assessed the potential added value of PVE integration as part of the automatic coronary lumen segmentation algorithm by means of segmentation accuracy, using the MICCAI 2012 challenge framework, and by means of flow simulation overall accuracy, sensitivity, specificity, negative and positive predictive values, and the receiver operating characteristic (ROC) area under the curve (AUC). We also evaluated the potential benefit of accounting for PVE in automatic segmentation for flow simulation for lesions that were diagnosed as obstructive based on CCTA, which could have indicated a need for an invasive exam and revascularization. Results: Our segmentation algorithm improves the maximal surface distance error by ~39% compared to a previously published method on the 18 datasets from the MICCAI 2012 challenge, with comparable Dice and mean surface distance. Results with and without accounting for PVE were comparable. In contrast, integrating PVE analysis into an automatic coronary lumen segmentation algorithm improved the flow simulation specificity from 0.6 to 0.68 with the same sensitivity of 0.83. Also, accounting for PVE improved the area under the ROC curve for detecting hemodynamically significant CAD from 0.76 to 0.8 compared to automatic segmentation without PVE analysis, with an invasive FFR threshold of 0.8 as the reference standard. The improvement in the AUC was statistically significant (N=76, DeLong's test, p=0.012). Conclusion: Accounting for partial volume effects in automatic coronary lumen segmentation algorithms has the potential to improve the accuracy of CCTA-based hemodynamic assessment of coronary artery lesions.
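The sensitivity and specificity figures above come from comparing a simulated (CCTA-based) FFR value against the invasive FFR reference for each lesion. The sketch below shows how such figures can be computed in general. It assumes that a lesion counts as hemodynamically significant when FFR is at or below the 0.8 threshold (the abstract gives only the threshold, not the direction of the comparison). The helper names and the toy values are hypothetical and are not taken from the study.

```python
from typing import List, Sequence, Tuple


def is_significant(ffr_values: Sequence[float], threshold: float = 0.8) -> List[bool]:
    """Assumption: a lesion is significant when FFR <= threshold."""
    return [value <= threshold for value in ffr_values]


def sensitivity_specificity(
    predicted: Sequence[bool], reference: Sequence[bool]
) -> Tuple[float, float]:
    """Return (sensitivity, specificity) of predicted labels against a reference."""
    if len(predicted) != len(reference):
        raise ValueError("label sequences must have the same length")

    tp = sum(p and r for p, r in zip(predicted, reference))
    fn = sum((not p) and r for p, r in zip(predicted, reference))
    tn = sum((not p) and (not r) for p, r in zip(predicted, reference))
    fp = sum(p and (not r) for p, r in zip(predicted, reference))

    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference must contain both positive and negative lesions")
    return tp / (tp + fn), tn / (tn + fp)


if __name__ == "__main__":
    # Toy values for illustration only, not data from the study.
    invasive_ffr = [0.70, 0.75, 0.85, 0.90, 0.82]
    simulated_ffr = [0.72, 0.83, 0.78, 0.88, 0.91]
    sens, spec = sensitivity_specificity(
        is_significant(simulated_ffr), is_significant(invasive_ffr)
    )
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Raising specificity at a fixed sensitivity, as reported above, means fewer non-significant lesions are flagged as significant while the same share of truly significant lesions is still detected.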

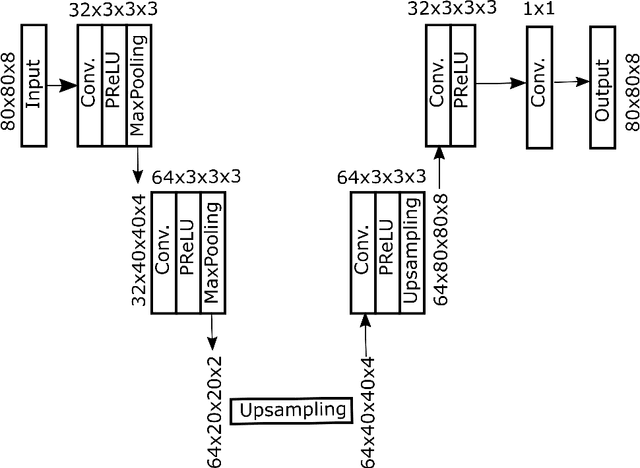

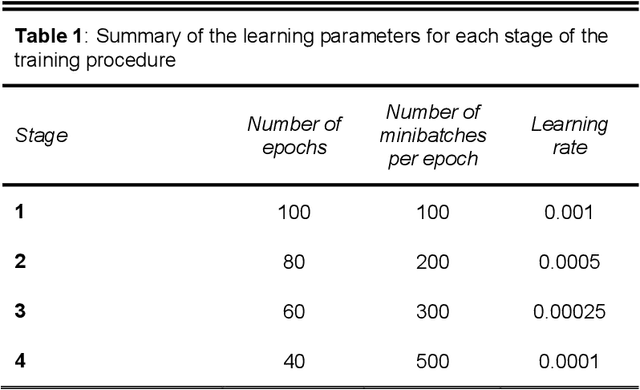

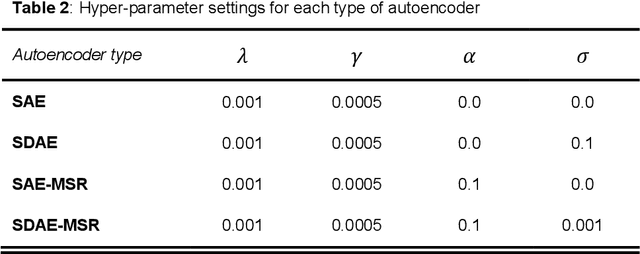

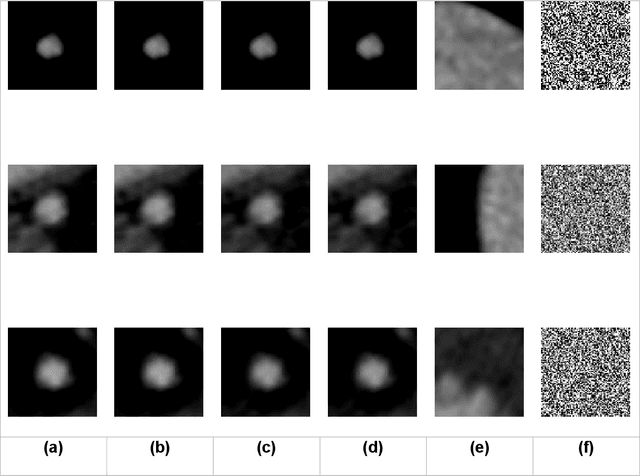

Unsupervised Abnormality Detection through Mixed Structure Regularization (MSR) in Deep Sparse Autoencoders

Feb 28, 2019

Abstract: Deep sparse auto-encoders with mixed structure regularization (MSR), in addition to an explicit sparsity regularization term and stochastic corruption of the input data with Gaussian noise, have the potential to improve unsupervised abnormality detection. Unsupervised abnormality detection based on identifying outliers using deep sparse auto-encoders is a very appealing approach for medical computer-aided detection systems, as it requires only healthy data for training rather than expert-annotated abnormalities. In the task of detecting coronary artery disease from Coronary Computed Tomography Angiography (CCTA), our results suggest that MSR has the potential to improve overall performance by 20-30% compared to deep sparse and denoising auto-encoders.
