Pal Maurovich-Horvat

Rapid quantification of COVID-19 pneumonia burden from computed tomography with convolutional LSTM networks

Mar 31, 2021

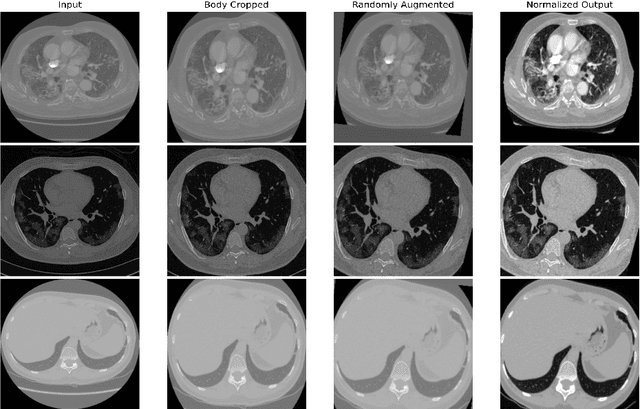

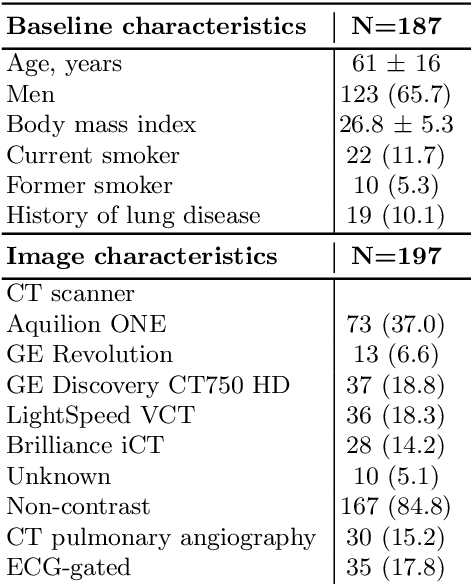

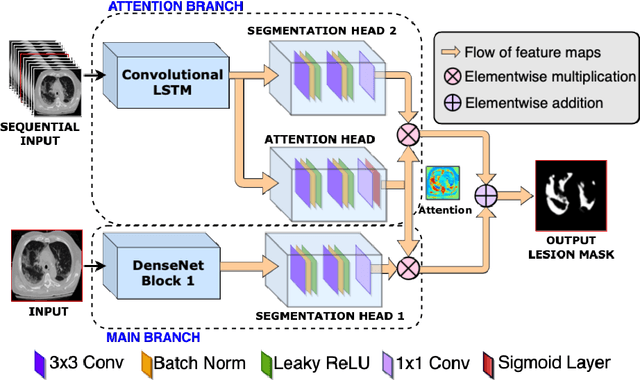

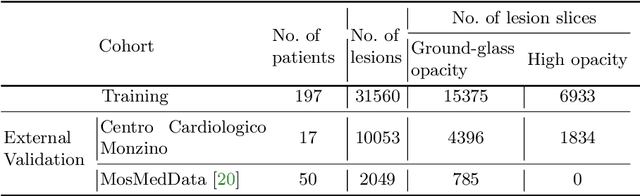

Abstract: Quantitative lung measures derived from computed tomography (CT) have been demonstrated to improve prognostication in coronavirus disease (COVID-19) patients, but they are not part of the clinical routine because the required manual segmentation of lung lesions is prohibitively time-consuming. We propose a new fully automated deep learning framework for rapid quantification of, and differentiation between, lung lesions in COVID-19 pneumonia from both contrast and non-contrast CT images using convolutional Long Short-Term Memory (ConvLSTM) networks. Using the expert annotations, model training was performed 5 times with separate hold-out sets using 5-fold cross-validation to segment ground-glass opacity and high opacity (including consolidation and pleural effusion). The performance of the method was evaluated on CT data sets from 197 patients with a positive reverse transcription polymerase chain reaction test result for SARS-CoV-2. Strong agreement between expert manual and automatic segmentation was obtained for lung lesions, with a Dice score coefficient of 0.876 $\pm$ 0.005 and excellent correlations of 0.978 and 0.981 for ground-glass opacity and high opacity volumes, respectively. In the external validation set of 67 patients, the Dice score coefficient was 0.767 $\pm$ 0.009, with excellent correlations of 0.989 and 0.996 for ground-glass opacity and high opacity volumes, respectively. Computations for a CT scan comprising 120 slices were performed in under 2 seconds on a personal computer equipped with an NVIDIA Titan RTX graphics processing unit. Therefore, our deep learning-based method allows rapid, fully automated quantitative measurement of pneumonia burden from CT and may generate results with an accuracy similar to that of expert readers.
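The agreement metric reported above, the Dice score coefficient, compares two binary masks as twice their overlap divided by their combined size. The following is a minimal sketch of that standard definition, together with a voxel-count volume estimate; it is not the authors' code, and the function names, the `voxel_spacing_mm` parameter, and the convention of returning 1.0 for two empty masks are assumptions made for illustration.

```python
import numpy as np


def dice_coefficient(pred_mask, true_mask):
    """Dice = 2 * |A ∩ B| / (|A| + |B|) for two binary masks of equal shape."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    if pred.shape != true.shape:
        raise ValueError("masks must have the same shape")
    total = pred.sum() + true.sum()
    if total == 0:
        # Assumption: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * np.logical_and(pred, true).sum() / total


def lesion_volume_ml(mask, voxel_spacing_mm):
    """Volume of a binary mask in millilitres, given voxel spacing in mm."""
    voxel_mm3 = float(np.prod(voxel_spacing_mm))
    return float(np.asarray(mask, dtype=bool).sum()) * voxel_mm3 / 1000.0


# Toy 2x2 example: one of the two predicted voxels overlaps the reference.
pred = np.array([[1, 1], [0, 0]])
true = np.array([[1, 0], [0, 0]])
dice = dice_coefficient(pred, true)
volume = lesion_volume_ml(np.ones((2, 2, 1)), (1.0, 1.0, 2.5))
```

Per-patient volumes computed this way for the manual and automatic masks could then be compared with a correlation coefficient, for example via `np.corrcoef`.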

Learning a sparse database for patch-based medical image segmentation

Jun 25, 2019

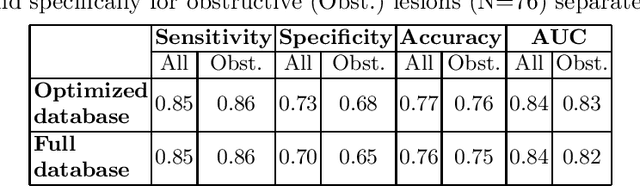

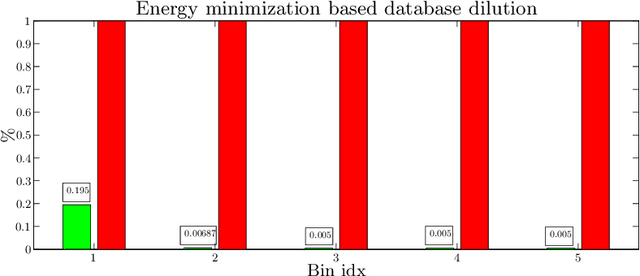

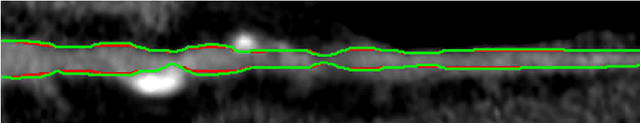

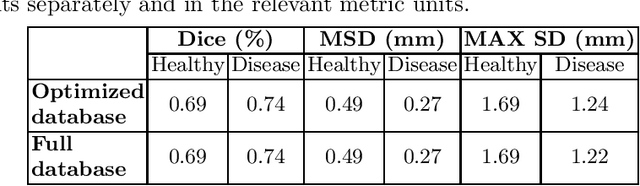

Abstract: We introduce a functional for learning an optimal database for patch-based image segmentation, with application to coronary lumen segmentation from coronary computed tomography angiography (CCTA) data. The proposed functional consists of fidelity, sparseness, and robustness-to-small-variations terms and their associated weights. Existing work addresses database optimization by prototype selection, aiming to optimize the database by either adding or removing prototypes according to a set of predefined rules. In contrast, we formulate the database optimization task as an energy minimization problem that can be solved using standard numerical tools. We apply the proposed database optimization functional to the task of optimizing a database for patch-based coronary lumen segmentation. Our experiments using the publicly available MICCAI 2012 coronary lumen segmentation challenge data show that optimizing the database using the proposed approach reduced database size by 96% while maintaining the same level of lumen segmentation accuracy. Moreover, we show that the optimized database yields an improved specificity of CCTA-based fractional flow reserve (FFR) (0.73 vs 0.7 for all lesions and 0.68 vs 0.65 for obstructive lesions) using a training set of 132 (76 obstructive) coronary lesions with invasively measured FFR as the reference.
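Specificity, the metric compared in the last sentence above, is the fraction of reference-negative lesions that the method also labels negative: TN / (TN + FP). A minimal sketch of that standard definition follows; the function name and the boolean per-lesion labels are hypothetical, and the abstract does not state how lesions were thresholded into positive and negative.

```python
def specificity(predicted_positive, reference_positive):
    """Return TN / (TN + FP) over paired per-lesion boolean labels."""
    true_neg = 0
    false_pos = 0
    for pred, ref in zip(predicted_positive, reference_positive):
        if ref:
            # Reference-positive lesions do not enter the specificity.
            continue
        if pred:
            false_pos += 1
        else:
            true_neg += 1
    if true_neg + false_pos == 0:
        raise ValueError("no reference-negative lesions to evaluate")
    return true_neg / (true_neg + false_pos)


# Toy example (made-up labels): three reference-negative lesions,
# one of which is wrongly flagged as positive.
predicted = [True, False, False, True]
reference = [False, False, False, True]
spec = specificity(predicted, reference)
```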
