Axel Saalbach

Bottom-Up Instance Segmentation of Catheters for Chest X-Rays

Dec 06, 2023

Abstract:Chest X-ray (CXR) is frequently employed in emergency departments and intensive care units to verify the proper placement of central lines and tubes and to rule out related complications. The automation of the X-ray reading process can be a valuable support tool for non-specialist technicians and minimize reporting delays due to non-availability of experts. While existing solutions for automated catheter segmentation and malposition detection show promising results, the disentanglement of individual catheters remains an open challenge, especially in complex cases where multiple devices appear superimposed in the X-ray projection. Moreover, conventional top-down instance segmentation methods are ineffective on such thin and long devices that often extend through the entire image. In this paper, we propose a deep learning approach based on associative embeddings for catheter instance segmentation, able to overcome those limitations and effectively handle device intersections.
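The abstract names associative embeddings but does not give a loss, so the following is only a minimal sketch of the commonly used pull/push formulation, assuming one scalar tag per pixel. The function name, the dictionary layout, and `sigma` are illustrative assumptions, not the paper's implementation.

```python
import math


def associative_embedding_loss(tags_by_instance, sigma=1.0):
    """Return (pull, push) for scalar tags grouped by a hypothetical catheter id.

    pull: mean squared distance of each tag to its own instance mean.
    push: mean Gaussian similarity between the means of different instances.
    """
    means = {k: sum(v) / len(v) for k, v in tags_by_instance.items()}

    # Pull term: tags of the same catheter should agree with each other.
    pull = sum(
        sum((t - means[k]) ** 2 for t in tags) / len(tags)
        for k, tags in tags_by_instance.items()
    ) / len(means)

    # Push term: mean tags of different catheters should be far apart.
    ids = list(means)
    pairs = [(a, b) for i, a in enumerate(ids) for b in ids[i + 1:]]
    push = 0.0
    if pairs:
        push = sum(
            math.exp(-((means[a] - means[b]) ** 2) / (2 * sigma ** 2))
            for a, b in pairs
        ) / len(pairs)
    return pull, push
```

Because pixels are grouped by tag similarity rather than by bounding box, two catheters that cross in the image can still be separated as long as their tags differ.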

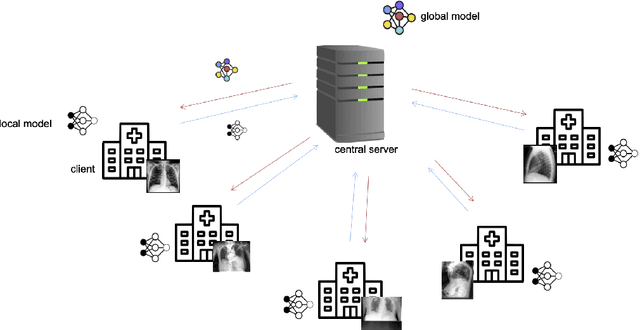

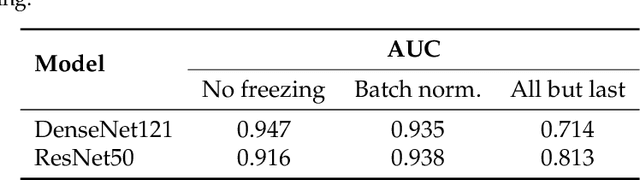

Defending against Reconstruction Attacks through Differentially Private Federated Learning for Classification of Heterogeneous Chest X-Ray Data

May 06, 2022

Abstract:Privacy regulations and the physical distribution of heterogeneous data are often primary concerns for the development of deep learning models in a medical context. This paper evaluates the feasibility of differentially private federated learning for chest X-ray classification as a defense against privacy attacks on DenseNet121 and ResNet50 network architectures. We simulated a federated environment by distributing images from the public CheXpert and Mendeley chest X-ray datasets unevenly among 36 clients. Both non-private baseline models achieved an area under the ROC curve (AUC) of 0.94 on the binary classification task of detecting the presence of a medical finding. We demonstrate that both model architectures are vulnerable to privacy violation by applying image reconstruction attacks to local model updates from individual clients. The attack was particularly successful during later training stages. To mitigate the risk of privacy breach, we integrated Rényi differential privacy with a Gaussian noise mechanism into local model training. We evaluate model performance and attack vulnerability for privacy budgets $\epsilon \in \{1, 3, 6, 10\}$. The DenseNet121 achieved the best utility-privacy trade-off with an AUC of 0.94 for $\epsilon = 6$. Model performance deteriorated slightly for individual clients compared to the non-private baseline. The ResNet50 only reached an AUC of 0.76 in the same privacy setting. Its performance was inferior to that of the DenseNet121 for all considered privacy constraints, suggesting that the DenseNet121 architecture is more robust to differentially private training.
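To illustrate what a Gaussian noise mechanism in local training typically looks like, here is a minimal sketch of the standard clip-and-noise gradient step. It assumes per-example gradients are available as plain lists. The names `max_norm` and `noise_multiplier` are illustrative, and the Rényi privacy accounting that maps the noise level to $\epsilon$ is not shown.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]


def private_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    dim = len(per_example_grads[0])
    total = [0.0] * dim
    for grad in per_example_grads:
        clipped = clip_gradient(grad, max_norm)
        total = [t + c for t, c in zip(total, clipped)]

    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [n / len(per_example_grads) for n in noisy]


# Usage: a client would apply this update locally before sending it to the server.
update = private_mean_gradient(
    [[3.0, 4.0], [0.3, 0.4]], max_norm=1.0, noise_multiplier=1.1,
    rng=random.Random(0),
)
```

Clipping bounds how much any single image can influence the update, which is what makes the added noise meaningful as a defense against reconstruction from client updates.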

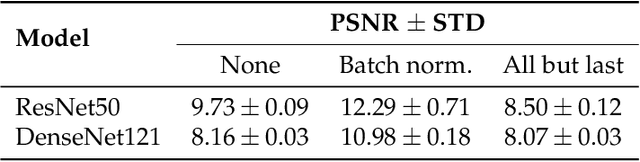

Tubular Shape Aware Data Generation for Semantic Segmentation in Medical Imaging

Oct 02, 2020

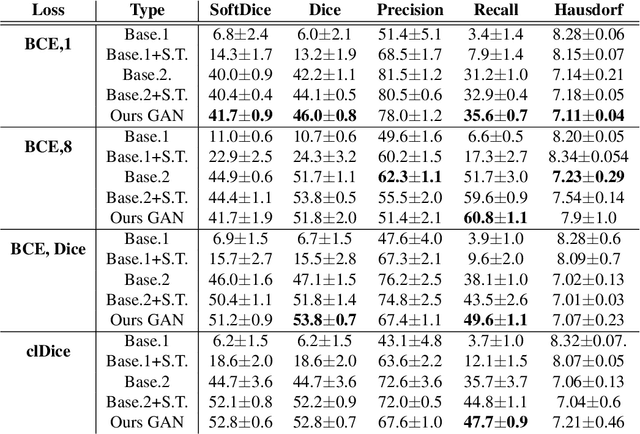

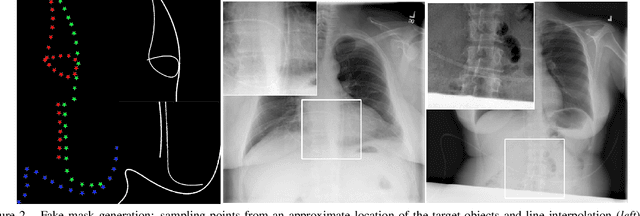

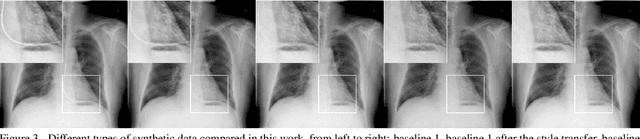

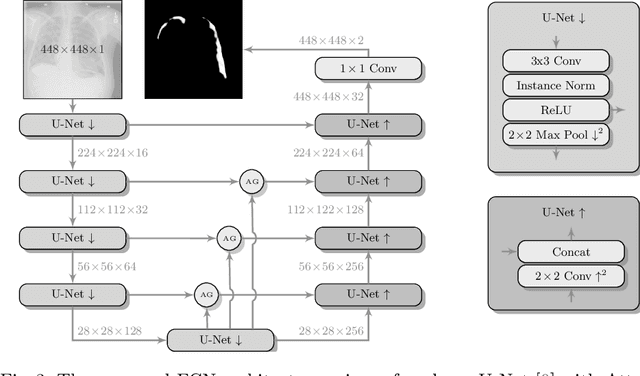

Abstract:Chest X-ray is one of the most widespread examinations of the human body. In interventional radiology, its use is frequently associated with the need to visualize various tube-like objects, such as puncture needles, guiding sheaths, wires, and catheters. Detection and precise localization of these tube-like objects in X-ray images is, therefore, of utmost value, catalyzing the development of accurate target-specific segmentation algorithms. As in other medical imaging tasks, the manual pixel-wise annotation of the tubes is a resource-consuming process. In this work, we aim to alleviate the lack of annotated images by using artificial data. Specifically, we present an approach for synthetic data generation of tube-shaped objects, with a generative adversarial network being regularized with a prior-shape constraint. Our method eliminates the need for paired image-mask data and requires only a weakly-labeled dataset (10 to 20 images) to reach the accuracy of the fully-supervised models. We report the applicability of the approach for the task of segmenting tubes and catheters in X-ray images, although the results should also hold for other imaging modalities.

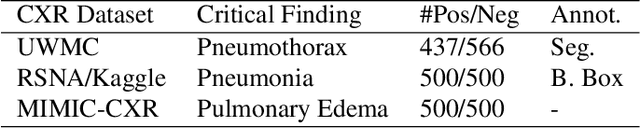

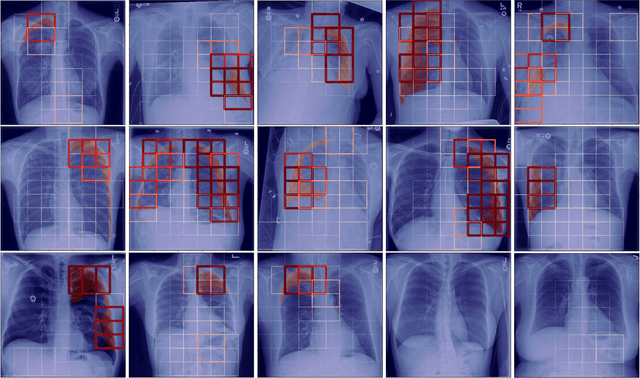

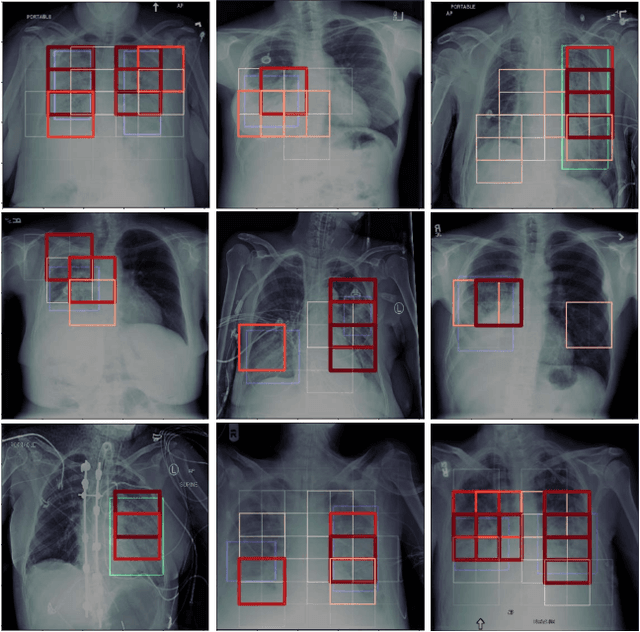

Localization of Critical Findings in Chest X-Ray without Local Annotations Using Multi-Instance Learning

Jan 23, 2020

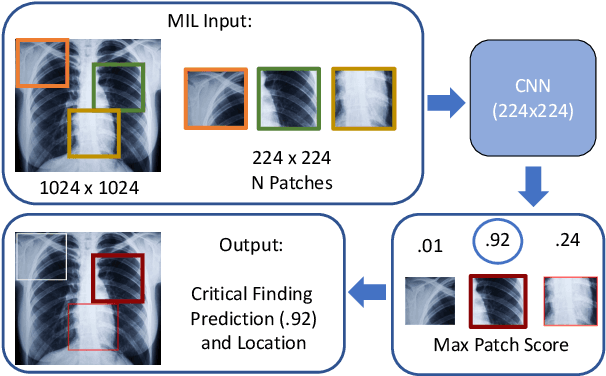

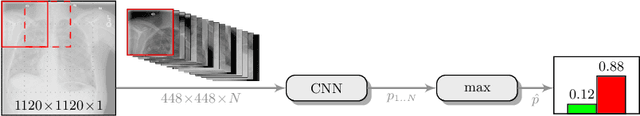

Abstract:The automatic detection of critical findings in chest X-rays (CXR), such as pneumothorax, is important for assisting radiologists in their clinical workflow, for example by triaging time-sensitive cases and screening for incidental findings. While deep learning (DL) models have become a promising predictive technology with near-human accuracy, they commonly suffer from a lack of explainability, which is an important aspect for clinical deployment of DL models in the highly regulated healthcare industry. For example, localizing critical findings in an image is useful for explaining the predictions of DL classification algorithms. While there have been a host of joint classification and localization methods for computer vision, the state-of-the-art DL models require locally annotated training data in the form of pixel-level labels or bounding box coordinates. In the medical domain, this requires an expensive amount of manual annotation by medical experts for each critical finding. This requirement becomes a major barrier for training models that can rapidly scale to various findings. In this work, we address these shortcomings with an interpretable DL algorithm based on multi-instance learning that jointly classifies and localizes critical findings in CXR without the need for local annotations. We show competitive classification results on three different critical findings (pneumothorax, pneumonia, and pulmonary edema) from three different CXR datasets.
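The idea of getting localization for free from image-level labels can be sketched as follows. This is a minimal illustration that assumes the image is split into a grid of patches, each patch has a predicted probability, and the image-level score is obtained by max pooling. The pooling choice, the function name, and `threshold` are assumptions, since the abstract does not specify them.

```python
def mil_classify_and_localize(patch_probs, threshold=0.5):
    """Classify an image from per-patch probabilities and return the top patch.

    patch_probs: 2D list (rows x cols) of per-patch finding probabilities.
    Returns (is_positive, image_probability, (row, col) of the strongest patch).
    """
    best_prob, best_pos = -1.0, None
    for r, row in enumerate(patch_probs):
        for c, p in enumerate(row):
            if p > best_prob:
                best_prob, best_pos = p, (r, c)
    # Max pooling: the image is positive if at least one patch is positive.
    return best_prob >= threshold, best_prob, best_pos


# Usage: only the image-level label is needed for training, yet the
# strongest patch indicates where the finding is likely located.
is_positive, prob, location = mil_classify_and_localize(
    [[0.1, 0.2], [0.9, 0.3]]
)
```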

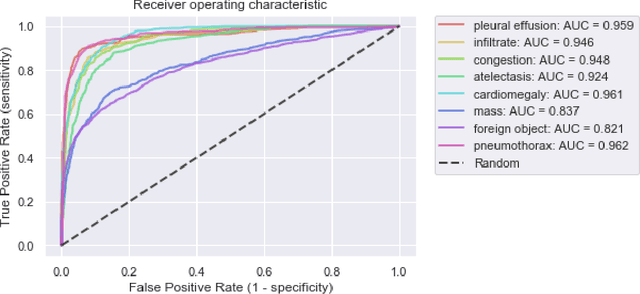

Intelligent Chest X-ray Worklist Prioritization by CNNs: A Clinical Workflow Simulation

Jan 23, 2020

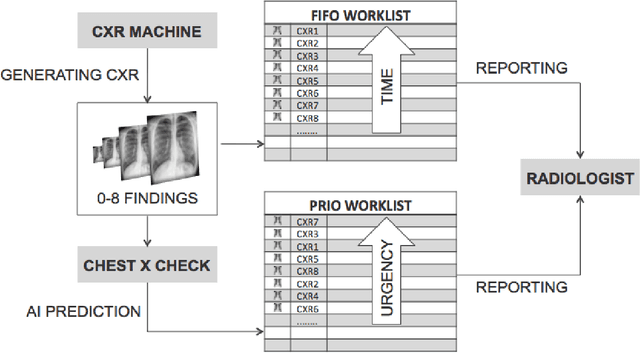

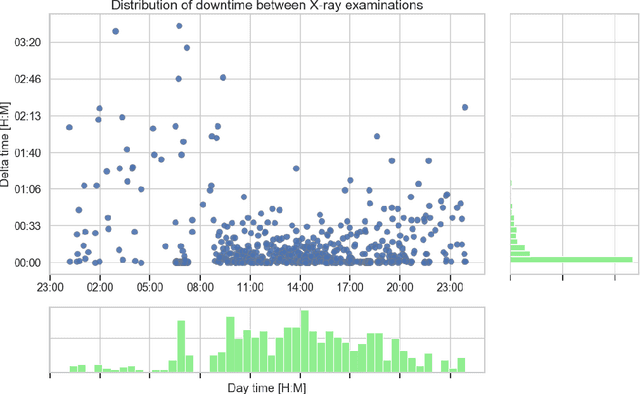

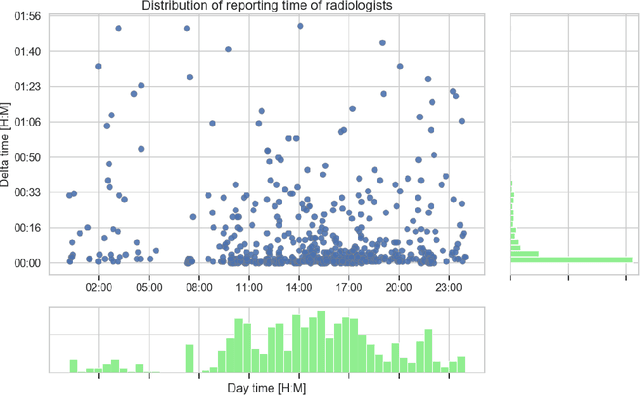

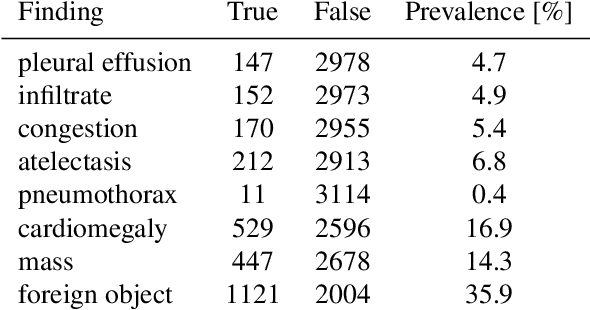

Abstract:Growing radiologic workload and shortage of medical experts worldwide often lead to delayed or even unreported examinations, which poses a risk for patient safety in case of unrecognized findings in chest radiographs (CXR). The aim was to evaluate whether deep learning algorithms for an intelligent worklist prioritization might optimize the radiology workflow and reduce report turnaround times (RTAT) for critical findings, instead of reporting according to the First-In-First-Out-Principle (FIFO). Furthermore, we investigated the problem of false negative prediction in the context of worklist prioritization. To assess the potential benefit of an intelligent worklist prioritization, three different workflow simulations based on our analysis were run and RTAT were compared: FIFO (non-prioritized), Prio1 (prioritized) and Prio2 (prioritized, with RTATmax.). Examination triage was performed by "ChestXCheck", a convolutional neural network, classifying eight different pathological findings ranked in descending order of urgency: pneumothorax, pleural effusion, infiltrate, congestion, atelectasis, cardiomegaly, mass and foreign object. The average RTAT for all critical findings was significantly reduced by both Prio simulations compared to the FIFO simulation (e.g. pneumothorax: 32.1 min vs. 69.7 min; p < 0.0001), while the average RTAT for normal examinations increased at the same time (69.5 min vs. 90.0 min; p < 0.0001). Both effects were slightly lower at Prio2 than at Prio1, whereas the maximum RTAT at Prio1 was substantially higher for all classes, due to individual examinations rated false negative. Our Prio2 simulation demonstrated that intelligent worklist prioritization by deep learning algorithms reduces average RTAT for critical findings in chest X-ray while maintaining a similar maximum RTAT as FIFO.
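The three reading policies can be sketched as a toy single-reader simulation. The abstract does not describe the simulation itself, so everything below is an assumption: a fixed reporting time per exam, an integer urgency rank (lower is more urgent), and an interpretation of RTATmax as a waiting-time cap (`max_wait`) after which the oldest exam is read next.

```python
def pick(waiting, exams, clock, policy, max_wait):
    """Choose the index of the next exam to report."""
    oldest = min(waiting, key=lambda i: exams[i][0])
    if policy == "fifo":
        return oldest
    # Prio2-style safeguard: an exam that waited too long goes first.
    if max_wait is not None and clock - exams[oldest][0] >= max_wait:
        return oldest
    # Prio1-style choice: most urgent first, ties broken by arrival time.
    return min(waiting, key=lambda i: (exams[i][1], exams[i][0]))


def simulate(exams, report_minutes, policy="fifo", max_wait=None):
    """exams: list of (arrival_minute, urgency_rank). Returns RTAT per exam."""
    order = sorted(range(len(exams)), key=lambda i: exams[i][0])
    waiting, turnaround = [], [0.0] * len(exams)
    clock, nxt = 0.0, 0
    while nxt < len(order) or waiting:
        # If the reader is idle, jump to the next arrival.
        if not waiting and exams[order[nxt]][0] > clock:
            clock = exams[order[nxt]][0]
        while nxt < len(order) and exams[order[nxt]][0] <= clock:
            waiting.append(order[nxt])
            nxt += 1
        chosen = pick(waiting, exams, clock, policy, max_wait)
        waiting.remove(chosen)
        clock += report_minutes
        turnaround[chosen] = clock - exams[chosen][0]
    return turnaround


# Two normal exams (rank 8) and one predicted pneumothorax (rank 0).
exams = [(0, 8), (0, 8), (1, 0)]
fifo = simulate(exams, 10, policy="fifo")
prio1 = simulate(exams, 10, policy="prio")
prio2 = simulate(exams, 10, policy="prio", max_wait=5)
```

In this toy run prioritization shortens the turnaround of the urgent exam at the cost of one normal exam, and the waiting-time cap pulls the schedule back toward FIFO, which mirrors the trade-off described in the abstract.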

Continual Learning for Domain Adaptation in Chest X-ray Classification

Jan 16, 2020

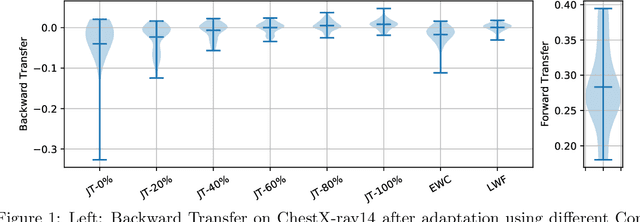

Abstract:Over the last years, Deep Learning has been successfully applied to a broad range of medical applications. Especially in the context of chest X-ray classification, results have been reported which are on par with, or even superior to, experienced radiologists. Despite this success in controlled experimental environments, it has been noted that the ability of Deep Learning models to generalize to data from a new domain (with potentially different tasks) is often limited. In order to address this challenge, we investigate techniques from the field of Continual Learning (CL) including Joint Training (JT), Elastic Weight Consolidation (EWC) and Learning Without Forgetting (LWF). Using the ChestX-ray14 and the MIMIC-CXR datasets, we demonstrate empirically that these methods provide promising options to improve the performance of Deep Learning models on a target domain and to effectively mitigate catastrophic forgetting for the source domain. To this end, the best overall performance was obtained using JT, while for LWF competitive results could be achieved - even without accessing data from the source domain.
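Of the three techniques, EWC is the easiest to show in a few lines. The sketch below uses the standard quadratic penalty that is added to the target-domain loss. Parameters are flattened into plain lists, and the names `anchor_params`, `fisher`, and `strength` are illustrative assumptions rather than the paper's code.

```python
def ewc_penalty(params, anchor_params, fisher, strength):
    """Quadratic EWC penalty: 0.5 * strength * sum_i F_i * (theta_i - theta*_i)^2.

    params:        current weights while training on the target domain.
    anchor_params: weights after training on the source domain.
    fisher:        per-weight importance (diagonal Fisher information estimate).
    """
    return 0.5 * strength * sum(
        f * (p - a) ** 2 for p, a, f in zip(params, anchor_params, fisher)
    )


# Usage: the penalty is added to the loss computed on target-domain data.
target_loss = 0.7  # hypothetical value for illustration
total_loss = target_loss + ewc_penalty(
    [1.0, 2.0], [0.0, 2.0], [2.0, 5.0], strength=4.0
)
```

Weights with high importance for the source domain are held close to their old values, which is how the penalty counteracts catastrophic forgetting without replaying source data.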

Deep Learning for Pneumothorax Detection and Localization in Chest Radiographs

Jul 16, 2019

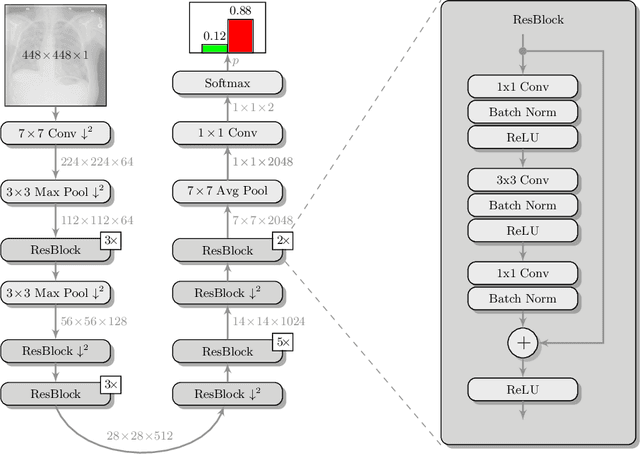

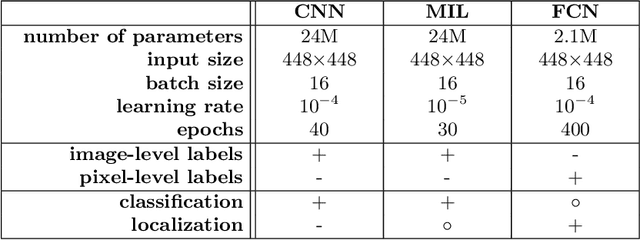

Abstract:Pneumothorax is a critical condition that requires timely communication and immediate action. In order to prevent significant morbidity or patient death, early detection is crucial. For the task of pneumothorax detection, we study the characteristics of three different deep learning techniques: (i) convolutional neural networks, (ii) multiple-instance learning, and (iii) fully convolutional networks. We perform a five-fold cross-validation on a dataset consisting of 1003 chest X-ray images. ROC analysis yields AUCs of 0.96, 0.93, and 0.92 for the three methods, respectively. We review the classification and localization performance of these approaches as well as an ensemble of the three aforementioned techniques.

When does Bone Suppression and Lung Field Segmentation Improve Chest X-Ray Disease Classification?

Oct 17, 2018

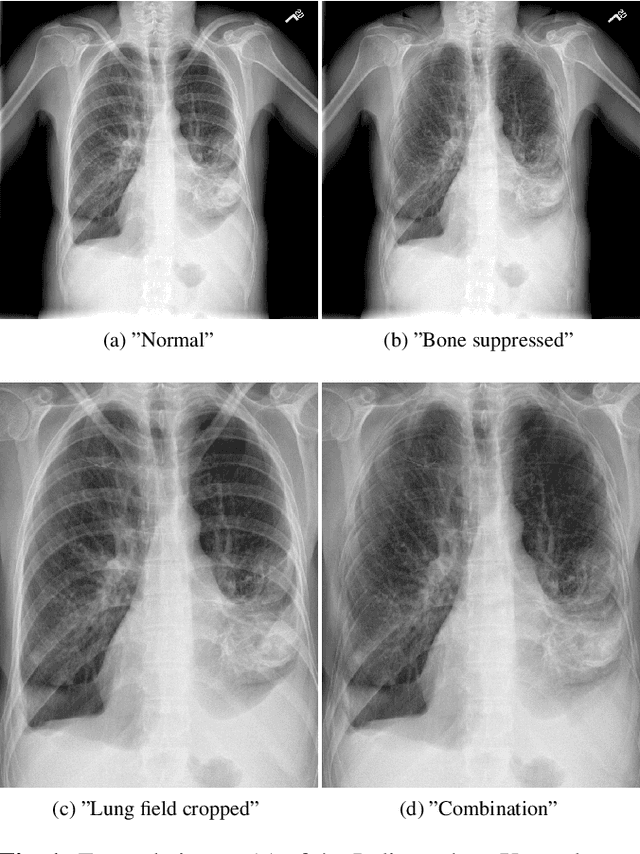

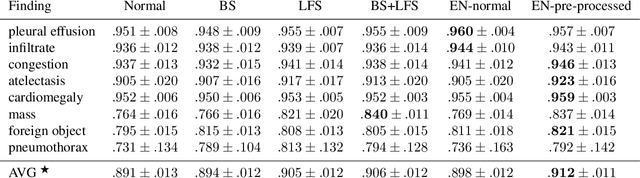

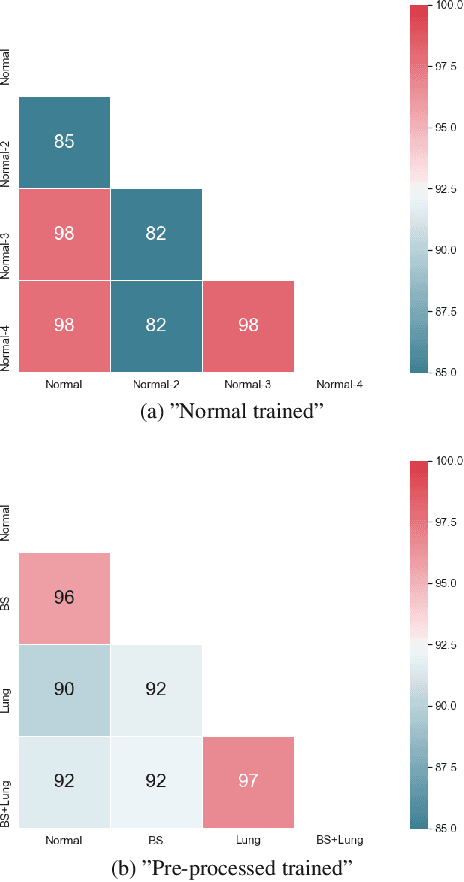

Abstract:Chest radiography is the most common clinical examination type. To improve the quality of patient care and to reduce workload, methods for automatic pathology classification have been developed. In this contribution we investigate the usefulness of two advanced image pre-processing techniques, initially developed for image reading by radiologists, for the performance of Deep Learning methods. First, we use bone suppression, an algorithm to artificially remove the rib cage. Secondly, we employ an automatic lung field detection to crop the image to the lung area. Furthermore, we consider the combination of both in the context of an ensemble approach. In a five-times re-sampling scheme, we use Receiver Operating Characteristic (ROC) statistics to evaluate the effect of the pre-processing approaches. Using a Convolutional Neural Network (CNN), optimized for X-ray analysis, we achieve a good performance with respect to all pathologies on average. Superior results are obtained for selected pathologies when using pre-processing, e.g. for mass the area under the ROC curve increased by 9.95%. The ensemble with pre-processed trained models yields the best overall results.

Comparison of Deep Learning Approaches for Multi-Label Chest X-Ray Classification

Mar 06, 2018

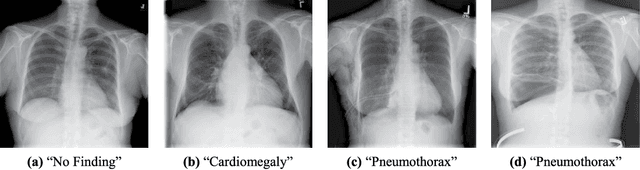

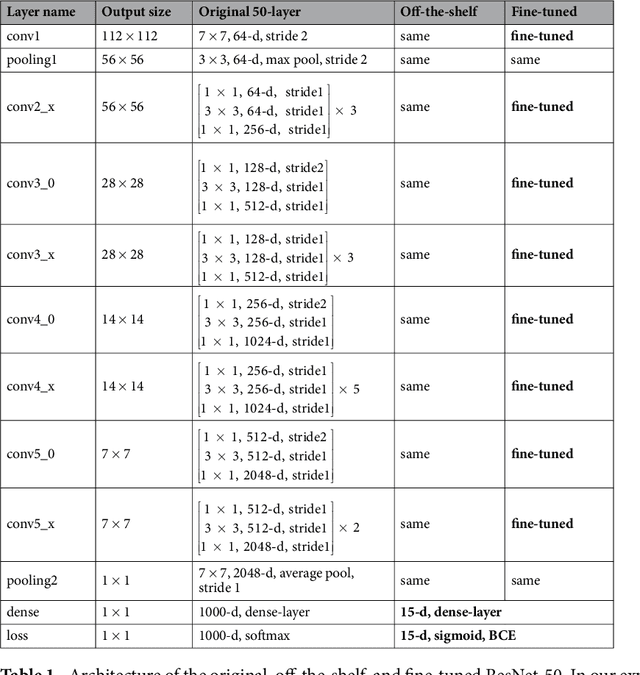

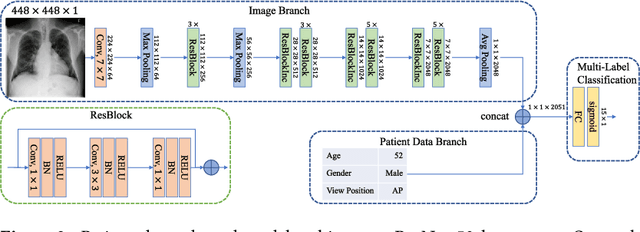

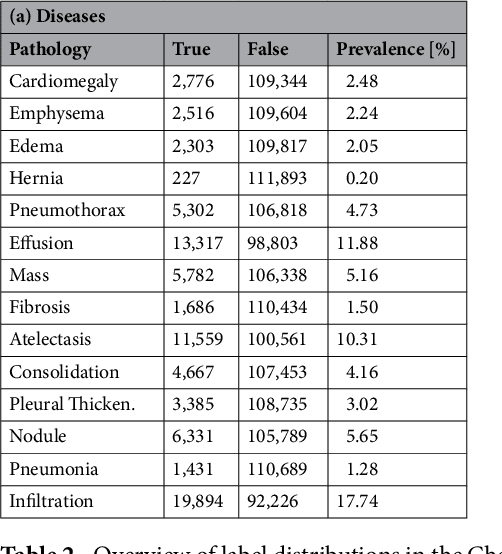

Abstract:The increased availability of X-ray image archives (e.g. the ChestX-ray14 dataset from the NIH Clinical Center) has triggered a growing interest in deep learning techniques. To provide better insight into the different approaches, and their applications to chest X-ray classification, we investigate a powerful network architecture in detail: the ResNet-50. Building on prior work in this domain, we consider transfer learning with and without fine-tuning as well as the training of a dedicated X-ray network from scratch. To leverage the high spatial resolutions of X-ray data, we also include an extended ResNet-50 architecture, and a network integrating non-image data (patient age, gender and acquisition type) in the classification process. In a systematic evaluation, using 5-fold re-sampling and a multi-label loss function, we evaluate the performance of the different approaches for pathology classification by ROC statistics and analyze differences between the classifiers using rank correlation. We observe a considerable spread in the achieved performance and conclude that the X-ray-specific ResNet-50 integrating non-image data yields the best overall results.

Can Pretrained Neural Networks Detect Anatomy?

Dec 18, 2015

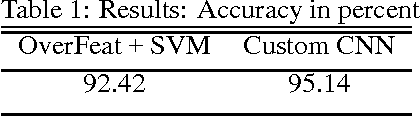

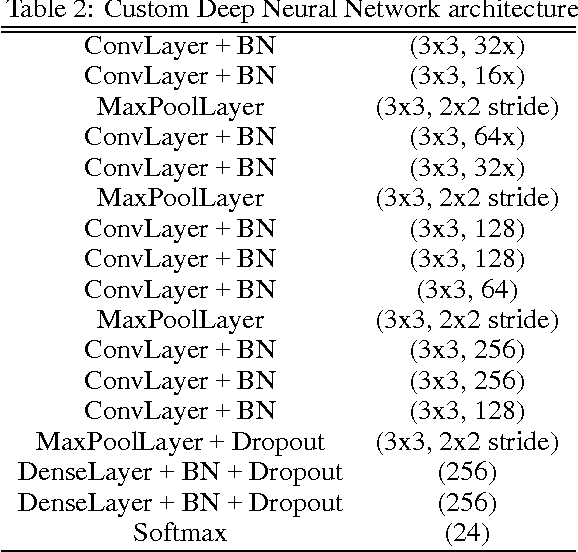

Abstract:Convolutional neural networks have demonstrated outstanding empirical results in computer vision and speech recognition tasks where labeled training data is abundant. In medical imaging, there is a huge variety of possible imaging modalities and contrasts, and annotated data is usually very scarce. We present two approaches to deal with this challenge: (i) a network pretrained in a different domain with abundant data is used as a feature extractor, while a subsequent classifier is trained on a small target dataset; and (ii) a deep architecture is trained with heavy augmentation and equipped with sophisticated regularization methods. We test the approaches on a corpus of X-ray images to design an anatomy detection system.
