Haitao Zhao

Device Association and Resource Allocation for Hierarchical Split Federated Learning in Space-Air-Ground Integrated Network

Jan 20, 2026

Abstract: 6G facilitates the deployment of Federated Learning (FL) in the Space-Air-Ground Integrated Network (SAGIN), yet FL confronts challenges such as resource constraints and unbalanced data distribution. To address these issues, this paper proposes a Hierarchical Split Federated Learning (HSFL) framework and derives an upper bound on its loss function. To minimize the weighted sum of training loss and latency, we formulate a joint optimization problem that integrates device association, model split layer selection, and resource allocation. We decompose the original problem into several subproblems and propose an iterative optimization algorithm for device association and resource allocation based on brute-force split point search. Simulation results demonstrate that the proposed algorithm can effectively balance training efficiency and model accuracy for FL in SAGIN.

Phishing Email Detection Using Large Language Models

Dec 14, 2025

Abstract: Email phishing is one of the most prevalent and globally consequential vectors of cyber intrusion. As systems increasingly deploy Large Language Model (LLM) applications, they face evolving phishing email threats that exploit their fundamental architectures. Current LLMs require substantial hardening before deployment in email security systems, particularly against coordinated multi-vector attacks that exploit architectural vulnerabilities. This paper proposes LLMPEA, an LLM-based framework to detect phishing email attacks across multiple attack vectors, including prompt injection, text refinement, and multilingual attacks. We evaluate three frontier LLMs (GPT-4o, Claude Sonnet 4, and Grok-3) and a comprehensive prompting design to assess their feasibility, robustness, and limitations against phishing email attacks. Our empirical analysis reveals that LLMs can detect phishing emails with over 90% accuracy, while we also highlight that LLM-based phishing email detection systems could be exploited by adversarial attacks, prompt injection, and multilingual attacks. Our findings provide critical insights for LLM-based phishing detection in real-world settings where attackers exploit multiple vulnerabilities in combination.

Decoding Neighborhood Environments with Large Language Models

May 13, 2025

Abstract: Neighborhood environments include physical and environmental conditions such as housing quality, roads, and sidewalks, which significantly influence human health and well-being. Traditional methods for assessing these environments, including field surveys and geographic information systems (GIS), are resource-intensive, which makes it challenging to evaluate neighborhood environments at scale. Although machine learning offers potential for automated analysis, the laborious process of labeling training data and the lack of accessible models hinder scalability. This study explores the feasibility of large language models (LLMs) such as ChatGPT and Gemini as tools for decoding neighborhood environments (e.g., sidewalk and powerline) at scale. We train a robust YOLOv11-based model, which achieves an average accuracy of 99.13% in detecting six environmental indicators: streetlight, sidewalk, powerline, apartment, single-lane road, and multilane road. We then evaluate four LLMs (ChatGPT, Gemini, Claude, and Grok) to assess their feasibility, robustness, and limitations in identifying these indicators, with a focus on the impact of prompting strategies and fine-tuning. We apply majority voting with the top three LLMs to achieve over 88% accuracy, demonstrating that LLMs could be a useful tool to decode the neighborhood environment without any training effort.
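The majority-voting step mentioned in the abstract above can be illustrated with a minimal sketch. The function name, the model names, the yes/no labels, and the tie-breaking rule (the first model listed wins a tie) are all assumptions made for illustration; the abstract does not specify how the authors implement the vote.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most common label; on a tie, the earliest-listed label wins.

    The tie-breaking rule is an assumption, not taken from the paper.
    """
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions)
    top = max(counts.values())
    # Walk the predictions in order so ties resolve to the first model listed.
    for label in predictions:
        if counts[label] == top:
            return label


# Hypothetical answers from three LLMs asked whether an image shows a sidewalk.
votes = {"model_a": "yes", "model_b": "no", "model_c": "yes"}
result = majority_vote(list(votes.values()))
print(result)
```

With three voters and a binary label a tie cannot occur, so the tie-breaking rule only matters for multi-class labels or an even number of models.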

Luminance Component Analysis for Exposure Correction

Nov 25, 2024

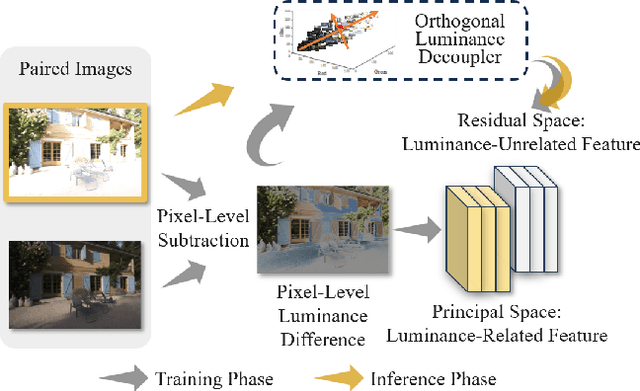

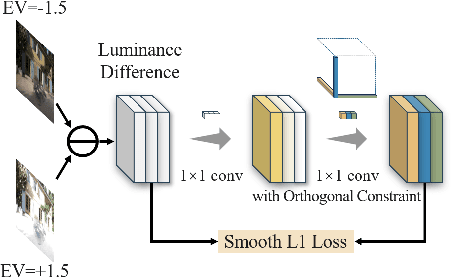

Abstract: Exposure correction methods aim to adjust the luminance while maintaining other luminance-unrelated information. However, current exposure correction methods have difficulty fully separating luminance-related and luminance-unrelated components, which leads to color distortion, loss of detail, and the need for extra restoration procedures. Inspired by principal component analysis (PCA), this paper proposes an exposure correction method called luminance component analysis (LCA). LCA applies an orthogonal constraint to a U-Net structure to decouple luminance-related and luminance-unrelated features. With the luminance-related features decoupled, LCA adjusts only the luminance-related components while keeping the luminance-unrelated components unchanged. To optimize the orthogonal constraint problem, LCA employs a geometric optimization algorithm, which converts the constrained problem in Euclidean space to an unconstrained problem on orthogonal Stiefel manifolds. Extensive experiments show that LCA can decouple the luminance feature from the RGB color space. Moreover, LCA achieves the best PSNR (21.33) and SSIM (0.88) on the exposure correction dataset at 28.72 FPS.

CapHDR2IR: Caption-Driven Transfer from Visible Light to Infrared Domain

Nov 25, 2024

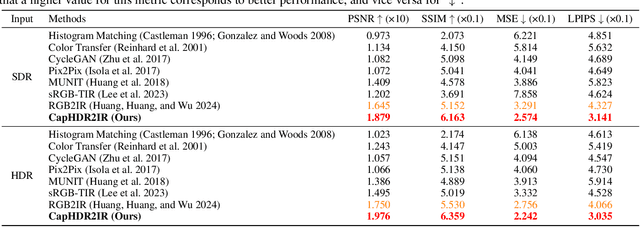

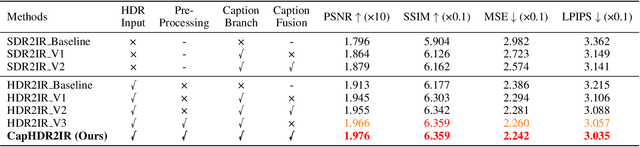

Abstract: Infrared (IR) imaging offers advantages in several fields due to its unique ability to capture content in extreme light conditions. However, the demanding hardware requirements of high-resolution IR sensors limit its widespread application. As an alternative, visible light can be used to synthesize IR images, but this causes a loss of fidelity in image details and introduces inconsistencies due to a lack of contextual awareness of the scene. This stems from using visible light with a standard dynamic range, especially under extreme lighting, combined with a lack of contextual awareness, which can result in pseudo-thermal-crossover artifacts. These artifacts occur when multiple objects with similar temperatures appear indistinguishable in the training data, further exacerbating the loss of fidelity. To solve this challenge, this paper proposes CapHDR2IR, a novel framework incorporating vision-language models that uses high dynamic range (HDR) images as inputs to generate IR images. HDR images capture a wider range of luminance variations, ensuring reliable IR image generation in different light conditions. Additionally, a dense caption branch integrates semantic understanding, resulting in more meaningful and discernible IR outputs. Extensive experiments on the HDRT dataset show that the proposed CapHDR2IR achieves state-of-the-art performance compared with existing general domain transfer methods and those tailored for visible-to-infrared image translation.

Fair Computation Offloading for RSMA-Assisted Mobile Edge Computing Networks

Jun 16, 2024

Abstract: Rate splitting multiple access (RSMA) provides a flexible transmission framework that can be applied in mobile edge computing (MEC) systems. However, research on RSMA-assisted MEC systems is still in its infancy and many design issues remain unsolved, such as the MEC server and channel allocation problem in general multi-server and multi-channel scenarios, as well as user fairness issues. In this regard, we study an RSMA-assisted MEC system with multiple MEC servers, channels, and devices, and consider the fairness among devices. A max-min fairness computation offloading problem to maximize the minimum computation offloading rate is investigated. Since the problem is difficult to solve optimally, we develop an efficient algorithm to obtain a suboptimal solution. In particular, the time allocation and the computing frequency allocation are derived as closed-form functions of the transmit power allocation and the successive interference cancellation (SIC) decoding order, while the SIC decoding order is obtained heuristically, and the bisection search and the successive convex approximation methods are employed to optimize the transmit power allocation. For the MEC server and channel allocation problem, we transform it into a hypergraph matching problem and solve it by matching theory. Simulation results demonstrate that the proposed RSMA-assisted MEC system outperforms current MEC systems under various system setups.

HDRT: Infrared Capture for HDR Imaging

Jun 08, 2024

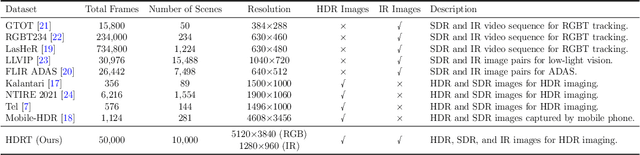

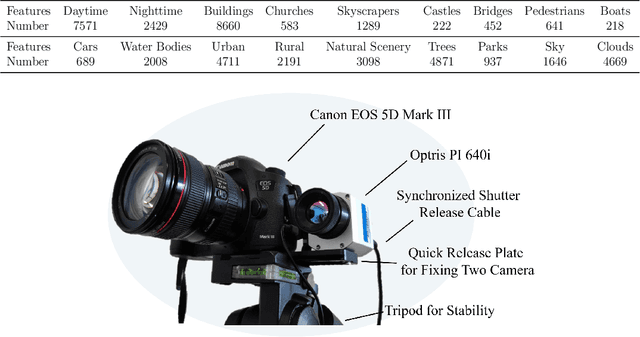

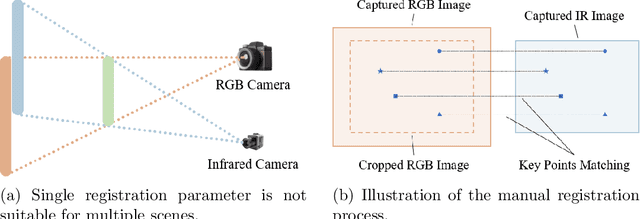

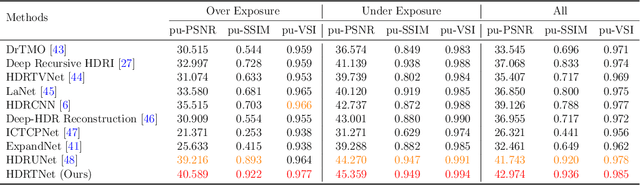

Abstract: Capturing real-world lighting is a long-standing challenge in imaging, and most practical methods acquire High Dynamic Range (HDR) images by either fusing multiple exposures or boosting the dynamic range of Standard Dynamic Range (SDR) images. Multiple exposure capture is problematic as it requires longer capture times, which can often lead to ghosting problems. The main alternative, inverse tone mapping, is an ill-defined problem that is especially challenging as single captured exposures usually contain clipped and quantized values, and are therefore missing substantial amounts of content. To alleviate this, we propose a new approach, High Dynamic Range Thermal (HDRT), for HDR acquisition using a separate, commonly available, thermal infrared (IR) sensor. We propose a novel deep neural method (HDRTNet) which combines IR and SDR content to generate HDR images. HDRTNet learns to exploit IR features linked to the RGB image, and the IR-specific parameters are subsequently used in a dual branch method that fuses features at shallow layers. This produces an HDR image that is significantly superior to that generated using naive fusion approaches. To validate our method, we have created the first HDR and thermal dataset, and performed extensive experiments comparing HDRTNet with the state-of-the-art. We show substantial quantitative and qualitative quality improvements on both over- and under-exposed images, showing that our approach is robust to capture under different lighting conditions.

ODCR: Orthogonal Decoupling Contrastive Regularization for Unpaired Image Dehazing

Apr 27, 2024

Abstract: Unpaired image dehazing (UID) holds significant research importance due to the challenges in acquiring haze/clear image pairs with identical backgrounds. This paper proposes a novel method for UID named Orthogonal Decoupling Contrastive Regularization (ODCR). Our method is grounded in the assumption that an image consists of both haze-related features, which influence the degree of haze, and haze-unrelated features, such as texture and semantic information. ODCR aims to ensure that the haze-related features of the dehazing result closely resemble those of the clear image, while the haze-unrelated features align with the input hazy image. To achieve this, Orthogonal MLPs optimized geometrically on the Stiefel manifold are proposed, which can project image features into an orthogonal space, thereby reducing the relevance between different features. Furthermore, a task-driven Depth-wise Feature Classifier (DWFC) is proposed, which assigns weights to the orthogonal features based on the contribution of each channel's feature in predicting whether the feature source is hazy or clear in a self-supervised fashion. Finally, a Weighted PatchNCE (WPNCE) loss is introduced to pull haze-related features in the output image toward those of clear images, while bringing haze-unrelated features close to those of the hazy input. Extensive experiments demonstrate the superior performance of our ODCR method on UID.

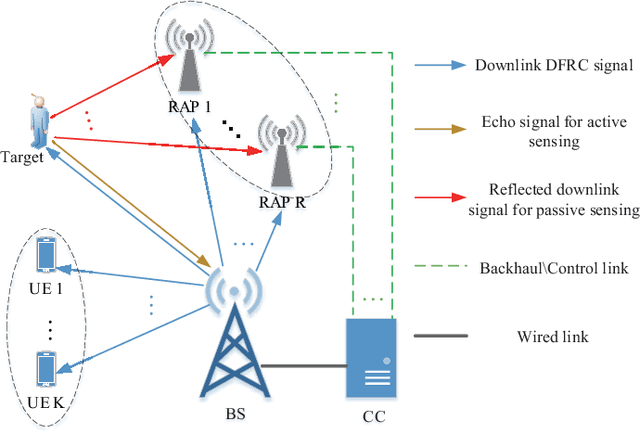

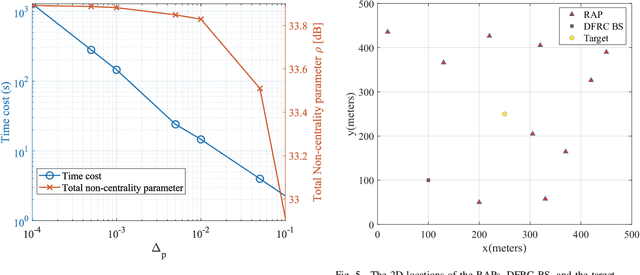

Power Optimization for Integrated Active and Passive Sensing in DFRC Systems

Feb 17, 2024

Abstract: Most existing works on dual-function radar-communication (DFRC) systems focus on active sensing and ignore passive sensing. To leverage multi-static sensing capability, we explore integrated active and passive sensing (IAPS) in DFRC systems to improve sensing performance. The multi-antenna base station (BS) is responsible for communication and active sensing by transmitting signals to user equipments while detecting a target according to echo signals. In contrast, passive sensing is performed at the receive access points (RAPs). We consider both the case where the capacity of the backhaul links between the RAPs and BS is unlimited and the case where it is limited, and adopt different fusion strategies. Specifically, when the backhaul capacity is unlimited, the BS and RAPs transfer the sensing signals they have received to the central controller (CC) for signal fusion. The CC processes the signals and leverages the generalized likelihood ratio test detector to determine the presence of a target. However, when the backhaul capacity is limited, each RAP, as well as the BS, makes decisions independently and sends its binary inference results to the CC for result fusion via voting aggregation. Then, aiming to maximize the target detection probability under communication quality of service constraints, two power optimization algorithms are proposed. Finally, numerical simulations demonstrate that the sensing performance in the case of unlimited backhaul capacity is much better than that in the case of limited backhaul capacity. Moreover, the results indicate that the proposed IAPS scheme outperforms only-passive and only-active sensing schemes, especially in the unlimited capacity case.

DFR-Net: Density Feature Refinement Network for Image Dehazing Utilizing Haze Density Difference

Jul 26, 2023

Abstract: In the image dehazing task, haze density is a key feature and affects the performance of dehazing methods. However, some of the existing methods lack a comparative image to measure densities, and others create intermediate results but do not exploit their density differences, which can facilitate perception of density. To address these deficiencies, we propose a density-aware dehazing method named Density Feature Refinement Network (DFR-Net) that extracts haze density features from density differences and leverages density differences to refine density features. In DFR-Net, we first generate a proposal image that has lower overall density than the hazy input, bringing in global density differences. Additionally, the dehazing residual of the proposal image reflects the level of dehazing performance and provides local density differences that indicate areas that are hard to dehaze or have high haze density. Subsequently, we introduce a Global Branch (GB) and a Local Branch (LB) to achieve density-awareness. In GB, we use Siamese networks for feature extraction of hazy inputs and proposal images, and we propose a Global Density Feature Refinement (GDFR) module that can refine features by pushing features with different global densities further away. In LB, we explore local density features from the dehazing residuals between hazy inputs and proposal images and introduce an Intermediate Dehazing Residual Feedforward (IDRF) module to update local features and pull them closer to clear image features. Extensive experiments demonstrate that the proposed method achieves results beyond the state-of-the-art methods on various datasets.
