Wenchao Xia

RIS-Empowered Integrated Location Sensing and Communication with Superimposed Pilots

Apr 05, 2025

Abstract: In addition to enhancing wireless communication coverage quality, the reconfigurable intelligent surface (RIS) technique can also assist positioning. In this work, we consider RIS-assisted superimposed pilot and data transmission without assuming the availability of prior channel state information or position information of the mobile user equipments (UEs). To tackle this challenge, we design a transmission-protocol frame structure composed of several location coherence intervals, each with a pure-pilot duration and a data-pilot duration. The former is used to estimate UE locations; the latter is divided into time slots, each no longer than the channel coherence time, in which data and pilot signals are transmitted simultaneously. We conduct a Fisher information matrix (FIM) analysis and derive the Cramér-Rao bound (CRB) on the position estimation error. The inverse fast Fourier transform (IFFT) is adopted to estimate the UE positions, which are then exploited for channel estimation. Furthermore, we derive a closed-form lower bound on the ergodic achievable rate of superimposed pilot (SP) transmission and use it to optimize the RIS phase profile for the maximum achievable sum rate via a genetic algorithm. Finally, numerical results validate the accuracy of IFFT-based UE position estimation and the superiority of the proposed SP scheme over the regular pilot scheme.
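
The abstract does not detail the IFFT estimator. As a minimal sketch of the general principle only, assuming a single noiseless path observed on N uniformly spaced subcarriers (not the RIS geometry of the paper), a path delay appears as a linear phase ramp across subcarriers, and an inverse DFT turns that ramp into a peak whose bin index gives the delay and hence the range. All names and numbers below are hypothetical; the transform is written as a direct inverse DFT, which yields the same values as an IFFT, so the example needs only the standard library.

```python
import cmath
import math

C = 299_792_458.0  # speed of light (m/s)


def idft(x):
    """Direct O(N^2) inverse DFT; returns the same values an IFFT would."""
    n = len(x)
    return [sum(x[k] * cmath.exp(2j * math.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def estimate_range(freq_response, subcarrier_spacing_hz):
    """Single-path delay/range estimate from the peak of the delay profile.

    Assumes H[k] = a * exp(-j*2*pi*k*df*tau) with 0 <= tau < 1/df, so the peak
    does not wrap around. Delay resolution is 1 / (N * df).
    """
    n = len(freq_response)
    profile = idft(freq_response)  # transform to the delay domain
    peak_bin = max(range(n), key=lambda m: abs(profile[m]))
    delay_s = peak_bin / (n * subcarrier_spacing_hz)
    return peak_bin, delay_s, C * delay_s


# Hypothetical example: 64 subcarriers with 1 MHz spacing, one noiseless path
# whose delay lies exactly on bin 10 (10 / 64 MHz = 156.25 ns).
N, DF = 64, 1e6
true_delay = 10 / (N * DF)
H = [0.8 * cmath.exp(-2j * math.pi * k * DF * true_delay) for k in range(N)]
peak, tau_hat, range_hat = estimate_range(H, DF)
```

Off-grid delays and noise would smear the peak over neighboring bins; zero-padding before the transform or interpolating around the peak are common refinements, not shown here.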

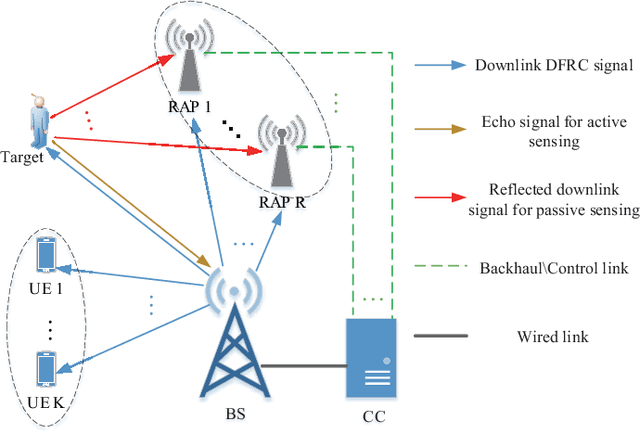

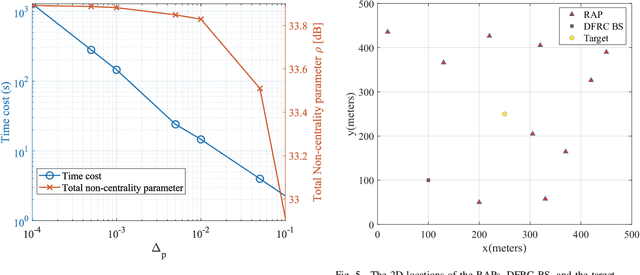

Power Optimization for Integrated Active and Passive Sensing in DFRC Systems

Feb 17, 2024

Abstract: Most existing works on dual-function radar-communication (DFRC) systems focus on active sensing and ignore passive sensing. To leverage multi-static sensing capability, we explore integrated active and passive sensing (IAPS) in DFRC systems to improve sensing performance. The multi-antenna base station (BS) handles both communication and active sensing: it transmits signals to user equipments and detects a target from the echo signals. Passive sensing, in contrast, is performed at the receive access points (RAPs). We consider both unlimited and limited capacity of the backhaul links between the RAPs and the BS, and adopt a different fusion strategy for each. Specifically, when the backhaul capacity is unlimited, the BS and RAPs forward the sensing signals they receive to the central controller (CC) for signal fusion. The CC processes the signals and applies the generalized likelihood ratio test detector to determine the presence of a target. When the backhaul capacity is limited, however, each RAP, as well as the BS, makes a decision independently and sends its binary inference result to the CC for result fusion via voting aggregation. Then, aiming to maximize the target detection probability under communication quality-of-service constraints, two power optimization algorithms are proposed. Finally, numerical simulations demonstrate that sensing performance with unlimited backhaul capacity is much better than with limited backhaul capacity. Moreover, the results indicate that the proposed IAPS scheme outperforms passive-only and active-only sensing schemes, especially in the unlimited-capacity case.
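
The abstract does not say which voting rule the CC applies. Assuming a k-out-of-n rule and statistically independent local decisions (both assumptions, as are all names below), the system-level detection and false-alarm probabilities of result fusion follow from the distribution of the number of '1' votes, which a short dynamic program computes exactly.

```python
def k_out_of_n(probs, k):
    """P(at least k of n independent binary decisions equal 1).

    probs[i] is node i's probability of deciding '1' (its local detection
    probability when a target is present, or its false-alarm probability
    when it is absent).
    """
    # dist[j] = probability that exactly j of the nodes seen so far decided '1'
    dist = [1.0]
    for p in probs:
        nxt = [0.0] * (len(dist) + 1)
        for j, q in enumerate(dist):
            nxt[j] += q * (1.0 - p)  # this node decides '0'
            nxt[j + 1] += q * p      # this node decides '1'
        dist = nxt
    return sum(dist[k:])


# Hypothetical BS + two RAPs with identical local operating points.
local_pd = [0.9, 0.9, 0.9]
local_pfa = [0.01, 0.01, 0.01]
pd_majority = k_out_of_n(local_pd, 2)   # 2-out-of-3 vote
pfa_majority = k_out_of_n(local_pfa, 2)
pd_or = k_out_of_n(local_pd, 1)         # any single detection suffices
pd_and = k_out_of_n(local_pd, 3)        # all nodes must agree
```

With three nodes at a local detection probability of 0.9, majority voting gives 0.972, the OR rule 0.999 and the AND rule 0.729; evaluating the same function on the local false-alarm probabilities shows what each rule costs in false alarms.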

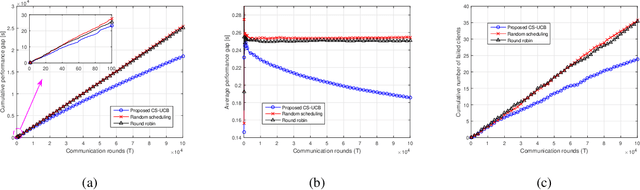

Multi-Armed Bandit Based Client Scheduling for Federated Learning

Jul 05, 2020

Abstract: By exploiting the computing power and local data of distributed clients, federated learning (FL) offers attractive properties such as reduced communication overhead and data privacy preservation. In each communication round of FL, the clients update their local models on their own data and upload the local updates via wireless channels. However, the latency accumulated over hundreds to thousands of communication rounds remains a bottleneck in FL. To minimize training latency, this work provides a multi-armed bandit-based framework for online client scheduling (CS) in FL without knowledge of wireless channel state information or the statistical characteristics of clients. First, we propose a CS algorithm based on the upper confidence bound policy (CS-UCB) for ideal scenarios where the local datasets of clients are independent and identically distributed (i.i.d.) and balanced. An upper bound on the expected performance regret of the proposed CS-UCB algorithm is provided, which indicates that the regret grows logarithmically over communication rounds. Then, to address non-ideal scenarios with non-i.i.d. and unbalanced local datasets and varying client availability, we further propose a CS algorithm based on the UCB policy and the virtual queue technique (CS-UCB-Q). An upper bound is also derived, showing that the expected performance regret of the proposed CS-UCB-Q algorithm can grow sub-linearly over communication rounds under certain conditions. The convergence performance of FL training is also analyzed. Finally, simulation results validate the efficiency of the proposed algorithms.
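
As a minimal sketch of the bandit idea behind CS-UCB, and not the paper's algorithm: each round, the scheduler picks the k clients with the highest UCB1 index, i.e. the empirical mean reward plus an exploration bonus that shrinks as a client is scheduled more often. The reward here is a hypothetical Bernoulli signal in [0, 1] (one might think of it as a normalized indicator of a fast round); the virtual queues of CS-UCB-Q and any availability constraints are omitted, and all names are illustrative.

```python
import math
import random


def cs_ucb(means, k, rounds, seed=0):
    """Schedule k of n clients per round by their UCB1 index; return counts.

    means: hypothetical per-client expected rewards in [0, 1], unknown to the
    scheduler, which only observes a Bernoulli reward for each scheduled client.
    """
    rng = random.Random(seed)
    n = len(means)
    counts = [0] * n    # how often each client has been scheduled
    totals = [0.0] * n  # sum of observed rewards per client
    for t in range(1, rounds + 1):
        # UCB1 index: empirical mean plus exploration bonus; unseen clients first.
        ucb = [math.inf if counts[i] == 0
               else totals[i] / counts[i] + math.sqrt(2.0 * math.log(t) / counts[i])
               for i in range(n)]
        chosen = sorted(range(n), key=lambda i: ucb[i], reverse=True)[:k]
        for i in chosen:
            reward = 1.0 if rng.random() < means[i] else 0.0
            counts[i] += 1
            totals[i] += reward
    return counts


counts = cs_ucb([0.2, 0.5, 0.8, 0.9, 0.3], k=2, rounds=2000)
```

With these hypothetical means, the two clients with the highest expected reward (indices 2 and 3) are scheduled in the vast majority of rounds, while the others are still probed occasionally; under UCB1 the number of such probes grows roughly logarithmically with the number of rounds.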

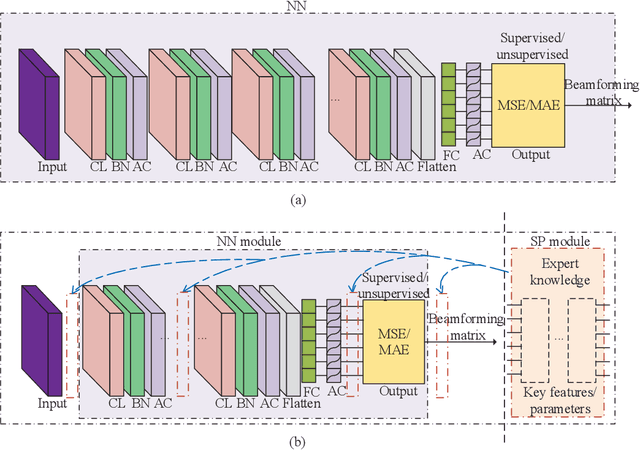

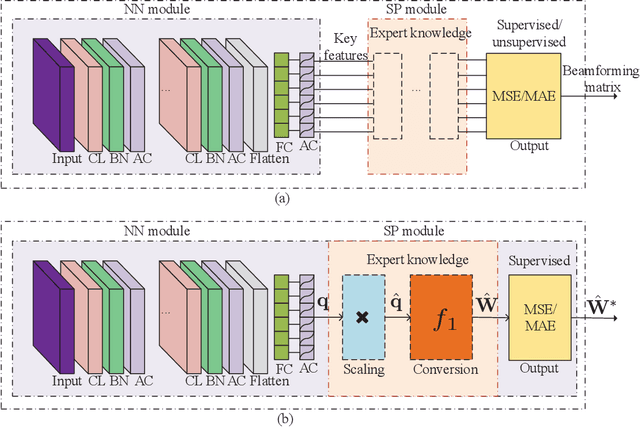

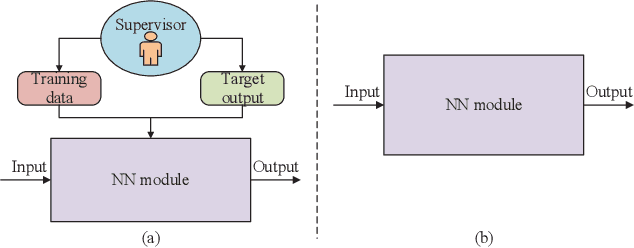

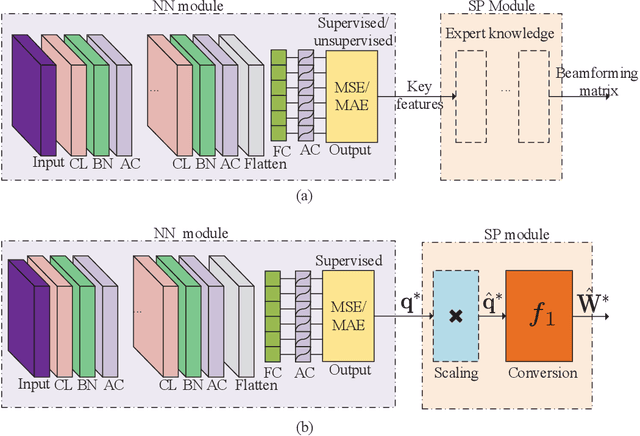

Model-Driven Beamforming Neural Networks

Jan 15, 2020

Abstract: Beamforming is a core technology in recent generations of mobile communication networks. However, optimizing its parameters typically requires an iterative process whose high complexity and computational delay make it ill-suited to real-time implementation. Heuristic solutions such as zero-forcing (ZF) are simpler but incur a performance loss. Alternatively, deep learning (DL), once sufficiently trained, is known to generalize well and to deliver promising results for a wide range of applications at much lower complexity. DL may therefore be an attractive solution for beamforming. To exploit DL, this article introduces general data- and model-driven beamforming neural networks (BNNs), presents various possible learning strategies, and discusses complexity reduction for DL-based BNNs. We also offer enhancement methods, such as training-set augmentation and transfer learning, to improve the generality of BNNs, accompanied by computer simulation and testbed results showing the performance of such BNN solutions.
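
ZF is the low-complexity baseline the abstract mentions. One common way to make a beamforming network model-driven (assumed here, not necessarily the article's exact design) is to let the network output only a few parameters and map them to the full beamformer through a known closed-form structure. The sketch below shows such a structure, regularized ZF, W = H^H (H H^H + αI)^(-1), with α standing in for a network output; α = 0 recovers ZF. The network itself is omitted, and all names and channel values are hypothetical.

```python
import math


def matmul(a, b):
    """Plain matrix product of nested lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def herm(a):
    """Conjugate (Hermitian) transpose."""
    return [[a[i][j].conjugate() for i in range(len(a))] for j in range(len(a[0]))]


def inverse(a):
    """Gauss-Jordan inverse with partial pivoting (small matrices only)."""
    n = len(a)
    m = [list(row) + [complex(i == j) for j in range(n)] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def rzf_beamformer(h, alpha, total_power=1.0):
    """W = H^H (H H^H + alpha*I)^(-1), scaled so that ||W||_F^2 = total_power.

    h: K x M channel (row k = user k); column k of W serves user k.
    alpha = 0 is zero-forcing (requires K <= M and full row rank).
    """
    hh = herm(h)
    gram = matmul(h, hh)
    for i in range(len(h)):
        gram[i][i] += alpha
    w = matmul(hh, inverse(gram))
    scale = math.sqrt(total_power / sum(abs(v) ** 2 for row in w for v in row))
    return [[v * scale for v in row] for row in w]


# Hypothetical 2-user, 3-antenna channel.
H = [[1 + 0.5j, 0.3 - 0.2j, -0.4 + 1j],
     [0.2 + 0.1j, -1 + 0.3j, 0.5 - 0.5j]]
W_zf = rzf_beamformer(H, alpha=0.0)
W_rzf = rzf_beamformer(H, alpha=0.5)
G = matmul(H, W_zf)  # effective channel; off-diagonal terms are inter-user interference
```

With α = 0 the effective channel H W is diagonal, so inter-user interference is nulled; a positive α trades some residual interference for less noise enhancement, which is the kind of knob a learned parameter would tune.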
