Guanding Yu

GPINND: A deep-learning-based state of health estimation for Lithium-ion battery

Feb 04, 2026

Abstract:Electrochemical models offer superior interpretability and reliability for battery degradation diagnosis. However, the high computational cost of iterative parameter identification severely hinders the practical implementation of electrochemically informed state of health (SOH) estimation in real-time systems. To address this challenge, this paper proposes an SOH estimation method that integrates deep learning with electrochemical mechanisms and adopts a sequential training strategy. First, we construct a hybrid-driven surrogate model to learn internal electrochemical dynamics by fusing high-fidelity simulation data with physical constraints. This model subsequently serves as an accurate and differentiable physical kernel for voltage reconstruction. Then, we develop a self-supervised framework to train a parameter identification network by minimizing the voltage reconstruction error. The resulting model enables the non-iterative identification of aging parameters from external measurements. Finally, utilizing the identified parameters as physicochemical health indicators, we establish a high-precision SOH estimation network that leverages data-driven residual correction to compensate for identification deviations. Crucially, a sequential training strategy is applied across these modules to effectively mitigate convergence issues and improve the accuracy of each module. Experimental results demonstrate that the proposed method achieves an average voltage reconstruction root mean square error (RMSE) of 0.0198 V and an SOH estimation RMSE of 0.0014.

Prediction-Powered Risk Monitoring of Deployed Models for Detecting Harmful Distribution Shifts

Feb 02, 2026

Abstract:We study the problem of monitoring model performance in dynamic environments where labeled data are limited. To this end, we propose prediction-powered risk monitoring (PPRM), a semi-supervised risk-monitoring approach based on prediction-powered inference (PPI). PPRM constructs anytime-valid lower bounds on the running risk by combining synthetic labels with a small set of true labels. Harmful shifts are detected via a threshold-based comparison with an upper bound on the nominal risk, satisfying assumption-free finite-sample guarantees on the probability of false alarm. We demonstrate the effectiveness of PPRM through extensive experiments on image classification, large language model (LLM), and telecommunications monitoring tasks.
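As a rough illustration of how synthetic labels and a small set of true labels can be combined, the sketch below computes the standard prediction-powered point estimate of a mean risk: the average loss measured against synthetic labels, plus a correction ("rectifier") estimated on the labeled subset. The function name `ppi_risk_estimate` and its arguments are hypothetical, and the sketch covers only the point estimate; it does not reproduce the anytime-valid bounds or the detection rule described in the abstract.

```python
from statistics import fmean


def ppi_risk_estimate(synthetic_losses, labeled_true_losses, labeled_synthetic_losses):
    """Prediction-powered point estimate of a mean risk (illustrative only).

    synthetic_losses: losses computed against synthetic labels on unlabeled data.
    labeled_true_losses: losses computed against true labels on the labeled subset.
    labeled_synthetic_losses: losses against synthetic labels on the same subset.
    """
    if len(labeled_true_losses) != len(labeled_synthetic_losses):
        raise ValueError("labeled loss lists must have the same length")
    # Rectifier: average gap between true-label and synthetic-label losses.
    rectifier = fmean(
        [t - s for t, s in zip(labeled_true_losses, labeled_synthetic_losses)]
    )
    # Synthetic-label risk corrected by the rectifier.
    return fmean(synthetic_losses) + rectifier
```

When the synthetic labels are accurate, the rectifier is close to zero and the estimate is driven by the large unlabeled pool; when they are biased, the labeled subset corrects the bias.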

A PAC-Bayesian Analysis of Channel-Induced Degradation in Edge Inference

Jan 16, 2026

Abstract:In the emerging paradigm of edge inference, neural networks (NNs) are partitioned across distributed edge devices that collaboratively perform inference via wireless transmission. However, standard NNs are generally trained in a noiseless environment, creating a mismatch with the noisy channels during edge deployment. In this paper, we address this issue by characterizing the channel-induced performance deterioration as a generalization error against unseen channels. We introduce an augmented NN model that incorporates channel statistics directly into the weight space, allowing us to derive PAC-Bayesian generalization bounds that explicitly quantify the impact of wireless distortion. We further provide closed-form expressions for practical channels to demonstrate the tractability of these bounds. Inspired by the theoretical results, we propose a channel-aware training algorithm that minimizes a surrogate objective based on the derived bound. Simulations show that the proposed algorithm can effectively improve inference accuracy by leveraging channel statistics, without end-to-end re-training.

F$^4$-CKM: Learning Channel Knowledge Map with Radio Frequency Radiance Field Rendering

Jan 07, 2026

Abstract:In 6G mobile communications, acquiring accurate and timely channel state information (CSI) becomes increasingly challenging due to the growing antenna array size and bandwidth. To alleviate the CSI feedback burden, the channel knowledge map (CKM) has emerged as a promising approach by leveraging environment-aware techniques to predict CSI based solely on user locations. However, how to effectively construct a CKM remains an open issue. In this paper, we propose F$^4$-CKM, a novel CKM construction framework characterized by four distinctive features: radiance Field rendering, spatial-Frequency-awareness, location-Free usage, and Fast learning. Central to our design is the adaptation of radiance field rendering techniques from computer vision to the radio frequency (RF) domain, enabled by a novel Wireless Radiator Representation (WiRARE) network that captures the spatial-frequency characteristics of wireless channels. Additionally, a novel shaping filter module and an angular sampling strategy are introduced to facilitate CKM construction. Extensive experiments demonstrate that F$^4$-CKM significantly outperforms existing baselines in terms of wireless channel prediction accuracy and efficiency.

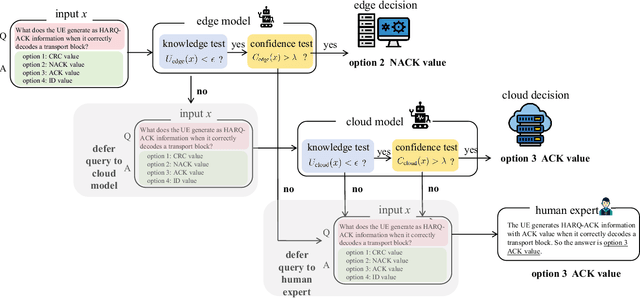

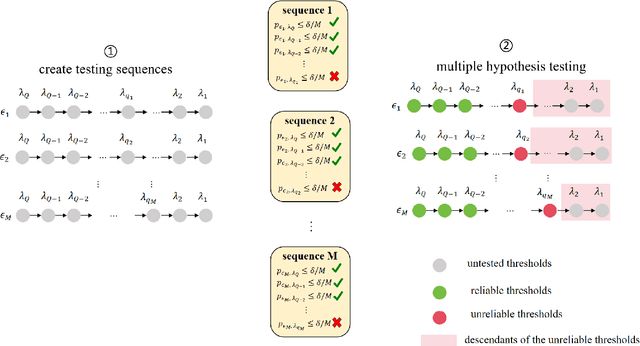

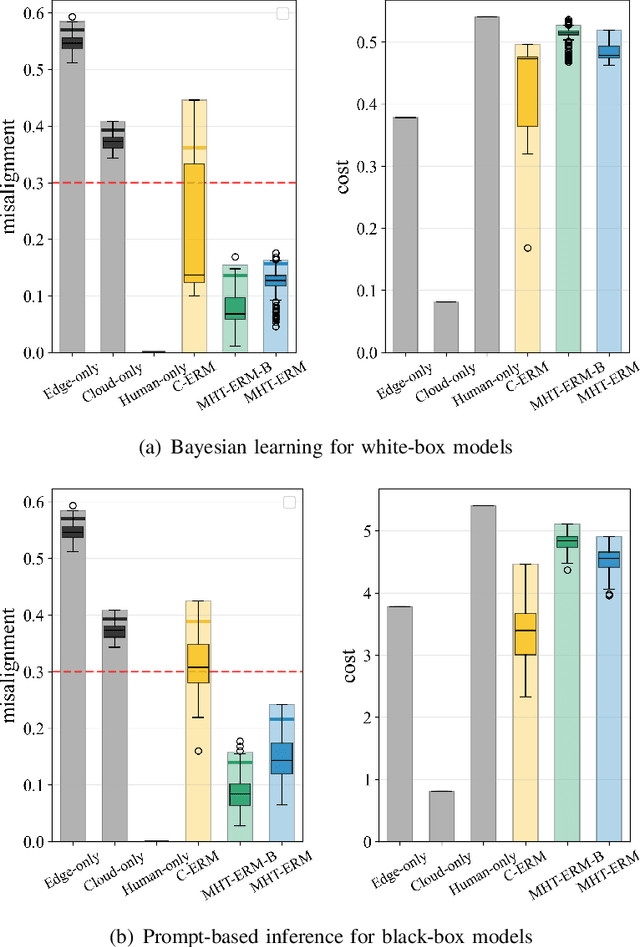

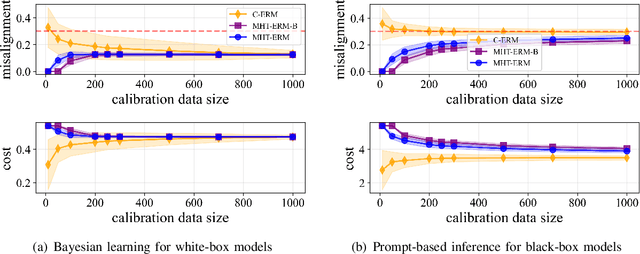

Reliable LLM-Based Edge-Cloud-Expert Cascades for Telecom Knowledge Systems

Dec 23, 2025

Abstract:Large language models (LLMs) are emerging as key enablers of automation in domains such as telecommunications, assisting with tasks including troubleshooting, standards interpretation, and network optimization. However, their deployment in practice must balance inference cost, latency, and reliability. In this work, we study an edge-cloud-expert cascaded LLM-based knowledge system that supports decision-making through a question-and-answer pipeline. In it, an efficient edge model handles routine queries, a more capable cloud model addresses complex cases, and human experts are involved only when necessary. We define a misalignment-cost constrained optimization problem, aiming to minimize average processing cost, while guaranteeing alignment of automated answers with expert judgments. We propose a statistically rigorous threshold selection method based on multiple hypothesis testing (MHT) for a query processing mechanism based on knowledge and confidence tests. The approach provides finite-sample guarantees on misalignment risk. Experiments on the TeleQnA dataset -- a telecom-specific benchmark -- demonstrate that the proposed method achieves superior cost-efficiency compared to conventional cascaded baselines, while ensuring reliability at prescribed confidence levels.
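The edge-cloud-expert escalation described above can be pictured as a simple routing rule. The sketch below is a simplified illustration, assuming each model reports a scalar confidence score and that the two thresholds have already been selected (for instance by a calibration procedure such as the one the abstract describes). The function `route_query`, its arguments, and the use of confidence alone (without the knowledge test) are assumptions made for illustration.

```python
def route_query(edge_confidence, cloud_confidence, edge_threshold, cloud_threshold):
    """Decide which tier answers a query in a three-tier cascade (illustrative).

    Returns "edge", "cloud", or "expert".
    """
    # Routine query: the cheap edge model is confident enough to answer.
    if edge_confidence >= edge_threshold:
        return "edge"
    # Harder query: escalate to the more capable cloud model.
    if cloud_confidence >= cloud_threshold:
        return "cloud"
    # Neither model is confident enough: defer to a human expert.
    return "expert"
```

Raising either threshold sends more queries up the cascade, which increases cost but lowers the chance that an automated answer disagrees with expert judgment.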

Meta-SimGNN: Adaptive and Robust WiFi Localization Across Dynamic Configurations and Diverse Scenarios

Nov 18, 2025

Abstract:To promote the practicality of deep learning-based localization, existing studies aim to address the issue of scenario dependence through meta-learning. However, these studies primarily focus on variations in environmental layouts while overlooking the impact of changes in device configurations, such as bandwidth, the number of access points (APs), and the number of antennas used. Unlike environmental changes, variations in device configurations affect the dimensionality of channel state information (CSI), thereby compromising neural network usability. To address this issue, we propose Meta-SimGNN, a novel WiFi localization system that integrates graph neural networks with meta-learning to improve localization generalization and robustness. First, we introduce a fine-grained CSI graph construction scheme, where each AP is treated as a graph node, allowing for adaptability to changes in the number of APs. To structure the features of each node, we propose an amplitude-phase fusion method and a feature extraction method. The former utilizes both amplitude and phase to construct CSI images, enhancing data reliability, while the latter extracts dimension-consistent features to address variations in bandwidth and the number of antennas. Second, a similarity-guided meta-learning strategy is developed to enhance adaptability in diverse scenarios. The initial model parameters for the fine-tuning stage are determined by comparing the similarity between the new scenario and historical scenarios, facilitating rapid adaptation of the model to the new localization scenario. Extensive experimental results over commodity WiFi devices in different scenarios show that Meta-SimGNN outperforms the baseline methods in terms of localization generalization and accuracy.

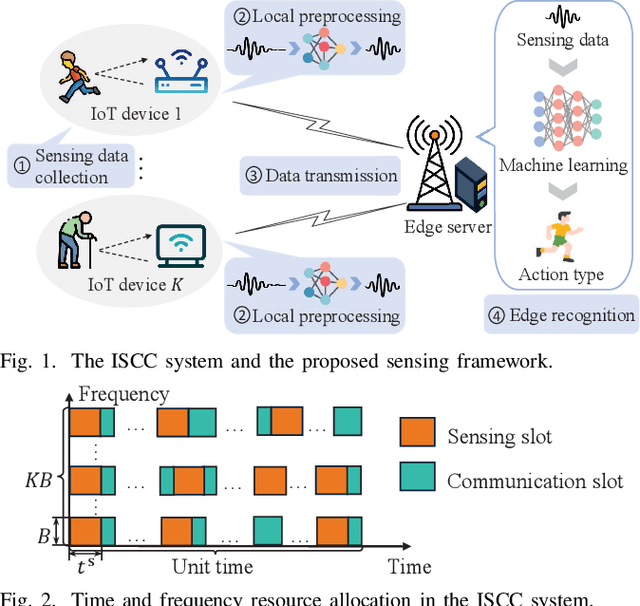

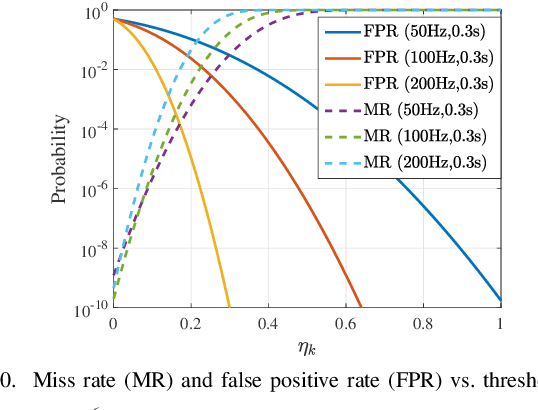

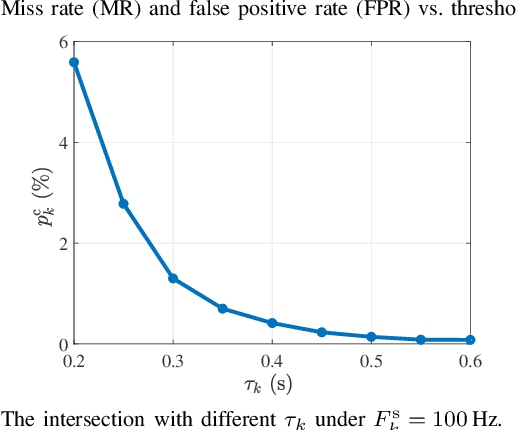

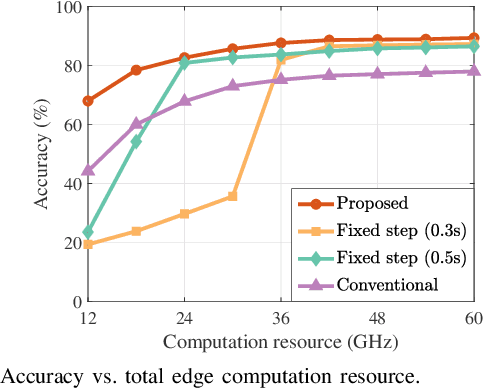

Sensing Framework Design and Performance Optimization with Action Detection for ISCC

May 05, 2025

Abstract:Integrated sensing, communication, and computation (ISCC) has been regarded as a prospective technology for the next-generation wireless network, supporting human-centric intelligent applications. However, the delay sensitivity of these computation-intensive applications, especially in a multi-device ISCC system with limited resources, highlights the urgent need for efficient sensing task execution frameworks. To address this, we propose a resource-efficient sensing framework in this paper. Different from existing solutions, it features a novel action detection module deployed at each device to detect the onset of an action. Only time windows filled with signals of interest are offloaded to the edge server and processed by the edge recognition module, thus reducing overhead. Furthermore, we quantitatively analyze the sensing performance of the proposed sensing framework and formulate a sensing accuracy maximization problem under power, delay, and resource limitations for the multi-device ISCC system. By decomposing it into two subproblems, we develop an alternating direction method of multipliers (ADMM)-based distributed algorithm. It alternately solves a sensing accuracy maximization subproblem at each device and employs a closed-form computation resource allocation strategy at the edge server until convergence. Finally, a real-world test is conducted using commodity wireless devices to validate the sensing performance analysis. Extensive test results demonstrate that our proposal achieves higher sensing accuracy under limited resources compared to two baselines.

Online Conformal Probabilistic Numerics via Adaptive Edge-Cloud Offloading

Mar 18, 2025

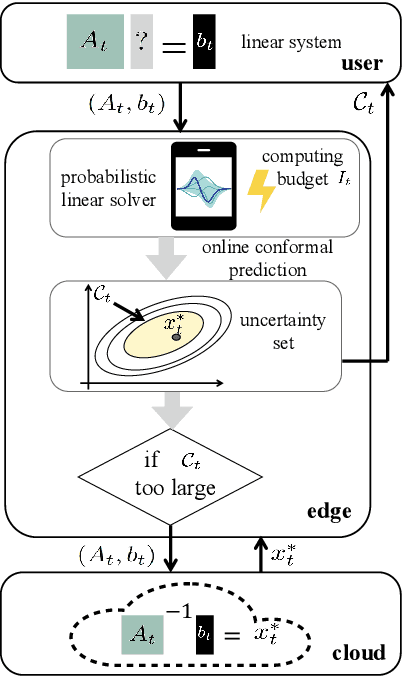

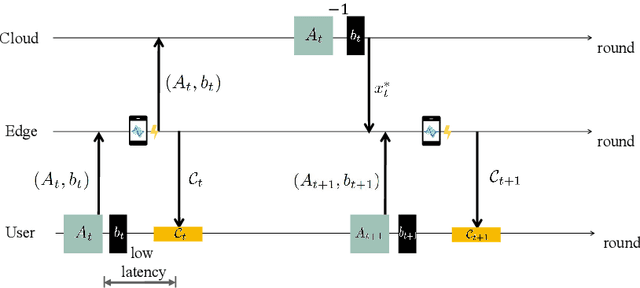

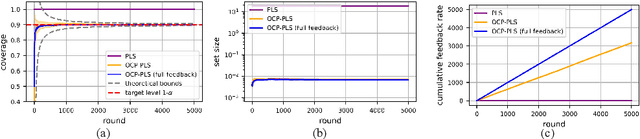

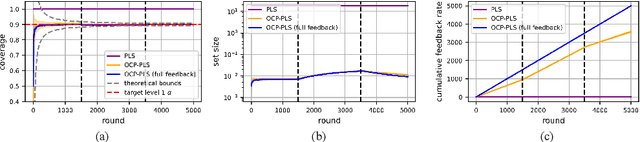

Abstract:Consider an edge computing setting in which a user submits queries for the solution of a linear system to an edge processor, which is subject to time-varying computing availability. The edge processor applies a probabilistic linear solver (PLS) so as to be able to respond to the user's query within the allotted time and computing budget. Feedback to the user is in the form of an uncertainty set. Due to model misspecification, the uncertainty set obtained via a direct application of PLS does not come with coverage guarantees with respect to the true solution of the linear system. This work introduces a new method to calibrate the uncertainty sets produced by PLS with the aim of guaranteeing long-term coverage requirements. The proposed method, referred to as online conformal prediction-PLS (OCP-PLS), assumes sporadic feedback from cloud to edge. This enables the online calibration of uncertainty thresholds via online conformal prediction (OCP), an online optimization method previously studied in the context of prediction models. The validity of OCP-PLS is verified via experiments that bring insights into trade-offs between coverage, prediction set size, and cloud usage.
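For intuition about the calibration step, the sketch below shows the standard online conformal prediction update of a threshold: the threshold grows after a miss and shrinks slightly after a cover, so that the long-run miscoverage rate tracks a target level. The function `ocp_update` and its arguments are hypothetical, and skipping the update when no cloud feedback arrives is an assumption about how sporadic feedback might be handled; the method in the paper may differ in its details.

```python
def ocp_update(threshold, covered, target_miscoverage, step_size):
    """One step of a standard online conformal threshold update (illustrative).

    covered: True if the true solution fell inside the uncertainty set,
             False if it did not, or None if no cloud feedback was received.
    """
    # Assumption: without feedback the threshold is left unchanged.
    if covered is None:
        return threshold
    error = 0.0 if covered else 1.0
    # Enlarge the set after a miss, shrink it slightly after a cover.
    return threshold + step_size * (error - target_miscoverage)
```

A larger threshold produces larger uncertainty sets, which is the coverage versus set-size trade-off the abstract refers to.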

Graph Neural Network for Location- and Orientation-Assisted mmWave Beam Alignment

Mar 10, 2025

Abstract:In massive multi-input multi-output (MIMO) systems, the main bottlenecks of location- and orientation-assisted beam alignment using deep neural networks (DNNs) are large training overhead and significant performance degradation. This paper proposes a graph neural network (GNN)-based beam selection approach that reduces the training overhead and improves the alignment accuracy, by capitalizing on the strong expressive ability and few trainable parameters of GNN. The channels of beams are correlated according to the beam direction. Therefore, we establish a graph according to the angular correlation between beams and use GNN to capture the channel correlation between adjacent beams, which helps accelerate the learning process and enhance the beam alignment performance. Compared to existing DNN-based algorithms, the proposed method requires only 20% of the dataset size to achieve equivalent accuracy and improves the Top-1 accuracy by 10% when using the same dataset.

Joint Transmission and Deblurring: A Semantic Communication Approach Using Events

Jan 16, 2025

Abstract:Deep learning-based joint source-channel coding (JSCC) is emerging as a promising technology for effective image transmission. However, most existing approaches focus on transmitting clear images, overlooking real-world challenges such as motion blur caused by camera shaking or fast-moving objects. Motion blur often degrades image quality, making transmission and reconstruction more challenging. Event cameras, which asynchronously record pixel intensity changes with extremely low latency, have shown great potential for motion deblurring tasks. However, the efficient transmission of the abundant data generated by event cameras remains a significant challenge. In this work, we propose a novel JSCC framework for the joint transmission of blurry images and events, aimed at achieving high-quality reconstructions under limited channel bandwidth. This approach is designed as a deblurring task-oriented JSCC system. Since RGB cameras and event cameras capture the same scene through different modalities, their outputs contain both shared and domain-specific information. To avoid transmitting the shared information more than once, we extract the shared and domain-specific information and transmit them separately. At the receiver, the received signals are processed by a deblurring decoder to generate clear images. Additionally, we introduce a multi-stage training strategy to train the proposed model. Simulation results demonstrate that our method significantly outperforms existing JSCC-based image transmission schemes, addressing motion blur effectively.
