Qiqi Xiao

Meta-SimGNN: Adaptive and Robust WiFi Localization Across Dynamic Configurations and Diverse Scenarios

Nov 18, 2025

Abstract:To promote the practicality of deep learning-based localization, existing studies aim to address the issue of scenario dependence through meta-learning. However, these studies primarily focus on variations in environmental layouts while overlooking the impact of changes in device configurations, such as bandwidth, the number of access points (APs), and the number of antennas used. Unlike environmental changes, variations in device configurations affect the dimensionality of channel state information (CSI), thereby compromising neural network usability. To address this issue, we propose Meta-SimGNN, a novel WiFi localization system that integrates graph neural networks with meta-learning to improve localization generalization and robustness. First, we introduce a fine-grained CSI graph construction scheme, where each AP is treated as a graph node, allowing for adaptability to changes in the number of APs. To structure the features of each node, we propose an amplitude-phase fusion method and a feature extraction method. The former utilizes both amplitude and phase to construct CSI images, enhancing data reliability, while the latter extracts dimension-consistent features to address variations in bandwidth and the number of antennas. Second, a similarity-guided meta-learning strategy is developed to enhance adaptability in diverse scenarios. The initial model parameters for the fine-tuning stage are determined by comparing the similarity between the new scenario and historical scenarios, facilitating rapid adaptation of the model to the new localization scenario. Extensive experimental results over commodity WiFi devices in different scenarios show that Meta-SimGNN outperforms the baseline methods in terms of localization generalization and accuracy.
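The similarity-guided initialization described in this abstract can be illustrated with a minimal sketch. The abstract does not say how scenarios are represented or which similarity measure is used, so the fixed-length scenario descriptor, the cosine similarity, and the choice of copying the parameters of the single most similar historical scenario are all assumptions made for illustration. The function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero descriptor as dissimilar to everything
    return dot / (norm_a * norm_b)


def select_initial_parameters(new_descriptor, history):
    """Pick fine-tuning start parameters from the most similar past scenario.

    history is a list of (descriptor, parameters) pairs; both the descriptor
    format and the "take the single best match" rule are assumptions.
    """
    if not history:
        raise ValueError("need at least one historical scenario")
    _, best_params = max(
        history, key=lambda item: cosine_similarity(new_descriptor, item[0])
    )
    return best_params
```

Given two stored scenarios with descriptors `[1.0, 0.0]` and `[0.0, 1.0]`, a new scenario described by `[0.9, 0.1]` would start fine-tuning from the parameters of the first one.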

Graph Neural Network for Location- and Orientation-Assisted mmWave Beam Alignment

Mar 10, 2025

Abstract:In massive multi-input multi-output (MIMO) systems, the main bottlenecks of location- and orientation-assisted beam alignment using deep neural networks (DNNs) are large training overhead and significant performance degradation. This paper proposes a graph neural network (GNN)-based beam selection approach that reduces the training overhead and improves the alignment accuracy, by capitalizing on the strong expressive ability and few trainable parameters of GNN. The channels of beams are correlated according to the beam direction. Therefore, we establish a graph according to the angular correlation between beams and use GNN to capture the channel correlation between adjacent beams, which helps accelerate the learning process and enhance the beam alignment performance. Compared to existing DNN-based algorithms, the proposed method requires only 20% of the dataset size to achieve equivalent accuracy and improves the Top-1 accuracy by 10% when using the same dataset.
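As a rough illustration of building a graph from the angular relationship between beams, the sketch below connects two beams when their pointing directions are close, then runs one mean-aggregation step over each beam and its neighbors. The angular threshold, the one-dimensional beam angles, and the mean aggregation are assumptions; the abstract does not specify how the angular correlation is computed or which GNN layer is used.

```python
def beam_adjacency(beam_angles_deg, max_separation_deg):
    """Connect beams whose directions differ by at most max_separation_deg."""
    n = len(beam_angles_deg)
    adjacency = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if abs(beam_angles_deg[i] - beam_angles_deg[j]) <= max_separation_deg:
                adjacency[i][j] = adjacency[j][i] = 1
    return adjacency


def aggregate_neighbors(features, adjacency):
    """Replace each beam's scalar feature by the mean over itself and its neighbors."""
    aggregated = []
    for i, row in enumerate(adjacency):
        group = [i] + [j for j, edge in enumerate(row) if edge]
        aggregated.append(sum(features[j] for j in group) / len(group))
    return aggregated
```

With beams at -30, -10, 10 and 30 degrees and a 20-degree threshold, each beam is linked only to its immediate angular neighbors, so information spreads along the chain of adjacent beams.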

Improving Lesion Segmentation for Diabetic Retinopathy using Adversarial Learning

Jul 27, 2020

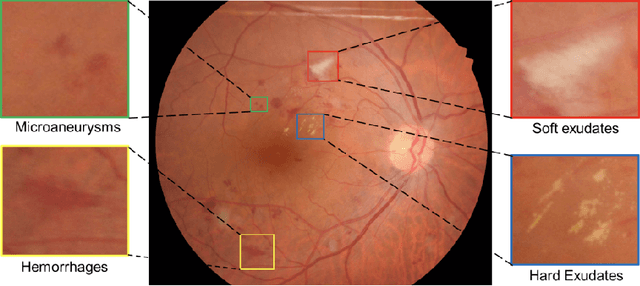

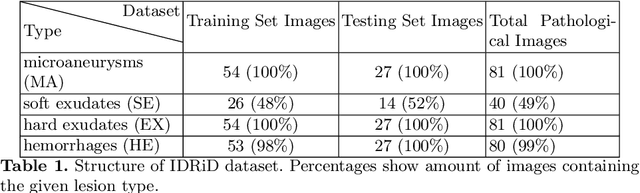

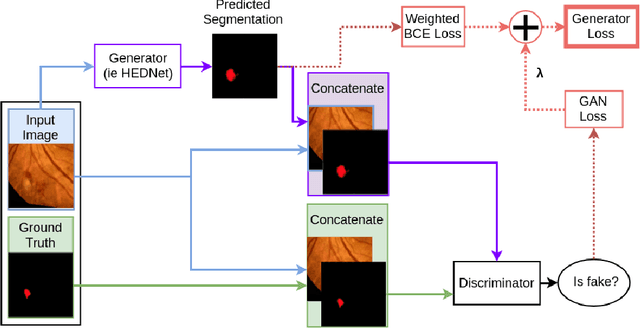

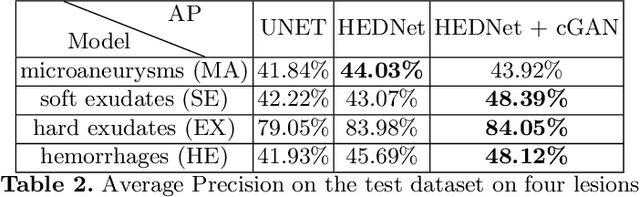

Abstract:Diabetic Retinopathy (DR) is a leading cause of blindness in working age adults. DR lesions can be challenging to identify in fundus images, and automatic DR detection systems can offer strong clinical value. Of the publicly available labeled datasets for DR, the Indian Diabetic Retinopathy Image Dataset (IDRiD) presents retinal fundus images with pixel-level annotations of four distinct lesions: microaneurysms, hemorrhages, soft exudates and hard exudates. We utilize the HEDNet edge detector to solve a semantic segmentation task on this dataset, and then propose an end-to-end system for pixel-level segmentation of DR lesions by incorporating HEDNet into a Conditional Generative Adversarial Network (cGAN). We design a loss function that adds adversarial loss to segmentation loss. Our experiments show that the addition of the adversarial loss improves the lesion segmentation performance over the baseline.
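A loss that adds an adversarial term to a segmentation term could look like the sketch below. The abstract only states that the two losses are added; the use of binary cross-entropy for both terms, the `adv_weight` factor, and the function names are assumptions for illustration.

```python
import math


def binary_cross_entropy(probabilities, targets, eps=1e-7):
    """Mean binary cross-entropy over paired probabilities and 0/1 targets."""
    total = 0.0
    for p, t in zip(probabilities, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(probabilities)


def generator_loss(pred_mask, true_mask, disc_score_on_pred, adv_weight=0.1):
    """Segmentation loss plus a weighted adversarial loss (assumed form).

    disc_score_on_pred is the discriminator's probability that the predicted
    mask is a real annotation; the generator is rewarded when it is near 1.
    """
    segmentation = binary_cross_entropy(pred_mask, true_mask)
    adversarial = binary_cross_entropy([disc_score_on_pred], [1.0])
    return segmentation + adv_weight * adversarial
```

Setting `adv_weight=0.0` recovers the plain segmentation loss, which gives a baseline for comparison.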

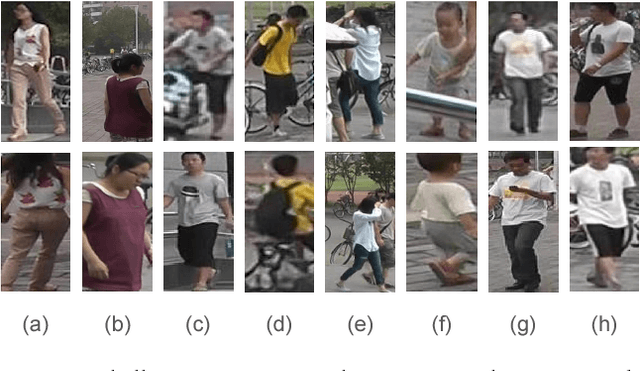

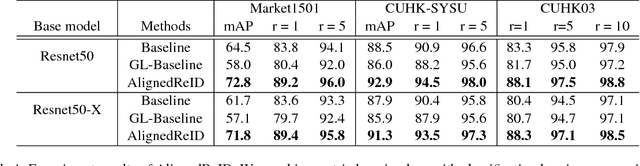

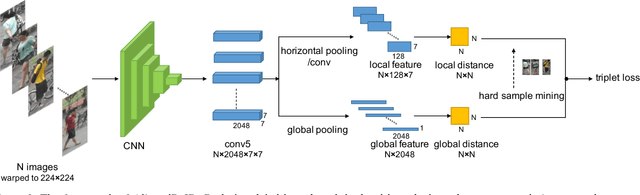

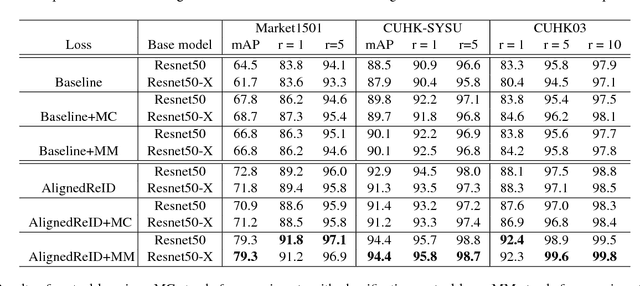

AlignedReID: Surpassing Human-Level Performance in Person Re-Identification

Jan 31, 2018

Abstract:In this paper, we propose a novel method called AlignedReID that extracts a global feature which is jointly learned with local features. Global feature learning benefits greatly from local feature learning, which performs an alignment/matching by calculating the shortest path between two sets of local features, without requiring extra supervision. After the joint learning, we only keep the global feature to compute the similarities between images. Our method achieves rank-1 accuracy of 94.4% on Market1501 and 97.8% on CUHK03, outperforming state-of-the-art methods by a large margin. We also evaluate human-level performance and demonstrate that our method is the first to surpass human-level performance on Market1501 and CUHK03, two widely used Person ReID datasets.
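The shortest-path alignment between two sets of local features can be sketched with dynamic programming. The sketch assumes each image yields an ordered list of local part features, that the path runs from the first pair of parts to the last pair, and that it may only step right or down in the distance matrix. These details, the Euclidean distance, and the function names are assumptions; the abstract states only that a shortest path between the two sets is computed.

```python
import math


def pairwise_distances(parts_a, parts_b):
    """Euclidean distance between every local part of A and every part of B."""
    return [[math.dist(a, b) for b in parts_b] for a in parts_a]


def shortest_path_distance(dist):
    """Cost of the cheapest path from dist[0][0] to dist[-1][-1].

    Only right and down moves are allowed, so matched parts keep their order.
    """
    rows, cols = len(dist), len(dist[0])
    total = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            if i == 0 and j == 0:
                best_prev = 0.0
            elif i == 0:
                best_prev = total[i][j - 1]
            elif j == 0:
                best_prev = total[i - 1][j]
            else:
                best_prev = min(total[i - 1][j], total[i][j - 1])
            total[i][j] = best_prev + dist[i][j]
    return total[-1][-1]
```

Calling `shortest_path_distance(pairwise_distances(parts_a, parts_b))` gives a local distance that tolerates vertical misalignment between the two images, since a part of one image may be matched to a neighboring part of the other.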

Margin Sample Mining Loss: A Deep Learning Based Method for Person Re-identification

Oct 07, 2017

Abstract:Person re-identification (ReID) is an important task in computer vision. Recently, deep learning with a metric learning loss has become a common framework for ReID. In this paper, we propose a new metric learning loss with hard sample mining called margin sample mining loss (MSML), which can achieve better accuracy than other metric learning losses, such as triplet loss. In experiments, our proposed method outperforms most of the state-of-the-art algorithms on Market1501, MARS, CUHK03 and CUHK-SYSU.
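One way to read a margin loss with hard sample mining is sketched below: within a batch, take the most distant same-identity pair and the closest different-identity pair, and penalize the batch when the former is not smaller than the latter by a margin. The batch-level mining rule, the Euclidean distance, and the default margin are assumptions for illustration, since the abstract does not spell out the formula.

```python
import math


def margin_sample_mining_loss(embeddings, labels, margin=0.3):
    """Hinge loss on the hardest positive and hardest negative pair in a batch."""
    hardest_pos = None  # largest distance between two samples of one identity
    hardest_neg = None  # smallest distance between samples of two identities
    n = len(embeddings)
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(embeddings[i], embeddings[j])
            if labels[i] == labels[j]:
                hardest_pos = d if hardest_pos is None else max(hardest_pos, d)
            else:
                hardest_neg = d if hardest_neg is None else min(hardest_neg, d)
    if hardest_pos is None or hardest_neg is None:
        raise ValueError("batch needs at least one positive and one negative pair")
    return max(0.0, hardest_pos - hardest_neg + margin)
```

Unlike a triplet loss, which sums one term per anchor, this form produces a single term per batch driven by its two most difficult pairs.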

Cross Domain Knowledge Transfer for Person Re-identification

Nov 18, 2016

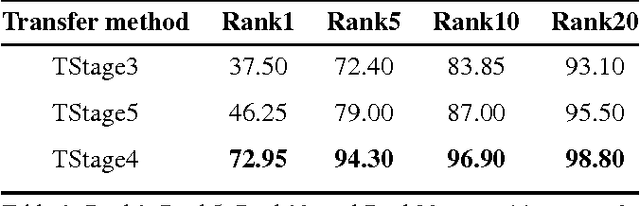

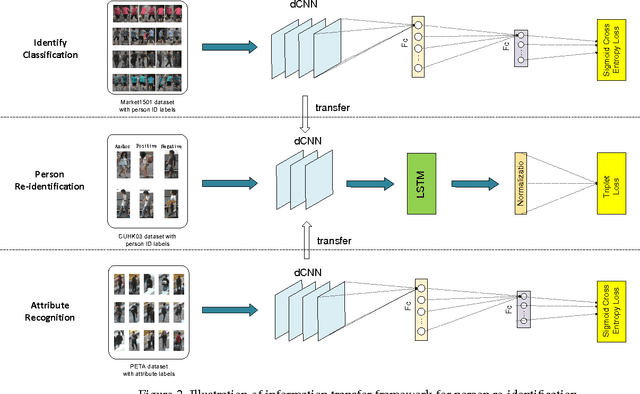

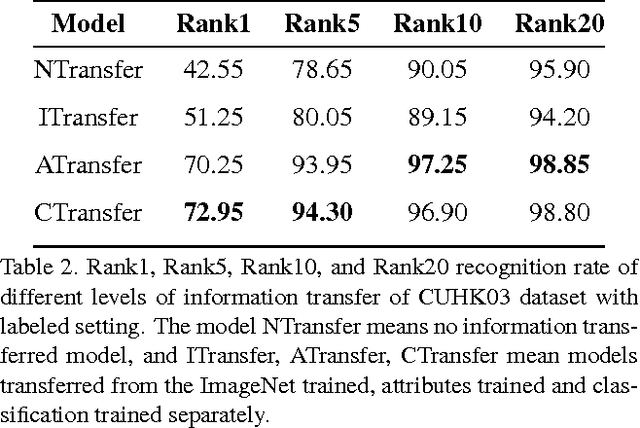

Abstract:Person Re-Identification (re-id) is a challenging task in computer vision, especially when training data from multiple camera views are limited. In this paper, we propose a deep learning based person re-identification method that transfers knowledge of mid-level attribute features and high-level classification features. Because identity classification, attribute recognition and re-identification share the same mid-level semantic representations, these tasks can be trained sequentially by fine-tuning one based on another. In our framework, we train the identity classification and attribute recognition tasks with a deep Convolutional Neural Network (dCNN) to learn person information. This information can be transferred to the person re-id task and improves its accuracy by a large margin. Furthermore, a Long Short Term Memory (LSTM) based Recurrent Neural Network (RNN) component is extended by a spatial gate. This component is used in the re-id model to pay attention to certain spatial parts in each recurrent unit. Experimental results show that our method achieves a rank-1 recognition accuracy of 78.3% on the CUHK03 benchmark.
