Hanlei Li

F$^4$-CKM: Learning Channel Knowledge Map with Radio Frequency Radiance Field Rendering

Jan 07, 2026

Abstract:In 6G mobile communications, acquiring accurate and timely channel state information (CSI) becomes increasingly challenging due to the growing antenna array size and bandwidth. To alleviate the CSI feedback burden, the channel knowledge map (CKM) has emerged as a promising approach by leveraging environment-aware techniques to predict CSI based solely on user locations. However, how to effectively construct a CKM remains an open issue. In this paper, we propose F$^4$-CKM, a novel CKM construction framework characterized by four distinctive features: radiance Field rendering, spatial-Frequency-awareness, location-Free usage, and Fast learning. Central to our design is the adaptation of radiance field rendering techniques from computer vision to the radio frequency (RF) domain, enabled by a novel Wireless Radiator Representation (WiRARE) network that captures the spatial-frequency characteristics of wireless channels. Additionally, a novel shaping filter module and an angular sampling strategy are introduced to facilitate CKM construction. Extensive experiments demonstrate that F$^4$-CKM significantly outperforms existing baselines in terms of wireless channel prediction accuracy and efficiency.

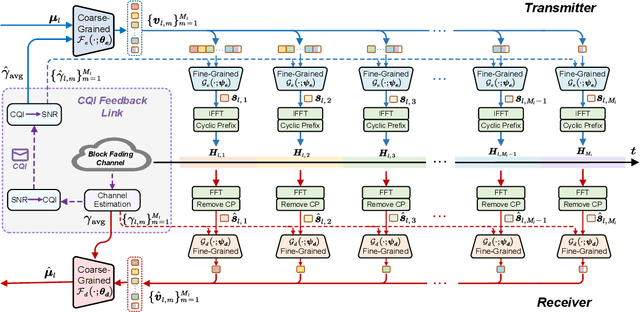

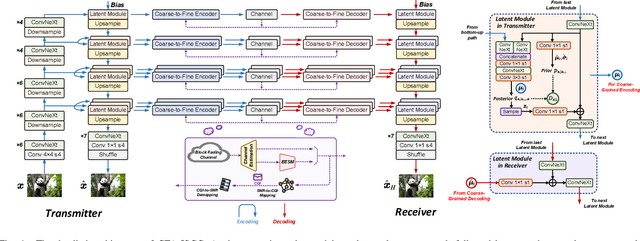

Coarse-to-Fine: A Dual-Phase Channel-Adaptive Method for Wireless Image Transmission

Dec 11, 2024

Abstract:Developing channel-adaptive deep joint source-channel coding (JSCC) systems is a critical challenge in wireless image transmission. While recent advancements have been made, most existing approaches are designed for static channel environments, limiting their ability to capture the dynamics of channel environments. As a result, their performance may degrade significantly in practical systems. In this paper, we consider time-varying block fading channels, where the transmission of a single image can experience multiple fading events. We propose a novel coarse-to-fine channel-adaptive JSCC framework (CFA-JSCC) that is designed to handle both significant fluctuations and rapid changes in wireless channels. Specifically, in the coarse-grained phase, CFA-JSCC utilizes the average signal-to-noise ratio (SNR) to adjust the encoding strategy, providing a preliminary adaptation to the prevailing channel conditions. Subsequently, in the fine-grained phase, CFA-JSCC leverages instantaneous SNR to dynamically refine the encoding strategy. This refinement is achieved by re-encoding the remaining channel symbols whenever the channel conditions change. Additionally, to reduce the overhead for SNR feedback, we utilize a limited set of channel quality indicators (CQIs) to represent the channel SNR and further propose a reinforcement learning (RL)-based CQI selection strategy to learn this mapping. This strategy incorporates a novel reward shaping scheme that provides intermediate rewards to facilitate the training process. Experimental results demonstrate that our CFA-JSCC provides enhanced flexibility in capturing channel variations and improved robustness in time-varying channel environments.
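The idea of representing the channel SNR with a limited set of CQIs can be illustrated with a minimal sketch. It uses a fixed, hand-picked threshold table, which is an assumption made purely for illustration: the abstract states that the paper learns the mapping with an RL-based selection strategy, and that strategy is not reproduced here. The function names, thresholds, and representative SNR values are all hypothetical.

```python
import bisect
import math

# Hypothetical CQI table (not from the paper): four thresholds in dB split
# the SNR axis into five intervals, each with one representative SNR.
THRESHOLDS_DB = [0.0, 5.0, 10.0, 15.0]
REPRESENTATIVES_DB = [-2.5, 2.5, 7.5, 12.5, 17.5]


def snr_to_cqi(snr_db, thresholds=THRESHOLDS_DB):
    """Receiver side: return the index of the interval containing snr_db."""
    return bisect.bisect_right(thresholds, snr_db)


def cqi_to_snr(cqi, representatives=REPRESENTATIVES_DB):
    """Transmitter side: recover the representative SNR for a CQI index."""
    return representatives[cqi]


def feedback_bits(num_levels):
    """Number of bits needed to signal one of num_levels CQI values."""
    return math.ceil(math.log2(num_levels))


if __name__ == "__main__":
    measured = 7.2  # instantaneous SNR in dB, illustrative value
    cqi = snr_to_cqi(measured)
    print(cqi, cqi_to_snr(cqi), feedback_bits(len(REPRESENTATIVES_DB)))
```

With such a table the receiver feeds back only a small index instead of a real-valued SNR, and the encoder adapts to the representative value of that index.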

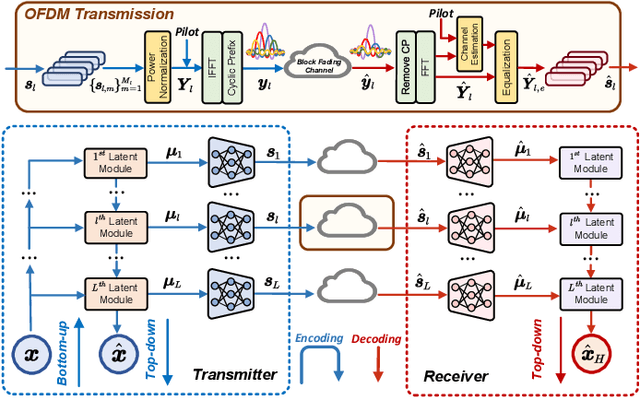

Learned Image Transmission with Hierarchical Variational Autoencoder

Sep 04, 2024

Abstract:In this paper, we introduce an innovative hierarchical joint source-channel coding (HJSCC) framework for image transmission, utilizing a hierarchical variational autoencoder (VAE). Our approach leverages a combination of bottom-up and top-down paths at the transmitter to autoregressively generate multiple hierarchical representations of the original image. These representations are then directly mapped to channel symbols for transmission by the JSCC encoder. We extend this framework to scenarios with a feedback link, modeling transmission over a noisy channel as a probabilistic sampling process and deriving a novel generative formulation for JSCC with feedback. Compared with existing approaches, our proposed HJSCC provides enhanced adaptability by dynamically adjusting transmission bandwidth, encoding these representations into varying amounts of channel symbols. Additionally, we introduce a rate attention module to guide the JSCC encoder in optimizing its encoding strategy based on prior information. Extensive experiments on images of varying resolutions demonstrate that our proposed model outperforms existing baselines in rate-distortion performance and maintains robustness against channel noise.

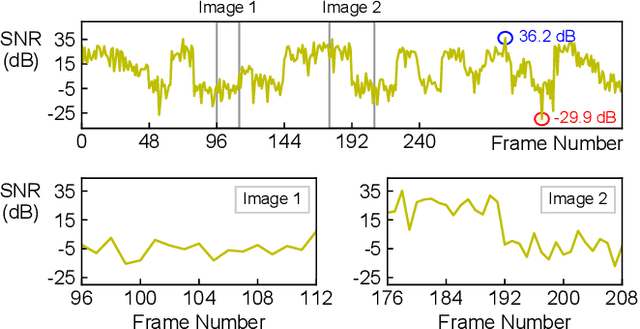

Deep Refinement-Based Joint Source Channel Coding over Time-Varying Channels

Nov 26, 2023

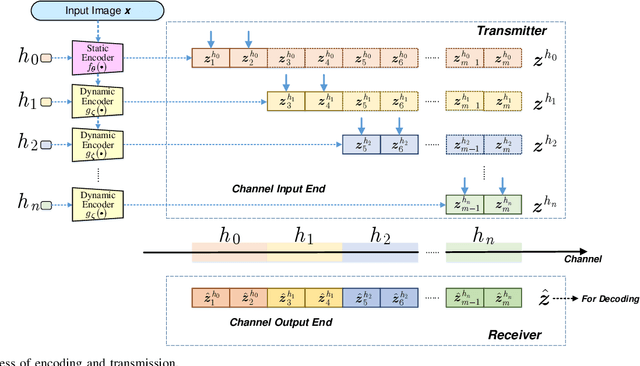

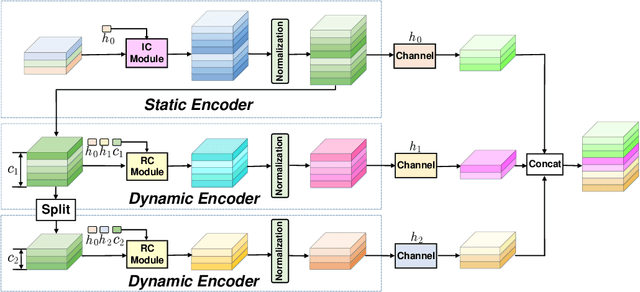

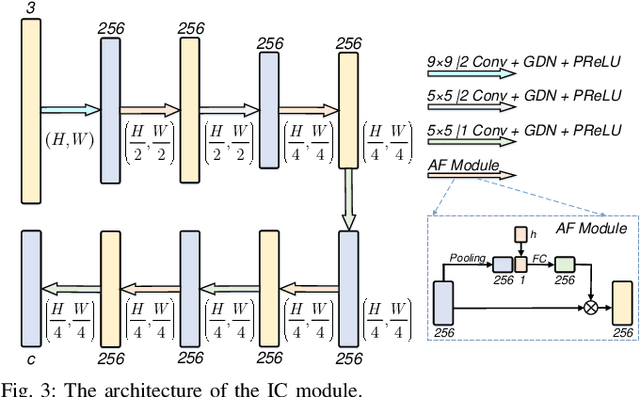

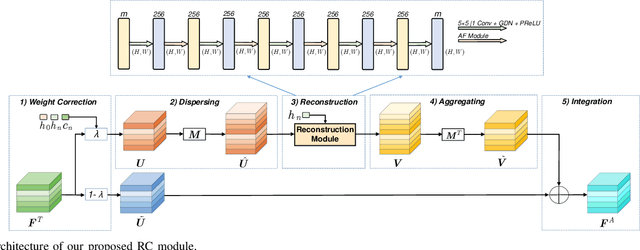

Abstract:In recent developments, deep learning (DL)-based joint source-channel coding (JSCC) for wireless image transmission has made significant strides in performance enhancement. Nonetheless, the majority of existing DL-based JSCC methods are tailored for scenarios featuring stable channel conditions, notably a fixed signal-to-noise ratio (SNR). This specialization poses a limitation, as their performance tends to wane in practical scenarios marked by highly dynamic channels, given that a fixed SNR inadequately represents the dynamic nature of such channels. In response to this challenge, we introduce a novel solution, namely deep refinement-based JSCC (DRJSCC). This innovative method is designed to seamlessly adapt to channels exhibiting temporal variations. By leveraging instantaneous channel state information (CSI), we dynamically optimize the encoding strategy through re-encoding the channel symbols. This dynamic adjustment ensures that the encoding strategy consistently aligns with the varying channel conditions during the transmission process. Specifically, our approach begins with the division of encoded symbols into multiple blocks, which are transmitted progressively to the receiver. In the event of changing channel conditions, we propose a mechanism to re-encode the remaining blocks, allowing them to adapt to the current channel conditions. Experimental results show that the DRJSCC scheme achieves comparable performance to the other mainstream DL-based JSCC models in stable channel conditions, and also exhibits great robustness against time-varying channels.
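The block-wise procedure described in this abstract (split the encoded symbols into blocks, send them progressively, re-encode the remaining blocks when the channel changes) can be sketched as the control loop below. This is only an illustrative sketch under stated assumptions: `encode` is a hypothetical stand-in for a learned JSCC encoder, the SNR trace is assumed to be known per block, and the toy encoder merely tags each block with the SNR it was encoded for. It is not the DRJSCC implementation.

```python
def transmit_progressively(source, snr_trace, num_blocks, encode):
    """Send num_blocks blocks, re-encoding the unsent ones on SNR change.

    encode(source, snr, num_blocks) is a hypothetical callable returning a
    list of num_blocks blocks encoded for the given SNR.
    snr_trace[i] is the SNR observed just before block i is sent.
    """
    current_snr = snr_trace[0]
    blocks = encode(source, current_snr, num_blocks)
    sent = []
    for i in range(num_blocks):
        if snr_trace[i] != current_snr:
            # Channel changed: replace only the blocks not yet transmitted.
            current_snr = snr_trace[i]
            blocks[i:] = encode(source, current_snr, num_blocks)[i:]
        sent.append(blocks[i])
    return sent


def toy_encode(source, snr, num_blocks):
    """Toy encoder (assumption): label each block with its index and SNR."""
    return [(index, snr) for index in range(num_blocks)]


if __name__ == "__main__":
    trace = [10, 10, 5, 5]  # SNR in dB per block, illustrative values
    print(transmit_progressively("image", trace, 4, toy_encode))
```

In the toy run, the first two blocks stay encoded for 10 dB while the last two are re-encoded for 5 dB, so already transmitted blocks are never touched.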
